In this tutorial, you will learn how to deploy an ELK Stack 8 cluster on Docker using Ansible, an open-source automation tool used for configuration management, application deployment, and task automation.

Deploying ELK stack 8 Cluster on Docker using Ansible

In our previous tutorial, we learnt how to deploy ELK stack 8 cluster using Docker containers. In this tutorial, we are looking at how we can automate the whole process to ensure a seamless deployment of ELK stack 8 Cluster on Docker containers from a single point across the cluster nodes without breaking a sweat!

Deployment Environment Nodes

In this guide, we have multiple nodes for various purposes. See the table below;

| Linux Distribution | Node IP Address | Node Role |
|---|---|---|
| Debian 12 | 192.168.122.229 | Ansible Control Plane |
| Debian 12 | 192.168.122.60 | ES node 01 |
| Debian 12 | 192.168.122.152 | ES node 02 |
| Debian 12 | 192.168.122.123 | ES node 03 |
| Debian 12 | 192.168.122.47 | NFS Server |

Install Ansible on Linux

Install Ansible on your control node. A control node is the machine where Ansible is installed and from which all the automation tasks are executed.

Thus, to install Ansible on Debian 12, proceed as follows;

Run system update;

sudo apt update

Install PIP3;

sudo apt install python3-pip -y

Create a virtual environment to keep your Ansible installation isolated from your system’s Python environment;

sudo apt install python3-venv

python3 -m venv ansible-env

Activate the virtual environment and upgrade PIP3;

source ansible-env/bin/activate

python3 -m pip install --upgrade pip

Install Ansible;

python3 -m pip install ansible

Enable Ansible auto-completion;

python3 -m pip install argcomplete

Confirm the Ansible version;

ansible --version

ansible [core 2.16.2]

config file = None

configured module search path = ['/home/kifarunix/.ansible/plugins/modules', '/usr/share/ansible/plugins/modules']

ansible python module location = /home/kifarunix/ansible-env/lib/python3.11/site-packages/ansible

ansible collection location = /home/kifarunix/.ansible/collections:/usr/share/ansible/collections

executable location = /home/kifarunix/ansible-env/bin/ansible

python version = 3.11.2 (main, Mar 13 2023, 12:18:29) [GCC 12.2.0] (/home/kifarunix/ansible-env/bin/python3)

jinja version = 3.1.3

libyaml = True
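
As a quick sanity check on the installation above, the following is a minimal sketch (not part of the original setup) that tests whether an executable path sits inside a given virtual environment, mirroring the executable location line in the version output. The helper name inside_venv is hypothetical.

```shell
# Hypothetical helper: succeed if the executable path ($1) lives in the
# bin/ directory of the virtual environment rooted at $2.
inside_venv() {
  [ -n "$2" ] || return 1          # no virtual environment given
  case "$1" in
    "$2"/bin/*) return 0 ;;
    *) return 1 ;;
  esac
}

# Only runs the check when ansible is on PATH.
# VIRTUAL_ENV is set by 'source ansible-env/bin/activate'.
if command -v ansible >/dev/null 2>&1; then
  if inside_venv "$(command -v ansible)" "${VIRTUAL_ENV:-}"; then
    echo "ansible runs from the virtual environment"
  else
    echo "ansible runs from outside the virtual environment"
  fi
fi
```

If the second message appears, the virtual environment is probably not activated in the current shell.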

Create Ansible User on Managed Nodes

By default, Ansible connects to the managed nodes as the user you are logged in as on the control node.

If that user doesn’t exist on the remote hosts, you need to create the account.

So, on all the hosts managed by Ansible, create an account with sudo rights. This is the only manual task you might need to do at the beginning :-).

Replace the user, kifarunix, below with your username.

On Debian based systems;

sudo useradd -G sudo -s /bin/bash -m kifarunix

On RHEL based systems;

sudo useradd -G wheel -s /bin/bash -m kifarunix

Set the account password;

sudo passwd kifarunix

Setup Ansible SSH Keys

When using Ansible, you have two options for authenticating with remote systems:

- SSH keys or

- Password Authentication

SSH key authentication is the preferred connection method, as it is considered more secure and more convenient than password authentication.

Therefore, on the Ansible control node, you need to generate a public and private SSH key pair. Once you have the keys, copy the public key to the remote system’s authorized keys file.

Thus, as a non-root user on the control node, run the command below to generate an SSH key pair. It is recommended to protect the SSH key with a passphrase for increased security.

ssh-keygen

Sample output;

Generating public/private rsa key pair.

Enter file in which to save the key (/home/kifarunix/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/kifarunix/.ssh/id_rsa

Your public key has been saved in /home/kifarunix/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:mv7b7OynAaft9wnDNniAfxE+dzMJTKP6Ag53DMb4SpE kifarunix@kifarunix-dev

The key's randomart image is:

+---[RSA 3072]----+

| o |

| + + . |

| E + ..o |

| + o... .. .|

| o +S+o + .+.|

| . =oo*.+ + .o|

| .o...=.O |

| . =.=o+ . |

| ..o+O+ .o |

+----[SHA256]-----+

If you stored the SSH key pair in a path other than the default one, you can define the custom path to the private key in the Ansible configuration file using the private_key_file option.
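
For illustration, a key pair at a non-default path could be generated as sketched below. The file name id_ansible_rsa matches the private_key_file value used in the configuration file in this guide; the temporary directory and the empty passphrase (-N "") are assumptions made only so that the demo runs unattended. For a real key, write to ~/.ssh and drop -N so that ssh-keygen prompts for a passphrase.

```shell
# Throwaway demo: generate an RSA key pair at a custom path.
demo_dir="$(mktemp -d)"
key_path="$demo_dir/id_ansible_rsa"

if command -v ssh-keygen >/dev/null 2>&1; then
  # -q quiet, -t key type, -b key size in bits, -N passphrase, -f output file
  ssh-keygen -q -t rsa -b 3072 -N "" -f "$key_path"
  ls "$demo_dir"    # lists id_ansible_rsa and id_ansible_rsa.pub
fi
```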

Copy Ansible SSH Public Key to Managed Nodes

Once you have the SSH key pair generated, proceed to copy the key to the user accounts you will use on managed nodes for Ansible deployment tasks;

(We are using the name, kifarunix, in this guide. Replace the username accordingly)

for i in 60 152 123 47; do ssh-copy-id kifarunix@192.168.122.$i; done

If you are using a non-default SSH key file, specify the path to the key using the -i option;

for i in 60 152 123 47; do ssh-copy-id -i <custom-ssh-key-path> kifarunix@192.168.122.$i; done

Create Ansible Configuration Directory

By default, /etc/ansible is the Ansible configuration directory.

If you install Ansible via PIP, chances are that the default configuration is not created.

Thus, you can create your own custom configuration directory to easily control Ansible.

mkdir $HOME/ansible

Similarly, create your custom Ansible configuration file, ansible.cfg.

This is what a default Ansible configuration file looks like;

cat /etc/ansible/ansible.cfg

# config file for ansible -- https://ansible.com/

# ===============================================

# nearly all parameters can be overridden in ansible-playbook

# or with command line flags. ansible will read ANSIBLE_CONFIG,

# ansible.cfg in the current working directory, .ansible.cfg in

# the home directory or /etc/ansible/ansible.cfg, whichever it

# finds first

[defaults]

# some basic default values...

#inventory = /etc/ansible/hosts

#library = /usr/share/my_modules/

#module_utils = /usr/share/my_module_utils/

#remote_tmp = ~/.ansible/tmp

#local_tmp = ~/.ansible/tmp

#plugin_filters_cfg = /etc/ansible/plugin_filters.yml

#forks = 5

#poll_interval = 15

#sudo_user = root

#ask_sudo_pass = True

#ask_pass = True

#transport = smart

#remote_port = 22

#module_lang = C

#module_set_locale = False

# plays will gather facts by default, which contain information about

# the remote system.

#

# smart - gather by default, but don't regather if already gathered

# implicit - gather by default, turn off with gather_facts: False

# explicit - do not gather by default, must say gather_facts: True

#gathering = implicit

# This only affects the gathering done by a play's gather_facts directive,

# by default gathering retrieves all facts subsets

# all - gather all subsets

# network - gather min and network facts

# hardware - gather hardware facts (longest facts to retrieve)

# virtual - gather min and virtual facts

# facter - import facts from facter

# ohai - import facts from ohai

# You can combine them using comma (ex: network,virtual)

# You can negate them using ! (ex: !hardware,!facter,!ohai)

# A minimal set of facts is always gathered.

#gather_subset = all

# some hardware related facts are collected

# with a maximum timeout of 10 seconds. This

# option lets you increase or decrease that

# timeout to something more suitable for the

# environment.

# gather_timeout = 10

# Ansible facts are available inside the ansible_facts.* dictionary

# namespace. This setting maintains the behaviour which was the default prior

# to 2.5, duplicating these variables into the main namespace, each with a

# prefix of 'ansible_'.

# This variable is set to True by default for backwards compatibility. It

# will be changed to a default of 'False' in a future release.

# ansible_facts.

# inject_facts_as_vars = True

# additional paths to search for roles in, colon separated

#roles_path = /etc/ansible/roles

# uncomment this to disable SSH key host checking

#host_key_checking = False

# change the default callback, you can only have one 'stdout' type enabled at a time.

#stdout_callback = skippy

## Ansible ships with some plugins that require whitelisting,

## this is done to avoid running all of a type by default.

## These setting lists those that you want enabled for your system.

## Custom plugins should not need this unless plugin author specifies it.

# enable callback plugins, they can output to stdout but cannot be 'stdout' type.

#callback_whitelist = timer, mail

# Determine whether includes in tasks and handlers are "static" by

# default. As of 2.0, includes are dynamic by default. Setting these

# values to True will make includes behave more like they did in the

# 1.x versions.

#task_includes_static = False

#handler_includes_static = False

# Controls if a missing handler for a notification event is an error or a warning

#error_on_missing_handler = True

# change this for alternative sudo implementations

#sudo_exe = sudo

# What flags to pass to sudo

# WARNING: leaving out the defaults might create unexpected behaviours

#sudo_flags = -H -S -n

# SSH timeout

#timeout = 10

# default user to use for playbooks if user is not specified

# (/usr/bin/ansible will use current user as default)

#remote_user = root

# logging is off by default unless this path is defined

# if so defined, consider logrotate

#log_path = /var/log/ansible.log

# default module name for /usr/bin/ansible

#module_name = command

# use this shell for commands executed under sudo

# you may need to change this to bin/bash in rare instances

# if sudo is constrained

#executable = /bin/sh

# if inventory variables overlap, does the higher precedence one win

# or are hash values merged together? The default is 'replace' but

# this can also be set to 'merge'.

#hash_behaviour = replace

# by default, variables from roles will be visible in the global variable

# scope. To prevent this, the following option can be enabled, and only

# tasks and handlers within the role will see the variables there

#private_role_vars = yes

# list any Jinja2 extensions to enable here:

#jinja2_extensions = jinja2.ext.do,jinja2.ext.i18n

# if set, always use this private key file for authentication, same as

# if passing --private-key to ansible or ansible-playbook

#private_key_file = /path/to/file

# If set, configures the path to the Vault password file as an alternative to

# specifying --vault-password-file on the command line.

#vault_password_file = /path/to/vault_password_file

# format of string {{ ansible_managed }} available within Jinja2

# templates indicates to users editing templates files will be replaced.

# replacing {file}, {host} and {uid} and strftime codes with proper values.

#ansible_managed = Ansible managed: {file} modified on %Y-%m-%d %H:%M:%S by {uid} on {host}

# {file}, {host}, {uid}, and the timestamp can all interfere with idempotence

# in some situations so the default is a static string:

#ansible_managed = Ansible managed

# by default, ansible-playbook will display "Skipping [host]" if it determines a task

# should not be run on a host. Set this to "False" if you don't want to see these "Skipping"

# messages. NOTE: the task header will still be shown regardless of whether or not the

# task is skipped.

#display_skipped_hosts = True

# by default, if a task in a playbook does not include a name: field then

# ansible-playbook will construct a header that includes the task's action but

# not the task's args. This is a security feature because ansible cannot know

# if the *module* considers an argument to be no_log at the time that the

# header is printed. If your environment doesn't have a problem securing

# stdout from ansible-playbook (or you have manually specified no_log in your

# playbook on all of the tasks where you have secret information) then you can

# safely set this to True to get more informative messages.

#display_args_to_stdout = False

# by default (as of 1.3), Ansible will raise errors when attempting to dereference

# Jinja2 variables that are not set in templates or action lines. Uncomment this line

# to revert the behavior to pre-1.3.

#error_on_undefined_vars = False

# by default (as of 1.6), Ansible may display warnings based on the configuration of the

# system running ansible itself. This may include warnings about 3rd party packages or

# other conditions that should be resolved if possible.

# to disable these warnings, set the following value to False:

#system_warnings = True

# by default (as of 1.4), Ansible may display deprecation warnings for language

# features that should no longer be used and will be removed in future versions.

# to disable these warnings, set the following value to False:

#deprecation_warnings = True

# (as of 1.8), Ansible can optionally warn when usage of the shell and

# command module appear to be simplified by using a default Ansible module

# instead. These warnings can be silenced by adjusting the following

# setting or adding warn=yes or warn=no to the end of the command line

# parameter string. This will for example suggest using the git module

# instead of shelling out to the git command.

# command_warnings = False

# set plugin path directories here, separate with colons

#action_plugins = /usr/share/ansible/plugins/action

#become_plugins = /usr/share/ansible/plugins/become

#cache_plugins = /usr/share/ansible/plugins/cache

#callback_plugins = /usr/share/ansible/plugins/callback

#connection_plugins = /usr/share/ansible/plugins/connection

#lookup_plugins = /usr/share/ansible/plugins/lookup

#inventory_plugins = /usr/share/ansible/plugins/inventory

#vars_plugins = /usr/share/ansible/plugins/vars

#filter_plugins = /usr/share/ansible/plugins/filter

#test_plugins = /usr/share/ansible/plugins/test

#terminal_plugins = /usr/share/ansible/plugins/terminal

#strategy_plugins = /usr/share/ansible/plugins/strategy

# by default, ansible will use the 'linear' strategy but you may want to try

# another one

#strategy = free

# by default callbacks are not loaded for /bin/ansible, enable this if you

# want, for example, a notification or logging callback to also apply to

# /bin/ansible runs

#bin_ansible_callbacks = False

# don't like cows? that's unfortunate.

# set to 1 if you don't want cowsay support or export ANSIBLE_NOCOWS=1

#nocows = 1

# set which cowsay stencil you'd like to use by default. When set to 'random',

# a random stencil will be selected for each task. The selection will be filtered

# against the `cow_whitelist` option below.

#cow_selection = default

#cow_selection = random

# when using the 'random' option for cowsay, stencils will be restricted to this list.

# it should be formatted as a comma-separated list with no spaces between names.

# NOTE: line continuations here are for formatting purposes only, as the INI parser

# in python does not support them.

#cow_whitelist=bud-frogs,bunny,cheese,daemon,default,dragon,elephant-in-snake,elephant,eyes,\

# hellokitty,kitty,luke-koala,meow,milk,moofasa,moose,ren,sheep,small,stegosaurus,\

# stimpy,supermilker,three-eyes,turkey,turtle,tux,udder,vader-koala,vader,www

# don't like colors either?

# set to 1 if you don't want colors, or export ANSIBLE_NOCOLOR=1

#nocolor = 1

# if set to a persistent type (not 'memory', for example 'redis') fact values

# from previous runs in Ansible will be stored. This may be useful when

# wanting to use, for example, IP information from one group of servers

# without having to talk to them in the same playbook run to get their

# current IP information.

#fact_caching = memory

#This option tells Ansible where to cache facts. The value is plugin dependent.

#For the jsonfile plugin, it should be a path to a local directory.

#For the redis plugin, the value is a host:port:database triplet: fact_caching_connection = localhost:6379:0

#fact_caching_connection=/tmp

# retry files

# When a playbook fails a .retry file can be created that will be placed in ~/

# You can enable this feature by setting retry_files_enabled to True

# and you can change the location of the files by setting retry_files_save_path

#retry_files_enabled = False

#retry_files_save_path = ~/.ansible-retry

# squash actions

# Ansible can optimise actions that call modules with list parameters

# when looping. Instead of calling the module once per with_ item, the

# module is called once with all items at once. Currently this only works

# under limited circumstances, and only with parameters named 'name'.

#squash_actions = apk,apt,dnf,homebrew,pacman,pkgng,yum,zypper

# prevents logging of task data, off by default

#no_log = False

# prevents logging of tasks, but only on the targets, data is still logged on the master/controller

#no_target_syslog = False

# controls whether Ansible will raise an error or warning if a task has no

# choice but to create world readable temporary files to execute a module on

# the remote machine. This option is False by default for security. Users may

# turn this on to have behaviour more like Ansible prior to 2.1.x. See

# https://docs.ansible.com/ansible/become.html#becoming-an-unprivileged-user

# for more secure ways to fix this than enabling this option.

#allow_world_readable_tmpfiles = False

# controls the compression level of variables sent to

# worker processes. At the default of 0, no compression

# is used. This value must be an integer from 0 to 9.

#var_compression_level = 9

# controls what compression method is used for new-style ansible modules when

# they are sent to the remote system. The compression types depend on having

# support compiled into both the controller's python and the client's python.

# The names should match with the python Zipfile compression types:

# * ZIP_STORED (no compression. available everywhere)

# * ZIP_DEFLATED (uses zlib, the default)

# These values may be set per host via the ansible_module_compression inventory

# variable

#module_compression = 'ZIP_DEFLATED'

# This controls the cutoff point (in bytes) on --diff for files

# set to 0 for unlimited (RAM may suffer!).

#max_diff_size = 1048576

# This controls how ansible handles multiple --tags and --skip-tags arguments

# on the CLI. If this is True then multiple arguments are merged together. If

# it is False, then the last specified argument is used and the others are ignored.

# This option will be removed in 2.8.

#merge_multiple_cli_flags = True

# Controls showing custom stats at the end, off by default

#show_custom_stats = True

# Controls which files to ignore when using a directory as inventory with

# possibly multiple sources (both static and dynamic)

#inventory_ignore_extensions = ~, .orig, .bak, .ini, .cfg, .retry, .pyc, .pyo

# This family of modules use an alternative execution path optimized for network appliances

# only update this setting if you know how this works, otherwise it can break module execution

#network_group_modules=eos, nxos, ios, iosxr, junos, vyos

# When enabled, this option allows lookups (via variables like {{lookup('foo')}} or when used as

# a loop with `with_foo`) to return data that is not marked "unsafe". This means the data may contain

# jinja2 templating language which will be run through the templating engine.

# ENABLING THIS COULD BE A SECURITY RISK

#allow_unsafe_lookups = False

# set default errors for all plays

#any_errors_fatal = False

[inventory]

# enable inventory plugins, default: 'host_list', 'script', 'auto', 'yaml', 'ini', 'toml'

#enable_plugins = host_list, virtualbox, yaml, constructed

# ignore these extensions when parsing a directory as inventory source

#ignore_extensions = .pyc, .pyo, .swp, .bak, ~, .rpm, .md, .txt, ~, .orig, .ini, .cfg, .retry

# ignore files matching these patterns when parsing a directory as inventory source

#ignore_patterns=

# If 'true' unparsed inventory sources become fatal errors, they are warnings otherwise.

#unparsed_is_failed=False

[privilege_escalation]

#become=True

#become_method=sudo

#become_user=root

#become_ask_pass=False

[paramiko_connection]

# uncomment this line to cause the paramiko connection plugin to not record new host

# keys encountered. Increases performance on new host additions. Setting works independently of the

# host key checking setting above.

#record_host_keys=False

# by default, Ansible requests a pseudo-terminal for commands executed under sudo. Uncomment this

# line to disable this behaviour.

#pty=False

# paramiko will default to looking for SSH keys initially when trying to

# authenticate to remote devices. This is a problem for some network devices

# that close the connection after a key failure. Uncomment this line to

# disable the Paramiko look for keys function

#look_for_keys = False

# When using persistent connections with Paramiko, the connection runs in a

# background process. If the host doesn't already have a valid SSH key, by

# default Ansible will prompt to add the host key. This will cause connections

# running in background processes to fail. Uncomment this line to have

# Paramiko automatically add host keys.

#host_key_auto_add = True

[ssh_connection]

# ssh arguments to use

# Leaving off ControlPersist will result in poor performance, so use

# paramiko on older platforms rather than removing it, -C controls compression use

#ssh_args = -C -o ControlMaster=auto -o ControlPersist=60s

# The base directory for the ControlPath sockets.

# This is the "%(directory)s" in the control_path option

#

# Example:

# control_path_dir = /tmp/.ansible/cp

#control_path_dir = ~/.ansible/cp

# The path to use for the ControlPath sockets. This defaults to a hashed string of the hostname,

# port and username (empty string in the config). The hash mitigates a common problem users

# found with long hostnames and the conventional %(directory)s/ansible-ssh-%%h-%%p-%%r format.

# In those cases, a "too long for Unix domain socket" ssh error would occur.

#

# Example:

# control_path = %(directory)s/%%h-%%r

#control_path =

# Enabling pipelining reduces the number of SSH operations required to

# execute a module on the remote server. This can result in a significant

# performance improvement when enabled, however when using "sudo:" you must

# first disable 'requiretty' in /etc/sudoers

#

# By default, this option is disabled to preserve compatibility with

# sudoers configurations that have requiretty (the default on many distros).

#

#pipelining = False

# Control the mechanism for transferring files (old)

# * smart = try sftp and then try scp [default]

# * True = use scp only

# * False = use sftp only

#scp_if_ssh = smart

# Control the mechanism for transferring files (new)

# If set, this will override the scp_if_ssh option

# * sftp = use sftp to transfer files

# * scp = use scp to transfer files

# * piped = use 'dd' over SSH to transfer files

# * smart = try sftp, scp, and piped, in that order [default]

#transfer_method = smart

# if False, sftp will not use batch mode to transfer files. This may cause some

# types of file transfer failures impossible to catch however, and should

# only be disabled if your sftp version has problems with batch mode

#sftp_batch_mode = False

# The -tt argument is passed to ssh when pipelining is not enabled because sudo

# requires a tty by default.

#usetty = True

# Number of times to retry an SSH connection to a host, in case of UNREACHABLE.

# For each retry attempt, there is an exponential backoff,

# so after the first attempt there is 1s wait, then 2s, 4s etc. up to 30s (max).

#retries = 3

[persistent_connection]

# Configures the persistent connection timeout value in seconds. This value is

# how long the persistent connection will remain idle before it is destroyed.

# If the connection doesn't receive a request before the timeout value

# expires, the connection is shutdown. The default value is 30 seconds.

#connect_timeout = 30

# The command timeout value defines the amount of time to wait for a command

# or RPC call before timing out. The value for the command timeout must

# be less than the value of the persistent connection idle timeout (connect_timeout)

# The default value is 30 second.

#command_timeout = 30

[accelerate]

#accelerate_port = 5099

#accelerate_timeout = 30

#accelerate_connect_timeout = 5.0

# The daemon timeout is measured in minutes. This time is measured

# from the last activity to the accelerate daemon.

#accelerate_daemon_timeout = 30

# If set to yes, accelerate_multi_key will allow multiple

# private keys to be uploaded to it, though each user must

# have access to the system via SSH to add a new key. The default

# is "no".

#accelerate_multi_key = yes

[selinux]

# file systems that require special treatment when dealing with security context

# the default behaviour that copies the existing context or uses the user default

# needs to be changed to use the file system dependent context.

#special_context_filesystems=nfs,vboxsf,fuse,ramfs,9p,vfat

# Set this to yes to allow libvirt_lxc connections to work without SELinux.

#libvirt_lxc_noseclabel = yes

[colors]

#highlight = white

#verbose = blue

#warn = bright purple

#error = red

#debug = dark gray

#deprecate = purple

#skip = cyan

#unreachable = red

#ok = green

#changed = yellow

#diff_add = green

#diff_remove = red

#diff_lines = cyan

[diff]

# Always print diff when running ( same as always running with -D/--diff )

# always = no

# Set how many context lines to show in diff

# context = 3

Based on the default config, we will create our own configuration file;

vim $HOME/ansible/ansible.cfg

In the configuration file, we will define some basic values;

(Note the value of the private_key_file option. If you are using the default SSH key path, you can leave it out; otherwise, set the correct path.)

[defaults]

inventory = /home/kifarunix/ansible/inventory/hosts

roles_path = /home/kifarunix/ansible/roles

private_key_file = /home/kifarunix/.ssh/id_ansible_rsa

interpreter_python = /usr/bin/python3

Whenever you use a custom Ansible configuration file, you can point Ansible to it using the ANSIBLE_CONFIG environment variable. Ansible also picks up an ansible.cfg file found in the current working directory.

Let’s set the environment variable to make it easy;

echo "export ANSIBLE_CONFIG=$HOME/ansible/ansible.cfg" >> $HOME/.bashrc

source $HOME/.bashrc

With this, you won’t have to specify the path to the Ansible configuration file, or to any of the paths defined in it, such as the inventory.
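
The search order described in the comments of the default configuration file (ANSIBLE_CONFIG, then ansible.cfg in the current working directory, then .ansible.cfg in the home directory, then /etc/ansible/ansible.cfg) can be sketched as a small shell function. This is a simplified illustration, not Ansible's actual implementation: the function name resolve_ansible_cfg is hypothetical, and it only honours ANSIBLE_CONFIG when it points at an existing file.

```shell
# Hypothetical helper: print the first configuration file found,
# following the documented search order. Returns 1 if none exists.
resolve_ansible_cfg() {
  if [ -n "${ANSIBLE_CONFIG:-}" ] && [ -f "$ANSIBLE_CONFIG" ]; then
    echo "$ANSIBLE_CONFIG"
  elif [ -f "./ansible.cfg" ]; then
    echo "$PWD/ansible.cfg"
  elif [ -f "${HOME:-}/.ansible.cfg" ]; then
    echo "${HOME:-}/.ansible.cfg"
  elif [ -f "/etc/ansible/ansible.cfg" ]; then
    echo "/etc/ansible/ansible.cfg"
  else
    return 1
  fi
}

# Demo with a throwaway file standing in for $HOME/ansible/ansible.cfg.
demo_cfg="$(mktemp)"
printf '[defaults]\ninventory = /home/kifarunix/ansible/inventory/hosts\n' > "$demo_cfg"
ANSIBLE_CONFIG="$demo_cfg"
export ANSIBLE_CONFIG
resolve_ansible_cfg    # prints the path stored in $demo_cfg
```

On a real control node, the config file line of ansible --version reports which file is actually in use.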

Create Ansible Inventory File

The inventory file contains a list of hosts and groups of hosts that are managed by Ansible. By default, the inventory file is set to /etc/ansible/hosts. You can specify a different inventory file using the -i option or using the inventory option in the configuration file.

We set our inventory file to /home/kifarunix/ansible/inventory/hosts in the configuration above;

mkdir ~/ansible/inventory

vim /home/kifarunix/ansible/inventory/hosts

[elk-stack]

192.168.122.60

192.168.122.123

192.168.122.152

[nfs-server]

192.168.122.47

Save and exit the file.
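
To make the group structure of this inventory concrete, here is a minimal sketch that writes the same content to a temporary file and lists the hosts under one group header using awk. The helper name group_hosts and the temporary file are assumptions for illustration; the parsing handles only this simple INI layout, not everything Ansible's inventory parser supports.

```shell
# Throwaway copy of the inventory shown above.
inv="$(mktemp)"
cat > "$inv" <<'EOF'
[elk-stack]
192.168.122.60
192.168.122.123
192.168.122.152

[nfs-server]
192.168.122.47
EOF

# Hypothetical helper: print the hosts listed under [group] ($2) in file ($1).
group_hosts() {
  awk -v grp="[$2]" '
    /^\[/ { in_group = ($0 == grp); next }      # a header starts or ends the group
    in_group && NF && $1 !~ /^#/ { print $1 }   # skip blank lines and comments
  ' "$1"
}

group_hosts "$inv" elk-stack
```

On the control node itself, ansible-inventory --graph shows the groups and hosts as Ansible parses them.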

There are different formats of the hosts file configuration. The inventory we created is just one example.

See a sample hosts file below;

cat /etc/ansible/hosts

# This is the default ansible 'hosts' file.

#

# It should live in /etc/ansible/hosts

#

# - Comments begin with the '#' character

# - Blank lines are ignored

# - Groups of hosts are delimited by [header] elements

# - You can enter hostnames or ip addresses

# - A hostname/ip can be a member of multiple groups

# Ex 1: Ungrouped hosts, specify before any group headers.

#green.example.com

#blue.example.com

#192.168.100.1

#192.168.100.10

# Ex 2: A collection of hosts belonging to the 'webservers' group

#[webservers]

#alpha.example.org

#beta.example.org

#192.168.1.100

#192.168.1.110

# If you have multiple hosts following a pattern you can specify

# them like this:

#www[001:006].example.com

# Ex 3: A collection of database servers in the 'dbservers' group

#[dbservers]

#

#db01.intranet.mydomain.net

#db02.intranet.mydomain.net

#10.25.1.56

#10.25.1.57

# Here's another example of host ranges, this time there are no

# leading 0s:

#db-[99:101]-node.example.com

Run Ansible Passphrase Protected SSH Key Without Prompting for Passphrase

If you set up a passphrase-protected SSH key, you will be prompted to enter the passphrase for every single command that is run against a managed node.

As a workaround, you can use ssh-agent to hold your unlocked key so that you only need to enter the passphrase once per session, as follows;

eval "$(ssh-agent -s)"

ssh-add /home/kifarunix/.ssh/id_ansible_rsa

You will be prompted to enter your passphrase.
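
To check whether the agent is actually holding a key before running Ansible, the exit status of ssh-add -l can be inspected. The sketch below assumes the documented ssh-add behaviour (0 when keys are listed, 1 when the agent has no identities, 2 when the agent cannot be contacted); the helper name describe_agent_rc is hypothetical.

```shell
# Hypothetical helper: translate the exit status of 'ssh-add -l' into a message.
describe_agent_rc() {
  case "$1" in
    0) echo "agent reachable, key(s) loaded" ;;
    1) echo "agent reachable, no keys loaded" ;;
    *) echo "agent not reachable" ;;   # e.g. 2 = no agent, 127 = ssh-add missing
  esac
}

rc=0
ssh-add -l >/dev/null 2>&1 || rc=$?
describe_agent_rc "$rc"
```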

Test Connection to Ansible Managed Nodes

Once you have set up SSH key authentication and an inventory of the managed hosts, run the Ansible ping module to check that the hosts are reachable. Replace the user accordingly.

ansible all -m ping -u kifarunix

Sample output;

192.168.122.47 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.122.123 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.122.60 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.122.152 | SUCCESS => {

"changed": false,

"ping": "pong"

}

That confirms that you are ready to deploy ELK stack 8 Cluster on Docker.
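
When the number of nodes grows, reading the ping output by eye gets tedious. As a rough sketch, the SUCCESS lines can be counted and compared against the expected node count. The sample text below is copied from the output above; the temporary file and the expected count of 4 are assumptions for this environment. In real use, the file would be produced with ansible all -m ping -u kifarunix > "$out".

```shell
# Sample ping output saved to a throwaway file.
out="$(mktemp)"
cat > "$out" <<'EOF'
192.168.122.47 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.122.123 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.122.60 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.122.152 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
EOF

expected=4    # three ES nodes plus the NFS server
ok_count="$(grep -c '| SUCCESS =>' "$out")"

if [ "$ok_count" -eq "$expected" ]; then
  echo "all $expected nodes reachable"
else
  echo "only $ok_count of $expected nodes reachable"
fi
```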

Create ELK Stack 8 Docker Containers Playbook Roles and Tasks

To begin with, here is what our directory layout looks like;

tree ~/ansible

/home/kifarunix/ansible
├── ansible.cfg
├── elkstack-configs
│   ├── docker-compose-v1.yml
│   ├── docker-compose-v2.yml
│   ├── docker-compose.yml
│   └── logstash
│       └── modsec.conf
├── inventory
│   ├── group_vars
│   │   └── all.yml
│   └── hosts
├── main.yml
└── roles
    ├── clean
    │   └── tasks
    │       └── main.yml
    ├── configure-nfs-share
    │   └── tasks
    │       └── main.yml
    ├── copy-docker-compose
    │   └── tasks
    │       └── main.yml
    ├── deploy-elk-cluster
    │   └── tasks
    │       └── main.yml
    ├── extra-configs
    │   └── tasks
    │       └── main.yml
    ├── install-docker
    │   └── tasks
    │       └── main.yml
    └── remove_initial_master_nodes_setting
        └── tasks
            └── main.yml

20 directories, 15 files
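
A layout like this can be scaffolded with a short loop rather than by hand. The sketch below creates the role and inventory directories with empty placeholder files; the temporary base directory is an assumption so that the demo does not touch an existing ~/ansible.

```shell
# Throwaway location for the demo; the guide itself uses ~/ansible.
base="$(mktemp -d)/ansible"

for role in clean configure-nfs-share copy-docker-compose deploy-elk-cluster \
            extra-configs install-docker remove_initial_master_nodes_setting; do
  mkdir -p "$base/roles/$role/tasks"
  : > "$base/roles/$role/tasks/main.yml"    # empty task file, to be filled in
done

mkdir -p "$base/inventory/group_vars" "$base/elkstack-configs/logstash"
: > "$base/inventory/group_vars/all.yml"
: > "$base/main.yml"

# List the task files that were created, one per role.
find "$base/roles" -name main.yml | sort
```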

Ansible Playbook Variables

We have defined various Ansible playbook variables: those that apply to all hosts are under inventory/group_vars, and those that are specific to a role are under the respective role directory.

Sample variables used for all hosts;

cat inventory/group_vars/all.yml

target_user: kifarunix

docker_compose_ver: 2.24.0

vm_max_map_count: 262144

domain_name: kifarunix-demo.com

elkstack_base_path: "/home/{{ target_user }}/elkstack_docker"

src_elkstack_configs: /home/kifarunix/ansible/elkstack-configs

elk_nfs_export: /mnt/elkstack

nfs_clients:

- "192.168.122.152(rw,sync,no_root_squash,no_subtree_check)"

- "192.168.122.123(rw,sync,no_root_squash,no_subtree_check)"

- "192.168.122.60(rw,sync,no_root_squash,no_subtree_check)"
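
To see how these variables fit together, the sketch below reproduces in plain shell what the templated values would expand to. It assumes that the NFS role (not shown in this part of the guide) joins elk_nfs_export and the nfs_clients entries into a single /etc/exports line in the standard "path client(options)" format; that is an illustration, not the role's confirmed behaviour.

```shell
target_user="kifarunix"
elk_nfs_export="/mnt/elkstack"
nfs_opts="rw,sync,no_root_squash,no_subtree_check"

# Equivalent of "/home/{{ target_user }}/elkstack_docker" after templating.
elkstack_base_path="/home/${target_user}/elkstack_docker"

# Assumed shape of the resulting /etc/exports entry.
exports_line="$elk_nfs_export"
for client in 192.168.122.152 192.168.122.123 192.168.122.60; do
  exports_line="$exports_line ${client}(${nfs_opts})"
done

echo "$elkstack_base_path"
echo "$exports_line"
```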

Tasks to Install Docker and Docker Compose on the Nodes

Under the directory roles/install-docker/tasks, we define how to install Docker and Docker Compose on the cluster nodes. We will also define how to adjust

cat roles/install-docker/tasks/main.yml---

- name: Install Docker Engine

block:

- name: Install Packages Required to install Various Repos and GPG Keys:Ubuntu/Debian

apt:

name:

- apt-transport-https

- ca-certificates

- curl

- gnupg-agent

- software-properties-common

state: present

update_cache: yes

when: ansible_distribution in ['Debian', 'Ubuntu'] and inventory_hostname in groups['elk-stack']

- name: Install Docker Engine Repo GPG Key on Ubuntu/Debian

shell: "curl -fsSL https://download.docker.com/linux/{{ ansible_distribution | lower }}/gpg | gpg --dearmor > /etc/apt/trusted.gpg.d/docker.gpg"

when: ansible_distribution in ['Debian', 'Ubuntu'] and inventory_hostname in groups['elk-stack']

- name: Install Docker Engine Repository on Ubuntu/Debian

apt_repository:

repo: "deb [arch=amd64] https://download.docker.com/linux/{{ ansible_distribution | lower }} {{ ansible_distribution_release }} stable"

state: present

update_cache: yes

when: ansible_distribution in ['Debian', 'Ubuntu'] and inventory_hostname in groups['elk-stack']

- name: Install Docker Repos on CentOS/Rocky/RHEL Derivatives

command: "yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo"

when: ansible_distribution in ['CentOS', 'Rocky'] or ansible_os_family == "RedHat" and inventory_hostname in groups['elk-stack']

- name: Install Docker CE on Ubuntu/Debian

apt:

name:

- docker-ce

state: present

when: ansible_distribution in ['Debian', 'Ubuntu'] and inventory_hostname in groups['elk-stack']

- name: Install Docker CE on CentOS/RHEL/Rocky

yum:

name:

- docker-ce

state: present

when: ansible_distribution in ['CentOS', 'Rocky'] or ansible_os_family == "RedHat" and inventory_hostname in groups['elk-stack']

- name: Start and Enable Docker Engine

systemd:

name: docker

state: started

enabled: yes

when: inventory_hostname in groups['elk-stack']

- name: Add User to Docker group

user:

name: "{{ target_user }}"

groups: docker

append: yes

when: inventory_hostname in groups['elk-stack']

- name: Install Docker Compose

shell: 'curl -L "https://github.com/docker/compose/releases/download/v{{ docker_compose_ver }}/docker-compose-{{ ansible_system | lower }}-{{ ansible_architecture }}" -o /usr/local/bin/docker-compose;chmod +x /usr/local/bin/docker-compose'

when: inventory_hostname in groups['elk-stack']
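The Docker Compose download above goes through the shell, so it runs again on every play. As a minimal sketch, the same step could be written with Ansible's get_url module. It assumes the same docker_compose_ver variable and destination path as the task above, and it is an alternative illustration, not part of the original role.

```yaml
# Sketch only: an alternative to the curl-based task, not the original role's method.
- name: Install Docker Compose (get_url variant)
  ansible.builtin.get_url:
    url: "https://github.com/docker/compose/releases/download/v{{ docker_compose_ver }}/docker-compose-{{ ansible_system | lower }}-{{ ansible_architecture }}"
    dest: /usr/local/bin/docker-compose
    mode: "0755"   # replaces the separate chmod +x step
  when: inventory_hostname in groups['elk-stack']
```

With the default force setting, get_url only downloads when the destination file does not exist yet, so repeated runs report ok instead of changed. The trade-off is that changing docker_compose_ver later will not replace an existing binary unless force is enabled.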

Important Elasticsearch Settings

There are multiple important settings required for Elasticsearch, such as disabling swapping, setting the maximum number of open files and maximum user processes, JVM settings and the maximum virtual memory map count.

Here, we will only create a role for setting the maximum virtual memory map count on the Elastic cluster nodes.

In the same role, let’s ensure the nodes can reach each other via their domain names. Hence, we define static hosts file entries for our ELK stack nodes.

cat roles/extra-configs/tasks/main.yml

---
- name: Update Virtual Memory Settings on All Cluster Nodes
  sysctl:
    name: vm.max_map_count
    value: '{{ vm_max_map_count }}'
    sysctl_set: true
  when: inventory_hostname in groups['elk-stack']

- name: Update hosts file
  lineinfile:
    dest: /etc/hosts
    regexp: "^{{ item.split()[0] }}"
    line: "{{ item }}"
  with_items:
    - "192.168.122.60 es01.kifarunix-demo.com es01"
    - "192.168.122.123 es02.kifarunix-demo.com es02"
    - "192.168.122.152 es03.kifarunix-demo.com es03"
  when: inventory_hostname in groups['elk-stack']

The variable used, vm_max_map_count, is defined in the global variables file, inventory/group_vars/all.yml, shown above.
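If you want the play to stop early when the kernel setting did not take effect, a verification step along the following lines could be appended to the role. This is a hedged sketch: the register name running_map_count is hypothetical, and the tasks are not part of the original role.

```yaml
# Sketch only: optional verification tasks, not part of the original role.
- name: Read the running vm.max_map_count value
  ansible.builtin.command: sysctl -n vm.max_map_count
  register: running_map_count   # hypothetical variable name
  changed_when: false           # read-only, never reports a change
  check_mode: false             # safe to run even during a dry run
  when: inventory_hostname in groups['elk-stack']

- name: Stop early if the kernel value is lower than expected
  ansible.builtin.assert:
    that:
      - running_map_count.stdout | int >= vm_max_map_count | int
    fail_msg: "vm.max_map_count is below {{ vm_max_map_count }}"
  when: inventory_hostname in groups['elk-stack']
```

The comparison casts both sides to integers because the command output is a string.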

Create NFS Share on NFS Server

We will be using shared volumes to store some ELK stack configuration files. Some of these files need to be accessed by containers running on separate ELK stack nodes. As such, we will create an NFS share, /mnt/elkstack, as we did in our previous guide.

Note that the NFS server is already installed and running.

cat roles/configure-nfs-share/tasks/main.yml

---
- name: Check if NFS Share is already configured
  shell: grep -q "{{ elk_nfs_export }} {{ nfs_clients | join(' ') }}" /etc/exports
  register: grep_result
  failed_when: false
  when: inventory_hostname in groups['nfs-server']

- name: Configure NFS Share
  lineinfile:
    path: /etc/exports
    line: "{{ elk_nfs_export }} {{ nfs_clients | join(' ') }}"
    create: yes
  when: inventory_hostname in groups['nfs-server'] and grep_result.rc != 0

- name: Create ELK Stack Docker Volumes Directories
  file:
    path: "{{ item }}"
    state: directory
    recurse: yes
  with_items:
    - "{{ elk_nfs_export }}/certs"
    - "{{ elk_nfs_export }}/data"
    - "{{ elk_nfs_export }}/configs/logstash"
    - "{{ elk_nfs_export }}/data/elasticsearch/01"
    - "{{ elk_nfs_export }}/data/elasticsearch/02"
    - "{{ elk_nfs_export }}/data/elasticsearch/03"
    - "{{ elk_nfs_export }}/data/kibana/01"
    - "{{ elk_nfs_export }}/data/kibana/02"
    - "{{ elk_nfs_export }}/data/kibana/03"
    - "{{ elk_nfs_export }}/data/logstash/01"
    - "{{ elk_nfs_export }}/data/logstash/02"
    - "{{ elk_nfs_export }}/data/logstash/03"
  when: inventory_hostname in groups['nfs-server']

- name: Reload NFS Exports
  command: exportfs -arvf
  when: inventory_hostname in groups['nfs-server']
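Since lineinfile only adds the line when it is missing, the grep pre-check is optional. The sketch below shows one possible variant that registers the lineinfile result and reloads the exports only when /etc/exports actually changed. The register name exports_entry is hypothetical, and this is an illustration rather than the role used in this guide.

```yaml
# Sketch only: a variant of the export tasks, not the original role.
- name: Configure NFS Share
  ansible.builtin.lineinfile:
    path: /etc/exports
    line: "{{ elk_nfs_export }} {{ nfs_clients | join(' ') }}"
    create: true
  register: exports_entry   # hypothetical variable name
  when: inventory_hostname in groups['nfs-server']

- name: Reload NFS Exports only when /etc/exports changed
  ansible.builtin.command: exportfs -ra
  when:
    - inventory_hostname in groups['nfs-server']
    - exports_entry is changed
```

If you adopt this variant, keep the reload task after the directory-creation task, as in the role above, so that the exported path exists before exportfs runs.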

ELK Stack Configs

Under the elkstack-configs directory, we have the configuration files for Elasticsearch, Kibana, Logstash and (File)beats.

To begin with, let’s create a Logstash pipeline filters configuration as we used in our previous guide.

cat elkstack-configs/logstash/modsec.conf

input {
  beats {
    port => 5044
  }
}
filter {
  # Extract event time, log severity level, source of attack (client), and the alert message.
  grok {
    match => { "message" => "(?<event_time>%{MONTH}\s%{MONTHDAY}\s%{TIME}\s%{YEAR})\] \[\:%{LOGLEVEL:log_level}.*client\s%{IPORHOST:src_ip}:\d+]\s(?<alert_message>.*)" }
  }
  # Extract Rules File from Alert Message
  grok {
    match => { "alert_message" => "(?<rulesfile>\[file \"(/.+.conf)\"\])" }
  }
  grok {
    match => { "rulesfile" => "(?<rules_file>/.+.conf)" }
  }
  # Extract Attack Type from Rules File
  grok {
    match => { "rulesfile" => "(?<attack_type>[A-Z]+-[A-Z][^.]+)" }
  }
  # Extract Rule ID from Alert Message
  grok {
    match => { "alert_message" => "(?<ruleid>\[id \"(\d+)\"\])" }
  }
  grok {
    match => { "ruleid" => "(?<rule_id>\d+)" }
  }
  # Extract Attack Message (msg) from Alert Message
  grok {
    match => { "alert_message" => "(?<msg>\[msg \S(.*?)\"\])" }
  }
  grok {
    match => { "msg" => "(?<alert_msg>\"(.*?)\")" }
  }
  # Extract the User/Scanner Agent from Alert Message
  grok {
    match => { "alert_message" => "(?<scanner>User-Agent' \SValue: `(.*?)')" }
  }
  grok {
    match => { "scanner" => "(?<user_agent>:(.*?)\')" }
  }
  grok {
    match => { "alert_message" => "(?<agent>User-Agent: (.*?)\')" }
  }
  grok {
    match => { "agent" => "(?<user_agent>: (.*?)\')" }
  }
  # Extract the Target Host
  grok {
    match => { "alert_message" => "(hostname \"%{IPORHOST:dst_host})" }
  }
  # Extract the Request URI
  grok {
    match => { "alert_message" => "(uri \"%{URIPATH:request_uri})" }
  }
  grok {
    match => { "alert_message" => "(?<ref>referer: (.*))" }
  }
  grok {
    match => { "ref" => "(?<referer> (.*))" }
  }
  mutate {
    # Remove unnecessary characters from the fields.
    gsub => [
      "alert_msg", "[\"]", "",
      "user_agent", "[:\"'`]", "",
      "user_agent", "^\s*", "",
      "referer", "^\s*", ""
    ]
    # Remove the unnecessary fields so that we only remain with
    # General message, rules_file, attack_type, rule_id, alert_msg, user_agent, hostname (being attacked), Request URI and Referer.
    remove_field => [ "alert_message", "rulesfile", "ruleid", "msg", "scanner", "agent", "ref" ]
  }
}
output {
  elasticsearch {
    hosts => ["https://${ES_NAME}:9200"]
    user => "${ELASTICSEARCH_USERNAME}"
    password => "${ELASTICSEARCH_PASSWORD}"
    ssl => true
    cacert => "config/certs/ca/ca.crt"
  }
}

Basically, we will have three ELK Stack nodes in the cluster, each running a single instance of the Elasticsearch, Logstash and Kibana containers.

The variables used in the Elastic configs are defined in the Docker Compose environment variables file.

We also have two compose files:

- The first compose file sets up and starts the ELK stack containers on the first node. It generates the required SSL certs and stores them on the NFS share volume for use by the ELK stack containers on the other two nodes.

- The second compose file starts the ELK stack containers on the other two nodes and joins them to the first node to form a cluster.

Create Initial ELK Stack 8 Cluster Node Docker compose file;

cat elkstack-configs/docker-compose-v1.yml

version: '3.8'
services:
  es_setup:
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/${CERTS_PATH}
    user: "0"
    command: >
      bash -c '
        echo "Creating ES certs directory..."
        [[ -d ${CERTS_PATH} ]] || mkdir ${CERTS_PATH}
        # Check if CA certificate exists
        if [ ! -f ${CERTS_PATH}/ca/ca.crt ]; then
          echo "Generating Wildcard SSL certs for ES (in PEM format)..."
          bin/elasticsearch-certutil ca --pem --days 3650 --out ${CERTS_PATH}/elkstack-ca.zip
          unzip -d ${CERTS_PATH} ${CERTS_PATH}/elkstack-ca.zip
          bin/elasticsearch-certutil cert \
            --name elkstack-certs \
            --ca-cert ${CERTS_PATH}/ca/ca.crt \
            --ca-key ${CERTS_PATH}/ca/ca.key \
            --pem \
            --dns "*.${DOMAIN_SUFFIX},localhost,${NODE01_NAME},${NODE02_NAME},${NODE03_NAME}" \
            --ip ${NODE01_IP} \
            --ip ${NODE02_IP} \
            --ip ${NODE03_IP} \
            --days ${DAYS} \
            --out ${CERTS_PATH}/elkstack-certs.zip
          unzip -d ${CERTS_PATH} ${CERTS_PATH}/elkstack-certs.zip
        else
          echo "CA certificate already exists. Skipping Certificates generation."
        fi
        # Check if Elasticsearch is ready
        until curl -s --cacert ${CERTS_PATH}/ca/ca.crt -u "elastic:${ELASTIC_PASSWORD}" https://${NODE01_NAME}:9200 | grep -q "${CLUSTER_NAME}"; do sleep 10; done
        # Set kibana_system password
        if curl -sk -XGET --cacert ${CERTS_PATH}/ca/ca.crt "https://${NODE01_NAME}:9200" -u "kibana_system:${KIBANA_PASSWORD}" | grep -q "${CLUSTER_NAME}"; then
          echo "Password for kibana_system is working. Proceeding with Elasticsearch setup for kibana_system."
        else
          echo "Failed to authenticate with kibana_system password. Trying to set the password for kibana_system."
          until curl -s -XPOST --cacert ${CERTS_PATH}/ca/ca.crt -u "elastic:${ELASTIC_PASSWORD}" -H "Content-Type: application/json" https://${NODE01_NAME}:9200/_security/user/kibana_system/_password -d "{\"password\":\"${KIBANA_PASSWORD}\"}" | grep -q "^{}"; do sleep 10; done
        fi
        echo "Setup is done!"
      '
    networks:
      - elastic
    healthcheck:
      test: ["CMD-SHELL", "[ -f ${CERTS_PATH}/elkstack-certs/elkstack-certs.crt ]"]
      interval: 1s
      timeout: 5s
      retries: 120

  elasticsearch:
    depends_on:
      es_setup:
        condition: service_healthy
    container_name: ${NODE01_NAME}
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    environment:
      - node.name=${NODE01_NAME}
      - network.publish_host=${NODE01_IP}
      - cluster.name=${CLUSTER_NAME}
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms1g -Xmx1g"
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.enrollment.enabled=false
      - xpack.security.autoconfiguration.enabled=false
      - xpack.security.http.ssl.key=certs/elkstack-certs/elkstack-certs.key
      - xpack.security.http.ssl.certificate=certs/elkstack-certs/elkstack-certs.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.key=certs/elkstack-certs/elkstack-certs.key
      - xpack.security.transport.ssl.certificate=certs/elkstack-certs/elkstack-certs.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - cluster.initial_master_nodes=${NODE01_NAME},${NODE02_NAME},${NODE03_NAME}
      - discovery.seed_hosts=${NODE01_IP},${NODE02_IP},${NODE03_IP}
      - KIBANA_USERNAME=${KIBANA_USERNAME}
      - KIBANA_PASSWORD=${KIBANA_PASSWORD}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - elasticsearch_data:/usr/share/elasticsearch/data
      - certs:/usr/share/elasticsearch/${CERTS_PATH}
      - /etc/hosts:/etc/hosts
    ports:
      - ${ES_PORT}:9200
      - ${ES_TS_PORT}:9300
    networks:
      - elastic
    healthcheck:
      test: ["CMD-SHELL", "curl --fail -k -s -u elastic:${ELASTIC_PASSWORD} --cacert ${CERTS_PATH}/ca/ca.crt https://${NODE01_NAME}:9200"]
      interval: 30s
      timeout: 10s
      retries: 5
    restart: unless-stopped

  kibana:
    image: docker.elastic.co/kibana/kibana:${STACK_VERSION}
    container_name: kibana
    environment:
      - SERVER_NAME=${KIBANA_SERVER_HOST}
      - ELASTICSEARCH_HOSTS=https://${NODE01_NAME}:9200
      - ELASTICSEARCH_SSL_CERTIFICATEAUTHORITIES=${CERTS_PATH}/ca/ca.crt
      - ELASTICSEARCH_USERNAME=${KIBANA_USERNAME}
      - ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}
      - XPACK_REPORTING_ROLES_ENABLED=false
      - XPACK_REPORTING_KIBANASERVER_HOSTNAME=localhost
      - XPACK_ENCRYPTEDSAVEDOBJECTS_ENCRYPTIONKEY=${SAVEDOBJECTS_ENCRYPTIONKEY}
      - XPACK_SECURITY_ENCRYPTIONKEY=${REPORTING_ENCRYPTIONKEY}
      - XPACK_REPORTING_ENCRYPTIONKEY=${SECURITY_ENCRYPTIONKEY}
    volumes:
      - kibana_data:/usr/share/kibana/data
      - certs:/usr/share/kibana/${CERTS_PATH}
      - /etc/hosts:/etc/hosts
    ports:
      - ${KIBANA_PORT}:5601
    networks:
      - elastic
    depends_on:
      elasticsearch:
        condition: service_healthy
    restart: unless-stopped

  logstash:
    image: docker.elastic.co/logstash/logstash:${STACK_VERSION}
    container_name: logstash
    environment:
      - XPACK_MONITORING_ENABLED=false
      - ELASTICSEARCH_USERNAME=${ES_USER}
      - ELASTICSEARCH_PASSWORD=${ELASTIC_PASSWORD}
      - NODE_NAME=${NODE01_NAME}
      - CERTS_PATH=${CERTS_PATH}
    ports:
      - ${BEATS_INPUT_PORT}:5044
    volumes:
      - logstash_filters:/usr/share/logstash/pipeline/:ro
      - certs:/usr/share/logstash/${CERTS_PATH}
      - logstash_data:/usr/share/logstash/data
      - /etc/hosts:/etc/hosts
    networks:
      - elastic
    depends_on:
      elasticsearch:
        condition: service_healthy
    restart: unless-stopped

volumes:
  certs:
    driver: local
    driver_opts:
      type: nfs
      o: "addr=${NFS_SVR_IP},nfsvers=4,rw"
      device: ":${NFS_ELK_CERTS}"
  elasticsearch_data:
    driver: local
    driver_opts:
      type: nfs
      o: "addr=${NFS_SVR_IP},nfsvers=4,rw"
      device: ":${NFS_ELK_DATA}/elasticsearch/01"
  kibana_data:
    driver: local
    driver_opts:
      type: nfs
      o: "addr=${NFS_SVR_IP},nfsvers=4,rw"
      device: ":${NFS_ELK_DATA}/kibana/01"
  logstash_filters:
    driver: local
    driver_opts:
      type: nfs
      o: "addr=${NFS_SVR_IP},nfsvers=4,rw"
      device: ":${NFS_ELK_CONFIGS}/logstash"
  logstash_data:
    driver: local
    driver_opts:
      type: nfs
      o: "addr=${NFS_SVR_IP},nfsvers=4,rw"
      device: ":${NFS_ELK_DATA}/logstash/01"

networks:
  elastic:

The second compose file;

cat elkstack-configs/docker-compose-v2.yml

services:
  elasticsearch:
    container_name: ${NODENN_NAME}
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    command: >
      bash -c '
        until [ -f "${CERTS_PATH}/elkstack-certs/elkstack-certs.crt" ]; do
          sleep 10;
        done;
        exec /usr/local/bin/docker-entrypoint.sh
      '
    environment:
      - node.name=${NODENN_NAME}
      - network.publish_host=${NODENN_IP}
      - cluster.name=${CLUSTER_NAME}
      - bootstrap.memory_lock=true
      - cluster.initial_master_nodes=${NODE01_NAME},${NODE02_NAME},${NODE03_NAME}
      - discovery.seed_hosts=${NODE01_IP},${NODE02_IP},${NODE03_IP}
      - "ES_JAVA_OPTS=-Xms1g -Xmx1g"
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.enrollment.enabled=false
      - xpack.security.autoconfiguration.enabled=false
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/elkstack-certs/elkstack-certs.key
      - xpack.security.http.ssl.certificate=certs/elkstack-certs/elkstack-certs.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.key=certs/elkstack-certs/elkstack-certs.key
      - xpack.security.transport.ssl.certificate=certs/elkstack-certs/elkstack-certs.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - KIBANA_USERNAME=${KIBANA_USERNAME}
      - KIBANA_PASSWORD=${KIBANA_PASSWORD}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - elasticsearch_data:/usr/share/elasticsearch/data
      - certs:/usr/share/elasticsearch/${CERTS_PATH}
      - /etc/hosts:/etc/hosts
    ports:
      - ${ES_PORT}:9200
      - ${ES_TS_PORT}:9300
    networks:
      - elastic
    healthcheck:
      test: ["CMD-SHELL", "curl --fail -k -s -u elastic:${ELASTIC_PASSWORD} --cacert ${CERTS_PATH}/ca/ca.crt https://${NODENN_NAME}:9200"]
      interval: 30s
      timeout: 10s
      retries: 5
    restart: unless-stopped

  kibana:
    image: docker.elastic.co/kibana/kibana:${STACK_VERSION}
    container_name: kibana
    environment:
      - SERVER_NAME=${KIBANA_SERVER_HOST}
      - ELASTICSEARCH_HOSTS=https://${NODENN_NAME}:9200
      - ELASTICSEARCH_SSL_CERTIFICATEAUTHORITIES=${CERTS_PATH}/ca/ca.crt
      - ELASTICSEARCH_USERNAME=${KIBANA_USERNAME}
      - ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}
      - XPACK_REPORTING_ROLES_ENABLED=false
      - XPACK_REPORTING_KIBANASERVER_HOSTNAME=localhost
      - XPACK_ENCRYPTEDSAVEDOBJECTS_ENCRYPTIONKEY=${SAVEDOBJECTS_ENCRYPTIONKEY}
      - XPACK_SECURITY_ENCRYPTIONKEY=${REPORTING_ENCRYPTIONKEY}
      - XPACK_REPORTING_ENCRYPTIONKEY=${SECURITY_ENCRYPTIONKEY}
    volumes:
      - kibana_data:/usr/share/kibana/data
      - certs:/usr/share/kibana/${CERTS_PATH}
      - /etc/hosts:/etc/hosts
    ports:
      - ${KIBANA_PORT}:5601
    networks:
      - elastic
    depends_on:
      elasticsearch:
        condition: service_healthy
    restart: unless-stopped

  logstash:
    image: docker.elastic.co/logstash/logstash:${STACK_VERSION}
    container_name: logstash
    environment:
      - XPACK_MONITORING_ENABLED=false
      - ELASTICSEARCH_USERNAME=${ES_USER}
      - ELASTICSEARCH_PASSWORD=${ELASTIC_PASSWORD}
      - NODE_NAME=${NODENN_NAME}
      - CERTS_PATH=${CERTS_PATH}
    ports:
      - ${BEATS_INPUT_PORT}:5044
    volumes:
      - certs:/usr/share/logstash/${CERTS_PATH}
      - logstash_filters:/usr/share/logstash/pipeline/:ro
      - logstash_data:/usr/share/logstash/data
      - /etc/hosts:/etc/hosts
    networks:
      - elastic
    depends_on:
      elasticsearch:
        condition: service_healthy
    restart: unless-stopped

volumes:
  certs:
    driver: local
    driver_opts:
      type: nfs
      o: "addr=${NFS_SVR_IP},nfsvers=4,rw"
      device: ":${NFS_ELK_CERTS}"
  elasticsearch_data:
    driver: local
    driver_opts:
      type: nfs
      o: "addr=${NFS_SVR_IP},nfsvers=4,rw"
      device: ":${NFS_ELK_DATA}/elasticsearch/NN"
  kibana_data:
    driver: local
    driver_opts:
      type: nfs
      o: "addr=${NFS_SVR_IP},nfsvers=4,rw"
      device: ":${NFS_ELK_DATA}/kibana/NN"
  logstash_filters:
    driver: local
    driver_opts:
      type: nfs
      o: "addr=${NFS_SVR_IP},nfsvers=4,rw"
      device: ":${NFS_ELK_CONFIGS}/logstash"
  logstash_data:
    driver: local
    driver_opts:
      type: nfs
      o: "addr=${NFS_SVR_IP},nfsvers=4,rw"
      device: ":${NFS_ELK_DATA}/logstash/NN"

networks:
  elastic:

The variables are defined in the Docker Compose environment variables file;

cat elkstack-configs/.env

# Version of Elastic products

STACK_VERSION=8.12.0

# Set the cluster name

CLUSTER_NAME=elk-docker-cluster

# Set Elasticsearch Node Name

NODE01_NAME=es01

NODE02_NAME=es02

NODE03_NAME=es03

# Docker Host IP to advertise to cluster nodes

NODE01_IP=192.168.122.60

NODE02_IP=192.168.122.123

NODE03_IP=192.168.122.152

# Elasticsearch super user

ES_USER=elastic

# Password for the 'elastic' user (at least 6 characters). No special characters, ! or @ or $.

ELASTIC_PASSWORD=ChangeME

# Elasticsearch container name

ES_NAME=elasticsearch

# Port to expose Elasticsearch HTTP API to the host

ES_PORT=9200

#ES_PORT=127.0.0.1:9200

ES_TS_PORT=9300

# Port to expose Kibana to the host

KIBANA_PORT=5601

KIBANA_SERVER_HOST=0.0.0.0

# Kibana Encryption. Requires at least 32 characters. Can be generated using `openssl rand -hex 16`

SAVEDOBJECTS_ENCRYPTIONKEY=ca11560aec8410ff002d011c2a172608

REPORTING_ENCRYPTIONKEY=288f06b3a14a7f36dd21563d50ec76d4

SECURITY_ENCRYPTIONKEY=62c781d3a2b2eaee1d4cebcc6bf42b48

# Kibana - Elasticsearch Authentication Credentials for user kibana_system

# Password for the 'kibana_system' user (at least 6 characters). No special characters, ! or @ or $.

KIBANA_USERNAME=kibana_system

KIBANA_PASSWORD=ChangeME

# Domain Suffix for ES Wildcard SSL certs

DOMAIN_SUFFIX=kifarunix-demo.com

# Generated Certs Validity Period

DAYS=3650

# SSL/TLS Certs Directory

CERTS_PATH=config/certs

# Logstash Input Port

BEATS_INPUT_PORT=5044

# NFS Server

NFS_SVR_IP=192.168.122.47

NFS_ELK_CERTS=/mnt/elkstack/certs

NFS_ELK_DATA=/mnt/elkstack/data

NFS_ELK_CONFIGS=/mnt/elkstack/configs

The Docker environment variables will be the same across the entire cluster. We will, however, replace NODENN in the compose file with the respective node variable: NODENN becomes NODE02 on the second node and NODE03 on the third node. We also mount the NFS share data path based on the number of the node the container is running on, signified by NN. Hence, on node02, NN=02 and on node03, NN=03.
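To make the substitution concrete, the fragment below shows how the affected lines of docker-compose-v2.yml would read on the second node once every NN has been replaced with 02. Only the lines that contain NN are shown; everything else in the file stays as it is.

```yaml
# Fragment only: lines from docker-compose-v2.yml after NN -> 02 on the second node.
services:
  elasticsearch:
    container_name: ${NODE02_NAME}
    environment:
      - node.name=${NODE02_NAME}
      - network.publish_host=${NODE02_IP}
    healthcheck:
      test: ["CMD-SHELL", "curl --fail -k -s -u elastic:${ELASTIC_PASSWORD} --cacert ${CERTS_PATH}/ca/ca.crt https://${NODE02_NAME}:9200"]
  kibana:
    environment:
      - ELASTICSEARCH_HOSTS=https://${NODE02_NAME}:9200
  logstash:
    environment:
      - NODE_NAME=${NODE02_NAME}
volumes:
  elasticsearch_data:
    driver_opts:
      device: ":${NFS_ELK_DATA}/elasticsearch/02"
  kibana_data:
    driver_opts:
      device: ":${NFS_ELK_DATA}/kibana/02"
  logstash_data:
    driver_opts:
      device: ":${NFS_ELK_DATA}/logstash/02"
```

On the third node the same lines end up with NODE03 and 03 instead.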

Copy ELK Stack Configs and Docker Compose Files to Respective Nodes

Now, we need to copy the Docker compose files, environment variables and configs to the respective nodes.

cat roles/copy-docker-compose/tasks/main.yml

---
- name: Create directory for ELK Stack Docker Compose files
  file:
    path: "{{ elkstack_base_path }}"
    state: directory
  when: inventory_hostname in groups['elk-stack']

- name: Create Docker-Compose file for ELK Node 01
  copy:
    src: "{{ src_elkstack_configs }}/docker-compose-v1.yml"
    dest: "{{ elkstack_base_path }}/docker-compose.yml"
  when: ansible_host == 'node01'

- name: Create Docker-Compose file for ELK Node 02/03
  copy:
    src: "{{ src_elkstack_configs }}/docker-compose-v2.yml"
    dest: "{{ elkstack_base_path }}/docker-compose.yml"
  when: ansible_host in ["node02","node03"]

- name: Copy Environment Variables
  copy:
    src: "{{ src_elkstack_configs }}/.env"
    dest: "{{ elkstack_base_path }}"
  when: inventory_hostname in groups['elk-stack']

- name: Update the NODENN variable accordingly
  replace:
    path: "{{ elkstack_base_path }}/docker-compose.yml"
    regexp: 'NN'
    replace: "{{ '02' if ansible_host == 'node02' else ('03' if ansible_host == 'node03') }}"
  when: "'node02' in ansible_host or 'node03' in ansible_host"
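The nested conditional in the last task can be written more compactly. The sketch below assumes, as the conditions above imply, that ansible_host is set to values of the form node02 and node03 in the inventory. Under that assumption, the last two characters of ansible_host are exactly the node number. It is offered as an illustration, not as the role's actual task.

```yaml
# Sketch only: assumes ansible_host values look like "node02" / "node03".
- name: Update the NODENN variable accordingly
  ansible.builtin.replace:
    path: "{{ elkstack_base_path }}/docker-compose.yml"
    regexp: 'NN'
    replace: "{{ ansible_host[-2:] }}"   # "node02" -> "02", "node03" -> "03"
  when: ansible_host in ["node02", "node03"]
```

The exact-match condition also avoids touching the file on any host whose name merely contains node02 or node03 as a substring.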

Deploying ELK Stack 8 Cluster on Docker using Ansible

Next, create a task to deploy ELK stack 8 cluster on Docker.

We are using docker compose to build the cluster containers.

cat roles/deploy-elk-cluster/tasks/main.yml

---
- name: Deploy ELK Stack on Docker using Docker Compose
  command: docker compose up -d
  args:
    chdir: "{{ elkstack_base_path }}/"
  when: inventory_hostname in groups['elk-stack']

Remove cluster.initial_master_nodes setting

According to Elastic;

After the cluster has formed, remove the cluster.initial_master_nodes setting from each node’s configuration. It should not be set for master-ineligible nodes, master-eligible nodes joining an existing cluster, or nodes which are restarting.

If you leave cluster.initial_master_nodes in place once the cluster has formed then there is a risk that a future misconfiguration may result in bootstrapping a new cluster alongside your existing cluster. It may not be possible to recover from this situation without losing data.

cat roles/remove_initial_master_nodes_setting/tasks/main.yml

---
# No -it here: Ansible does not attach a terminal to the command,
# and docker exec -t fails when its input is not a TTY.
- name: Check ELK Stack 8 Cluster Status
  shell: "docker exec es01 bash -c 'curl -sk -XGET https://es01:9200 -u \"${ES_USER}:${ELASTIC_PASSWORD}\"'"
  register: es_cluster_status
  run_once: true
  when: inventory_hostname in groups['elk-stack']

- name: Remove initial_master_nodes Setting After Cluster Formed
  lineinfile:
    path: "{{ elkstack_base_path }}/docker-compose.yml"
    regexp: '^(\s*- cluster\.initial_master_nodes=.*)$'
    state: absent
  when: inventory_hostname in groups['elk-stack'] and es_cluster_status.rc == 0

# docker compose restart does not re-read docker-compose.yml, so the edited
# service has to be recreated with "up -d" for the change to take effect.
- name: Restart Docker Compose Services
  command: docker compose up -d
  args:
    chdir: "{{ elkstack_base_path }}"
  when: inventory_hostname in groups['elk-stack'] and es_cluster_status.rc == 0
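The status check above only confirms that a curl command could be executed inside the container. If you prefer to wait until all three nodes have actually joined, a stricter check could query the cluster health API with Ansible's uri module. This is a hedged sketch: elastic_password is a hypothetical Ansible variable (the guide only defines the password in the compose .env file), cluster_health is a hypothetical register name, and the URL assumes the es01 hosts entry and the domain_name variable defined earlier.

```yaml
# Sketch only: a stricter readiness check; elastic_password is a hypothetical variable.
- name: Wait until all three nodes have joined the cluster
  ansible.builtin.uri:
    url: "https://es01.{{ domain_name }}:9200/_cluster/health"
    url_username: elastic
    url_password: "{{ elastic_password }}"
    force_basic_auth: true
    validate_certs: false   # same effect as the -k flag passed to curl in this guide
    return_content: true
  register: cluster_health   # hypothetical variable name
  until: cluster_health.status == 200 and cluster_health.json.number_of_nodes == 3
  retries: 30
  delay: 10
  when: inventory_hostname in groups['elk-stack']
```

The retries and delay values are arbitrary; adjust them to how long your nodes typically take to form the cluster.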

Docker Clean Up Role

Similarly, we have created a role to tear down the ELK stack cluster!

cat roles/clean/tasks/main.yml

---
- name: Check if ELK Stack Compose directory exists
  stat:
    path: "{{ elkstack_base_path }}"
  register: dir_exists
  when: inventory_hostname in groups['elk-stack']

- name: Check if ELK Stack Docker Compose yml/yaml file exists
  find:
    path: "{{ elkstack_base_path }}"
    patterns: "*.yml,*.yaml"
  register: compose_files
  when: "inventory_hostname in groups['elk-stack'] and dir_exists.stat.exists"

- name: Tear down the stack!
  command: docker compose down -v
  args:
    chdir: "{{ elkstack_base_path }}"
  when: "inventory_hostname in groups['elk-stack'] and dir_exists.stat.exists and compose_files.matched > 0"

- name: Remove Docker compose and env files
  file:
    path: "{{ elkstack_base_path }}"
    state: absent
  when: inventory_hostname in groups['elk-stack']

- name: Check if Docker service is active
  command: systemctl is-active docker
  ignore_errors: true
  register: docker_service_status
  when: inventory_hostname in groups['elk-stack']

- name: Check if Docker images exist
  command: docker image ls -q
  ignore_errors: true
  register: docker_images
  when: inventory_hostname not in groups['nfs-server'] and docker_service_status.rc == 0

# join(' ') turns the list of image IDs into space-separated arguments;
# without it the list would be rendered as ['id1', 'id2'] on the command line.
- name: Remove Docker Images
  shell: docker image rm -f {{ docker_images.stdout_lines | default([]) | join(' ') }}
  ignore_errors: true
  when: inventory_hostname in groups['elk-stack'] and docker_images.stdout_lines | default([]) | length > 0

- name: Delete ELK Stack Data/Certs/Configs on NFS Share
  file:
    path: "{{ elk_nfs_export }}"
    state: absent
  when: inventory_hostname in groups['nfs-server']

- name: Remove ELK Stack NFS Export from the NFS Server
  lineinfile:
    path: /etc/exports
    state: absent
    regexp: "^{{ elk_nfs_export }}.*"
  when: inventory_hostname in groups['nfs-server']

# The user module with "groups: docker" and "append: no" would make docker the
# user's only supplementary group, so gpasswd is used to drop just that group.
- name: Remove user from Docker group
  command: "gpasswd -d {{ target_user }} docker"
  failed_when: false
  when: inventory_hostname not in groups['nfs-server']

- name: Remove Docker Engine Repo GPG Key on Ubuntu/Debian
  file:
    path: /etc/apt/trusted.gpg.d/docker.gpg
    state: absent
  when: ansible_distribution in ['Debian', 'Ubuntu'] and inventory_hostname not in groups['nfs-server']

- name: Remove Docker Engine Repository on Ubuntu/Debian
  apt_repository:
    repo: "deb [arch=amd64] https://download.docker.com/linux/{{ ansible_distribution | lower }} {{ ansible_distribution_release }} stable"
    state: absent
    update_cache: yes
  when: ansible_distribution in ['Debian', 'Ubuntu'] and inventory_hostname not in groups['nfs-server']

# Parentheses are needed because "and" binds tighter than "or".
- name: Remove Docker Repos on CentOS/Rocky/RHEL Derivatives
  command: "yum-config-manager --disable docker-ce"
  when: (ansible_distribution in ['CentOS', 'Rocky'] or ansible_os_family == "RedHat") and inventory_hostname not in groups['nfs-server']

- name: Uninstall Docker CE on Ubuntu/Debian
  apt:
    name:
      - docker-ce
    state: absent
  when: ansible_distribution in ['Debian', 'Ubuntu'] and inventory_hostname not in groups['nfs-server']

- name: Uninstall Docker CE on CentOS/RHEL/Rocky
  yum:
    name:
      - docker-ce
    state: absent
  when: (ansible_distribution in ['CentOS', 'Rocky'] or ansible_os_family == "RedHat") and inventory_hostname not in groups['nfs-server']

- name: Remove Docker Compose
  file:
    path: /usr/local/bin/docker-compose
    state: absent
  when: inventory_hostname not in groups['nfs-server']

Create Main Ansible Playbook

It is now time to deploy the ELK stack 8 cluster on Docker using the roles/tasks created above.

To ensure that everything runs as expected, we need to run all plays/tasks in a specific order.

For example, in our case here, the order of execution is: install Docker > extra-configs (update some of the settings on the Docker hosts) > configure the NFS share > copy the compose and env files to the Docker hosts > deploy the ELK cluster using Docker Compose > remove the cluster.initial_master_nodes setting.

Thus, our main Ansible playbook;

cat main.yml

---
- hosts: all
  gather_facts: True
  remote_user: "{{ target_user }}"
  become: yes
  roles:
    - install-docker
    - extra-configs
    - configure-nfs-share
    - copy-docker-compose
    - deploy-elk-cluster
    - remove_initial_master_nodes_setting
#    - clean
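Instead of commenting the clean role in and out, the tear-down could be gated behind a tag. The sketch below is one possible layout, not the playbook used in this guide. It relies on Ansible's special never tag, which keeps a role from running unless one of its other tags is requested explicitly.

```yaml
---
# Sketch only: the same play with a tag instead of a commented-out role.
- hosts: all
  gather_facts: true
  remote_user: "{{ target_user }}"
  become: true
  roles:
    - role: install-docker
    - role: extra-configs
    - role: configure-nfs-share
    - role: copy-docker-compose
    - role: deploy-elk-cluster
    - role: remove_initial_master_nodes_setting
    - role: clean
      tags: [never, clean]   # skipped unless --tags clean is passed
```

With this layout, a plain ansible-playbook main.yml run deploys the cluster, while adding --tags clean runs only the tear-down role.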

Perform ELK Stack Deployment via Ansible Dry Run

You can do a dry run to see how the plays will be executed without actually making changes on the nodes. This also helps you identify and fix any issues before running the deployment.

ansible-playbook main.yml --ask-become-pass -C

Where:

- ansible-playbook: the Ansible command for running playbooks.

- main.yml: the name of our main Ansible playbook file.

- --ask-become-pass: tells Ansible to prompt you for the password required to become a privileged user (typically, to escalate to sudo or root). Without this option, tasks that require elevated privileges might fail.

- -C or --check: runs the playbook in check mode, a “dry run”. Ansible does not make any changes to the target hosts. Instead, it simulates the execution of the tasks and reports what changes would have been made, which lets you preview the impact of the playbook.

Some of the tasks may show as failed because the files they are meant to modify have not yet been copied to the target hosts.
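If those dry-run failures are distracting, a task can be told to tolerate errors only while check mode is active by using the ansible_check_mode magic variable. The sketch below applies this to the NN replacement task as an example. It is an optional variant of that task rather than what the role in this guide does, and the replace expression is simplified on the assumption that only node02 and node03 reach it.

```yaml
# Sketch only: tolerate dry-run failures for a task that edits a file copied earlier in the run.
- name: Update the NODENN variable accordingly
  ansible.builtin.replace:
    path: "{{ elkstack_base_path }}/docker-compose.yml"
    regexp: 'NN'
    replace: "{{ '02' if ansible_host == 'node02' else '03' }}"
  when: ansible_host in ["node02", "node03"]
  ignore_errors: "{{ ansible_check_mode }}"   # true only during a dry run
```

In a normal run ansible_check_mode is false, so real failures still stop the play.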

Deploy ELK Stack 8 Cluster on Docker Containers using Ansible

When ready to run the play, remove the -C option.

ansible-playbook main.yml --ask-become-pass

Sample output;

BECOME password:

[WARNING]: Invalid characters were found in group names but not replaced, use -vvvv to see details

PLAY [all] *********************************************************************************************************************************************************

TASK [Gathering Facts] *********************************************************************************************************************************************

ok: [192.168.122.47]

ok: [192.168.122.60]

ok: [192.168.122.123]

ok: [192.168.122.152]

TASK [install-docker : Install Packages Required to install Various Repos and GPG Keys:Ubuntu/Debian] **************************************************************

skipping: [192.168.122.47]

ok: [192.168.122.152]

ok: [192.168.122.60]

ok: [192.168.122.123]

TASK [install-docker : Install Docker Engine Repo GPG Key on Ubuntu/Debian] ****************************************************************************************

skipping: [192.168.122.47]

changed: [192.168.122.123]

changed: [192.168.122.60]

changed: [192.168.122.152]

TASK [install-docker : Install Docker Engine Repository on Ubuntu/Debian] ******************************************************************************************

skipping: [192.168.122.47]

changed: [192.168.122.152]

changed: [192.168.122.60]

changed: [192.168.122.123]

TASK [install-docker : Install Docker Repos on CentOS/Rocky/RHEL Derivatives] **************************************************************************************

skipping: [192.168.122.60]

skipping: [192.168.122.123]

skipping: [192.168.122.152]

skipping: [192.168.122.47]

TASK [install-docker : Install Docker CE on Ubuntu/Debian] *********************************************************************************************************

skipping: [192.168.122.47]

changed: [192.168.122.60]

changed: [192.168.122.123]

changed: [192.168.122.152]

TASK [install-docker : Install Docker CE on CentOS/RHEL/Rocky] *****************************************************************************************************

skipping: [192.168.122.60]

skipping: [192.168.122.123]

skipping: [192.168.122.152]

skipping: [192.168.122.47]

TASK [install-docker : Start and Enable Docker Engine] *************************************************************************************************************

skipping: [192.168.122.47]

ok: [192.168.122.123]

ok: [192.168.122.60]

ok: [192.168.122.152]

TASK [install-docker : Add User to Docker group] *******************************************************************************************************************

skipping: [192.168.122.47]

ok: [192.168.122.60]

ok: [192.168.122.152]

ok: [192.168.122.123]

TASK [install-docker : Install Docker Compose] *********************************************************************************************************************

skipping: [192.168.122.47]

changed: [192.168.122.60]

changed: [192.168.122.123]

changed: [192.168.122.152]

TASK [extra-configs : Update Virtual Memory Settings on All Cluster Nodes] *****************************************************************************************

skipping: [192.168.122.47]

ok: [192.168.122.60]

ok: [192.168.122.152]

ok: [192.168.122.123]

TASK [extra-configs : Update hosts file] ***************************************************************************************************************************

skipping: [192.168.122.47] => (item=192.168.122.60 es01.kifarunix-demo.com es01)

skipping: [192.168.122.47] => (item=192.168.122.123 es02.kifarunix-demo.com es02)

skipping: [192.168.122.47] => (item=192.168.122.152 es03.kifarunix-demo.com es03)

skipping: [192.168.122.47]

ok: [192.168.122.123] => (item=192.168.122.60 es01.kifarunix-demo.com es01)

ok: [192.168.122.152] => (item=192.168.122.60 es01.kifarunix-demo.com es01)

ok: [192.168.122.60] => (item=192.168.122.60 es01.kifarunix-demo.com es01)

ok: [192.168.122.152] => (item=192.168.122.123 es02.kifarunix-demo.com es02)

ok: [192.168.122.60] => (item=192.168.122.123 es02.kifarunix-demo.com es02)

ok: [192.168.122.123] => (item=192.168.122.123 es02.kifarunix-demo.com es02)

ok: [192.168.122.60] => (item=192.168.122.152 es03.kifarunix-demo.com es03)

ok: [192.168.122.152] => (item=192.168.122.152 es03.kifarunix-demo.com es03)

ok: [192.168.122.123] => (item=192.168.122.152 es03.kifarunix-demo.com es03)

TASK [configure-nfs-share : Check if NFS Share is already configured] **********************************************************************************************

skipping: [192.168.122.60]

skipping: [192.168.122.123]

skipping: [192.168.122.152]

changed: [192.168.122.47]

TASK [configure-nfs-share : Configure NFS Share] *******************************************************************************************************************

skipping: [192.168.122.60]

skipping: [192.168.122.123]

skipping: [192.168.122.152]

changed: [192.168.122.47]

TASK [configure-nfs-share : Create ELK Stack Docker Volumes Directories] *******************************************************************************************

skipping: [192.168.122.60] => (item=/mnt/elkstack/certs)

skipping: [192.168.122.60] => (item=/mnt/elkstack/data)

skipping: [192.168.122.60] => (item=/mnt/elkstack/configs/logstash)

skipping: [192.168.122.123] => (item=/mnt/elkstack/certs)

skipping: [192.168.122.60] => (item=/mnt/elkstack/data/elasticsearch/01)

skipping: [192.168.122.60] => (item=/mnt/elkstack/data/elasticsearch/02)

skipping: [192.168.122.123] => (item=/mnt/elkstack/data)

skipping: [192.168.122.60] => (item=/mnt/elkstack/data/elasticsearch/03)

skipping: [192.168.122.123] => (item=/mnt/elkstack/configs/logstash)

skipping: [192.168.122.60] => (item=/mnt/elkstack/data/kibana/01)

skipping: [192.168.122.123] => (item=/mnt/elkstack/data/elasticsearch/01)

skipping: [192.168.122.60] => (item=/mnt/elkstack/data/kibana/02)

skipping: [192.168.122.123] => (item=/mnt/elkstack/data/elasticsearch/02)

skipping: [192.168.122.152] => (item=/mnt/elkstack/certs)

skipping: [192.168.122.60] => (item=/mnt/elkstack/data/kibana/03)

skipping: [192.168.122.123] => (item=/mnt/elkstack/data/elasticsearch/03)

skipping: [192.168.122.152] => (item=/mnt/elkstack/data)

skipping: [192.168.122.60] => (item=/mnt/elkstack/data/logstash/01)

skipping: [192.168.122.123] => (item=/mnt/elkstack/data/kibana/01)

skipping: [192.168.122.60] => (item=/mnt/elkstack/data/logstash/02)

skipping: [192.168.122.123] => (item=/mnt/elkstack/data/kibana/02)

skipping: [192.168.122.152] => (item=/mnt/elkstack/configs/logstash)

skipping: [192.168.122.60] => (item=/mnt/elkstack/data/logstash/03)

skipping: [192.168.122.152] => (item=/mnt/elkstack/data/elasticsearch/01)

skipping: [192.168.122.123] => (item=/mnt/elkstack/data/kibana/03)

skipping: [192.168.122.60]

skipping: [192.168.122.123] => (item=/mnt/elkstack/data/logstash/01)

skipping: [192.168.122.152] => (item=/mnt/elkstack/data/elasticsearch/02)

skipping: [192.168.122.123] => (item=/mnt/elkstack/data/logstash/02)

skipping: [192.168.122.152] => (item=/mnt/elkstack/data/elasticsearch/03)

skipping: [192.168.122.123] => (item=/mnt/elkstack/data/logstash/03)

skipping: [192.168.122.152] => (item=/mnt/elkstack/data/kibana/01)

skipping: [192.168.122.123]

skipping: [192.168.122.152] => (item=/mnt/elkstack/data/kibana/02)

skipping: [192.168.122.152] => (item=/mnt/elkstack/data/kibana/03)

skipping: [192.168.122.152] => (item=/mnt/elkstack/data/logstash/01)

skipping: [192.168.122.152] => (item=/mnt/elkstack/data/logstash/02)

skipping: [192.168.122.152] => (item=/mnt/elkstack/data/logstash/03)

skipping: [192.168.122.152]

changed: [192.168.122.47] => (item=/mnt/elkstack/certs)

changed: [192.168.122.47] => (item=/mnt/elkstack/data)

changed: [192.168.122.47] => (item=/mnt/elkstack/configs/logstash)

changed: [192.168.122.47] => (item=/mnt/elkstack/data/elasticsearch/01)

changed: [192.168.122.47] => (item=/mnt/elkstack/data/elasticsearch/02)

changed: [192.168.122.47] => (item=/mnt/elkstack/data/elasticsearch/03)

changed: [192.168.122.47] => (item=/mnt/elkstack/data/kibana/01)

changed: [192.168.122.47] => (item=/mnt/elkstack/data/kibana/02)

changed: [192.168.122.47] => (item=/mnt/elkstack/data/kibana/03)

changed: [192.168.122.47] => (item=/mnt/elkstack/data/logstash/01)

changed: [192.168.122.47] => (item=/mnt/elkstack/data/logstash/02)

changed: [192.168.122.47] => (item=/mnt/elkstack/data/logstash/03)

TASK [configure-nfs-share : Reload NFS Exports] ********************************************************************************************************************

skipping: [192.168.122.60]

skipping: [192.168.122.123]

skipping: [192.168.122.152]

changed: [192.168.122.47]

TASK [copy-docker-compose : Create directory for ELK Stack Docker Compose files] ***********************************************************************************

skipping: [192.168.122.47]

changed: [192.168.122.60]

changed: [192.168.122.123]

changed: [192.168.122.152]

TASK [copy-docker-compose : Create Docker-Compose file for ELK Node 01] ********************************************************************************************

skipping: [192.168.122.123]

skipping: [192.168.122.152]

skipping: [192.168.122.47]

changed: [192.168.122.60]

TASK [copy-docker-compose : Create Docker-Compose file for ELK Node 02/03] *****************************************************************************************

skipping: [192.168.122.60]

skipping: [192.168.122.47]

changed: [192.168.122.123]

changed: [192.168.122.152]

TASK [copy-docker-compose : Copy Environment Variables] ************************************************************************************************************

skipping: [192.168.122.47]

changed: [192.168.122.152]

changed: [192.168.122.123]

changed: [192.168.122.60]

TASK [copy-docker-compose : Update the NODENN variable accordingly] ************************************************************************************************

skipping: [192.168.122.60]

skipping: [192.168.122.47]

changed: [192.168.122.123]

changed: [192.168.122.152]

TASK [deploy-elk-cluster : Deploy ELK Stack on Docker using Docker Compose] ****************************************************************************************

skipping: [192.168.122.47]

changed: [192.168.122.123]

changed: [192.168.122.152]

changed: [192.168.122.60]

PLAY RECAP *********************************************************************************************************************************************************

192.168.122.123 : ok=15 changed=9 unreachable=0 failed=0 skipped=7 rescued=0 ignored=0

192.168.122.152 : ok=15 changed=9 unreachable=0 failed=0 skipped=7 rescued=0 ignored=0

192.168.122.47 : ok=5 changed=4 unreachable=0 failed=0 skipped=17 rescued=0 ignored=0

192.168.122.60 : ok=14 changed=8 unreachable=0 failed=0 skipped=8 rescued=0 ignored=0
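With this many hosts and tasks, it is easy to miss a non-zero counter in the recap. The snippet below is a minimal sketch of how the recap could be checked automatically. The function name `recap_problems` is our own for illustration and is not part of Ansible. It assumes the playbook output is piped in on standard input.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Ansible): reads ansible-playbook output on
# stdin and prints every host whose "failed" or "unreachable" counter in the
# PLAY RECAP is greater than zero. Prints nothing when all hosts are clean.
recap_problems() {
  awk '
    /^PLAY RECAP/ { in_recap = 1; next }      # only look at lines after the recap header
    in_recap && /unreachable=/ {
      host = $1                               # first field is the host name or IP
      for (i = 2; i <= NF; i++) {
        split($i, kv, "=")                    # e.g. "failed=0" -> kv[1]="failed", kv[2]="0"
        if ((kv[1] == "failed" || kv[1] == "unreachable") && kv[2] + 0 > 0)
          print host, $i
      }
    }
  '
}
```

For example, if the run output was saved to a file (`run.log` is an assumed name), `recap_problems < run.log` prints nothing for the recap above, since every host reports unreachable=0 and failed=0.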

And that is it!!

Our ELK Stack 8 Cluster is now running on Docker containers!

Verify ELK Stack 8 Cluster

Now, log in to one of the ELK Stack nodes and check the cluster status;

kifarunix@es01:~$ docker exec -it es01 bash -c 'curl -k -XGET https://es01:9200/_cat/nodes -u elastic'

Sample output;

Enter host password for user 'elastic':

192.168.122.152 39 98 44 2.53 1.17 0.75 cdfhilmrstw - es03

192.168.122.123 48 95 44 2.63 1.21 0.78 cdfhilmrstw - es02

192.168.122.60 65 97 35 1.96 0.87 0.43 cdfhilmrstw * es01

The cluster is up!! All three nodes have joined, and the asterisk (*) in the master column shows that es01 is the elected master.
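If you want to check this from a script rather than by eye, the output can be summarized with a little awk. This is a rough sketch, assuming the default `_cat/nodes` column layout shown above, where the master marker is the ninth column and the node name is the tenth. The function name `summarize_nodes` is ours, for illustration only.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `_cat/nodes` output on stdin and prints the number
# of nodes plus the name of the elected master. Assumes the default columns
# (ip, heap, ram, cpu, three load averages, roles, master marker, name).
summarize_nodes() {
  awk '
    NF >= 10 {                        # skips blank lines and the password prompt
      n++
      if ($9 == "*") master = $10     # "*" marks the elected master
    }
    END { printf "nodes=%d master=%s\n", n, (master == "" ? "none" : master) }
  '
}
```

Fed the three node lines from the sample output above, it prints `nodes=3 master=es01`. A result of `master=none`, or a node count lower than expected, would mean the cluster has not fully formed yet.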

Check health;

kifarunix@es02:~$ docker exec -it es02 bash -c 'curl -k -XGET https://es01:9200/_cat/health?v -u elastic'

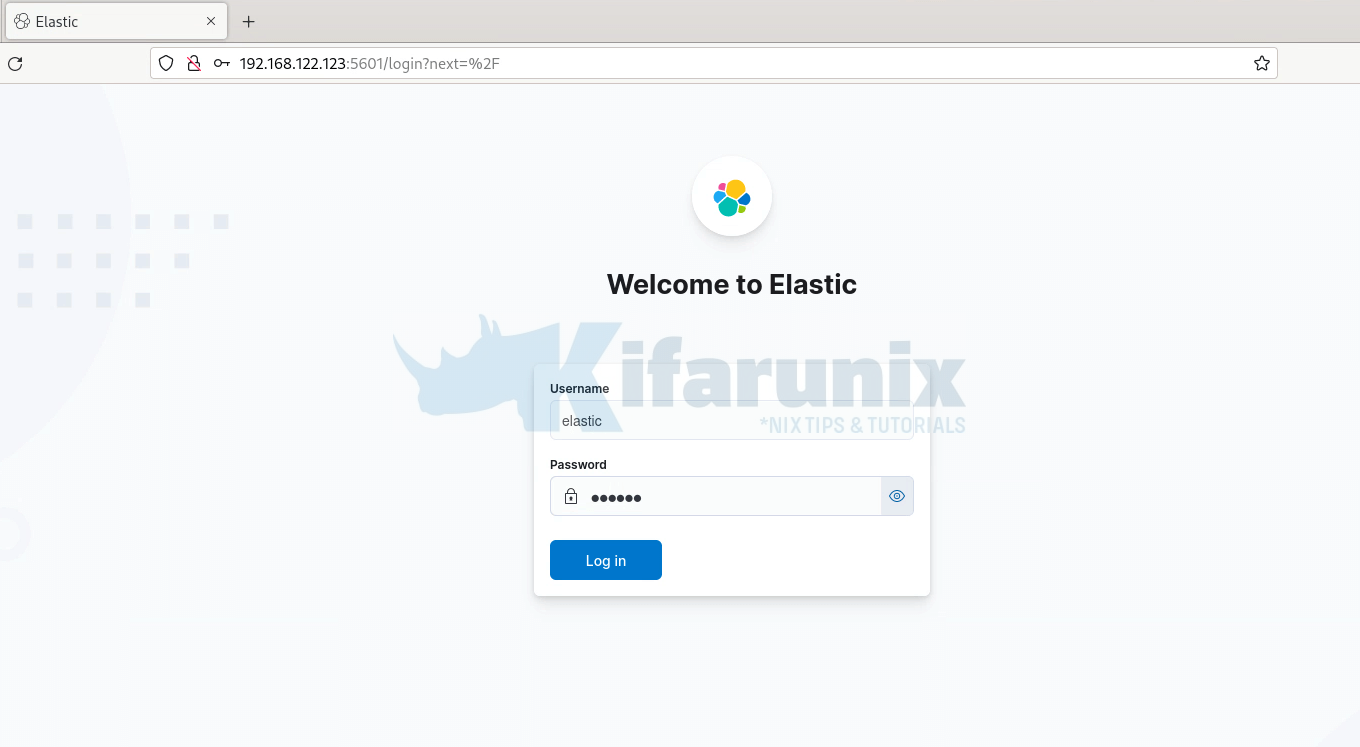

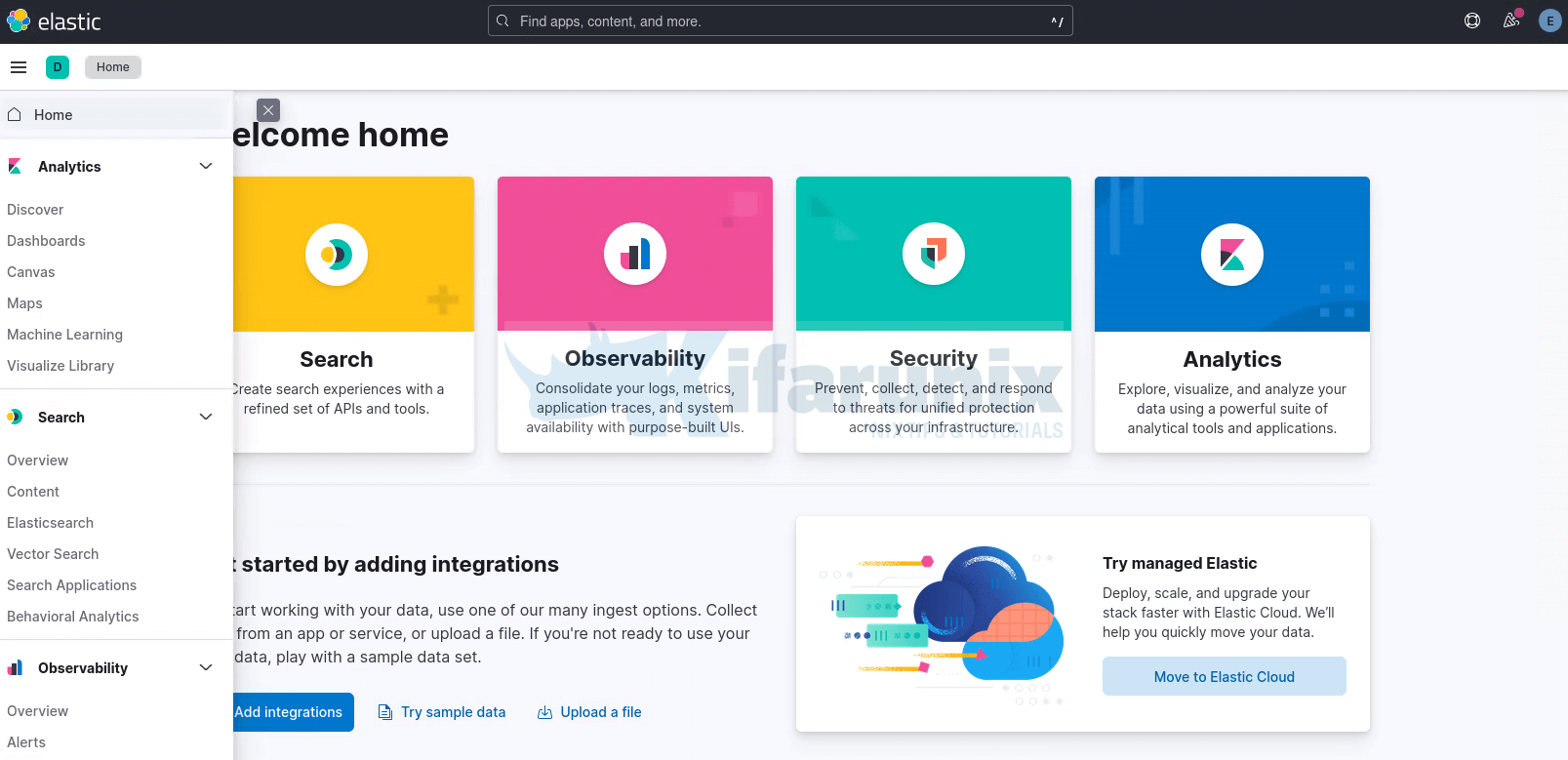

Sample output;