Welcome to our guide on configuring a shared filesystem for Kubernetes on Rook Ceph Storage. In a Kubernetes cluster, reliable and scalable storage is essential, especially for stateful applications. Rook, an open-source cloud-native storage orchestrator, can be used to deploy a Ceph storage cluster in Kubernetes. This offers a robust solution for providing shared storage for Kubernetes workloads.

Configure Shared Filesystem for Kubernetes on Rook Ceph Storage

The Ceph Storage Types

Ceph provides several storage types to cater for different use cases and requirements within a distributed storage infrastructure. The primary storage types provided by Ceph include:

- Object Storage (RADOS Gateway – RGW):

  - Ceph’s Object Storage is a scalable and distributed storage system that allows users to store and retrieve data using an HTTP RESTful interface.

  - It is commonly accessed via the RADOS Gateway (RGW) and integrates with applications and tools that support the S3 or Swift API.

  - Ceph Object Storage is ideal for handling large amounts of unstructured data like images, videos, and backups.

- Block Storage (RBD – RADOS Block Device):

  - Provides raw block device access for applications, similar to physical disks.

  - Suitable for stateful applications like databases, virtual machines, and containerized workloads such as Kubernetes persistent volumes.

  - Offers high performance and low latency for frequent data access.

- File System (CephFS):

  - Ceph File System (CephFS) offers a distributed, POSIX-compliant file system that can be mounted on client machines, providing a shared file storage solution for applications. Its POSIX-compliant file operations make it suitable for applications that require a traditional file system interface. It is commonly used for shared storage in multi-node environments, including scenarios where multiple pods or virtual machines need access to shared data.

If you want to learn how to provision block storage, you can check the links below;

Provision Block Storage for Kubernetes on Rook Ceph Cluster

Configure and Use Ceph Block Device on Linux Clients

The Ceph Filesystem Components

There are two primary components of the CephFS:

- The Metadata Server (MDS):

  - The MDS handles file system metadata, including file and directory structures, permissions, and attributes. The metadata includes information such as file names, sizes, timestamps, and access control lists.

  - It handles file system operations like create, delete, rename, and attribute lookup.

  - CephFS requires at least one Metadata Server daemon (ceph-mds) to run.

- The CephFS Clients:

  - These are libraries or user programs that interact with CephFS.

  - They communicate with the MDS for metadata operations and directly access the OSDs for reading and writing user data.

  - Examples include the ceph-fuse client for FUSE mounting and the kcephfs client for native kernel integration.

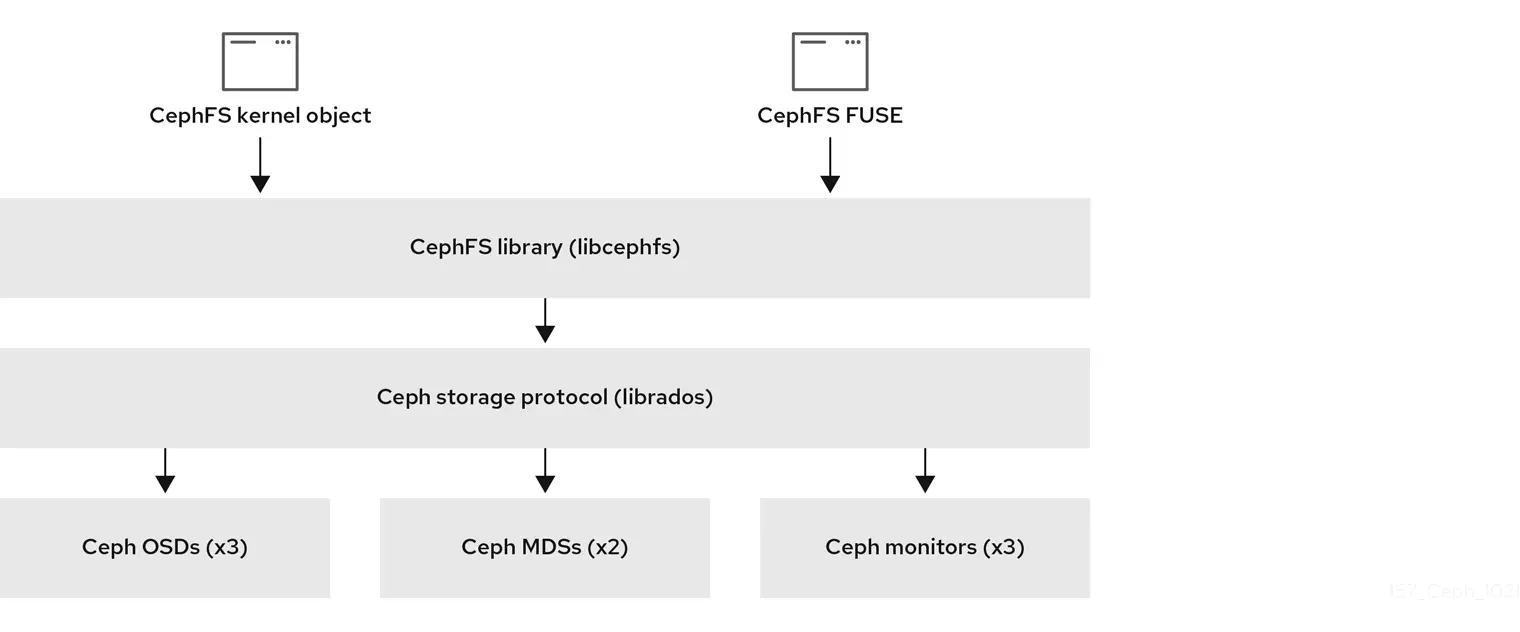

Other components include (as depicted in the screenshot below):

- Ceph File System Library (libcephfs): This is the library that provides a higher-level interface for applications to interact with CephFS.

- Ceph RADOS Library (librados): The librados library provides a low-level interface that allows applications to interact with the core Ceph storage cluster.

- Ceph OSDs (Object Storage Daemons): These daemons store the actual data chunks (objects) for both CephFS metadata and user data

- Ceph Manager: It is responsible for managing and monitoring the Ceph cluster

To configure a shared storage filesystem for Kubernetes workloads on Rook Ceph storage, proceed as follows.

Deploy Ceph Storage Cluster in Kubernetes using Rook

In our previous guide, we provided a comprehensive tutorial on how to deploy Ceph storage cluster in Kubernetes using Rook. Check the link below;

Deploy Ceph Storage Cluster in Kubernetes using Rook

Configure Shared Filesystem for Kubernetes

Ensure Cluster is in Healthy State

Before you proceed to configure the Ceph shared filesystem on the Rook Ceph storage cluster, ensure that your Ceph cluster is in a healthy state.

Note that we are using Rook toolbox pod to execute the Ceph commands;

kubectl -n rook-ceph exec -it rook-ceph-tools-564c8446db-xh6qp -- ceph -s

Output;

  cluster:
    id:     e1466372-9f01-42af-8ad7-0bfcfa71ef78
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum a,b,c (age 2d)
    mgr: a(active, since 5h), standbys: b
    osd: 3 osds: 3 up (since 2d), 3 in (since 2d)

  data:
    pools:   2 pools, 33 pgs
    objects: 124 objects, 209 MiB
    usage:   728 MiB used, 299 GiB / 300 GiB avail
    pgs:     33 active+clean
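The health check above can also be scripted, for example as a gate before the later steps. The following is only a minimal sketch: the function name ceph_is_healthy is hypothetical, and the usage line assumes the toolbox runs as a Deployment named rook-ceph-tools, so that the pod suffix does not have to be copied by hand.

```shell
# Succeeds (exit status 0) when the "ceph -s" text on stdin reports HEALTH_OK.
# ceph_is_healthy is a hypothetical helper name used only for this sketch.
ceph_is_healthy() {
  grep -Eq '^[[:space:]]*health:[[:space:]]*HEALTH_OK'
}

# Example usage (assumes the toolbox Deployment is named rook-ceph-tools):
#   kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph -s | ceph_is_healthy \
#     && echo "cluster is healthy" || echo "cluster needs attention"
```

Any other state, such as HEALTH_WARN or HEALTH_ERR, makes the function return a non-zero status, so it can be used directly in an if statement.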

Create Shared Filesystem StorageClass on Rook Ceph Cluster

A StorageClass is an object that defines the different storage configurations, such as volume type, provisioning policies, and parameters for dynamic provisioning of Persistent Volumes (PVs).

Rook ships with a StorageClass manifest file that defines the CephFS storage configuration options.

The manifest is located under the rook/deploy/examples/csi/cephfs/ directory.

ls ~/rook/deploy/examples/csi/cephfs/

There are two StorageClass manifest files: one for the replicated pool storage option (storageclass.yaml) and the other for the erasure-coded pool option (storageclass-ec.yaml). It is recommended to use the default replicated data pool for CephFS.

This is how the replica pool StorageClass is configured (without comment lines);

cat ~/rook/deploy/examples/csi/cephfs/storageclass.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-cephfs
provisioner: rook-ceph.cephfs.csi.ceph.com # driver:namespace:operator
parameters:
  clusterID: rook-ceph # namespace:cluster
  fsName: myfs
  pool: myfs-replicated
  csi.storage.k8s.io/provisioner-secret-name: rook-csi-cephfs-provisioner
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph # namespace:cluster
  csi.storage.k8s.io/controller-expand-secret-name: rook-csi-cephfs-provisioner
  csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph # namespace:cluster
  csi.storage.k8s.io/node-stage-secret-name: rook-csi-cephfs-node
  csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph # namespace:cluster
reclaimPolicy: Delete
allowVolumeExpansion: true
mountOptions:

Where:

- apiVersion and kind: Specify the API version and kind of the Kubernetes resource. In this case, it’s a StorageClass in the storage.k8s.io/v1 API version.

- metadata section: Defines metadata for the StorageClass. The name of the StorageClass is set to “rook-cephfs”.

- provisioner: rook-ceph.cephfs.csi.ceph.com

  - This identifies the CSI provisioner responsible for creating and managing CephFS volumes for your Kubernetes pods. In this case, it specifies the Rook-Ceph CSI provisioner for CephFS.

  - Rook provides a CSI driver for CephFS, enabling containerized applications to access and utilize persistent storage from your Ceph cluster.

  - Ensure the namespace used, rook-ceph, matches the namespace of the Rook operator.

- parameters section: Specifies parameters specific to Rook CephFS. These parameters include:

  - fsName: The name of the CephFS file system, set to “myfs”. You can update this to your preferred name.

  - pool: The Ceph pool to use for provisioning, set to “myfs-replicated”.

  - csi.storage.k8s.io/provisioner-secret-name: The name of the secret containing the provisioner credentials for Rook CephFS.

  - csi.storage.k8s.io/controller-expand-secret-name: The name of the secret containing the credentials for expanding the file system.

  - csi.storage.k8s.io/node-stage-secret-name: The name of the secret containing the credentials for staging on the node.

- reclaimPolicy: Specifies the reclaim policy for Persistent Volumes (PVs) created by this StorageClass. In this case, it’s set to “Delete”, meaning that when a PVC (Persistent Volume Claim) using this StorageClass is deleted, the corresponding PV will also be deleted. The other option is Retain.

- allowVolumeExpansion: Enables or disables volume expansion for PVCs created using this StorageClass. It is set to true, allowing volume expansion.

- mountOptions: Specifies additional mount options for the file system. In this manifest, it’s left empty, but you can add specific mount options if needed.

So, run the command below to create the replicated pool CephFS StorageClass;

cd ~/rook/deploy/examples

kubectl create -f csi/cephfs/storageclass.yaml

You can list available StorageClasses using the command below;

kubectl get sc

NAME              PROVISIONER                     RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
rook-ceph-block   rook-ceph.rbd.csi.ceph.com      Delete          Immediate           true                   20h
rook-cephfs       rook-ceph.cephfs.csi.ceph.com   Delete          Immediate           true                   39s

Create the Ceph Filesystem

Similarly, Rook ships with two YAML manifests for creating a filesystem on replicated or erasure-coded pools: filesystem.yaml and filesystem-ec.yaml respectively. We are using the replicated-pool filesystem in this guide!

The files provide the configuration for the pools to be created (both data and metadata pools) as well as the MDS settings.

This is how the filesystem.yaml manifest is defined (without comment lines);

cat ~/rook/deploy/examples/filesystem.yaml

apiVersion: ceph.rook.io/v1
kind: CephFilesystem
metadata:
  name: myfs
  namespace: rook-ceph # namespace:cluster
spec:
  metadataPool:
    replicated:
      size: 3
      requireSafeReplicaSize: true
    parameters:
      compression_mode: none
  dataPools:
    - name: replicated
      failureDomain: host
      replicated:
        size: 3
        requireSafeReplicaSize: true
      parameters:
        compression_mode: none
  preserveFilesystemOnDelete: true
  metadataServer:
    activeCount: 1
    activeStandby: true
    placement:
      podAntiAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
                - key: app
                  operator: In
                  values:
                    - rook-ceph-mds
            topologyKey: kubernetes.io/hostname
        preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                  - key: app
                    operator: In
                    values:
                      - rook-ceph-mds
              topologyKey: topology.kubernetes.io/zone
    priorityClassName: system-cluster-critical
    livenessProbe:
      disabled: false
    startupProbe:
      disabled: false
---
apiVersion: ceph.rook.io/v1
kind: CephFilesystemSubVolumeGroup
metadata:
  name: myfs-csi # lets keep the svg crd name same as `filesystem name + csi` for the default csi svg
  namespace: rook-ceph # namespace:cluster
spec:
  filesystemName: myfs
  pinning:
    distributed: 1

Where:

- apiVersion and kind: Specify the API version and kind of the Kubernetes resource. In this case, it’s a CephFilesystem, which represents a Ceph distributed file system managed by Rook.

- metadata section:

  - Defines metadata for the CephFilesystem; for example, the name of the filesystem is set to “myfs”. You can set a different name.

  - namespace is set to the default operator namespace, rook-ceph.

- spec:

  - Defines the desired state of the object.

  - Ceph File System uses two pools: a metadata pool and a data pool.

  - The metadata pool stores the data of the Ceph Metadata Server (MDS), which generally consists of inodes; that is, the file ownership, permissions, creation date and time, last modified or accessed date and time, parent directory, and so on.

  - The data pool stores file data. Ceph may store a file as one or more objects, typically representing smaller chunks of file data such as extents.

  - Hence, metadataPool and dataPools specify the replication size, the failure domain, the compression mode…

- preserveFilesystemOnDelete: Set to true to preserve the filesystem on deletion, allowing for recovery in case of accidental deletion.

- metadataServer: configures the CephFS Metadata Server (MDS):

  - activeCount: sets 1 active MDS instance.

  - activeStandby: enables a hot standby MDS for automatic failover.

  - placement: defines rules for scheduling MDS pods:

    - podAntiAffinity: avoids scheduling MDS pods on the same node or zone as another MDS.

    - requiredDuringSchedulingIgnoredDuringExecution: A hard rule. With the topologyKey kubernetes.io/hostname, two pods labelled app: rook-ceph-mds are never scheduled on the same node.

    - preferredDuringSchedulingIgnoredDuringExecution: Specifies a preferred rule for scheduling. The weight (here set to 100) indicates the strength of the preference.

    - podAffinityTerm: Defines the label selector and the topology domain on which the preference is based.

    - labelSelector: Selects the pods with the label “app: rook-ceph-mds”, which should preferably be kept apart from each other.

    - topologyKey: Specifies that the preferred anti-affinity rule is based on the topology.kubernetes.io/zone label associated with nodes, so MDS pods are preferably spread across zones.

  - priorityClassName: defines the priority of the MDS pods relative to other pods. Priority influences the order in which pending pods are scheduled and which pods can be preempted when a node has no room for a higher-priority pod. Kubernetes comes with two built-in priority classes:

    - system-cluster-critical: This class is for components that are critical to the cluster as a whole. Pods using this class have higher priority than user-defined workloads but lower than system-node-critical.

    - system-node-critical: This class is for crucial node-level components such as network plugins. It is the highest built-in priority.

  - livenessProbe & startupProbe: enable health checks for MDS pods.

- A CephFilesystemSubVolumeGroup (SVG) is an abstraction within Ceph File System (CephFS) that acts as a logical grouping of CephFS subvolumes. It makes it easy to organize data and to apply policies, such as pinning, to the whole group.

- metadata:

  - name: Sets the name of the SVG resource to myfs-csi (the filesystem name followed by csi, as the inline comment notes). This name is used to identify and reference the group.

  - namespace: defines the namespace.

- spec:

  - filesystemName: This field specifies the CephFS filesystem where this SVG belongs. Here, it references the previously defined filesystem named myfs.

  - pinning: This section defines how the subvolumes within this SVG are pinned to MDS ranks.

  - distributed: 1: This enables distributed pinning, which spreads the subvolumes of this SVG across the active MDS ranks to balance the metadata load. Setting it to 0 disables it. It concerns metadata handling by the MDS daemons and does not control how data is placed on the OSDs.

  - Read more about pinning.

Read more on the documentation!

Thus, execute the command below to create CephFS (with default settings);

kubectl create -f ~/rook/deploy/examples/filesystem.yaml

Verify the creation of the Ceph filesystem MDS pods. We named the filesystem myfs.

kubectl -n rook-ceph get pods | grep myfs

rook-ceph-mds-myfs-a-587bb456c-g4g4t   2/2   Running   0   6m52s
rook-ceph-mds-myfs-b-fbf99cb49-jpqsh   2/2   Running   0   6m51s

Or, check with label;

kubectl -n rook-ceph get pod -l app=rook-ceph-mds

Check the cluster status;

kubectl -n rook-ceph exec -it rook-ceph-tools-564c8446db-xh6qp -- ceph -s

  cluster:
    id:     e1466372-9f01-42af-8ad7-0bfcfa71ef78
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum a,b,c (age 2d)
    mgr: a(active, since 3h), standbys: b
    mds: 1/1 daemons up, 1 hot standby
    osd: 3 osds: 3 up (since 5d), 3 in (since 5d)

  data:
    volumes: 1/1 healthy
    pools:   4 pools, 81 pgs
    objects: 146 objects, 209 MiB
    usage:   796 MiB used, 299 GiB / 300 GiB avail
    pgs:     81 active+clean

  io:
    client: 853 B/s rd, 1 op/s rd, 0 op/s wr

As you can see, we have 1 active MDS and 1 standby.

You can check the CephFS status from the Rook toolbox;

kubectl -n rook-ceph exec -it rook-ceph-tools-564c8446db-xh6qp -- ceph fs ls -f json-pretty

Sample output;

[
    {
        "name": "myfs",
        "metadata_pool": "myfs-metadata",
        "metadata_pool_id": 6,
        "data_pool_ids": [
            7
        ],
        "data_pools": [
            "myfs-replicated"
        ]
    }
]
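The data pool reported here, myfs-replicated, is the filesystem name joined with the data pool name from filesystem.yaml, and it is the same value that the StorageClass references in its pool parameter. If you rename the filesystem, both manifests have to change together. The sketch below checks that they agree; the helper names are hypothetical, the data pool name replicated is taken from the manifest used in this guide, and the awk parsing only understands simple "key: value" lines rather than full YAML.

```shell
# Print the value of the first "key: value" line whose first field is the key.
# Trailing "# comments" are ignored because only the second field is printed.
manifest_value() {
  awk -v key="$1:" '$1 == key { print $2; exit }' "$2"
}

# Succeeds when the StorageClass fsName and pool match the CephFilesystem
# manifest. Assumes a single data pool named "replicated", as in this guide.
storageclass_matches_fs() {
  sc_file="$1"
  fs_file="$2"
  fs_name="$(manifest_value name "$fs_file")"
  [ "$(manifest_value fsName "$sc_file")" = "$fs_name" ] &&
    [ "$(manifest_value pool "$sc_file")" = "${fs_name}-replicated" ]
}

# Example usage:
#   cd ~/rook/deploy/examples
#   storageclass_matches_fs csi/cephfs/storageclass.yaml filesystem.yaml \
#     && echo "manifests agree"
```

The check reads the first name: entry in the filesystem manifest, which is the CephFilesystem name because list items such as "- name: replicated" start with a dash and are skipped.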

kubectl -n rook-ceph exec -it rook-ceph-tools-564c8446db-xh6qp -- ceph fs status

myfs - 0 clients
====
RANK   STATE            MDS      ACTIVITY     DNS   INOS   DIRS   CAPS
0      active           myfs-b   Reqs: 0 /s   12    15     14     0
0-s    standby-replay   myfs-a   Evts: 0 /s   2     5      4      0

POOL              TYPE       USED   AVAIL
myfs-metadata     metadata   132k   94.7G
myfs-replicated   data       0      94.7G

MDS version: ceph version 18.2.0 (5dd24139a1eada541a3bc16b6941c5dde975e26d) reef (stable)

List the hosts, daemons, and processes:

kubectl -n rook-ceph exec -it rook-ceph-tools-564c8446db-xh6qp -- ceph orch ps --daemon_type=mds

NAME         HOST       PORTS   STATUS          REFRESHED   AGE   MEM USE   MEM LIM   VERSION   IMAGE ID
mds.myfs-a   worker02           running (43m)   0s ago      43m   -         -                   8e1c0c287ee0
mds.myfs-b   worker03           running (43m)   0s ago      43m   -         -                   8e1c0c287ee0

Configure Pods to Access the Shared CephFS Storage

The CephFS storage is now ready for consumption. To demonstrate how to configure Kubernetes Pods to access the shared CephFS storage, let’s create two Pods that will access the same shared storage filesystem.

Create a PersistentVolumeClaim (PVC) for each Pod

A PersistentVolumeClaim (PVC) is a resource in Kubernetes that allows a Pod to request and use a specific amount of storage from a storage class. Remember we have created a CephFS storage class above named rook-cephfs;

kubectl get sc

NAME              PROVISIONER                     RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
rook-ceph-block   rook-ceph.rbd.csi.ceph.com      Delete          Immediate           true                   3d21h
rook-cephfs       rook-ceph.cephfs.csi.ceph.com   Delete          Immediate           true                   4h9m

A PVC acts as a request for storage and enables dynamic provisioning, allowing pods to access persistent storage that is automatically provisioned and managed by the underlying storage system.

Below is a manifest file to create a sample PVC;

vim demo-cephfs-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cephfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: rook-cephfs
  resources:
    requests:
      storage: 10Gi

Where:

- apiVersion and kind: Specify the API version and type of resource, in this case, a PersistentVolumeClaim.

- metadata: Contains metadata for the PVC, including the name (e.g. cephfs-pvc).

- spec: Defines the specifications for the PVC.

  - accessModes: Specifies the access mode for the volume (ReadWriteMany), allowing multiple pods to access the volume simultaneously.

  - storageClassName: Specifies the StorageClass (rook-cephfs) to use for provisioning the persistent storage.

  - resources.requests.storage: 10Gi: Requests a storage capacity of 10 GiB for this PVC.

So, in summary, this PVC is requesting 10 gigabytes of storage with the ReadWriteMany access mode from the rook-cephfs StorageClass. The storage is expected to be dynamically provisioned by Rook CephFS, allowing multiple pods to mount and access the volume simultaneously.
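Since only the name and the requested size usually vary between such claims, the manifest can also be generated rather than edited by hand. A sketch, assuming a hypothetical helper named render_cephfs_pvc; everything it prints mirrors the manifest above.

```shell
# Print a ReadWriteMany PVC manifest for the rook-cephfs StorageClass.
# Usage: render_cephfs_pvc <name> [size]   (size defaults to 10Gi)
render_cephfs_pvc() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: rook-cephfs
  resources:
    requests:
      storage: ${2:-10Gi}
EOF
}

# Example usage:
#   render_cephfs_pvc cephfs-pvc 10Gi | kubectl create -f -
```

Piping the output to kubectl create -f - avoids keeping one file per claim, at the cost of not having the manifest under version control.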

When you have the PVC manifest file ready, create it using the command kubectl create -f <path-to.yaml>.

kubectl create -f demo-cephfs-pvc.yaml

You can get the status of the PVC;

kubectl get pvc

Sample output;

NAME         STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
cephfs-pvc   Pending                                      rook-cephfs    6s

As you can see, the STATUS is Pending.

Status of a PVC can be:

- Pending: The PVC is not yet bound to a Persistent Volume (PV). This status occurs while the storage system is still provisioning the requested volume, and it persists if provisioning keeps failing, for example because the requested resources are not available or there are issues with the storage class.

- Bound: The PVC has been successfully provisioned, and it is now bound to a PV. The PV is the actual storage resource in the cluster.

- Lost: The PV that the PVC was bound to no longer exists, often due to a failure in the underlying storage system or a manual deletion of the PV.

The PV behind a claim has phases of its own, two of which you may see after a PVC is deleted:

- Released: The PVC that used the PV has been deleted, but the storage resource has not yet been reclaimed. The PV is then retained or deleted depending on the reclaim policy defined.

- Failed: The automatic reclamation of the PV failed.

If you describe the PVC (kubectl describe pvc cephfs-pvc), you should see that it is being provisioned;

Name: cephfs-pvc

Namespace: default

StorageClass: rook-cephfs

Status: Pending

Volume:

Labels:

Annotations: volume.beta.kubernetes.io/storage-provisioner: rook-ceph.cephfs.csi.ceph.com

volume.kubernetes.io/storage-provisioner: rook-ceph.cephfs.csi.ceph.com

Finalizers: [kubernetes.io/pvc-protection]

Capacity:

Access Modes:

VolumeMode: Filesystem

Used By:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Provisioning 47s rook-ceph.cephfs.csi.ceph.com_csi-cephfsplugin-provisioner-fd76b9895-888tm_8ce04e2b-2580-4f72-a856-eaca75629dfa External provisioner is provisioning volume for claim "default/cephfs-pvc"

Normal ExternalProvisioning 2s (x5 over 47s) persistentvolume-controller Waiting for a volume to be created either by the external provisioner 'rook-ceph.cephfs.csi.ceph.com' or manually by the system administrator. If volume creation is delayed, please verify that the provisioner is running and correctly registered.

If you check the status after a short while, it should be bound now!

kubectl get pvc

NAME         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
cephfs-pvc   Bound    pvc-bafde748-ba57-4d50-abd3-45ba07851f1c   10Gi       RWX            rook-cephfs    2m22s
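Instead of re-running the command by hand, the wait for the Bound status can be scripted. The sketch below polls the claim's status.phase field; the function name wait_for_pvc_bound, the 2-second polling interval and the 120-second default timeout are assumptions made for illustration.

```shell
# Poll a PVC until its phase is Bound, or give up after a timeout.
# Usage: wait_for_pvc_bound <pvc-name> [timeout-seconds]
wait_for_pvc_bound() {
  pvc="$1"
  timeout="${2:-120}"
  elapsed=0
  while [ "$elapsed" -lt "$timeout" ]; do
    # status.phase is Pending, Bound or Lost for a PVC.
    phase="$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}')"
    if [ "$phase" = "Bound" ]; then
      return 0
    fi
    sleep 2
    elapsed=$((elapsed + 2))
  done
  return 1
}

# Example usage:
#   wait_for_pvc_bound cephfs-pvc 180 || kubectl describe pvc cephfs-pvc
```

If the timeout expires, describing the claim as in the usage line shows the provisioner events, which is usually the quickest way to see why the volume was not created.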

To get more details of a PVC;

kubectl describe pvc <name-of-the-pvc>

E.g;

kubectl describe pvc cephfs-pvc

Sample output;

Name: cephfs-pvc

Namespace: default

StorageClass: rook-cephfs

Status: Bound

Volume: pvc-bafde748-ba57-4d50-abd3-45ba07851f1c

Labels:

Annotations: pv.kubernetes.io/bind-completed: yes

pv.kubernetes.io/bound-by-controller: yes

volume.beta.kubernetes.io/storage-provisioner: rook-ceph.cephfs.csi.ceph.com

volume.kubernetes.io/storage-provisioner: rook-ceph.cephfs.csi.ceph.com

Finalizers: [kubernetes.io/pvc-protection]

Capacity: 10Gi

Access Modes: RWX

VolumeMode: Filesystem

Used By:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Provisioning 2m56s rook-ceph.cephfs.csi.ceph.com_csi-cephfsplugin-provisioner-fd76b9895-888tm_8ce04e2b-2580-4f72-a856-eaca75629dfa External provisioner is provisioning volume for claim "default/cephfs-pvc"

Normal ExternalProvisioning 41s (x11 over 2m56s) persistentvolume-controller Waiting for a volume to be created either by the external provisioner 'rook-ceph.cephfs.csi.ceph.com' or manually by the system administrator. If volume creation is delayed, please verify that the provisioner is running and correctly registered.

Normal Provisioning 35s rook-ceph.cephfs.csi.ceph.com_csi-cephfsplugin-provisioner-fd76b9895-888tm_cfaa8394-7477-4457-a2ab-410afcc95b05 External provisioner is provisioning volume for claim "default/cephfs-pvc"

Normal ProvisioningSucceeded 35s rook-ceph.cephfs.csi.ceph.com_csi-cephfsplugin-provisioner-fd76b9895-888tm_cfaa8394-7477-4457-a2ab-410afcc95b05 Successfully provisioned volume pvc-bafde748-ba57-4d50-abd3-45ba07851f1c

StorageClass acts as a template for creating PVs. It defines the type of storage (local disk, network storage, etc.), provisioner to use (CSI drivers, etc.), and other configuration parameters for a PV.

So, if you check, you should now have a PV created for the PVC created above (the StorageClass is rook-cephfs);

kubectl get pv

NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                      STORAGECLASS      REASON   AGE
pvc-9d962a97-808f-4ca6-8f6d-576e9b302884   20Gi       RWO            Delete           Bound    rook-ceph/wp-pv-claim      rook-ceph-block            4d7h
pvc-9daf6557-5a94-4878-9b5b-9c35342abd38   20Gi       RWO            Delete           Bound    rook-ceph/mysql-pv-claim   rook-ceph-block            4d7h
pvc-bafde748-ba57-4d50-abd3-45ba07851f1c   10Gi       RWX            Delete           Bound    default/cephfs-pvc         rook-cephfs                84s

As you may have noticed, we didn’t manually create a PersistentVolume (PV). This is because one of the primary purposes of StorageClasses is to enable dynamic provisioning of Persistent Volumes. When a PVC (Persistent Volume Claim) is created without specifying a particular PV, the provisioner named in the StorageClass creates a matching PV dynamically, using the parameters the StorageClass defines.

Create Pods to Use CephFS Persistent Volume

We have created a PVC that requests a 10Gi filesystem volume.

To demonstrate how this shared filesystem volume can be mounted and used by multiple Pods (remember the access mode is RWX), let’s create two Pods of an Nginx web server with access to the same configuration files stored under the shared filesystem.

vim nginx-pv-pods.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-demo-app1
spec:
  volumes:
    - name: cephfs-volume
      persistentVolumeClaim:
        claimName: cephfs-pvc
  containers:
    - name: nginx-demo-app1
      image: nginx
      ports:
        - containerPort: 80
          name: "http-server"
      volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: cephfs-volume
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-demo-app2
spec:
  volumes:
    - name: cephfs-volume
      persistentVolumeClaim:
        claimName: cephfs-pvc
  containers:
    - name: nginx-demo-app2
      image: nginx
      ports:
        - containerPort: 80
          name: "http-server"
      volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: cephfs-volume

Where:

- apiVersion and kind: Indicate the API version (v1) and the resource type (Pod).

- metadata: Contains metadata for the Pod, including the name (nginx-demo-app1).

- spec: Specifies the specifications for the Pod.

- volumes: Describes the volumes to be mounted. In this case, it defines a volume named cephfs-volume that uses the cephfs-pvc PVC.

  - name: cephfs-volume: The name of the volume.

  - persistentVolumeClaim: Specifies that the volume is backed by a PersistentVolumeClaim.

  - claimName: cephfs-pvc: Specifies the name of the PersistentVolumeClaim (cephfs-pvc) to use.

- containers: Describes the containers within the Pod.

  - name: nginx-demo-app1: The name of the container.

  - image: nginx: Specifies the container image to use (NGINX in this case).

  - ports: Specifies the container’s port configuration.

  - containerPort: 80: Exposes port 80 for the NGINX web server.

  - name: "http-server": Provides a name for the exposed port.

- volumeMounts: Describes the volume mounts for the container.

  - mountPath: "/usr/share/nginx/html": Specifies the path within the container where the CephFS volume will be mounted.

  - name: cephfs-volume: Refers to the previously defined volume (cephfs-volume).

Create the Pods;

kubectl create -f nginx-pv-pods.yaml

Once created, check the running pods;

kubectl get pods

NAME              READY   STATUS    RESTARTS   AGE
nginx-demo-app1   1/1     Running   0          21s
nginx-demo-app2   1/1     Running   0          21s

You can show the details of a Pod;

kubectl describe pod nginx-demo-app1

Name: nginx-demo-app1

Namespace: default

Priority: 0

Service Account: default

Node: worker01/192.168.122.11

Start Time: Wed, 20 Dec 2023 20:42:52 +0000

Labels:

Annotations: cni.projectcalico.org/containerID: f0e1cab7f5ad236b39403296cf4c7f4b66a1e5b795e2a5be878f0ae0cf176900

cni.projectcalico.org/podIP: 10.100.5.35/32

cni.projectcalico.org/podIPs: 10.100.5.35/32

Status: Running

IP: 10.100.5.35

IPs:

IP: 10.100.5.35

Containers:

nginx-demo-app1:

Container ID: containerd://619fb7bec4512ef62b4c3d72a3e89f787d3974456cf3a84dbc4db99f0347b264

Image: nginx

Image ID: docker.io/library/nginx@sha256:5040a25cc87f100efc43c5c8c2f504c76035441344345c86d435c693758874b7

Port: 80/TCP

Host Port: 0/TCP

State: Running

Started: Wed, 20 Dec 2023 20:43:03 +0000

Ready: True

Restart Count: 0

Environment:

Mounts:

/usr/share/nginx/html from cephfs-volume (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-44nj7 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

cephfs-volume:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: cephfs-pvc

ReadOnly: false

kube-api-access-44nj7:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional:

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 102s default-scheduler Successfully assigned default/nginx-demo-app1 to worker01

Normal SuccessfulAttachVolume 101s attachdetach-controller AttachVolume.Attach succeeded for volume "pvc-bafde748-ba57-4d50-abd3-45ba07851f1c"

Normal Pulling 91s kubelet Pulling image "nginx"

Normal Pulled 91s kubelet Successfully pulled image "nginx" in 825ms (825ms including waiting)

Normal Created 91s kubelet Created container nginx-demo-app1

Normal Started 91s kubelet Started container nginx-demo-app1

The Pods are now running.

So, drop into the shell of one of them, say nginx-demo-app1;

kubectl exec -it nginx-demo-app1 -- bash

Check mounted filesystems;

root@nginx-demo-app1:/# df -hT -t ceph
Filesystem Type Size Used Avail Use% Mounted on
10.98.191.150:6789,10.110.254.175:6789,10.110.73.98:6789:/volumes/csi/csi-vol-8d10c28b-42b0-4fba-8287-3e1fbb2887fd/ce77007d-12d4-40a6-8136-81ca642d79dd ceph 10G 0 10G 0% /usr/share/nginx/html

Check the content of the mount point, /usr/share/nginx/html. Remember this is the default root directory for Nginx.

root@nginx-demo-app1:/# ls usr/share/nginx/html/
root@nginx-demo-app1:/#

Create a sample web page;

echo "hello form k8s CephFS test" > usr/share/nginx/html/index.html

Confirm;

root@nginx-demo-app1:/# curl -k http://localhost
hello form k8s CephFS test
root@nginx-demo-app1:/#

Now, log in to the second pod and check the mounted filesystems, as well as whether the file created above is available;

kubectl exec -it nginx-demo-app2 -- bash

root@nginx-demo-app2:/# df -hT -t ceph
Filesystem Type Size Used Avail Use% Mounted on
10.98.191.150:6789,10.110.254.175:6789,10.110.73.98:6789:/volumes/csi/csi-vol-8d10c28b-42b0-4fba-8287-3e1fbb2887fd/ce77007d-12d4-40a6-8136-81ca642d79dd ceph 10G 0 10G 0% /usr/share/nginx/html

root@nginx-demo-app2:/# ls usr/share/nginx/html/
index.html

root@nginx-demo-app2:/# cat usr/share/nginx/html/index.html
hello form k8s CephFS test

root@nginx-demo-app2:/# curl -k http://localhost
hello form k8s CephFS test

And that confirms that you have successfully configured a Pod to use storage from a PersistentVolumeClaim.

You can attach multiple apps to your PVC for shared storage!
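The manual check above, writing a file in one Pod and reading it from the other, can be repeated non-interactively. This is a sketch only: the function name verify_shared_file and the file name in the usage line are hypothetical, and it assumes both Pods are in the current namespace and have sh and cat available.

```shell
# Write a unique token through one Pod and read it back through another.
# Succeeds only if the reader sees exactly what the writer wrote.
# Usage: verify_shared_file <writer-pod> <reader-pod> <path-in-container>
verify_shared_file() {
  writer="$1"
  reader="$2"
  path="$3"
  token="cephfs-check-$$"   # $$ (the shell PID) makes the token unique per run
  kubectl exec "$writer" -- sh -c "echo '$token' > '$path'" || return 1
  seen="$(kubectl exec "$reader" -- cat "$path")" || return 1
  [ "$seen" = "$token" ]
}

# Example usage:
#   verify_shared_file nginx-demo-app1 nginx-demo-app2 \
#     /usr/share/nginx/html/cephfs-check.txt && echo "shared volume works"
```

Using a fresh token on every run avoids a false positive from a file left behind by an earlier test.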

Clean up!

If you want to clean up your shared storage filesystem;

- Delete the pods

- Delete the PVCs

- Delete the PV, if it was not removed automatically

Depending on your reclaim policy, the volume will be retained or deleted along with its data.
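The clean-up steps can be sketched as follows, using the Pod and PVC names from this guide. The function name cleanup_cephfs_demo is hypothetical. With the Delete reclaim policy set in the rook-cephfs StorageClass, the PV is expected to disappear on its own once the claim is gone, so the last command only lists what remains.

```shell
# Delete the demo Pods first, then the claim they were using, then list the
# remaining PVs. Each step runs only if the previous one succeeded.
cleanup_cephfs_demo() {
  kubectl delete pod nginx-demo-app1 nginx-demo-app2 --ignore-not-found &&
    kubectl delete pvc cephfs-pvc --ignore-not-found &&
    kubectl get pv
}

# Example usage:
#   cleanup_cephfs_demo
```

Deleting the Pods before the claim matters because a PVC that is still in use by a Pod is protected from removal until the Pod is gone.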

That concludes our guide on how to configure shared filesystem for Kubernetes on Rook Ceph Storage.

Reference

Read more on Filesystem Storage Overview.