In this guide, you will learn how to deploy multinode OpenStack using Kolla-Ansible. We will be running OpenStack on Ubuntu 22.04 servers.

Kolla's mission is to provide production-ready containers and deployment tools for operating OpenStack clouds. To that end, the project provides Docker containers and Ansible playbooks. Kolla-Ansible allows operators with minimal experience to deploy multinode OpenStack quickly and, as their experience grows, to modify the OpenStack configuration to suit their exact requirements.

Deploying Multi-node OpenStack using Kolla-Ansible

Deployment Architecture

In this tutorial, we will be deploying a three-node OpenStack with separate controller, storage and compute nodes. Each node plays a specific role, as follows:

Controller Node:

The controller node is the central point of control and coordination in the OpenStack environment. It hosts various services responsible for managing and controlling other components. Some of the key services running on the controller node include:

- Identity Service (Keystone): Responsible for authentication and authorization of users and services within OpenStack.

- Image Service (Glance): Stores and manages virtual machine images used to create instances (VMs).

- Dashboard Service (Horizon): Provides a web-based graphical user interface for managing and monitoring the cloud resources.

- Networking Service (Neutron): Handles the networking aspects, including the creation and management of virtual networks, routers, and floating IPs.

- Compute Service (Nova API): Handles user requests to create, start, stop, and manage instances (VMs).

- Orchestration Service (Heat): Allows users to define and manage cloud applications using templates (e.g. YAML files) for automatic deployment.

- Placement Service (Placement API): Tracks and manages resource allocations on the compute nodes.

Storage Node:

The storage node can be configured to provide:

- Cinder Volume Service:

  - Block Storage: Its primary role is to host and manage Cinder volumes, offering block-level storage to Nova virtual machines, Ironic bare metal hosts, containers, etc.

  - The default OpenStack Block Storage service implementation is an iSCSI solution that uses Logical Volume Manager (LVM) for Linux.

- Glance Image Service:

  - Glance Service: Hosts and serves images to compute nodes, facilitating instance launches.

- Swift Object Storage Service:

  - Object Storage: Can be configured to run the Swift service, providing scalable and redundant object storage.

In this tutorial, we will use our storage node to provide shared storage via an NFS share for Glance images, and Cinder volumes for block storage.

Follow the guide below to learn how to set up NFS shares on Linux:

How to install and setup NFS server shares on Linux

On the storage node:

showmount -e

Export list for storage01:

/mnt/glance *

Compute Node:

The compute node is where the instances (VMs) are actually deployed and run. It is responsible for hosting the Nova Compute service, which interacts with the underlying hypervisor (e.g., KVM, VMware, Hyper-V) to manage the lifecycle of instances. The compute node handles instance creation, termination, and resource management, including CPU, memory, and network resources. Instances can be migrated between compute nodes for load balancing or maintenance purposes.

Please note that this is just one of the many ways of deploying OpenStack. You can plan your own architecture to meet your requirements!

System Requirements

Below are the minimum requirements we have for our OpenStack nodes:

- 2 (or more) network interfaces.

- At least 8 GB of main memory

- At least 40 GB of disk space

Below are our deployment system specifics:

| Node/Hostname | Management/Floating IP | Disk | RAM | CPU |
|---|---|---|---|---|
| controller01 (also acts as the Kolla-Ansible deployment node) | Mgt: 192.168.200.100/24, Floating: 10.100.0.100/24 | 100G | 8G | 2 cores |
| compute01 | Mgt: 192.168.200.120/24, Floating: 10.100.0.110/24 | 100G | 8G | 2 cores |
| storage01 (provides the NFS share for image storage and Cinder block storage) | 192.168.200.110/24 | 250G | 8G | 2 cores |

You can allocate more resources if you have them; the more resources the nodes have, the better the stack performs.

Network Configurations on the Nodes

Below are the network configurations on the nodes;

Controller Node;

cat /etc/netplan/00-installer-config.yaml

network:
  renderer: networkd
  ethernets:
    enp1s0:
      dhcp4: false
    enp2s0:
      dhcp4: false
    vethint: {}
    vethext: {}
  bridges:
    br0:
      interfaces: [enp1s0]
      dhcp4: false
      addresses: [192.168.200.200/24]
      routes:
        - to: 0.0.0.0/0
          via: 192.168.200.1
      nameservers:
        addresses:
          - 8.8.8.8
    br-ex:
      interfaces: [ enp2s0, vethint ]
      dhcp4: false
      addresses: [10.100.0.100/24]
  version: 2

As you can see, we have also created virtual ethernets (veth interfaces) whose configuration is shown below. Veth pairs are commonly used to connect network namespaces, which are isolated network environments within a Linux system.

cat /etc/systemd/network/10-veth.netdev

[NetDev]
Name=vethint
Kind=veth

[Peer]
Name=vethext

The two devices are connected to each other and exist independently of the bridged interface, br-ex.

- The [NetDev] section defines the first veth device, vethint. The Kind parameter specifies that this is a veth device.

- The [Peer] section defines the second veth device, vethext. This device is automatically paired with the vethint device.

Once you have created the .netdev systemd configuration, restart the systemd-networkd service.

sudo systemctl restart systemd-networkd

When systemd-networkd loads this configuration file, it creates the two veth devices and connects them together. Any traffic sent to one device is received by the other, so traffic can pass between whatever the two ends are attached to. In this setup, vethint is attached to the br-ex bridge, while vethext is given to Neutron as the external interface in the inventory file.
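If you need more than one veth pair, or want to preview the file before writing it, the .netdev content can be generated rather than typed by hand. The following is a minimal sketch; the function name `render_veth_netdev` is a hypothetical helper and not part of systemd or Kolla-Ansible. It only prints to standard output and changes nothing on the system.

```shell
#!/bin/sh
# Hypothetical helper: print a systemd-networkd .netdev definition
# for a veth pair. It writes nothing to disk on its own.
# Arguments: $1 = name of the first device, $2 = name of its peer.
render_veth_netdev() {
    printf '[NetDev]\nName=%s\nKind=veth\n\n[Peer]\nName=%s\n' "$1" "$2"
}
```

To reproduce the file used in this guide, you could run `render_veth_netdev vethint vethext | sudo tee /etc/systemd/network/10-veth.netdev` and then restart systemd-networkd.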

ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp1s0: mtu 1500 qdisc fq_codel master br0 state UP group default qlen 1000

link/ether 52:54:00:82:68:e4 brd ff:ff:ff:ff:ff:ff

3: enp7s0: mtu 1500 qdisc fq_codel master br-ex state UP group default qlen 1000

link/ether 52:54:00:54:81:bc brd ff:ff:ff:ff:ff:ff

4: br-ex: mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 46:98:06:c2:e7:21 brd ff:ff:ff:ff:ff:ff

inet 10.100.0.100/24 brd 10.100.0.255 scope global br-ex

valid_lft forever preferred_lft forever

inet6 fe80::4498:6ff:fec2:e721/64 scope link

valid_lft forever preferred_lft forever

5: br0: mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether aa:1f:79:9b:18:8b brd ff:ff:ff:ff:ff:ff

inet 192.168.200.200/24 brd 192.168.200.255 scope global br0

valid_lft forever preferred_lft forever

inet6 fe80::a81f:79ff:fe9b:188b/64 scope link

valid_lft forever preferred_lft forever

6: vethext@vethint: mtu 1500 qdisc noqueue master ovs-system state UP group default qlen 1000

link/ether 46:1d:4f:ba:dd:7f brd ff:ff:ff:ff:ff:ff

inet6 fe80::441d:4fff:feba:dd7f/64 scope link

valid_lft forever preferred_lft forever

7: vethint@vethext: mtu 1500 qdisc noqueue master br-ex state UP group default qlen 1000

link/ether d2:bd:a0:d3:2d:21 brd ff:ff:ff:ff:ff:ff

Compute Node

cat /etc/netplan/00-installer-config.yaml

network:
  renderer: networkd
  ethernets:
    enp1s0:
      dhcp4: false
      addresses: [192.168.200.202/24]
      routes:
        - to: 0.0.0.0/0
          via: 192.168.200.1
      nameservers:
        addresses:
          - 8.8.8.8
    enp2s0:
      dhcp4: false
      addresses: [10.100.0.110/24]
  version: 2

ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp1s0: mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:3a:88:51 brd ff:ff:ff:ff:ff:ff

inet 192.168.200.202/24 brd 192.168.200.255 scope global enp1s0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe3a:8851/64 scope link

valid_lft forever preferred_lft forever

3: enp2s0: mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:ea:cc:5d brd ff:ff:ff:ff:ff:ff

inet 10.100.0.110/24 brd 10.100.0.255 scope global enp2s0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:feea:cc5d/64 scope link

valid_lft forever preferred_lft forever

Storage Node

cat /etc/netplan/00-installer-config.yaml

network:
  ethernets:
    enp1s0:
      dhcp4: false
      addresses:
        - 192.168.200.201/24
      routes:
        - to: default
          via: 192.168.200.1
      nameservers:
        addresses:
          - 8.8.8.8
    enp10s0:
      dhcp4: false
  version: 2

ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp1s0: mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:78:91:f2 brd ff:ff:ff:ff:ff:ff

inet 192.168.200.201/24 brd 192.168.200.255 scope global enp1s0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe78:91f2/64 scope link

valid_lft forever preferred_lft forever

4: enp10s0: mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:e2:9b:b0 brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:fee2:9bb0/64 scope link

valid_lft forever preferred_lft forever

You can also update the hosts file on the controller node (or whichever node you will run Kolla-Ansible from) with entries for your nodes. Kolla-Ansible takes care of the hosts entries on the other nodes.

sudo vim /etc/hosts

127.0.0.1 localhost

192.168.200.200 controller01

192.168.200.202 compute01

192.168.200.201 storage01
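Before running Ansible, it can help to confirm that each node name maps to the address you expect. The sketch below looks a name up in a hosts-format file; `hosts_ip_for` is a hypothetical helper name, and the function only reads the file.

```shell
#!/bin/sh
# Hypothetical helper: print the IP address mapped to a hostname in a
# hosts-format file, and exit non-zero when the name is not present.
# Arguments: $1 = hostname, $2 = file (defaults to /etc/hosts).
hosts_ip_for() {
    awk -v name="$1" '
        /^[[:space:]]*#/ { next }   # skip comment lines
        {
            # field 1 is the address; every later field is a name or alias
            for (i = 2; i <= NF; i++)
                if ($i == name) { print $1; found = 1; exit }
        }
        END { exit !found }
    ' "${2:-/etc/hosts}"
}
```

For example, `for h in controller01 compute01 storage01; do hosts_ip_for "$h" || echo "missing: $h"; done` prints one address per node, or a "missing" line for any name that has no entry. Compare the result with the addresses shown in each node's `ip a` output.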

NOTE: We are running the installation as non root user with sudo privileges.

Install Required Packages on Deployment Node

Before you can proceed, there are a number of required packages that need to be installed on the node that will host Kolla-ansible. We are using the controller01 node as the Kolla-Ansible node.

Update and upgrade your system packages

sudo apt update

sudo apt upgrade

Reboot if necessary:

[[ -f /var/run/reboot-required ]] && sudo systemctl reboot -i

Install the required packages:

sudo apt install python3-dev python3-venv libffi-dev gcc libssl-dev git

Create Kolla-Ansible Deployment User Account

We already have a Kolla-ansible deployment user account (with passwordless sudo) created on all the nodes. A sample sudoers file entry for the user:

sudo cat /etc/sudoers.d/kifarunix

kifarunix ALL=NOPASSWD: ALL

You also need to generate SSH keys without a passphrase, to be used for logging into the compute and storage nodes from the controller node.

Generate the SSH keys on the controller node, or on whichever node runs Kolla-ansible.

Ensure you generate the keys as the non-root sudo user used for the deployment:

kifarunix@controller01:~$ whoami

kifarunix

ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/kifarunix/.ssh/id_rsa): ENTER

Enter passphrase (empty for no passphrase): ENTER

Enter same passphrase again: ENTER

Your identification has been saved in /home/kifarunix/.ssh/id_rsa

Your public key has been saved in /home/kifarunix/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:q+M37Axp1BJJV5cKa4HyS16vTUhE94N9T2A7R9uDdMg kifarunix@controller01

The key's randomart image is:

+---[RSA 3072]----+

| .oo.o oo+..|

| ...o+. =oEo+o|

| oo. +..+.+o+|

| oo= . o =.|

| oo=So .|

| .oo..o |

| +..+ |

| ..+= . |

| .o+o. |

+----[SHA256]-----+

Next, copy the keys to the other nodes;

for i in compute01 storage01; do ssh-copy-id kifarunix@$i; done

Create a Virtual Environment for Deploying Kolla-ansible

To avoid conflict between system packages and Kolla-ansible packages, it is recommended that Kolla-ansible be installed in a virtual environment.

You can create a virtual environment on the controller/deployment node by executing the commands below. Replace the path with your preferred virtual environment location.

mkdir ~/kolla-ansible

python3 -m venv ~/kolla-ansible

Next, activate your virtual environment;

source ~/kolla-ansible/bin/activate

Once you activate the Kolla-ansible virtual environment, your shell prompt should change. See my shell prompt;

(kolla-ansible) kifarunix@controller01:~$

Upgrade pip;

pip install -U pip

Install Ansible on Ubuntu 22.04

Install Ansible from within the virtual environment. If you ever leave the virtual environment, you can re-activate it by sourcing its activate script:

source $HOME/kolla-ansible/bin/activate

Next, install Ansible. Kolla requires at least Ansible 6 up to 7, as of this writing.

pip install 'ansible>=6,<8'

Create an Ansible configuration file in your home directory with the following tunables:

vim $HOME/ansible.cfg

[defaults]

host_key_checking=False

pipelining=True

forks=100

Save and exit the file.
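If you prefer to create the file without opening an editor, the same settings can be written with a here-document. This is a minimal sketch; `write_ansible_cfg` is a hypothetical helper name, and the default path simply mirrors the file created above. Note that Ansible looks for its configuration in the `ANSIBLE_CONFIG` environment variable, then `ansible.cfg` in the current directory, then `~/.ansible.cfg`, then `/etc/ansible/ansible.cfg`, so a file named `$HOME/ansible.cfg` is picked up when you run commands from your home directory.

```shell
#!/bin/sh
# Hypothetical helper: write the Ansible settings used in this guide.
# Argument: $1 = destination file (defaults to $HOME/ansible.cfg).
# An existing file at that path is overwritten.
write_ansible_cfg() {
    cat > "${1:-$HOME/ansible.cfg}" <<'EOF'
[defaults]
host_key_checking=False
pipelining=True
forks=100
EOF
}
```

Calling `write_ansible_cfg` with no argument recreates the file shown above; passing a path writes it elsewhere, which is useful if you would rather point `ANSIBLE_CONFIG` at it.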

Install Kolla-ansible on Ubuntu 22.04

Install Kolla-ansible on Ubuntu 22.04 using pip from the virtual environment above;

source $HOME/kolla-ansible/bin/activate

The command below installs the current stable release of Kolla-ansible as of this writing.

pip install git+https://opendev.org/openstack/kolla-ansible@stable/2023.1

Configure Kolla-ansible for Multinode OpenStack Deployment

Prepare Kolla Ansible Configuration Files

Next, create Kolla configuration directory;

sudo mkdir /etc/kolla

Change the ownership of the Kolla configuration directory to the user with which you activated the Kolla-ansible deployment virtual environment.

sudo chown $USER:$USER /etc/kolla

Copy the main Kolla configuration file, globals.yml and the OpenStack services passwords file, passwords.yml into the Kolla configuration directory above from the virtual environment.

cp $HOME/kolla-ansible/share/kolla-ansible/etc_examples/kolla/* /etc/kolla/

Confirm:

ls /etc/kolla

globals.yml passwords.yml

Define Kolla-Ansible Global Deployment Options

Open the globals.yml configuration file and define the Multinode Kolla global deployment options;

vim /etc/kolla/globals.yml

Below are the basic options that we enabled for our multinode OpenStack deployment.

grep -vE '^$|^#' /etc/kolla/globals.yml

---

workaround_ansible_issue_8743: yes

config_strategy: "COPY_ALWAYS"

kolla_base_distro: "ubuntu"

openstack_release: "2023.1"

kolla_internal_vip_address: "192.168.200.254"

kolla_container_engine: docker

docker_configure_for_zun: "yes"

containerd_configure_for_zun: "yes"

docker_apt_package_pin: "5:20.*"

network_address_family: "ipv4"

neutron_plugin_agent: "openvswitch"

enable_openstack_core: "yes"

enable_glance: "{{ enable_openstack_core | bool }}"

enable_haproxy: "yes"

enable_keepalived: "{{ enable_haproxy | bool }}"

enable_keystone: "{{ enable_openstack_core | bool }}"

enable_mariadb: "yes"

enable_memcached: "yes"

enable_neutron: "{{ enable_openstack_core | bool }}"

enable_nova: "{{ enable_openstack_core | bool }}"

enable_aodh: "yes"

enable_ceilometer: "yes"

enable_cinder: "yes"

enable_cinder_backend_lvm: "yes"

enable_etcd: "yes"

enable_gnocchi: "yes"

enable_gnocchi_statsd: "yes"

enable_grafana: "yes"

enable_heat: "{{ enable_openstack_core | bool }}"

enable_horizon: "{{ enable_openstack_core | bool }}"

enable_horizon_zun: "{{ enable_zun | bool }}"

enable_kuryr: "yes"

enable_prometheus: "yes"

enable_zun: "yes"

glance_backend_file: "yes"

glance_file_datadir_volume: "/mnt/glance"

cinder_volume_group: "cinder-volumes"
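When checking a single setting, it can be quicker to read one key than to scan the whole listing. The sketch below assumes the flat `key: value` layout shown above, with no trailing comments on the value line; `globals_get` is a hypothetical helper name and is not a Kolla-Ansible command.

```shell
#!/bin/sh
# Hypothetical helper: print the value of a top-level key in a flat
# YAML file such as globals.yml.
# Arguments: $1 = key, $2 = file (defaults to /etc/kolla/globals.yml).
# Commented-out lines never match because they start with '#'.
globals_get() {
    sed -n "s/^$1:[[:space:]]*//p" "${2:-/etc/kolla/globals.yml}" |
        sed 's/^"\(.*\)"$/\1/' |   # strip one pair of surrounding quotes
        head -n 1                  # keep the first match only
}
```

For example, `globals_get kolla_internal_vip_address` prints the VIP address configured for the deployment, and an unset key prints nothing.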

Note that we store Glance images on an NFS share mounted under /mnt/glance on the controller node. The NFS share is provided by the storage node.

On the controller node, this is how the NFS share is mounted:

df -hT -P /mnt/glance

Filesystem Type Size Used Avail Use% Mounted on

storage01:/mnt/glance nfs4 100G 746M 100G 1% /mnt/glance
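Because Glance writes images into this directory, it is worth confirming that the path really is an NFS mount and not an empty local directory before deploying. The sketch below reads a mounts table in the `/proc/mounts` format (device, mount point, filesystem type, options); `is_nfs_mount` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed when the given mount point is listed
# with an NFS filesystem type (nfs or nfs4).
# Arguments: $1 = mount point, $2 = mounts table (defaults to /proc/mounts).
is_nfs_mount() {
    awk -v mp="$1" '
        $2 == mp && $3 ~ /^nfs4?$/ { found = 1 }
        END { exit !found }
    ' "${2:-/proc/mounts}"
}
```

On the controller node, `is_nfs_mount /mnt/glance || echo "/mnt/glance is not an NFS mount"` stays silent when the share is mounted and prints a warning otherwise.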

Refer to the Kolla-ansible documentation to learn more about the global options used above. The configuration file is also heavily commented; read the comments on each option to learn what it does.

Configure Multinode Deployment Inventory

Since we are deploying multinode OpenStack, copy the Kolla-ansible multinode Ansible inventory file to the current working directory. This file contains all the information needed to determine which services run on which nodes.

cp $HOME/kolla-ansible/share/kolla-ansible/ansible/inventory/multinode .

This is how our multinode inventory is configured.

cat multinode

# These initial groups are the only groups required to be modified. The

# additional groups are for more control of the environment.

[control]

controller01 ansible_connection=local neutron_external_interface=vethext

# The above can also be specified as follows:

#control[01:03] ansible_user=kolla

# The network nodes are where your l3-agent and loadbalancers will run

# This can be the same as a host in the control group

[network]

controller01 ansible_connection=local neutron_external_interface=vethext network_interface=br0

[compute]

compute01 neutron_external_interface=enp2s0 network_interface=enp1s0

[monitoring]

controller01 ansible_connection=local neutron_external_interface=vethext

# When compute nodes and control nodes use different interfaces,

# you need to comment out "api_interface" and other interfaces from the globals.yml

# and specify like below:

#compute01 neutron_external_interface=eth0 api_interface=em1 tunnel_interface=em1

[storage]

storage01 neutron_external_interface=enp10s0 network_interface=enp1s0

[deployment]

localhost ansible_connection=local

[baremetal:children]

control

network

compute

storage

monitoring

[tls-backend:children]

control

# You can explicitly specify which hosts run each project by updating the

# groups in the sections below. Common services are grouped together.

[common:children]

control

network

compute

storage

monitoring

[collectd:children]

compute

[grafana:children]

monitoring

[etcd:children]

control

[influxdb:children]

monitoring

[prometheus:children]

monitoring

[kafka:children]

control

[telegraf:children]

compute

control

monitoring

network

storage

[hacluster:children]

control

[hacluster-remote:children]

compute

[loadbalancer:children]

network

[mariadb:children]

control

[rabbitmq:children]

control

[outward-rabbitmq:children]

control

[monasca-agent:children]

compute

control

monitoring

network

storage

[monasca:children]

monitoring

[storm:children]

monitoring

[keystone:children]

control

[glance:children]

control

[nova:children]

control

[neutron:children]

network

[openvswitch:children]

network

compute

manila-share

[cinder:children]

control

[cloudkitty:children]

control

[freezer:children]

control

[memcached:children]

control

[horizon:children]

control

[swift:children]

control

[barbican:children]

control

[heat:children]

control

[murano:children]

control

[solum:children]

control

[ironic:children]

control

[magnum:children]

control

[sahara:children]

control

[mistral:children]

control

[manila:children]

control

[ceilometer:children]

control

[aodh:children]

control

[cyborg:children]

control

compute

[gnocchi:children]

control

[tacker:children]

control

[trove:children]

control

[senlin:children]

control

[vitrage:children]

control

[watcher:children]

control

[octavia:children]

control

[designate:children]

control

[placement:children]

control

[bifrost:children]

deployment

[zookeeper:children]

control

[zun:children]

control

[skyline:children]

control

[redis:children]

control

[blazar:children]

control

[venus:children]

monitoring

# Additional control implemented here. These groups allow you to control which

# services run on which hosts at a per-service level.

#

# Word of caution: Some services are required to run on the same host to

# function appropriately. For example, neutron-metadata-agent must run on the

# same host as the l3-agent and (depending on configuration) the dhcp-agent.

# Common

[cron:children]

common

[fluentd:children]

common

[kolla-logs:children]

common

[kolla-toolbox:children]

common

[opensearch:children]

control

# Opensearch dashboards

[opensearch-dashboards:children]

opensearch

# Glance

[glance-api:children]

glance

# Nova

[nova-api:children]

nova

[nova-conductor:children]

nova

[nova-super-conductor:children]

nova

[nova-novncproxy:children]

nova

[nova-scheduler:children]

nova

[nova-spicehtml5proxy:children]

nova

[nova-compute-ironic:children]

nova

[nova-serialproxy:children]

nova

# Neutron

[neutron-server:children]

control

[neutron-dhcp-agent:children]

neutron

[neutron-l3-agent:children]

neutron

[neutron-metadata-agent:children]

neutron

[neutron-ovn-metadata-agent:children]

compute

network

[neutron-bgp-dragent:children]

neutron

[neutron-infoblox-ipam-agent:children]

neutron

[neutron-metering-agent:children]

neutron

[ironic-neutron-agent:children]

neutron

[neutron-ovn-agent:children]

compute

network

# Cinder

[cinder-api:children]

cinder

[cinder-backup:children]

storage

[cinder-scheduler:children]

cinder

[cinder-volume:children]

storage

# Cloudkitty

[cloudkitty-api:children]

cloudkitty

[cloudkitty-processor:children]

cloudkitty

# Freezer

[freezer-api:children]

freezer

[freezer-scheduler:children]

freezer

# iSCSI

[iscsid:children]

compute

storage

ironic

[tgtd:children]

storage

# Manila

[manila-api:children]

manila

[manila-scheduler:children]

manila

[manila-share:children]

network

[manila-data:children]

manila

# Swift

[swift-proxy-server:children]

swift

[swift-account-server:children]

storage

[swift-container-server:children]

storage

[swift-object-server:children]

storage

# Barbican

[barbican-api:children]

barbican

[barbican-keystone-listener:children]

barbican

[barbican-worker:children]

barbican

# Heat

[heat-api:children]

heat

[heat-api-cfn:children]

heat

[heat-engine:children]

heat

# Murano

[murano-api:children]

murano

[murano-engine:children]

murano

# Monasca

[monasca-agent-collector:children]

monasca-agent

[monasca-agent-forwarder:children]

monasca-agent

[monasca-agent-statsd:children]

monasca-agent

[monasca-api:children]

monasca

[monasca-log-persister:children]

monasca

[monasca-log-metrics:children]

monasca

[monasca-thresh:children]

monasca

[monasca-notification:children]

monasca

[monasca-persister:children]

monasca

# Storm

[storm-worker:children]

storm

[storm-nimbus:children]

storm

# Ironic

[ironic-api:children]

ironic

[ironic-conductor:children]

ironic

[ironic-inspector:children]

ironic

[ironic-tftp:children]

ironic

[ironic-http:children]

ironic

# Magnum

[magnum-api:children]

magnum

[magnum-conductor:children]

magnum

# Sahara

[sahara-api:children]

sahara

[sahara-engine:children]

sahara

# Solum

[solum-api:children]

solum

[solum-worker:children]

solum

[solum-deployer:children]

solum

[solum-conductor:children]

solum

[solum-application-deployment:children]

solum

[solum-image-builder:children]

solum

# Mistral

[mistral-api:children]

mistral

[mistral-executor:children]

mistral

[mistral-engine:children]

mistral

[mistral-event-engine:children]

mistral

# Ceilometer

[ceilometer-central:children]

ceilometer

[ceilometer-notification:children]

ceilometer

[ceilometer-compute:children]

compute

[ceilometer-ipmi:children]

compute

# Aodh

[aodh-api:children]

aodh

[aodh-evaluator:children]

aodh

[aodh-listener:children]

aodh

[aodh-notifier:children]

aodh

# Cyborg

[cyborg-api:children]

cyborg

[cyborg-agent:children]

compute

[cyborg-conductor:children]

cyborg

# Gnocchi

[gnocchi-api:children]

gnocchi

[gnocchi-statsd:children]

gnocchi

[gnocchi-metricd:children]

gnocchi

# Trove

[trove-api:children]

trove

[trove-conductor:children]

trove

[trove-taskmanager:children]

trove

# Multipathd

[multipathd:children]

compute

storage

# Watcher

[watcher-api:children]

watcher

[watcher-engine:children]

watcher

[watcher-applier:children]

watcher

# Senlin

[senlin-api:children]

senlin

[senlin-conductor:children]

senlin

[senlin-engine:children]

senlin

[senlin-health-manager:children]

senlin

# Octavia

[octavia-api:children]

octavia

[octavia-driver-agent:children]

octavia

[octavia-health-manager:children]

octavia

[octavia-housekeeping:children]

octavia

[octavia-worker:children]

octavia

# Designate

[designate-api:children]

designate

[designate-central:children]

designate

[designate-producer:children]

designate

[designate-mdns:children]

network

[designate-worker:children]

designate

[designate-sink:children]

designate

[designate-backend-bind9:children]

designate

# Placement

[placement-api:children]

placement

# Zun

[zun-api:children]

zun

[zun-wsproxy:children]

zun

[zun-compute:children]

compute

[zun-cni-daemon:children]

compute

# Skyline

[skyline-apiserver:children]

skyline

[skyline-console:children]

skyline

# Tacker

[tacker-server:children]

tacker

[tacker-conductor:children]

tacker

# Vitrage

[vitrage-api:children]

vitrage

[vitrage-notifier:children]

vitrage

[vitrage-graph:children]

vitrage

[vitrage-ml:children]

vitrage

[vitrage-persistor:children]

vitrage

# Blazar

[blazar-api:children]

blazar

[blazar-manager:children]

blazar

# Prometheus

[prometheus-node-exporter:children]

monitoring

control

compute

network

storage

[prometheus-mysqld-exporter:children]

mariadb

[prometheus-haproxy-exporter:children]

loadbalancer

[prometheus-memcached-exporter:children]

memcached

[prometheus-cadvisor:children]

monitoring

control

compute

network

storage

[prometheus-alertmanager:children]

monitoring

[prometheus-openstack-exporter:children]

monitoring

[prometheus-elasticsearch-exporter:children]

opensearch

[prometheus-blackbox-exporter:children]

monitoring

[prometheus-libvirt-exporter:children]

compute

[prometheus-msteams:children]

prometheus-alertmanager

[masakari-api:children]

control

[masakari-engine:children]

control

[masakari-hostmonitor:children]

control

[masakari-instancemonitor:children]

compute

[ovn-controller:children]

ovn-controller-compute

ovn-controller-network

[ovn-controller-compute:children]

compute

[ovn-controller-network:children]

network

[ovn-database:children]

control

[ovn-northd:children]

ovn-database

[ovn-nb-db:children]

ovn-database

[ovn-sb-db:children]

ovn-database

[venus-api:children]

venus

[venus-manager:children]

venus
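With an inventory this long, it is easy to lose track of which hosts ended up in the top-level groups you edited. The sketch below lists the hosts declared directly under one group of an INI inventory; `inventory_group_hosts` is a hypothetical helper name, and it deliberately does not expand `[group:children]` sections.

```shell
#!/bin/sh
# Hypothetical helper: list the host names declared directly under one
# group of an INI-style Ansible inventory.
# Arguments: $1 = group name, $2 = inventory file (defaults to ./multinode).
inventory_group_hosts() {
    awk -v group="[$1]" '
        /^\[/ { in_group = ($1 == group); next }   # a section header starts
        in_group && NF && $1 !~ /^#/ { print $1 }  # first field is the host
    ' "${2:-multinode}"
}
```

Running `for g in control network compute monitoring storage; do echo "$g: $(inventory_group_hosts "$g")"; done` from the directory that holds the inventory prints each of the five groups edited above along with its hosts.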

On the storage node, we also created an LVM volume group called cinder-volumes for block storage.

See the command output below, taken on the storage node:

sudo vgs

VG #PV #LV #SN Attr VSize VFree

cinder-volumes 1 0 0 wz--n- <100.00g <100.00g

glance 1 1 0 wz--n- <100.00g 0

ubuntu-vg 1 1 0 wz--n- <101.00g 0

Note that if your nodes use different interface names for the management and external networks, you must define the respective interfaces for each node, as done in the inventory file above.

Generate Kolla Passwords

Kolla's passwords.yml configuration file stores the passwords for the various OpenStack services. You can generate them automatically using the kolla-genpwd command, which Kolla-ansible installs in your virtual environment.

Ensure that your virtual environment is activated

source $HOME/kolla-ansible/bin/activate

Next, generate the passwords;

kolla-genpwd

All generated passwords are written to the /etc/kolla/passwords.yml file.

Using Kolla-Ansible to Deploy Multinode OpenStack

Install Ansible Galaxy requirements

The Kolla Ansible Galaxy requirements are a set of Ansible roles and collections that are required to deploy OpenStack using Kolla Ansible.

To install them, run the command below;

kolla-ansible install-deps

Bootstrap OpenStack Nodes with Kolla Deploy Dependencies

Now that everything is set up, you can start to deploy multinode OpenStack.

Again, ensure that your virtual environment is activated.

source $HOME/kolla-ansible/bin/activate

Bootstrap your OpenStack nodes with kolla deploy dependencies using bootstrap-servers subcommand.

kolla-ansible -i multinode bootstrap-servers

Below is just a snippet of the bootstrapping command output:

Bootstrapping servers : ansible-playbook -e @/etc/kolla/globals.yml -e @/etc/kolla/passwords.yml -e CONFIG_DIR=/etc/kolla -e kolla_action=bootstrap-servers /home/kifarunix/kolla-ansible/share/kolla-ansible/ansible/kolla-host.yml --inventory multinode

[WARNING]: Invalid characters were found in group names but not replaced, use -vvvv to see details

PLAY [Gather facts for all hosts] ****************************************************************************************************************************

TASK [Gather facts] ******************************************************************************************************************************************

ok: [localhost]

ok: [storage01]

ok: [compute01]

TASK [Gather package facts] **********************************************************************************************************************************

skipping: [compute01]

skipping: [localhost]

skipping: [storage01]

TASK [Group hosts to determine when using --limit] ***********************************************************************************************************

ok: [compute01]

ok: [localhost]

ok: [storage01]

[WARNING]: Could not match supplied host pattern, ignoring: all_using_limit_True

PLAY [Gather facts for all hosts (if using --limit)] *********************************************************************************************************

skipping: no hosts matched

PLAY [Apply role baremetal] **********************************************************************************************************************************

TASK [openstack.kolla.etc_hosts : Include etc-hosts.yml] *****************************************************************************************************

included: /home/kifarunix/.ansible/collections/ansible_collections/openstack/kolla/roles/etc_hosts/tasks/etc-hosts.yml for localhost, compute01, storage01

TASK [openstack.kolla.etc_hosts : Ensure localhost in /etc/hosts] ********************************************************************************************

ok: [compute01]

ok: [storage01]

ok: [localhost]

TASK [openstack.kolla.etc_hosts : Ensure hostname does not point to 127.0.1.1 in /etc/hosts] *****************************************************************

ok: [localhost]

ok: [compute01]

ok: [storage01]

TASK [openstack.kolla.etc_hosts : Generate /etc/hosts for all of the nodes] **********************************************************************************

[WARNING]: Module remote_tmp /root/.ansible/tmp did not exist and was created with a mode of 0700, this may cause issues when running as another user. To

avoid this, create the remote_tmp dir with the correct permissions manually

changed: [storage01]

changed: [compute01]

changed: [localhost]

TASK [openstack.kolla.etc_hosts : Check whether /etc/cloud/cloud.cfg exists] *********************************************************************************

ok: [storage01]

ok: [localhost]

ok: [compute01]

TASK [openstack.kolla.etc_hosts : Disable cloud-init manage_etc_hosts] ***************************************************************************************

changed: [storage01]

changed: [localhost]

changed: [compute01]

TASK [openstack.kolla.baremetal : Ensure unprivileged users can use ping] ************************************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

TASK [openstack.kolla.baremetal : Set firewall default policy] ***********************************************************************************************

ok: [localhost]

ok: [storage01]

ok: [compute01]

TASK [openstack.kolla.baremetal : Check if firewalld is installed] *******************************************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

TASK [openstack.kolla.baremetal : Disable firewalld] *********************************************************************************************************

skipping: [localhost] => (item=firewalld)

skipping: [localhost]

skipping: [compute01] => (item=firewalld)

skipping: [compute01]

skipping: [storage01] => (item=firewalld)

skipping: [storage01]

TASK [openstack.kolla.packages : Install packages] ***********************************************************************************************************

ok: [compute01]

ok: [storage01]

ok: [localhost]

TASK [openstack.kolla.packages : Remove packages] ************************************************************************************************************

changed: [storage01]

changed: [localhost]

changed: [compute01]

TASK [openstack.kolla.docker : include_tasks] ****************************************************************************************************************

included: /home/kifarunix/.ansible/collections/ansible_collections/openstack/kolla/roles/docker/tasks/repo-Debian.yml for localhost, compute01, storage01

TASK [openstack.kolla.docker : Install CA certificates and gnupg packages] ***********************************************************************************

ok: [localhost]

ok: [compute01]

ok: [storage01]

TASK [openstack.kolla.docker : Ensure apt sources list directory exists] *************************************************************************************

ok: [storage01]

ok: [compute01]

ok: [localhost]

TASK [openstack.kolla.docker : Ensure apt keyrings directory exists] *****************************************************************************************

ok: [localhost]

ok: [compute01]

ok: [storage01]

TASK [openstack.kolla.docker : Install docker apt gpg key] ***************************************************************************************************

changed: [compute01]

changed: [localhost]

changed: [storage01]

TASK [openstack.kolla.docker : Install docker apt pin] *******************************************************************************************************

changed: [localhost]

changed: [storage01]

changed: [compute01]

TASK [openstack.kolla.docker : Enable docker apt repository] *************************************************************************************************

changed: [storage01]

changed: [compute01]

changed: [localhost]

TASK [openstack.kolla.docker : Check which containers are running] *******************************************************************************************

ok: [compute01]

ok: [localhost]

ok: [storage01]

TASK [openstack.kolla.docker : Check if docker systemd unit exists] ******************************************************************************************

ok: [localhost]

ok: [compute01]

ok: [storage01]

TASK [openstack.kolla.docker : Mask the docker systemd unit on Debian/Ubuntu] ********************************************************************************

changed: [localhost]

changed: [compute01]

changed: [storage01]

TASK [openstack.kolla.docker : Install packages] *************************************************************************************************************

changed: [localhost]

changed: [storage01]

changed: [compute01]

TASK [openstack.kolla.docker : Start docker] *****************************************************************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

TASK [openstack.kolla.docker : Wait for Docker to start] *****************************************************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

TASK [openstack.kolla.docker : Ensure containers are running after Docker upgrade] ***************************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

TASK [openstack.kolla.docker : Ensure docker config directory exists] ****************************************************************************************

changed: [localhost]

changed: [compute01]

changed: [storage01]

TASK [openstack.kolla.docker : Write docker config] **********************************************************************************************************

changed: [localhost]

changed: [compute01]

changed: [storage01]

TASK [openstack.kolla.docker : Remove old docker options file] ***********************************************************************************************

skipping: [compute01]

ok: [localhost]

ok: [storage01]

TASK [openstack.kolla.docker : Ensure docker service directory exists] ***************************************************************************************

skipping: [localhost]

skipping: [storage01]

changed: [compute01]

TASK [openstack.kolla.docker : Configure docker service] *****************************************************************************************************

skipping: [localhost]

skipping: [storage01]

changed: [compute01]

TASK [openstack.kolla.docker : Ensure the path for CA file for private registry exists] **********************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

TASK [openstack.kolla.docker : Ensure the CA file for private registry exists] *******************************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

TASK [openstack.kolla.docker : Flush handlers] ***************************************************************************************************************

TASK [openstack.kolla.docker : Flush handlers] ***************************************************************************************************************

TASK [openstack.kolla.docker : Flush handlers] ***************************************************************************************************************

RUNNING HANDLER [openstack.kolla.docker : Reload docker service file] ****************************************************************************************

ok: [compute01]

RUNNING HANDLER [openstack.kolla.docker : Restart docker] ****************************************************************************************************

changed: [localhost]

changed: [storage01]

changed: [compute01]

TASK [openstack.kolla.docker : Start and enable docker] ******************************************************************************************************

changed: [storage01]

changed: [localhost]

changed: [compute01]

TASK [openstack.kolla.docker : include_tasks] ****************************************************************************************************************

skipping: [localhost]

skipping: [storage01]

included: /home/kifarunix/.ansible/collections/ansible_collections/openstack/kolla/roles/docker/tasks/configure-containerd-for-zun.yml for compute01

TASK [openstack.kolla.docker : Ensuring CNI config directory exist] ******************************************************************************************

changed: [compute01]

TASK [openstack.kolla.docker : Copying CNI config file] ******************************************************************************************************

changed: [compute01]

TASK [openstack.kolla.docker : Ensuring CNI bin directory exist] *********************************************************************************************

changed: [compute01]

TASK [openstack.kolla.docker : Copy zun-cni script] **********************************************************************************************************

changed: [compute01]

TASK [openstack.kolla.docker : Copying over containerd config] ***********************************************************************************************

changed: [compute01]

TASK [openstack.kolla.kolla_user : Ensure groups are present] ************************************************************************************************

skipping: [localhost] => (item=docker)

skipping: [localhost] => (item=sudo)

skipping: [localhost] => (item=kolla)

skipping: [localhost]

skipping: [compute01] => (item=docker)

skipping: [compute01] => (item=sudo)

skipping: [compute01] => (item=kolla)

skipping: [compute01]

skipping: [storage01] => (item=docker)

skipping: [storage01] => (item=sudo)

skipping: [storage01] => (item=kolla)

skipping: [storage01]

TASK [openstack.kolla.kolla_user : Create kolla user] ********************************************************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

TASK [openstack.kolla.kolla_user : Add public key to kolla user authorized keys] *****************************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

TASK [openstack.kolla.kolla_user : Grant kolla user passwordless sudo] ***************************************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

TASK [openstack.kolla.docker_sdk : Install packages] *********************************************************************************************************

changed: [localhost]

changed: [compute01]

changed: [storage01]

TASK [openstack.kolla.docker_sdk : Install latest pip in the virtualenv] *************************************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

TASK [openstack.kolla.docker_sdk : Install docker SDK for python] ********************************************************************************************

changed: [localhost]

changed: [storage01]

changed: [compute01]

TASK [openstack.kolla.baremetal : Ensure node_config_directory directory exists] *****************************************************************************

ok: [localhost]

changed: [compute01]

changed: [storage01]

TASK [openstack.kolla.apparmor_libvirt : include_tasks] ******************************************************************************************************

included: /home/kifarunix/.ansible/collections/ansible_collections/openstack/kolla/roles/apparmor_libvirt/tasks/remove-profile.yml for localhost, compute01, storage01

TASK [openstack.kolla.apparmor_libvirt : Get stat of libvirtd apparmor profile] ******************************************************************************

ok: [localhost]

ok: [compute01]

ok: [storage01]

TASK [openstack.kolla.apparmor_libvirt : Get stat of libvirtd apparmor disable profile] **********************************************************************

ok: [localhost]

ok: [compute01]

ok: [storage01]

TASK [openstack.kolla.apparmor_libvirt : Remove apparmor profile for libvirt] ********************************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

TASK [openstack.kolla.baremetal : Change state of selinux] ***************************************************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

TASK [openstack.kolla.baremetal : Set https proxy for git] ***************************************************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

TASK [openstack.kolla.baremetal : Set http proxy for git] ****************************************************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

TASK [openstack.kolla.baremetal : Configure ceph for zun] ****************************************************************************************************

skipping: [localhost]

skipping: [compute01]

skipping: [storage01]

RUNNING HANDLER [openstack.kolla.docker : Restart containerd] ************************************************************************************************

changed: [compute01]

PLAY RECAP ***************************************************************************************************************************************************

compute01 : ok=42 changed=23 unreachable=0 failed=0 skipped=20 rescued=0 ignored=0

localhost : ok=33 changed=14 unreachable=0 failed=0 skipped=22 rescued=0 ignored=0

storage01 : ok=33 changed=15 unreachable=0 failed=0 skipped=22 rescued=0 ignored=0

This is what the bootstrap command does:

- Customisation of /etc/hosts

- Creation of user and group

- Kolla configuration directory

- Package installation and removal

- Docker engine installation and configuration

- Disabling firewalls

- Creation of Python virtual environment

- Configuration of Apparmor

- Configuration of SELinux

- Configuration of NTP daemon

Run pre-deployment checks on the nodes

Execute the command below to run the pre-deployment checks for hosts.

kolla-ansible -i multinode prechecks

Sample output;

...

PLAY RECAP ***************************************************************************************************************************************************

compute01 : ok=50 changed=0 unreachable=0 failed=0 skipped=43 rescued=0 ignored=0

localhost : ok=132 changed=0 unreachable=0 failed=0 skipped=163 rescued=0 ignored=0

storage01 : ok=35 changed=0 unreachable=0 failed=0 skipped=28 rescued=0 ignored=0
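
With several hosts in the recap, a small filter can make failures easier to spot. The sketch below is not part of kolla-ansible: `check_recap` is a hypothetical helper name, and it assumes the command output was saved to a file such as `prechecks.log`.

```shell
# check_recap: read Ansible output on stdin and inspect the PLAY RECAP lines.
# Prints every host line that reports failed or unreachable tasks and returns
# non-zero if any is found, or if no recap line is present at all.
# Hypothetical helper, not shipped with kolla-ansible.
check_recap() {
    awk '
        /unreachable=[0-9]/ && /failed=[0-9]/ { seen = 1 }
        /unreachable=[1-9]/ || /failed=[1-9]/ { print "PROBLEM: " $0; bad = 1 }
        END { if (bad || !seen) exit 1 }
    '
}

# Example usage (commented out so the snippet has no side effects):
#   kolla-ansible -i multinode prechecks 2>&1 | tee prechecks.log
#   check_recap < prechecks.log && echo "prechecks clean"
```

The same helper can be pointed at saved output from the bootstrap or deploy runs, since they end with a recap in the same format.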

Use Kolla-Ansible to deploy Multinode OpenStack

If everything is fine, proceed to deploy Multinode OpenStack on Ubuntu 22.04;

kolla-ansible -i multinode deploy

The process might take a while as it involves pulling container images and starting the containers for the different OpenStack services.

If all ends well, the play recap should report 0 failed tasks.

If you encounter this error during the deployment;

TASK [haproxy-config : Copying over nova-cell:nova-novncproxy haproxy config] ********************************************************************************

fatal: [localhost]: FAILED! => {"msg": "An unhandled exception occurred while templating '{{ cell_proxy_project_services | namespace_haproxy_for_cell(cell_name) }}'. Error was a <class 'ansible.errors.AnsibleError'>, original message: template error while templating string: Could not load \"namespace_haproxy_for_cell\": 'namespace_haproxy_for_cell'. String: {{ cell_proxy_project_services | namespace_haproxy_for_cell(cell_name) }}. Could not load \"namespace_haproxy_for_cell\": 'namespace_haproxy_for_cell'"}

It is suggested that you downgrade ansible-core from 2.14.11 to 2.14.10 before running the deployment command again;

pip install ansible-core==2.14.10

Next, re-run the deployment;

kolla-ansible -i multinode deploy

Ensure that no deployment task failed. If any fails, fix it before you proceed.
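
Before applying the downgrade, it may help to confirm which ansible-core version is actually installed in the virtual environment. The following is a minimal sketch; `needs_downgrade` is a hypothetical helper, and it assumes that only version 2.14.11 is affected, as stated above.

```shell
# needs_downgrade: succeed only for the ansible-core version that the
# workaround above names as affected (2.14.11). Hypothetical helper name.
needs_downgrade() {
    [ "$1" = "2.14.11" ]
}

# Read the installed version from pip metadata; empty if pip or the package is missing.
installed=$(pip show ansible-core 2>/dev/null | awk '/^Version:/ { print $2 }' || true)

if needs_downgrade "$installed"; then
    echo "ansible-core $installed found: consider 'pip install ansible-core==2.14.10'"
else
    echo "ansible-core version: ${installed:-not installed}"
fi
```

Run it from the same virtual environment that kolla-ansible uses, otherwise pip reports a different installation.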

Verify Multinode OpenStack Deployment

List Running OpenStack Docker Containers

Once the deployment is done, you can list running OpenStack docker containers.

Controller Node Containers:

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

ca9cecdf07eb quay.io/openstack.kolla/zun-wsproxy:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) zun_wsproxy

c3058fa2b631 quay.io/openstack.kolla/zun-api:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) zun_api

555bf24841ed quay.io/openstack.kolla/grafana:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes grafana

4ee3cb6600ef quay.io/openstack.kolla/aodh-notifier:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) aodh_notifier

028536a0b6d1 quay.io/openstack.kolla/aodh-listener:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) aodh_listener

c86a15aa5c6d quay.io/openstack.kolla/aodh-evaluator:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) aodh_evaluator

fb83c42b6a73 quay.io/openstack.kolla/aodh-api:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) aodh_api

6bed912c6098 quay.io/openstack.kolla/ceilometer-central:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (unhealthy) ceilometer_central

5294be9ba04f quay.io/openstack.kolla/ceilometer-notification:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) ceilometer_notification

cfd49c155691 quay.io/openstack.kolla/gnocchi-statsd:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (unhealthy) gnocchi_statsd

7ba7dab61ca7 quay.io/openstack.kolla/gnocchi-metricd:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) gnocchi_metricd

605dd04439a1 quay.io/openstack.kolla/gnocchi-api:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) gnocchi_api

dd0919801045 quay.io/openstack.kolla/horizon:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) horizon

b16fa404322e quay.io/openstack.kolla/heat-engine:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) heat_engine

55af05818f8a quay.io/openstack.kolla/heat-api-cfn:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) heat_api_cfn

978aaaccae90 quay.io/openstack.kolla/heat-api:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) heat_api

062fef51d803 quay.io/openstack.kolla/neutron-metadata-agent:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) neutron_metadata_agent

089d46bf865d quay.io/openstack.kolla/neutron-l3-agent:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) neutron_l3_agent

f772f1b1489c quay.io/openstack.kolla/neutron-dhcp-agent:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) neutron_dhcp_agent

9eeaba1aafda quay.io/openstack.kolla/neutron-openvswitch-agent:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) neutron_openvswitch_agent

ad3c51b32405 quay.io/openstack.kolla/neutron-server:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) neutron_server

5f0cf1f39419 quay.io/openstack.kolla/openvswitch-vswitchd:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) openvswitch_vswitchd

e848738cf82a quay.io/openstack.kolla/openvswitch-db-server:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) openvswitch_db

75bee8399705 quay.io/openstack.kolla/nova-novncproxy:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) nova_novncproxy

063a33022b2e quay.io/openstack.kolla/nova-conductor:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) nova_conductor

60dfe04def4c quay.io/openstack.kolla/nova-api:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) nova_api

78ed93d64411 quay.io/openstack.kolla/nova-scheduler:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) nova_scheduler

3b870664710d quay.io/openstack.kolla/placement-api:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) placement_api

7f9d1e1665c2 quay.io/openstack.kolla/cinder-scheduler:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) cinder_scheduler

e149f97d312d quay.io/openstack.kolla/cinder-api:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) cinder_api

db46e9afa5c7 quay.io/openstack.kolla/glance-api:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) glance_api

db46f61525f0 quay.io/openstack.kolla/haproxy:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) haproxy

852fc98e1ea1 quay.io/openstack.kolla/keystone:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes (healthy) keystone

573a2af3a09b quay.io/openstack.kolla/keystone-fernet:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes (healthy) keystone_fernet

0e0f71e8e708 quay.io/openstack.kolla/keystone-ssh:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes (healthy) keystone_ssh

029256854ce7 quay.io/openstack.kolla/etcd:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes etcd

b74512e6271e quay.io/openstack.kolla/rabbitmq:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes (healthy) rabbitmq

fdeb9baf82a4 quay.io/openstack.kolla/prometheus-blackbox-exporter:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 11 minutes prometheus_blackbox_exporter

0d23b50e8024 quay.io/openstack.kolla/prometheus-openstack-exporter:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 11 minutes prometheus_openstack_exporter

dc9ba602c3de quay.io/openstack.kolla/prometheus-alertmanager:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 11 minutes prometheus_alertmanager

9f2de50c7835 quay.io/openstack.kolla/prometheus-cadvisor:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 11 minutes prometheus_cadvisor

ab28657f81ee quay.io/openstack.kolla/prometheus-memcached-exporter:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 11 minutes prometheus_memcached_exporter

df50420038b5 quay.io/openstack.kolla/prometheus-haproxy-exporter:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 11 minutes prometheus_haproxy_exporter

75941e5b1290 quay.io/openstack.kolla/prometheus-mysqld-exporter:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 11 minutes prometheus_mysqld_exporter

8e589fcf6ca0 quay.io/openstack.kolla/prometheus-node-exporter:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 11 minutes prometheus_node_exporter

59704ffe90b8 quay.io/openstack.kolla/prometheus-v2-server:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 11 minutes prometheus_server

cef8926622bd quay.io/openstack.kolla/memcached:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes (healthy) memcached

c50a17f0fe26 quay.io/openstack.kolla/mariadb-server:2023.1-ubuntu-jammy "dumb-init -- kolla_…" 15 minutes ago Up 15 minutes (healthy) mariadb

9f1b1c0f060a quay.io/openstack.kolla/mariadb-clustercheck:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes mariadb_clustercheck

5cb589f36080 quay.io/openstack.kolla/keepalived:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes keepalived

58931dccea50 quay.io/openstack.kolla/cron:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 11 minutes cron

40b4dc10c199 quay.io/openstack.kolla/kolla-toolbox:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 11 minutes kolla_toolbox

09304a67aa14 quay.io/openstack.kolla/fluentd:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 11 minutes fluentd

OpenStack services are now up and running.

If you want to run Docker commands as a standard user, add the user to the docker group. Log out and back in for the group change to take effect.

sudo usermod -aG docker kifarunix

Confirm the use of the NFS share for the Glance images. The NFS share is bound to the Glance image path, /var/lib/glance.

docker inspect glance_api

{

"Type": "bind",

"Source": "/mnt/glance",

"Destination": "/var/lib/glance",

"Mode": "rw",

"RW": true,

"Propagation": "rprivate"

},

Compute Node Containers:

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

58208486f3a8 quay.io/openstack.kolla/zun-cni-daemon:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) zun_cni_daemon

ff14d52b7e14 quay.io/openstack.kolla/zun-compute:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) zun_compute

f66ff68bdf24 quay.io/openstack.kolla/ceilometer-compute:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (unhealthy) ceilometer_compute

dabed085c5b7 quay.io/openstack.kolla/kuryr-libnetwork:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) kuryr

8f0d741d001e quay.io/openstack.kolla/neutron-openvswitch-agent:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) neutron_openvswitch_agent

ec57feba083f quay.io/openstack.kolla/openvswitch-vswitchd:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) openvswitch_vswitchd

9118cffd2892 quay.io/openstack.kolla/openvswitch-db-server:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) openvswitch_db

deba7e6de5a4 quay.io/openstack.kolla/nova-compute:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) nova_compute

c9db01568849 quay.io/openstack.kolla/nova-libvirt:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) nova_libvirt

b1370a657a30 quay.io/openstack.kolla/nova-ssh:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) nova_ssh

61d7027d0ded quay.io/openstack.kolla/iscsid:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes iscsid

77627a749fe1 quay.io/openstack.kolla/prometheus-libvirt-exporter:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes prometheus_libvirt_exporter

d9269fbd6c1b quay.io/openstack.kolla/prometheus-cadvisor:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes prometheus_cadvisor

14d2478293fc quay.io/openstack.kolla/prometheus-node-exporter:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes prometheus_node_exporter

a20ab3756886 quay.io/openstack.kolla/cron:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 11 minutes cron

ad85243d6c75 quay.io/openstack.kolla/kolla-toolbox:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 11 minutes kolla_toolbox

976a7e42f49d quay.io/openstack.kolla/fluentd:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 11 minutes fluentd

Storage Node Containers:

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e97f28a62dab quay.io/openstack.kolla/cinder-backup:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) cinder_backup

ab53f97af025 quay.io/openstack.kolla/cinder-volume:2023.1-ubuntu-jammy "dumb-init --single-…" 11 minutes ago Up 11 minutes (healthy) cinder_volume

9fb22368d98c quay.io/openstack.kolla/tgtd:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes tgtd

6d89f289b8ba quay.io/openstack.kolla/iscsid:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes iscsid

42521f44e85d quay.io/openstack.kolla/prometheus-cadvisor:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes prometheus_cadvisor

d09e87ddaf81 quay.io/openstack.kolla/prometheus-node-exporter:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes prometheus_node_exporter

d32fdcb737a4 quay.io/openstack.kolla/cron:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes cron

1ba5afa44662 quay.io/openstack.kolla/kolla-toolbox:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes kolla_toolbox

c9217e8a1dae quay.io/openstack.kolla/fluentd:2023.1-ubuntu-jammy "dumb-init --single-…" 15 minutes ago Up 15 minutes fluentd
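
Some containers in the listings above report an (unhealthy) status, for example ceilometer_central and gnocchi_statsd. A short filter can pull those names out of saved `docker ps` output. This is only a sketch: `unhealthy_containers` is a hypothetical helper name, and it assumes the container name is the last column, as in the listings above.

```shell
# unhealthy_containers: read `docker ps` output on stdin and print the name
# (last column) of every container whose status includes "(unhealthy)".
unhealthy_containers() {
    awk '/\(unhealthy\)/ { print $NF }'
}

# On a node with Docker available, an equivalent live query is:
#   docker ps --filter health=unhealthy --format '{{.Names}}'
# and the recent log lines of one container can be read with:
#   docker logs --tail 50 ceilometer_central
```

An unhealthy status only means the container health check is failing, so the container logs are the place to look for the cause.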

The storage node runs the Cinder block storage services, cinder_volume and cinder_backup.

Multinode OpenStack Post Deployment Tasks

Install OpenStack command line administration tools

You can now install OpenStack command line administration tools. You need to do this from the virtual environment on the control node.

source $HOME/kolla-ansible/bin/activate

pip install python-openstackclient -c https://releases.openstack.org/constraints/upper/2023.1

pip install python-neutronclient -c https://releases.openstack.org/constraints/upper/2023.1

pip install python-glanceclient -c https://releases.openstack.org/constraints/upper/2023.1

pip install python-heatclient -c https://releases.openstack.org/constraints/upper/2023.1

Generate OpenStack Admin Credentials

Generate OpenStack admin user credentials file (openrc) using the command below;

kolla-ansible post-deploy

This command generates the admin credentials file, /etc/kolla/admin-openrc.sh.

To be able to use OpenStack command line tools, you need to activate the credentials using the command below;

source /etc/kolla/admin-openrc.sh

You can now administer OpenStack from the CLI. For example, to list the currently available services on the controller node;

openstack service list

+----------------------------------+-----------+----------------+

| ID | Name | Type |

+----------------------------------+-----------+----------------+

| 0f7f02e155b947b1a1f9b4241b9d59ca | nova | compute |

| 2d5e37c3de0d4050bed2b4d774438bcd | glance | image |

| 3964a7fd68ff443ea8cbdd43bf6d7c87 | zun | container |

| 399d392f39114345806383ddccd97ea1 | cinderv3 | volumev3 |

| 51c2d69870ce4a5bbed416f910006ab5 | neutron | network |

| 7eb695afcee24953afe9d2c0bdae13b0 | aodh | alarming |

| 85d545bc729e4ef68350c00bfaa1dd71 | placement | placement |

| a816fbd9022c46aaacd3b8a1cbcee0c6 | heat-cfn | cloudformation |

| bd51d3dbbb304ea98cdc6f89606b618d | keystone | identity |

| e329af93def14d0c850adb7a80b4597a | gnocchi | metric |

| ff9b5a4ac2fb47d798c21d933f592e63 | heat | orchestration |

+----------------------------------+-----------+----------------+
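
To check the service list against the services you expected to enable, the names can be compared in a script. The sketch below assumes that the admin credentials have been sourced; `missing_services` is a hypothetical helper, and the list of expected names in the usage line is only an example.

```shell
# missing_services: print each expected service name (given as arguments) that
# does not appear in the list of registered names read from stdin, one per line.
missing_services() {
    registered=$(cat)
    for svc in "$@"; do
        printf '%s\n' "$registered" | grep -qxF -- "$svc" || echo "$svc"
    done
}

# Example usage, assuming admin-openrc.sh has been sourced:
#   openstack service list -f value -c Name | missing_services keystone glance nova neutron cinderv3 placement
```

No output means every expected service is registered in Keystone. It does not confirm that the service containers themselves are healthy.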

Create Initial Networks, Images, Flavors etc [OPTIONAL]

Kolla-Ansible ships with a script, init-runonce (kolla-ansible/share/kolla-ansible/init-runonce), that you can use to create initial OpenStack networks, images and Nova keys. The script downloads a CirrOS image and registers it. It then configures networking and Nova quotas to allow 40 m1.small instances to be created.

Using this script is optional.

If you want to use this script, you can edit it to configure the floating network that you want your instances to use.

See our guide on how to configure OpenStack Networks for Internet Access.

Reconfiguring the Stack

If you want to reconfigure the stack by adding or removing services, edit the globals.yml configuration file and redeploy the changes from the virtual environment.

For example, after making changes to the globals.yml file, activate the virtual environment;

source /path/to/virtual-environment/bin/activate

Then redeploy the changes;

kolla-ansible -i multinode reconfigure

You can also specify the specific nodes you want to deploy changes on.
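
As a rough illustration of restricting a reconfigure run, the sketch below only prints the command it would run. It assumes the `--limit` and `--tags` options of the kolla-ansible CLI, so confirm with `kolla-ansible --help` that your release supports them. `reconfigure_cmd` is a hypothetical helper, and the host and tag names are examples taken from this guide's inventory.

```shell
# reconfigure_cmd: print (not run) a kolla-ansible reconfigure command that is
# restricted to the given hosts and, optionally, to the given service tags.
#   $1 = comma-separated inventory hosts, $2 = optional comma-separated tags
reconfigure_cmd() {
    cmd="kolla-ansible -i multinode reconfigure --limit $1"
    [ -n "${2:-}" ] && cmd="$cmd --tags $2"
    echo "$cmd"
}

reconfigure_cmd compute01        # prints: kolla-ansible -i multinode reconfigure --limit compute01
reconfigure_cmd compute01 nova   # prints: kolla-ansible -i multinode reconfigure --limit compute01 --tags nova
```

Printing the command first gives you a chance to review the host and tag selection before running it from the virtual environment.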

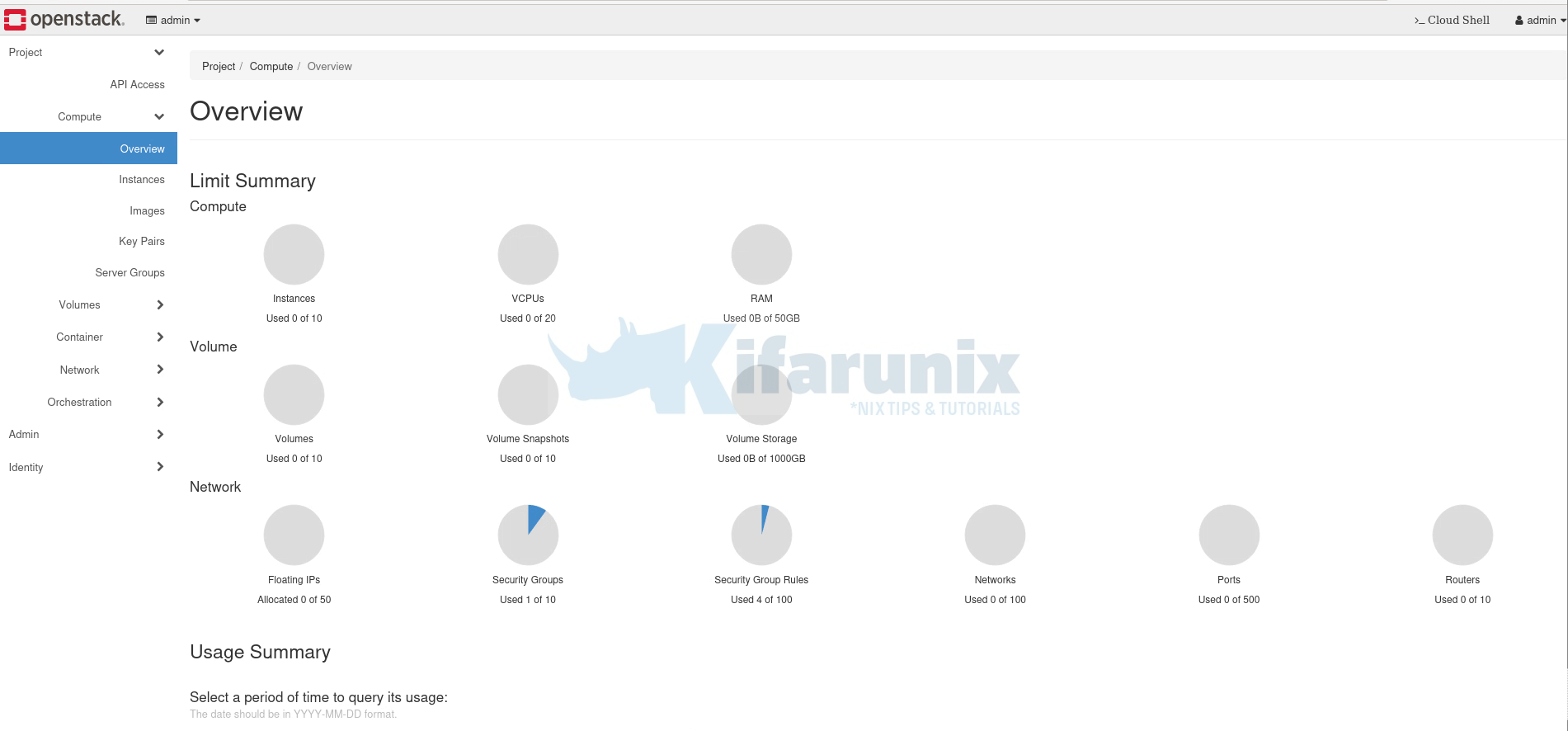

Accessing OpenStack Web Interface (Horizon)

So far so good! OpenStack is up and running. It is time to log in to the web interface via the defined Kolla VIP address. We set this to 192.168.200.254 in this guide.

Therefore, to access OpenStack Horizon from the browser, use the address http://192.168.200.254.

This should take you to the OpenStack web interface login page.

Log in using admin as the username.

You can obtain the admin credentials from the Kolla passwords file, /etc/kolla/passwords.yml. For Horizon authentication, you need the Keystone admin password.

grep keystone_admin_password /etc/kolla/passwords.yml
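
The grep above prints the whole line, key included. If only the value is needed, for example to paste into the login form, a small function can extract it. This is a sketch under the assumption that the entry has the simple `key: value` form on one line; `kolla_password` is a hypothetical helper name.

```shell
# kolla_password: print the value stored under a key in a Kolla passwords file.
#   $1 = key name, $2 = file to read (defaults to /etc/kolla/passwords.yml)
kolla_password() {
    awk -v key="$1" -F': *' '$1 == key { print $2; exit }' "${2:-/etc/kolla/passwords.yml}"
}

# Example usage:
#   kolla_password keystone_admin_password
```

Avoid running this where the output could be captured in shared logs or shell history recordings, since it prints the password in clear text.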

When you successfully log in, you land on the OpenStack Horizon dashboard.

Configure Networks for Internet Access

Check the guide below on how to configure networks for internet access.

How to Configure OpenStack Networks for Internet Access

And that marks the end of our guide on how to deploy Multi-node OpenStack using Kolla-Ansible on Ubuntu 22.04.

Further Reading

OpenStack Administration guides

Reference

OpenStack Kolla-Ansible Quick Start Guide


good afternoon,

why using veth instead of plain simple enp?

thank you.

“The “neutron_external_interface” variable is the interface that will be used for the external bridge in Neutron. Without this bridge the deployment instance traffic will be unable to access the rest of the Internet. In the case of a single interface on a machine, a veth pair may be used where one end of the veth pair is listed here and the other end is in a bridge on the system.”

hello

thanks for tuto

there is something wrong under section :

Below are the basic options that we enabled for our AIO OpenStack deployment.

you ‘are providing configuration for AIO openstack ? that i suppose has you are not speaking about interface to user ( #network_interface: “eth0 etc ? )

Hi,

Please refer to the section, “Network Configurations on the Nodes” and follow through.