How do you run a single-node ELK stack 8 on Docker? In this tutorial, you will learn how to deploy ELK stack 8 on Docker containers. The ELK stack is a group of open source software projects, Elasticsearch, Logstash, Kibana, and Beats, where:

- Elasticsearch is a search and analytics engine

- Logstash is a server‑side data processing pipeline that ingests data from multiple sources simultaneously, transforms it, and then sends it to a “stash” like Elasticsearch

- Kibana lets users visualize Elasticsearch data with charts and graphs

- Beats are the data shippers. They ship system logs, network, infrastructure data, etc to either Logstash for further processing or Elasticsearch for indexing.

We will be running Elasticsearch 8.11.4, Logstash 8.11.4, Kibana 8.11.4, as Docker containers in this tutorial.

Kindly note that Elasticsearch 8.x enables authentication and TLS/SSL connection by default.


Deploying ELK Stack 8 on Docker Containers

In this tutorial, we will deploy a single-node ELK Stack 8 using Docker and Docker Compose. All the required ELK stack 8 containers run on a single host.

Docker is a platform that enables developers and system administrators to build, run, and share applications with containers. It provides command line interface tools such as docker and docker-compose that are used for managing Docker containers. While docker is a Docker cli for managing single Docker containers, docker-compose on the other hand is used for running and managing multiple Docker containers.

Install Docker Engine

Depending on your host system distribution, you need to install the Docker Engine. You can follow the link below to install Docker Engine on Ubuntu/Debian/CentOS 8.

Install and Use Docker on Linux

Check the installed Docker version;

docker --version

Sample output;

Docker version 24.0.7, build afdd53b

Install Docker Compose

For Docker compose to work, ensure that you have Docker Engine installed. You can follow the links above to install Docker Engine.

Once you have the Docker engine installed, proceed to install Docker compose.

Check the current stable release version of Docker Compose on its GitHub releases page. As of this writing, Docker Compose version 2.24.0 is the current stable release.

VER=2.24.0

sudo curl -L "https://github.com/docker/compose/releases/download/v$VER/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

This downloads the Docker Compose tool to the /usr/local/bin directory.

Make the Docker Compose binary executable;

sudo chmod +x /usr/local/bin/docker-compose

You should now be able to use Docker Compose (docker-compose) on the CLI.

Check the version of the installed Docker Compose to confirm that it is working as expected.

docker-compose version

Docker Compose version v2.24.0

Running Docker as Non-Root User

We are running both Docker and Docker Compose as a non-root user. To be able to do this, ensure that you add your standard user to the docker group.

For example, I am running this setup as user kifarunix. So, add the user to the docker group. Replace the username accordingly.

sudo usermod -aG docker kifarunix

Log out and log in again as the user that is added to the docker group and you should be able to run the docker and docker-compose CLI tools.
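Because group changes only apply to new login sessions, it can help to confirm the membership before running docker. Below is a minimal sketch, assuming a hypothetical helper named has_group (not part of Docker) that scans a space-separated group list such as the output of id -nG:

```shell
#!/usr/bin/env bash
# has_group is a hypothetical helper, not part of Docker.
# It succeeds when WANTED appears in a space-separated list of group names.
has_group() {
  local list=$1 wanted=$2 g
  # Intentionally unquoted so the list is split on whitespace.
  for g in $list; do
    [[ $g == "$wanted" ]] && return 0
  done
  return 1
}
```

For the current session, it could be called as has_group "$(id -nG)" docker && echo "docker group is active".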

Important Elasticsearch Settings

Disable Memory Swapping on a Container

There are different ways to disable swapping on Elasticsearch. For an Elasticsearch Docker container, you can simply set the value of the bootstrap.memory_lock option to true. With this option, you also need to ensure that there is no maximum limit imposed on the locked-in-memory address space (LimitMEMLOCK=infinity). We will see how this can be achieved in a Docker compose file.

You can confirm the default value set on the Docker image using the command below;

docker run --rm docker.elastic.co/elasticsearch/elasticsearch:8.11.4 /bin/bash -c 'ulimit -Hm && ulimit -Sm'

Sample output;

unlimited

unlimited

If a value other than unlimited is printed, set the limit via the Docker Compose file to ensure that it is unlimited for both the soft and hard limits. Note that ulimit -m reports the maximum resident set size; the locked-memory limit that bootstrap.memory_lock depends on is the one reported by ulimit -l.

For the respective service, this is what the config looks like in the Docker Compose file.

ulimits:
  memlock:
    soft: -1
    hard: -1

Set JVM Heap Size on All Cluster Nodes

Elasticsearch usually sets the heap size automatically based on the node’s roles and the total memory available to the node’s container. This is the recommended approach! For the Elasticsearch Docker containers, any custom JVM settings can be defined using the ES_JAVA_OPTS environment variable, either in the compose file or on the command line. For example, you can set both the minimum and maximum JVM heap size to 512M using ES_JAVA_OPTS="-Xms512m -Xmx512m".
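If you do override the heap, the value has to be derived from the memory given to the container. The sketch below assumes the common guidance of keeping the minimum and maximum equal and using no more than half of the available memory; heap_opts is a hypothetical helper name, not an Elasticsearch tool:

```shell
#!/usr/bin/env bash
# heap_opts is a hypothetical helper: given the container memory in MB,
# it prints JVM flags that set min and max heap to half of that memory.
heap_opts() {
  local mem_mb=$1
  local heap_mb=$(( mem_mb / 2 ))
  # "--" stops printf from treating the leading "-Xms" as an option.
  printf -- '-Xms%dm -Xmx%dm\n' "$heap_mb" "$heap_mb"
}
```

For a container limited to 1024 MB, ES_JAVA_OPTS="$(heap_opts 1024)" yields the 512M setting from the example above.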

Set Maximum Open File Descriptor and Processes on Elasticsearch Containers

Set the maximum number of open files (nofile) for the containers to 65,536 and max number of processes to 4096 (both soft and hard limits).

We will also define these limits in the compose file. However, the Elasticsearch images usually come with these limits already defined. You can use the command below to check the maximum number of user processes (-u) and the maximum number of open files (-n);

docker run --rm docker.elastic.co/elasticsearch/elasticsearch:8.11.4 /bin/bash -c 'ulimit -Hn && ulimit -Sn && ulimit -Hu && ulimit -Su'

Sample output;

1048576

1048576

unlimited

unlimited

These values may look like this in the Docker Compose file. Adjust them accordingly!

ulimits:
  nofile:
    soft: 65536
    hard: 65536
  nproc:
    soft: 4096
    hard: 4096
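Since ulimit prints either a number or the word unlimited, comparing its output against a minimum needs a little care. A minimal sketch, assuming a hypothetical helper named limit_at_least:

```shell
#!/usr/bin/env bash
# limit_at_least is a hypothetical helper for reading `ulimit` output.
# It succeeds when VALUE is "unlimited" or a number >= MINIMUM.
limit_at_least() {
  local value=$1 minimum=$2
  [[ $value == unlimited ]] && return 0
  # Reject anything that is not a plain non-negative integer.
  [[ $value =~ ^[0-9]+$ ]] || return 1
  (( value >= minimum ))
}
```

Inside a container it could be used as limit_at_least "$(ulimit -Sn)" 65536 || echo "nofile soft limit is too low".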

Update Virtual Memory Settings on All Cluster Nodes

Elasticsearch uses an mmapfs directory by default to store its indices. To ensure that you do not run out of virtual memory, edit /etc/sysctl.conf on the Docker host and update the value of vm.max_map_count as shown below.

vm.max_map_count=262144

On the Docker host, simply run the command below to configure virtual memory settings.

echo "vm.max_map_count=262144" | sudo tee -a /etc/sysctl.conf

To apply the changes;

sudo sysctl -p

Deploying ELK Stack 8 on Docker Using Docker Compose

In this setup, we will deploy a single node ELK stack 8 cluster with all the three components, Elasticsearch, Logstash and Kibana containers running on the same host as Docker containers.

If you are running Elastic stack on Docker containers for development or testing purposes, then you can run each component Docker container using docker run commands. Use of Docker compose is recommended for production containers!

To begin, create a parent directory from which you will build your stack.

mkdir $HOME/elastic-docker

Create Docker Compose file for ELK Stack 8 Services

Docker compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure your application’s services. Then, with a single command, you create and start all the services from your configuration.

Using Compose is basically a three-step process:

- Define your app’s environment with a Dockerfile so it can be reproduced anywhere.

- Define the services that make up your app in docker-compose.yml so they can be run together in an isolated environment.

- Run docker-compose up and Compose starts and runs your entire app.

In this setup, we will build everything using a Docker Compose file.

Now it is time to create the Docker Compose file for our deployment. Note, we will be using Docker images of the current stable release versions of the Elastic components, v8.11.4.

The default path for a Compose file is compose.yaml (preferred) or compose.yml that is placed in the working directory. Compose also supports docker-compose.yaml and docker-compose.yml for backwards compatibility with earlier versions. If both files exist, Compose prefers the canonical compose.yaml.
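The lookup described above can be sketched as a small function. find_compose_file is a hypothetical helper, and the relative order of the three fallback names after compose.yaml is an assumption made for illustration:

```shell
#!/usr/bin/env bash
# find_compose_file is a hypothetical helper that mimics the lookup described
# above: it prints the first Compose file name found in DIR.
# Only "compose.yaml first" comes from the text; the order of the
# remaining names is an assumption.
find_compose_file() {
  local dir=${1:-.} name
  for name in compose.yaml compose.yml docker-compose.yaml docker-compose.yml; do
    if [[ -f $dir/$name ]]; then
      printf '%s\n' "$name"
      return 0
    fi
  done
  return 1
}
```

Running find_compose_file "$HOME/elastic-docker" shows which file a bare docker-compose command would be expected to pick up in that directory.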

cd $HOME/elastic-docker

vim docker-compose.yml

Usually, when deploying ELK stack, the specific order to follow in the setup is Elasticsearch > Kibana > Logstash > and finally Beat agents.

Similarly, while defining the ELK stack 8 services in the Docker compose file, Elasticsearch should be the first container to be deployed followed by the rest of the components.

Below is our Docker compose outlining the ELK stack services to be deployed.

version: '3.8'

services:
  es_setup:
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
    user: "0"
    command: >
      bash -c '
        echo "Creating ES certs directory..."
        [[ -d config/certs ]] || mkdir config/certs
        # Check if CA certificate exists
        if [ ! -f config/certs/ca/ca.crt ]; then
          echo "Generating Wildcard SSL certs for ES (in PEM format)..."
          bin/elasticsearch-certutil ca --pem --days 3650 --out config/certs/elkstack-ca.zip
          unzip -d config/certs config/certs/elkstack-ca.zip
          bin/elasticsearch-certutil cert \
            --name elkstack-certs \
            --ca-cert config/certs/ca/ca.crt \
            --ca-key config/certs/ca/ca.key \
            --pem \
            --dns "*.${DOMAIN_SUFFIX},${ES_NAME}" \
            --days ${DAYS} \
            --out config/certs/elkstack-certs.zip
          unzip -d config/certs config/certs/elkstack-certs.zip
        else
          echo "CA certificate already exists. Skipping Certificates generation."
        fi
        until curl -s --cacert config/certs/ca/ca.crt -u "elastic:${ELASTIC_PASSWORD}" https://${ES_NAME}:9200 | grep -q "${CLUSTER_NAME}"; do sleep 10; done
        until curl -s -XPOST --cacert config/certs/ca/ca.crt -u "elastic:${ELASTIC_PASSWORD}" -H "Content-Type: application/json" https://${ES_NAME}:9200/_security/user/kibana_system/_password -d "{\"password\":\"${KIBANA_PASSWORD}\"}" | grep -q "^{}"; do sleep 10; done
        echo "Setup is done!"
      '
    networks:
      - elastic
    healthcheck:
      test: ["CMD-SHELL", "[ -f config/certs/elkstack-certs/elkstack-certs.crt ]"]
      interval: 1s
      timeout: 5s
      retries: 120

  elasticsearch:
    depends_on:
      es_setup:
        condition: service_healthy
    container_name: ${ES_NAME}
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    environment:
      - node.name=${NODE_NAME}
      - cluster.name=${CLUSTER_NAME}
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms1g -Xmx1g"
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/elkstack-certs/elkstack-certs.key
      - xpack.security.http.ssl.certificate=certs/elkstack-certs/elkstack-certs.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.key=certs/elkstack-certs/elkstack-certs.key
      - xpack.security.transport.ssl.certificate=certs/elkstack-certs/elkstack-certs.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - cluster.initial_master_nodes=${NODE_NAME}
      - KIBANA_USERNAME=${KIBANA_USERNAME}
      - KIBANA_PASSWORD=${KIBANA_PASSWORD}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - elasticsearch-data:/usr/share/elasticsearch/data
      - certs:/usr/share/elasticsearch/config/certs
    ports:
      # ES_PORT comes from the .env file
      - ${ES_PORT}:9200
      - 9300:9300
    networks:
      - elastic
    healthcheck:
      test: ["CMD-SHELL", "curl --fail -k -s -u elastic:${ELASTIC_PASSWORD} --cacert config/certs/ca/ca.crt https://${ES_NAME}:9200"]
      interval: 30s
      timeout: 10s
      retries: 5
    restart: unless-stopped

  kibana:
    image: docker.elastic.co/kibana/kibana:${STACK_VERSION}
    container_name: kibana
    environment:
      - SERVER_NAME=${KIBANA_SERVER_HOST}
      - ELASTICSEARCH_HOSTS=https://${ES_NAME}:9200
      - ELASTICSEARCH_SSL_CERTIFICATEAUTHORITIES=config/certs/ca/ca.crt
      - ELASTICSEARCH_USERNAME=${KIBANA_USERNAME}
      - ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}
      - XPACK_REPORTING_ROLES_ENABLED=false
      - XPACK_REPORTING_KIBANASERVER_HOSTNAME=localhost
      - XPACK_ENCRYPTEDSAVEDOBJECTS_ENCRYPTIONKEY=${SAVEDOBJECTS_ENCRYPTIONKEY}
      - XPACK_SECURITY_ENCRYPTIONKEY=${SECURITY_ENCRYPTIONKEY}
      - XPACK_REPORTING_ENCRYPTIONKEY=${REPORTING_ENCRYPTIONKEY}
    volumes:
      - kibana-data:/usr/share/kibana/data
      - certs:/usr/share/kibana/config/certs
    ports:
      - ${KIBANA_PORT}:5601
    networks:
      - elastic
    depends_on:
      elasticsearch:
        condition: service_healthy
    restart: unless-stopped

  logstash:
    image: docker.elastic.co/logstash/logstash:${STACK_VERSION}
    container_name: logstash
    environment:
      - XPACK_MONITORING_ENABLED=false
      - ELASTICSEARCH_USERNAME=${ES_USER}
      - ELASTICSEARCH_PASSWORD=${ELASTIC_PASSWORD}
      - ES_NAME=${ES_NAME}
    ports:
      - 5044:5044
    volumes:
      - ./logstash/conf.d/:/usr/share/logstash/pipeline/:ro
      - certs:/usr/share/logstash/config/certs
      - logstash-data:/usr/share/logstash/data
    networks:
      - elastic
    depends_on:
      elasticsearch:
        condition: service_healthy
    restart: unless-stopped

volumes:
  elasticsearch-data:
    driver: local
  kibana-data:
    driver: local
  logstash-data:
    driver: local
  certs:
    driver: local

networks:
  elastic:

In summary:

- This Docker Compose file defines a multi-container setup for the Elastic stack, including Elasticsearch, Kibana, and Logstash, along with a setup service (es_setup).

- The es_setup service initializes the Elasticsearch environment: it generates the SSL certificates, waits for Elasticsearch to respond, and sets the kibana_system user password.

- The elasticsearch service runs the Elasticsearch node with the specified configurations, including security settings, network configurations, and health checks.

- The kibana service sets up Kibana with connections to Elasticsearch and SSL configurations.

- The logstash service runs Logstash with configurations for Elasticsearch connections and SSL settings.

- The volumes section defines data volumes for Elasticsearch, Kibana, Logstash, and SSL certificates.

- The entire setup is connected through a Docker network named elastic.
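The es_setup service waits for Elasticsearch with until loops that never give up. If you prefer a bounded wait, the same idea can be sketched with a retry limit. retry_until is a hypothetical helper, not something the compose file above uses:

```shell
#!/usr/bin/env bash
# retry_until is a hypothetical helper: run a command up to MAX times,
# sleeping DELAY seconds between attempts, and fail if it never succeeds.
retry_until() {
  local max=$1 delay=$2 attempt
  shift 2
  for (( attempt = 1; attempt <= max; attempt++ )); do
    "$@" && return 0
    # Skip the sleep after the final failed attempt.
    (( attempt < max )) && sleep "$delay"
  done
  return 1
}
```

With the variables from this setup, a call could look like retry_until 30 10 curl -s --fail --cacert config/certs/ca/ca.crt -u "elastic:${ELASTIC_PASSWORD}" "https://${ES_NAME}:9200", which gives up after 30 attempts instead of looping forever.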

Create ELK Stack 8 Docker Compose Environment Variables

You can define all the dynamic values using environment variables in a .env file. This environment variables file should reside in the same directory as the compose file.

See below how we have assigned actual values to the environment variables referenced in the compose file.

cat .env

# Version of Elastic products

STACK_VERSION=8.11.4

# Set the cluster name

CLUSTER_NAME=elk-docker-cluster

# Set Elasticsearch Node Name

NODE_NAME=es01

# Elasticsearch super user

ES_USER=elastic

# Password for the 'elastic' user (at least 6 characters). No special characters, ! or @ or $.

ELASTIC_PASSWORD=ChangeME

# Elasticsearch container name

ES_NAME=elasticsearch

# Port to expose Elasticsearch HTTP API to the host

ES_PORT=9200

#ES_PORT=127.0.0.1:9200

# Port to expose Kibana to the host

KIBANA_PORT=5601

KIBANA_SERVER_HOST=localhost

# Kibana Encryption. Requires at least 32 characters. Can be generated using `openssl rand -hex 16`

SAVEDOBJECTS_ENCRYPTIONKEY=ca11560aec8410ff002d011c2a172608

REPORTING_ENCRYPTIONKEY=288f06b3a14a7f36dd21563d50ec76d4

SECURITY_ENCRYPTIONKEY=62c781d3a2b2eaee1d4cebcc6bf42b48

# Kibana - Elasticsearch Authentication Credentials for user kibana_system

# Password for the 'kibana_system' user (at least 6 characters). No special characters, ! or @ or $.

KIBANA_USERNAME=kibana_system

KIBANA_PASSWORD=ChangeME

# Domain Suffix for ES Wildcard SSL certs

DOMAIN_SUFFIX=kifarunix-demo.com

# Elasticsearch Certificate Validity Period

DAYS=3650
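A variable that is missing from the .env file is substituted with an empty string, which can be hard to spot later. A minimal sketch of a pre-flight check, assuming a hypothetical helper named check_env_file (not part of Docker Compose):

```shell
#!/usr/bin/env bash
# check_env_file is a hypothetical helper, not part of Docker Compose.
# It verifies that every variable named after the file path has a non-empty
# KEY=value line in the file, and reports the first one that is missing.
check_env_file() {
  local file=$1 key
  shift
  for key in "$@"; do
    # Commented-out lines (e.g. "#ES_PORT=...") do not match the anchor.
    if ! grep -Eq "^${key}=.+" "$file"; then
      echo "missing or empty: ${key}" >&2
      return 1
    fi
  done
  return 0
}
```

For this setup it could be run as check_env_file .env STACK_VERSION CLUSTER_NAME NODE_NAME ES_NAME ELASTIC_PASSWORD KIBANA_PASSWORD before starting the stack.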

Define Logstash Data Processing Pipeline

In this setup, we will configure Logstash to receive event data from Beats (Filebeat to be specific) for further processing and stashing onto the search analytics engine, Elasticsearch.

Note that Logstash is only necessary if you need to apply further processing to your event data. For example, extracting custom fields from the event data, mutating the event data etc. Otherwise, you can push the data directly to Elasticsearch from Beats.

So we use a sample Logstash processing pipeline for ModSecurity audit logs;

mkdir -p logstash/conf.d

vim logstash/conf.d/modsec.conf

input {
  beats {
    port => 5044
  }
}

filter {
  # Extract event time, log severity level, source of attack (client), and the alert message.
  grok {
    match => { "message" => "(?<event_time>%{MONTH}\s%{MONTHDAY}\s%{TIME}\s%{YEAR})\] \[\:%{LOGLEVEL:log_level}.*client\s%{IPORHOST:src_ip}:\d+]\s(?<alert_message>.*)" }
  }
  # Extract Rules File from Alert Message
  grok {
    match => { "alert_message" => "(?<rulesfile>\[file \"(/.+.conf)\"\])" }
  }
  grok {
    match => { "rulesfile" => "(?<rules_file>/.+.conf)" }
  }
  # Extract Attack Type from Rules File
  grok {
    match => { "rulesfile" => "(?<attack_type>[A-Z]+-[A-Z][^.]+)" }
  }
  # Extract Rule ID from Alert Message
  grok {
    match => { "alert_message" => "(?<ruleid>\[id \"(\d+)\"\])" }
  }
  grok {
    match => { "ruleid" => "(?<rule_id>\d+)" }
  }
  # Extract Attack Message (msg) from Alert Message
  grok {
    match => { "alert_message" => "(?<msg>\[msg \S(.*?)\"\])" }
  }
  grok {
    match => { "msg" => "(?<alert_msg>\"(.*?)\")" }
  }
  # Extract the User/Scanner Agent from Alert Message
  grok {
    match => { "alert_message" => "(?<scanner>User-Agent' \SValue: `(.*?)')" }
  }
  grok {
    match => { "scanner" => "(?<user_agent>:(.*?)\')" }
  }
  grok {
    match => { "alert_message" => "(?<agent>User-Agent: (.*?)\')" }
  }
  grok {
    match => { "agent" => "(?<user_agent>: (.*?)\')" }
  }
  # Extract the Target Host
  grok {
    match => { "alert_message" => "(hostname \"%{IPORHOST:dst_host})" }
  }
  # Extract the Request URI
  grok {
    match => { "alert_message" => "(uri \"%{URIPATH:request_uri})" }
  }
  grok {
    match => { "alert_message" => "(?<ref>referer: (.*))" }
  }
  grok {
    match => { "ref" => "(?<referer> (.*))" }
  }
  mutate {
    # Remove unnecessary characters from the fields.
    gsub => [
      "alert_msg", "[\"]", "",
      "user_agent", "[:\"'`]", "",
      "user_agent", "^\s*", "",
      "referer", "^\s*", ""
    ]
    # Remove the Unnecessary fields so we can only remain with
    # General message, rules_file, attack_type, rule_id, alert_msg, user_agent, hostname (being attacked), Request URI and Referer.
    remove_field => [ "alert_message", "rulesfile", "ruleid", "msg", "scanner", "agent", "ref" ]
  }
}

output {
  elasticsearch {
    hosts => ["https://${ES_NAME}:9200"]
    user => "${ELASTICSEARCH_USERNAME}"
    password => "${ELASTICSEARCH_PASSWORD}"
    ssl => true
    cacert => "config/certs/ca/ca.crt"
  }
}
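Several fields in the pipeline above are extracted in two grok steps: first the whole bracketed tag, then only the value inside it. The shell sketch below illustrates the same idea for the rule ID using a bash regular expression. extract_rule_id is a hypothetical helper and the sample alert text in the usage note is made up for illustration:

```shell
#!/usr/bin/env bash
# extract_rule_id is a hypothetical helper that mirrors the two rule-ID grok
# steps: find the [id "NNN"] tag, then keep only the digits inside it.
extract_rule_id() {
  local line=$1
  local re='\[id "([0-9]+)"\]'
  if [[ $line =~ $re ]]; then
    # BASH_REMATCH[1] holds the first capture group (the digits).
    printf '%s\n' "${BASH_REMATCH[1]}"
  else
    return 1
  fi
}
```

For example, extract_rule_id 'ModSecurity: Warning. [id "941100"] [msg "XSS Attack"]' prints 941100, which is the value the pipeline stores in rule_id.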

Verify Docker Compose File Syntax

Check Docker Compose file Syntax;

docker-compose -f docker-compose.yml config

If there is any error, it will be printed. Otherwise, the Docker compose file contents are printed to standard output.

If you are in the same directory where docker-compose.yml file is located, simply run;

docker-compose config
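If you only want a pass or fail answer, for example in a script, the config subcommand also accepts -q to validate without printing the file. A minimal sketch, assuming a hypothetical wrapper named validate_compose and an assumed COMPOSE_BIN override for hosts where the binary has another name:

```shell
#!/usr/bin/env bash
# validate_compose is a hypothetical wrapper around `docker-compose config`.
# The -q flag asks Compose to validate only, without printing the file.
# COMPOSE_BIN is an assumed override for hosts that use another binary name.
validate_compose() {
  local file=${1:-docker-compose.yml}
  if "${COMPOSE_BIN:-docker-compose}" -f "$file" config -q; then
    echo "OK: $file"
  else
    echo "INVALID: $file" >&2
    return 1
  fi
}
```

Calling validate_compose docker-compose.yml from $HOME/elastic-docker reports whether the file parses, and the non-zero exit status on failure makes it usable in an && chain before docker-compose up.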

Deploy ELK Stack 8 Using Docker Compose file

Everything is now set up and we are ready to build and start our Elastic Stack instances using the docker-compose up command.

Navigate to the main directory where the Docker compose file is located. In my setup the directory is $HOME/elastic-docker.

cd $HOME/elastic-docker

docker-compose up

The command creates and starts the containers in foreground.

Sample output;

...

elasticsearch | {"@timestamp":"2024-01-16T19:02:40.690Z", "log.level": "INFO", "current.health":"GREEN","message":"Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[.security-7][0]]]).","previous.health":"YELLOW","reason":"shards started [[.security-7][0]]" , "ecs.version": "1.2.0","service.name":"ES_ECS","event.dataset":"elasticsearch.server","process.thread.name":"elasticsearch[es01][masterService#updateTask][T#1]","log.logger":"org.elasticsearch.cluster.routing.allocation.AllocationService","elasticsearch.cluster.uuid":"lhhCqqyfRrOuJ5lIHuOCww","elasticsearch.node.id":"Q9-_fRo7Q6awepd8PYGvnQ","elasticsearch.node.name":"es01","elasticsearch.cluster.name":"elk-docker-cluster"}

...

...

logstash | [2024-01-16T19:03:03,172][INFO ][logstash.javapipeline ][main] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>500, "pipeline.sources"=>["/usr/share/logstash/pipeline/modsec.conf"], :thread=>"#"}

logstash | [2024-01-16T19:03:03,927][INFO ][logstash.javapipeline ][main] Pipeline Java execution initialization time {"seconds"=>0.75}

logstash | [2024-01-16T19:03:03,939][INFO ][logstash.inputs.beats ][main] Starting input listener {:address=>"0.0.0.0:5044"}

logstash | [2024-01-16T19:03:03,944][INFO ][logstash.javapipeline ][main] Pipeline started {"pipeline.id"=>"main"}

logstash | [2024-01-16T19:03:03,962][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

logstash | [2024-01-16T19:03:04,023][INFO ][org.logstash.beats.Server][main][5c2281c3c7dc3fa0cecb74e0eb418d31f5ca88d19e9d33bf9ac5902cf7ffec49] Starting server on port: 5044

...

...

{"log":"[2024-01-16T19:03:08.805+00:00][INFO ][plugins.alerting] Installing ILM policy .alerts-ilm-policy\n","stream":"stdout","time":"2024-01-16T19:03:08.80584097Z"}

{"log":"[2024-01-16T19:03:08.807+00:00][INFO ][plugins.alerting] Installing component template .alerts-framework-mappings\n","stream":"stdout","time":"2024-01-16T19:03:08.807954546Z"}

{"log":"[2024-01-16T19:03:08.809+00:00][INFO ][plugins.alerting] Installing component template .alerts-legacy-alert-mappings\n","stream":"stdout","time":"2024-01-16T19:03:08.809793742Z"}

{"log":"[2024-01-16T19:03:08.826+00:00][INFO ][plugins.alerting] Installing component template .alerts-ecs-mappings\n","stream":"stdout","time":"2024-01-16T19:03:08.827201234Z"}

{"log":"[2024-01-16T19:03:08.839+00:00][INFO ][plugins.ruleRegistry] Installing component template .alerts-technical-mappings\n","stream":"stdout","time":"2024-01-16T19:03:08.840094086Z"}

{"log":"[2024-01-16T19:03:10.330+00:00][INFO ][http.server.Kibana] http server running at http://0.0.0.0:5601\n","stream":"stdout","time":"2024-01-16T19:03:10.330814866Z"}

...

When you stop the docker-compose up command, all containers are stopped.

From another console, you can check the running containers. Note that you can use the docker-compose command as you would the docker command. However, to use it, you need to be in the same directory as the compose file or specify the path using the -f option.

docker-compose ps

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

elasticsearch docker.elastic.co/elasticsearch/elasticsearch:8.11.4 "/bin/tini -- /usr/l…" elasticsearch 21 minutes ago Up 17 minutes (healthy) 0.0.0.0:9200->9200/tcp, :::9200->9200/tcp, 0.0.0.0:9300->9300/tcp, :::9300->9300/tcp

kibana docker.elastic.co/kibana/kibana:8.11.4 "/bin/tini -- /usr/l…" kibana 21 minutes ago Up 16 minutes 0.0.0.0:5601->5601/tcp, :::5601->5601/tcp

logstash docker.elastic.co/logstash/logstash:8.11.4 "/usr/local/bin/dock…" logstash 21 minutes ago Up 16 minutes 0.0.0.0:5044->5044/tcp, :::5044->5044/tcp, 9600/tcp

From the output, you can see that the containers are running and their ports exposed on the host (any IP address) to allow external access.

You can press ctrl+c to cancel the foreground command and stop the containers. You can then run the stack containers in the background using the -d option.

To relaunch the containers in the background;

docker-compose up -d

[+] Running 4/4

✔ Container elkstack-docker-es_setup-1 Healthy 0.0s

✔ Container elasticsearch Healthy 0.0s

✔ Container logstash Started 0.0s

✔ Container kibana Started

You can as well list the running containers using docker command;

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

40516edb2827 docker.elastic.co/logstash/logstash:8.11.4 "/usr/local/bin/dock…" 24 minutes ago Up 22 seconds 0.0.0.0:5044->5044/tcp, :::5044->5044/tcp, 9600/tcp logstash

2efaeccc67a3 docker.elastic.co/kibana/kibana:8.11.4 "/bin/tini -- /usr/l…" 24 minutes ago Up 22 seconds 0.0.0.0:5601->5601/tcp, :::5601->5601/tcp kibana

a3f453974592 docker.elastic.co/elasticsearch/elasticsearch:8.11.4 "/bin/tini -- /usr/l…" 24 minutes ago Up 53 seconds (healthy) 0.0.0.0:9200->9200/tcp, :::9200->9200/tcp, 0.0.0.0:9300->9300/tcp, :::9300->9300/tcp elasticsearch

To find the details of each container, use docker inspect <container-name> command. For example

docker inspect elasticsearch
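The full inspect output is long. When you only need one value, such as the health status that the compose health check reports, docker inspect accepts a --format template. A minimal sketch, assuming a hypothetical helper named container_health and an assumed DOCKER_BIN override:

```shell
#!/usr/bin/env bash
# container_health is a hypothetical helper that prints the health status
# (for example "healthy") of a container that defines a health check.
# DOCKER_BIN is an assumed override, handy for testing without Docker.
container_health() {
  local name=$1
  "${DOCKER_BIN:-docker}" inspect --format '{{.State.Health.Status}}' "$name"
}
```

In this setup only the containers that define a health check, such as elasticsearch, have this field populated.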

To get the logs of a container, use the command docker logs [OPTIONS] CONTAINER. For example, to get Elasticsearch container logs;

docker logs elasticsearch

If you need to check a specific number of log lines, you can use the --tail option. For example, to get the last 50 log lines and keep following new output;

docker logs --tail 50 -f elasticsearch

Or check the /var/lib/docker/containers/<long-docker-id>/<long-docker-id>-json.log file.

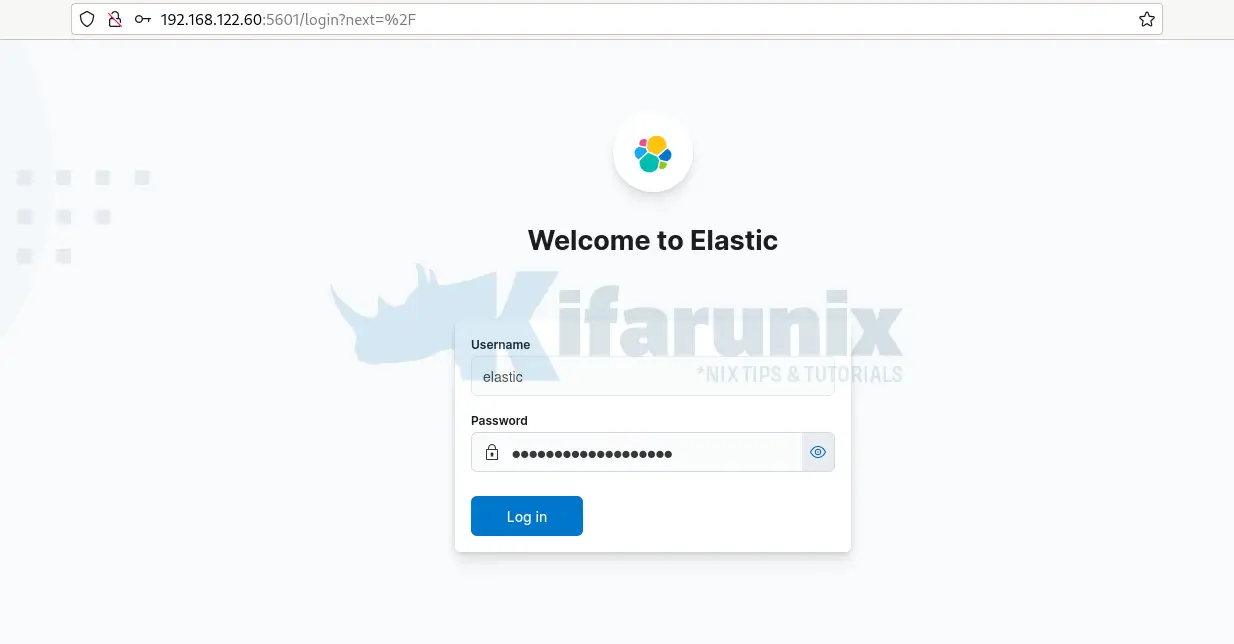

Accessing Kibana Container from Browser

Once the stack is up and running, you can access Kibana externally using the host IP address and the port on which it is exposed. In our setup, Kibana container port 5601 is exposed on the same port on the host;

docker port kibana

5601/tcp -> 0.0.0.0:5601

5601/tcp -> [::]:5601

This means that you can access the Kibana container port via any interface on the host, on port 5601. Similarly, you can check the port exposure of the other containers using the same command.

Therefore, you can access Kibana using your Container host address, http://<IP-Address>:5601.

Log in as the elastic user. You can add other accounts thereafter.

And there you go!

Sending Data Logs to Elastic Stack

Since we configured Logstash to receive event data from Beats, we will configure Filebeat to forward events.

We already covered how to install and configure Filebeat to forward event data in our previous guides;

Install and Configure Filebeat on CentOS 8

Install Filebeat on Fedora 30/Fedora 29/CentOS 7

Install and Configure Filebeat 7 on Ubuntu 18.04/Debian 9.8

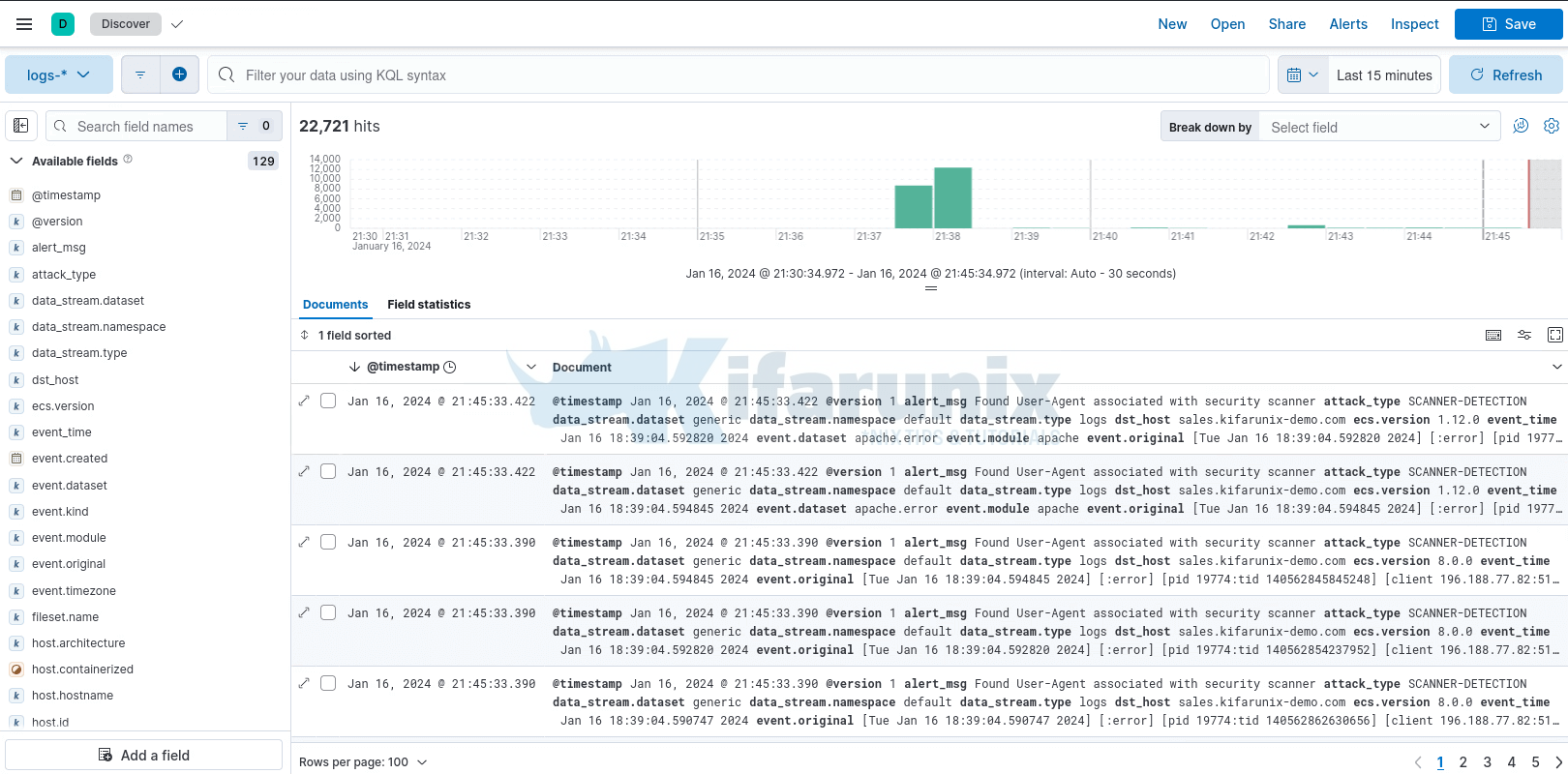

Once you forward data to your Logstash container, the next thing you need to do is create a Kibana data view (called an index pattern in earlier releases).

Open the menu, then go to Stack Management > Kibana > Data Views.

Once done, head to the Discover menu to view your data. You should now be able to see your Logstash custom fields populated.

That marks the end of our tutorial on how to deploy a single node Elastic Stack cluster on Docker Containers.

Other Tutorials

Configure Kibana Dashboards/Visualizations to use Custom Index