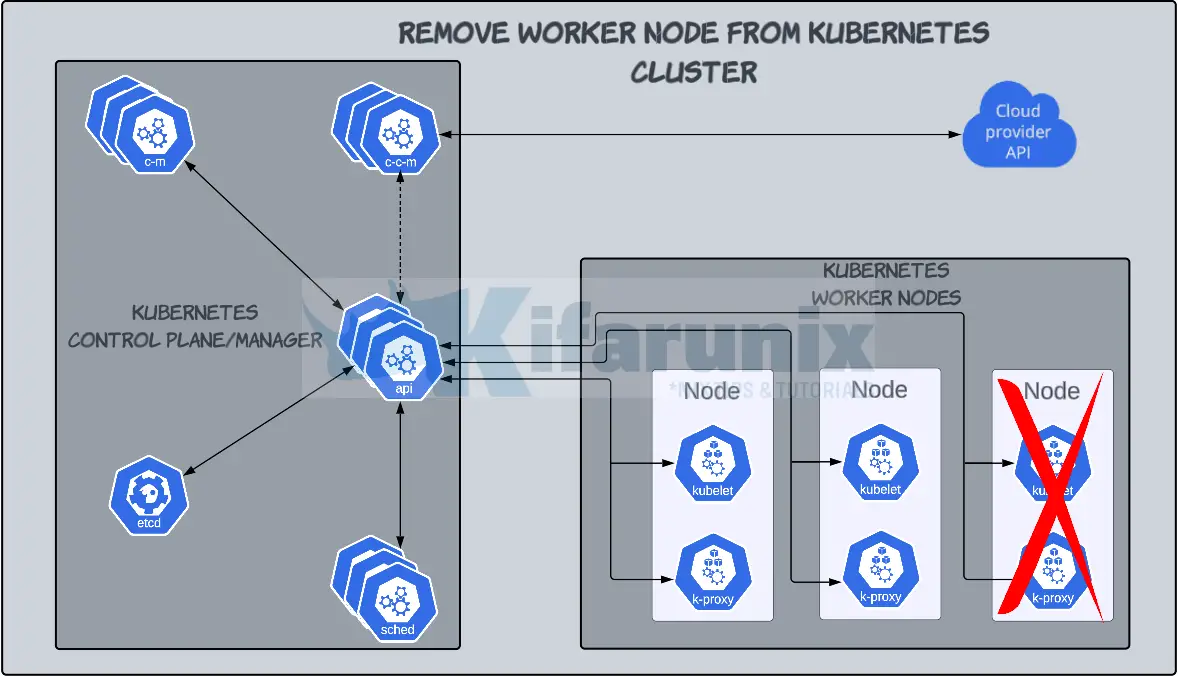

In this tutorial, you will learn how to gracefully remove a worker node from a Kubernetes cluster. If you manage a Kubernetes cluster, you may at some point need to remove a worker node from it. The process may vary slightly depending on the tool or platform used to deploy the cluster. For example, removing a worker node from a kubeadm cluster might differ from removing one from a cluster deployed using GKE, Rancher, OpenShift, Kubermatic, or kops.

Removing Worker Node from Kubernetes Cluster Gracefully

In this guide, we will learn how to safely, or gracefully, remove a worker node from a Kubernetes cluster created with kubeadm.

So, what are the steps for removing a worker node from a Kubernetes cluster gracefully?

Prepare the Node for Removal

In a production environment, you can’t just wake up and decide to remove a node that is running production workloads. You need to prepare for the removal in order to minimize the risk of downtime or performance issues in the cluster.

Preparing the node for removal from a Kubernetes cluster involves various tasks such as;

- Ensure that there are sufficient resources in the cluster to support the cluster workloads.

- Ensure that services hosted on the node being removed are distributed across the other Kubernetes cluster nodes.

- Backup any data and configurations on the node being removed from the cluster.

- Ensure that removal of the node doesn’t affect any networking settings on the cluster.
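Some of these checks can be approximated from the command line. The following is a minimal sketch, not a complete readiness check: the helper name node_preflight is hypothetical, and it only lists the Pods scheduled on the node and the allocatable CPU and memory reported by each node.

```shell
# Hypothetical helper: show what currently runs on a node before removing it.
node_preflight() {
    node="$1"
    # Pods currently scheduled on the node, across all namespaces.
    kubectl get pods --all-namespaces -o wide \
        --field-selector "spec.nodeName=${node}"
    # Allocatable CPU and memory per node, to judge the remaining capacity.
    kubectl get nodes \
        -o custom-columns=NAME:.metadata.name,CPU:.status.allocatable.cpu,MEMORY:.status.allocatable.memory
}
```

For example, node_preflight wk01.kifarunix-demo.com prints both listings for the node used in this guide.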

Drain the Worker Node

Before you can remove a worker node from a Kubernetes cluster, you need to migrate all the Pods scheduled on that node to other nodes in the cluster. This is called draining a node, and it is one of the voluntary disruption actions in Kubernetes. Draining helps keep the workloads running while the node is decommissioned.

kubectl drain is the command used to evict the Pods running on the node marked for decommissioning so that they are rescheduled onto other cluster nodes.

Run the command from any machine where kubectl is configured with administrative access to the cluster, such as the Kubernetes control plane/master node.

The syntax of the kubectl drain command is;

kubectl drain NODE [options]

List the nodes on the cluster;

kubectl get nodes

Sample output;

NAME STATUS ROLES AGE VERSION

master.kifarunix-demo.com Ready control-plane 6d16h v1.27.1

wk01.kifarunix-demo.com Ready worker 6d16h v1.27.1

wk02.kifarunix-demo.com Ready <none> 6d15h v1.27.1

wk03.kifarunix-demo.com Ready <none> 6d15h v1.27.1

You can then run the command below to drain the worker node marked for decommissioning.

For example, let’s drain wk01 from the example output above;

kubectl drain wk01.kifarunix-demo.com

The ‘drain’ command waits for graceful termination. You should not operate on the machine until the command completes.

The drain command will also cordon the node. This means that the node is marked as unschedulable, which prevents the Kubernetes scheduler from placing any new Pods onto it.
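Cordoning can also be done on its own, which is handy if you want to stop new Pods from landing on the node before draining, or to put the node back into service if you abandon the removal. A small sketch using the kubectl cordon and kubectl uncordon subcommands; the wrapper names are hypothetical.

```shell
# Hypothetical wrappers around the kubectl cordon/uncordon subcommands.

# Mark the node unschedulable without evicting anything from it.
pause_scheduling() {
    kubectl cordon "$1"
}

# Make the node schedulable again, e.g. to roll back an aborted removal.
resume_scheduling() {
    kubectl uncordon "$1"
}
```

Neither wrapper touches the Pods already running on the node; only a drain evicts them.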

If the node has DaemonSet-managed Pods, the drain command will not proceed unless you pass --ignore-daemonsets, and even then it does not delete those Pods, because the DaemonSet controller would immediately recreate them. Similarly, if there are any Pods that are neither mirror Pods nor managed by a replication controller, replica set, daemon set, stateful set, or job, then drain will not delete any Pods unless you use --force. The --force option also allows deletion to proceed if the managing resource of one or more Pods is missing.

Sample output of the drain command above;

node/wk01.kifarunix-demo.com cordoned

error: unable to drain node "wk01.kifarunix-demo.com" due to error:cannot delete DaemonSet-managed Pods (use --ignore-daemonsets to ignore): calico-system/calico-node-g4vlv, calico-system/csi-node-driver-9b9xx, kube-system/kube-proxy-sn66b, continuing command...

There are pending nodes to be drained:

wk01.kifarunix-demo.com

cannot delete DaemonSet-managed Pods (use --ignore-daemonsets to ignore): calico-system/calico-node-g4vlv, calico-system/csi-node-driver-9b9xx, kube-system/kube-proxy-sn66b

To forcefully drain the node, add the --force and --ignore-daemonsets options to the command.

kubectl drain wk01.kifarunix-demo.com --force --ignore-daemonsets

Use these flags with caution, as they can lead to disruption of running Pods.

As already mentioned, let the command terminate gracefully.

Note that this process may take some time to complete.
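Because a drain can run for a long time, it may help to bound it. The sketch below wraps the drain in a hypothetical helper, drain_node, that passes --timeout so the command gives up instead of waiting indefinitely. The 300s default is an arbitrary assumption, and --delete-emptydir-data is included on the assumption that losing emptyDir contents on the evicted Pods is acceptable.

```shell
# Hypothetical helper: drain a node but give up after a bounded wait.
drain_node() {
    node="$1"
    wait_for="${2:-300s}"   # assumed default; tune for your workloads
    kubectl drain "$node" \
        --ignore-daemonsets \
        --delete-emptydir-data \
        --timeout="$wait_for"
}
```

For example, drain_node wk01.kifarunix-demo.com 10m allows up to ten minutes. --force is left out deliberately, so Pods without a controller still block the drain and have to be handled explicitly.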

Check more options using the --help option.

kubectl drain --help

Once the command completes, you can check the node's status to confirm that it no longer accepts new workloads; kubectl get nodes shows SchedulingDisabled in the STATUS column for a cordoned node.
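The same check can be scripted by reading the node's spec.unschedulable field, which is set to true on a cordoned node. A minimal sketch; the function name is_cordoned is hypothetical.

```shell
# Hypothetical helper: succeed only if the node is marked unschedulable.
is_cordoned() {
    # The field is absent (empty output) on a schedulable node.
    [ "$(kubectl get node "$1" -o jsonpath='{.spec.unschedulable}')" = "true" ]
}
```

It can be used in a condition, for instance: if is_cordoned wk01.kifarunix-demo.com; then echo "cordoned"; fi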

There are also a number of constraints that may affect the drain process of a node;

- Pod Disruption Budgets (PDBs): A PDB limits how many Pods of an application can be unavailable at the same time during voluntary disruptions such as node maintenance or upgrades. Draining a node cannot violate a PDB, as this could result in a service disruption, so the drain command may appear to hang while it keeps retrying the evictions. You can check for PDBs in all namespaces with kubectl get poddisruptionbudget --all-namespaces.

- Resource Constraints: The cluster must have enough resources to accommodate the Pods being evicted from the node being drained.

- Node Affinity/Anti-affinity: These are Pod scheduling rules. Affinity defines conditions under which a Pod is scheduled on a node, while anti-affinity defines conditions under which a Pod should not be scheduled on a node. Evicted Pods are rescheduled only onto nodes that satisfy these rules.

- DaemonSets: A DaemonSet ensures that a copy of a Pod runs on every (or every selected) node in the cluster. The drain command does not evict DaemonSet-managed Pods, so you need to pass --ignore-daemonsets for the drain to proceed.

- Taints/Tolerations: Taints work in the opposite direction to node affinity. They are set on nodes to prevent certain Pods from being scheduled there. Tolerations, on the other hand, are applied to Pods to allow them to be scheduled on nodes with matching taints. Evicted Pods can only be rescheduled onto tainted nodes if they carry matching tolerations.
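Of the constraints above, a PDB that currently allows zero disruptions is the one most likely to make a drain hang. The sketch below lists such PDBs by reading the disruptionsAllowed field from each PDB's status; the function name blocking_pdbs is hypothetical.

```shell
# Hypothetical helper: print namespace/name of every PDB that currently
# allows no voluntary disruptions and may therefore block a drain.
blocking_pdbs() {
    kubectl get poddisruptionbudget --all-namespaces \
        -o jsonpath='{range .items[*]}{.metadata.namespace}{"/"}{.metadata.name}{" "}{.status.disruptionsAllowed}{"\n"}{end}' |
        awk '$2 == "0" { print $1 }'
}
```

An empty result suggests that no PDB is blocking evictions at that moment; the value changes as Pods become ready or unavailable.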

Delete a Worker Node

Once the node has been successfully drained, you can remove it from the cluster using the kubectl delete command.

kubectl delete node wk01.kifarunix-demo.com

Confirm Removal of Worker Node

You can verify that the node has been removed using the kubectl get nodes command.

kubectl get nodes

NAME STATUS ROLES AGE VERSION

master.kifarunix-demo.com Ready control-plane 6d21h v1.27.1

wk02.kifarunix-demo.com Ready <none> 6d21h v1.27.1

wk03.kifarunix-demo.com Ready <none> 6d21h v1.27.1

wk01 is gone!

You can also check Pods;

kubectl get pod --all-namespaces

To list Pods running on a specific node;

kubectl get pods --field-selector spec.nodeName=<node-name>

E.g;

kubectl get pods --field-selector spec.nodeName=wk01.kifarunix-demo.com

You should have now successfully and gracefully removed a node from the kubeadm Kubernetes cluster.
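The confirmation can also be scripted. The following sketch, with the hypothetical name node_removed, looks for the node in the output of kubectl get nodes -o name and uses a separate return code when the API could not be queried at all, so that a failed lookup is not mistaken for a successful removal.

```shell
# Hypothetical helper. Return codes: 0 = node is no longer registered,
# 1 = node is still registered, 2 = the node list could not be fetched.
node_removed() {
    nodes="$(kubectl get nodes -o name)" || return 2
    # -o name prints entries as node/<name>; match the whole line literally.
    if printf '%s\n' "$nodes" | grep -qxF "node/$1"; then
        return 1
    fi
    return 0
}
```

Note that the field-selector commands above only look at the current namespace; add --all-namespaces to check every namespace for Pods still assigned to the node.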

Optionally Reset the Node

You can optionally remove all the Kubernetes-related configuration from the node.

To do so, log in to the node that was drained and run the command below;

kubeadm reset

Conclusion

In summary, the steps you need to take to gracefully remove a worker node from a cluster are;

- ensure the cluster is ready to support the workloads after removal of the node

- drain the node (kubectl drain <node-name>)

- fix any constraints that might prevent the removal of a node

- delete the node from the cluster
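The drain and delete steps can be chained so that the node is only deleted when the drain succeeds. This is a minimal sketch under that assumption; the function name remove_worker is hypothetical, and it does not replace the preparation and constraint checks listed above.

```shell
# Hypothetical helper: drain a worker node, then delete it from the cluster.
# The delete is skipped if the drain fails.
remove_worker() {
    node="$1"
    kubectl drain "$node" --ignore-daemonsets || return 1
    kubectl delete node "$node"
}
```

Stopping on a failed drain leaves the node cordoned but still registered, so you can fix the blocking constraint and retry, or run kubectl uncordon to put it back into service.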

That marks the end of our guide on removing a worker node from a Kubernetes cluster gracefully.
