In this blog post, we will describe Kubernetes architecture: a high-level overview of Kubernetes cluster components. Organizations are continually adopting cloud-native technologies, with containerization becoming the de facto way of packaging and deploying applications. However, managing a large number of containers can be challenging, and this is where Kubernetes comes in. Kubernetes is an open-source container orchestration system that provides a platform for automating the deployment, scaling, and management of containers across multiple hosts. But what makes up a Kubernetes cluster?

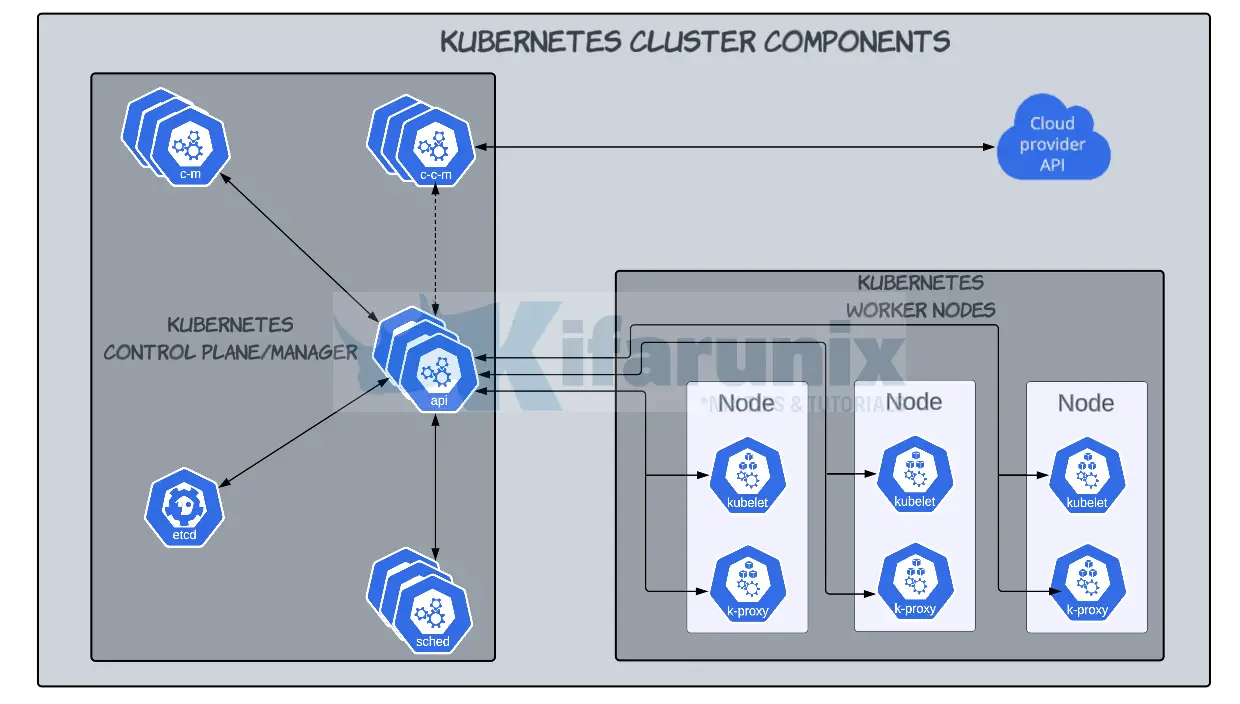

Kubernetes Architecture: Overview of Kubernetes Cluster Components

Kubernetes Architecture Overview

When you deploy Kubernetes, you simply create a Kubernetes cluster. A Kubernetes cluster is composed of several components that work together to provide a scalable, fault-tolerant, and highly available environment for running containers.

As can be seen in the architecture diagram above, Kubernetes uses a master-worker architecture. This was traditionally referred to as a master-slave architecture.

- The master node hosts the Kubernetes control plane, which is responsible for controlling and managing all cluster activities, such as:

  - scheduling/rescheduling Pods on the worker nodes (a Pod is a single instance of an application; containers run inside a Pod, which then runs on a node)

  - monitoring the health of worker nodes in the cluster

  - creating, updating, and deleting services in the cluster

  - replication of the Pods in the cluster…

- The worker nodes host and run the actual containerized workloads, aka Pods. They also provide the compute resources required to run the Pods.

Each node in the cluster can either be a physical or virtual machine.

A cluster must have at least one master node and at least one worker node. For redundancy purposes, at least three worker nodes are recommended in a cluster.
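To make the split between the control plane and the worker nodes more concrete, here is a minimal sketch of how a Pod could be placed on a worker node based on free resources. It is a toy model, not the real kube-scheduler: the `Node` and `Pod` classes, the `schedule` function, and the "most free CPU wins" policy are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A worker node with a fixed amount of CPU and memory (hypothetical model)."""
    name: str
    cpu_millicores: int
    memory_mib: int
    pods: list = field(default_factory=list)


@dataclass
class Pod:
    """A Pod together with the resources it requests (simplified, hypothetical)."""
    name: str
    cpu_millicores: int
    memory_mib: int


def free_resources(node):
    """Return (free CPU, free memory) after subtracting requests of placed Pods."""
    used_cpu = sum(p.cpu_millicores for p in node.pods)
    used_mem = sum(p.memory_mib for p in node.pods)
    return node.cpu_millicores - used_cpu, node.memory_mib - used_mem


def schedule(pod, nodes):
    """Place the Pod on the fitting node with the most free CPU; return it or None."""
    candidates = []
    for node in nodes:
        free_cpu, free_mem = free_resources(node)
        if free_cpu >= pod.cpu_millicores and free_mem >= pod.memory_mib:
            candidates.append((free_cpu, node))
    if not candidates:
        return None  # no node fits; the Pod cannot be placed yet
    _, best = max(candidates, key=lambda c: c[0])
    best.pods.append(pod)
    return best


workers = [Node("worker-1", 2000, 4096), Node("worker-2", 1000, 2048)]
placements = [
    (name, getattr(schedule(Pod(name, 800, 1024), workers), "name", None))
    for name in ("web-1", "web-2", "web-3", "web-4")
]
print(placements)
```

In this sketch the fourth Pod is left unplaced because neither worker has enough free CPU, which is the situation where adding another worker node would help.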

Overview of Kubernetes Cluster Components

The various Kubernetes components that run on either the control plane or the worker nodes are outlined in the diagram above.

Master Node/Control Plane Components

The control plane runs various Kubernetes components, such as:

- Kubernetes API server (kube-apiserver)

- etcd

- Kubernetes scheduler (kube-scheduler, abbreviated sched)

- Kubernetes controller manager (kube-controller-manager, abbreviated c-m)

- Optional cloud controller manager (cloud-controller-manager, abbreviated c-c-m)

So, what is the role of each component?

- Kubernetes API Server (kube-apiserver): The kube-apiserver exposes the Kubernetes API, which is the front end of the Kubernetes control plane. It handles all the API requests from the various clients interacting with the Kubernetes cluster.

- Cluster State Store (etcd): This is a distributed key-value store that stores the entire state of the Kubernetes cluster. It provides a consistent and highly available storage solution for the Kubernetes API server.

- Kubernetes Scheduler: The kube-scheduler is responsible for scheduling Pods onto worker nodes based on resource availability.

- Kubernetes controller manager: The kube-controller-manager is responsible for managing various controllers, such as:

  - Replication Controller: ensures that a specified number of Pod replicas are running at any one time.

  - Deployment Controller: controls the state of Pods/ReplicaSets by changing the actual state to the desired state at a controlled rate.

  - Job Controller: responsible for creating one or more Pods to perform specific tasks, and ensures that the specified number of successful completions is achieved before terminating the Job.

  - Node Controller: responsible for noticing and responding when nodes go down.

  - DaemonSet Controller: ensures that a copy of a Pod runs on every node (or on a selected subset of nodes) in a Kubernetes cluster.

  - And other controllers.

- The optional cloud controller manager acts as a bridge between cloud providers, such as AWS and GCP, and the Kubernetes API server. It is responsible for managing various cloud resources such as load balancers, volumes, and network interfaces.
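The controllers listed above share one idea: compare the desired state with the actual state and act on the difference. The following is a minimal sketch of that idea for a replica count. The `reconcile` function and the `web-N` Pod names are hypothetical, and real controllers watch the API server rather than receiving plain lists.

```python
import itertools


def reconcile(desired_replicas, running_pods, name_counter):
    """Return the Pod list after one pass that moves actual state toward desired."""
    pods = list(running_pods)
    while len(pods) < desired_replicas:   # too few Pods: create replacements
        pods.append(f"web-{next(name_counter)}")
    while len(pods) > desired_replicas:   # too many Pods: remove the surplus
        pods.pop()
    return pods


counter = itertools.count(1)
pods = reconcile(3, [], counter)             # initial rollout of three replicas
pods.remove("web-2")                         # simulate one Pod crashing
healed = reconcile(3, pods, counter)         # the loop notices and replaces it
scaled_down = reconcile(1, healed, counter)  # desired state lowered to one replica
print(healed, scaled_down)
```

Running the same function repeatedly with an unchanged desired count leaves the list untouched, which is why this style of loop can safely run over and over.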

Worker Node Components

There are a number of components that run on Kubernetes nodes:

- Kubernetes kubelet: The kubelet is an agent that runs on each worker node and is responsible for starting, stopping, and maintaining the containers in a Pod.

- Pod: A Pod is a logical host for one or more containers, which share the same network namespace and storage volumes. Containers within the same Pod can communicate with each other using the loopback network interface, and they can also share the same set of resources, such as CPU, memory, and storage.

- Kubernetes proxy (kube-proxy): This is a network proxy that runs on each worker node. It manages the network rules that control the flow of network traffic to the appropriate container or service based on the Kubernetes service configuration. kube-proxy uses the operating system packet filtering layer if there is one and it is available. Otherwise, kube-proxy forwards the traffic itself.

- Container runtime: The container runtime is responsible for running containers on the node. Examples of container runtimes include Docker Engine, containerd, CRI-O, and Mirantis Container Runtime.
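As a rough illustration of the routing role described for kube-proxy, the sketch below maps a service name to a set of backend Pod addresses and picks one per connection. Everything here is an assumption for illustration: the `ServiceProxy` class, the addresses, and the round-robin choice. The real kube-proxy programs rules in the operating system's packet filtering layer, and its backend selection is not necessarily round-robin.

```python
import itertools


class ServiceProxy:
    """Toy routing table: service name -> cycling iterator over Pod addresses."""

    def __init__(self):
        self._backends = {}

    def set_endpoints(self, service, pod_addresses):
        """Replace the backends for a service, as a rule update would."""
        self._backends[service] = itertools.cycle(list(pod_addresses))

    def route(self, service):
        """Pick the backend Pod address for the next connection to the service."""
        if service not in self._backends:
            raise LookupError(f"no endpoints for service {service!r}")
        return next(self._backends[service])


proxy = ServiceProxy()
proxy.set_endpoints("web", ["10.244.1.5:8080", "10.244.2.7:8080"])
picked = [proxy.route("web") for _ in range(3)]
print(picked)
```

The point of the sketch is only that clients address the service, while the proxy layer decides which Pod actually receives the traffic.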

Add-On Kubernetes Components

These are the components that are used to extend the functionality of a Kubernetes cluster.

Some common add-ons include:

- Kubernetes Cluster DNS: In addition to the DNS servers in your environment, Kubernetes cluster DNS provides DNS services within the Kubernetes cluster. This allows Pods to resolve each other by name. The DNS server is automatically included in DNS searches for each container started by Kubernetes.

- Web UI (Dashboard), which provides a web-based UI for managing a Kubernetes cluster.

- Container resource monitoring, which collects container resource utilization time-series metrics and provides a user interface for browsing the metrics.

- Cluster-level logging, which collects logs from the Kubernetes cluster. You will need separate storage to store, analyze, and query the logs.
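To give a feel for how name resolution through cluster DNS typically looks, here is a small sketch that builds the conventional DNS name of a Service and lists the names a resolver would try for a short name. It assumes the common default cluster domain `cluster.local` and the usual namespace-first search list; the helper functions and the `db`/`prod` names are hypothetical, and a given cluster may be configured differently.

```python
CLUSTER_DOMAIN = "cluster.local"  # common default; assumption, clusters may differ


def service_fqdn(service, namespace, cluster_domain=CLUSTER_DOMAIN):
    """Build the conventional fully qualified DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def search_candidates(name, pod_namespace, cluster_domain=CLUSTER_DOMAIN):
    """List the names tried for a short name, in search-list order."""
    search_list = [
        f"{pod_namespace}.svc.{cluster_domain}",  # same namespace first
        f"svc.{cluster_domain}",
        cluster_domain,
    ]
    return [f"{name}.{suffix}" for suffix in search_list]


fqdn = service_fqdn("db", "prod")
candidates = search_candidates("db", pod_namespace="prod")
print(fqdn)
print(candidates)
```

Under these assumptions, a Pod in the `prod` namespace can reach the `db` Service using just the short name, because the first search candidate already matches the Service's full name.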

Conclusion

And that is it for our high-level overview of Kubernetes architecture. In general, Kubernetes is designed to be highly scalable, fault-tolerant, and flexible. All components work together to provide a powerful platform for running containerized applications at scale.
