Managing resource allocation efficiently in Kubernetes is crucial for optimizing costs and ensuring consistent application performance. Vertical Pod Autoscaler (VPA) addresses these challenges by dynamically adjusting resource requests and limits for individual pods based on their actual usage patterns. This blog post takes you through Kubernetes resource optimization with VPA: how it works, how it differs from Horizontal Pod Autoscaler (HPA), installation steps, configuration, and practical examples.

Optimizing Kubernetes Resources with Vertical Pod Autoscaler (VPA)

What is Vertical Pod Autoscaler (VPA) in Kubernetes?

Vertical Pod Autoscaler (VPA) is a Kubernetes API resource that automatically adjusts the resource requests (CPU and memory) of pods to better match their actual usage patterns. Unlike Horizontal Pod Autoscaler (HPA), which scales the number of pod instances in the cluster based on metrics like CPU or memory utilization, VPA focuses on optimizing the resource requests of individual pods.
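
To make the contrast concrete, here is a minimal Python sketch. The replica formula follows the one given in the Kubernetes HPA documentation; the function names and sample numbers are hypothetical and chosen only for illustration.

```python
import math

def scale_horizontally(replicas, request_m, current_util, target_util):
    """HPA-style: change the pod count, leave each pod's CPU request alone."""
    new_replicas = math.ceil(replicas * current_util / target_util)
    return new_replicas, request_m

def scale_vertically(replicas, request_m, recommended_m):
    """VPA-style: keep the pod count, change the per-pod CPU request."""
    return replicas, recommended_m

# Two pods requesting 100m each, running at 90% utilization against a 60% target.
print(scale_horizontally(2, 100, 90, 60))  # (3, 100)
# The same two pods, with a made-up recommendation of 250m per pod.
print(scale_vertically(2, 100, 250))       # (2, 250)
```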

You can read more about Kubernetes Horizontal Pod Autoscaler (HPA) in the guide below;

Horizontal Scaling in Kubernetes

How does Vertical Pod Autoscaler Work in Kubernetes

VPA analyzes historical resource usage patterns of pods and adjusts their resource requests (CPU and memory) accordingly. It works in a multi-step process to adjust the pods' resource requests. This process is summarized below;

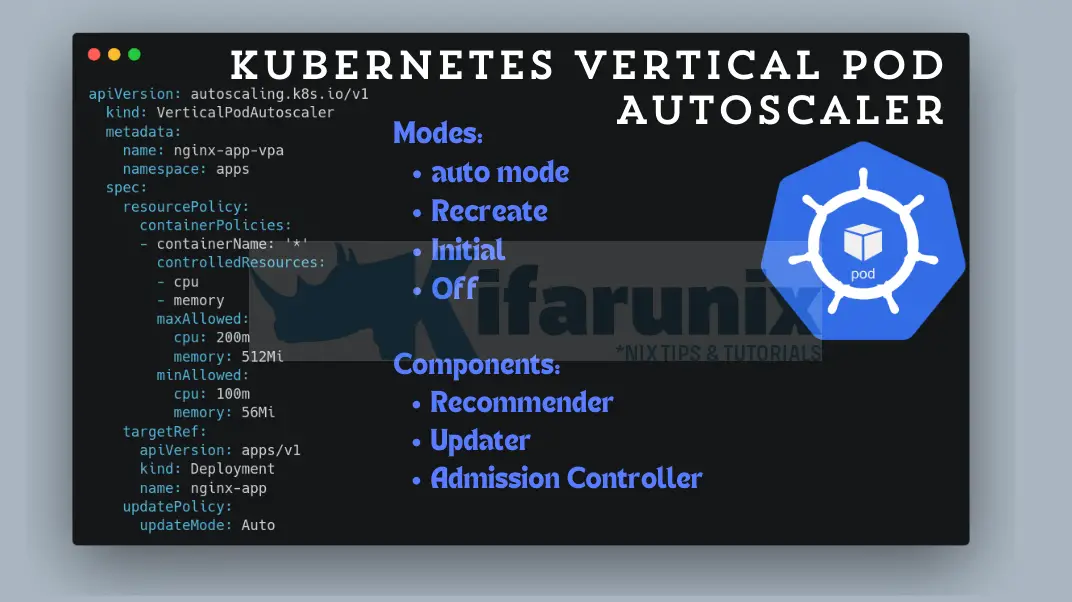

VPA is made up of three components that work together to ensure efficient resource optimization.

- VPA Recommender: The recommender continuously monitors and analyzes the Pods’ resource usage (CPU and memory). Based on this analysis, it provides recommended values for the containers’ CPU and memory requests.

- VPA Updater: The Updater checks whether the managed Pods have the recommended resource requests set. If not, it takes action to adjust the resource requests and limits of the Pods. The action depends on the chosen VPA update mode. There are four modes under which a VPA can operate:

  - Auto (Recommended): VPA assigns resource requests to Pods when they are created, and it also updates the resource requests and limits of existing Pods based on the recommendations from the recommender. Therefore, you don’t necessarily need to set initial requests and limits for Pods managed by VPA in Auto mode.

  - Recreate: Like Auto mode, this mode allows VPA to update the resource requests of existing Pods by evicting and recreating them when their requests differ significantly from the new recommendation. It honors a PodDisruptionBudget (PDB) if one is set. Be cautious when using this mode, as evictions can lead to downtime.

  - Initial: VPA assigns resource requests to Pods only at creation and never updates them once the Pods are running. As such, running Pods keep the values they were given at creation until they are recreated.

  - Off: VPA does not update the resource requirements of the Pods. The recommendations are still calculated and can be inspected in the VPA object; it is up to the admin to apply them. In this mode, you need to set resource requests and limits for your Pods manually.

- VPA Admission Controller: This component works alongside the Kubernetes API server. When a new pod managed by the VPA is created, the admission controller intercepts the request and injects the recommended resource values from the recommender into the pod specification before scheduling the pod on a node. This ensures the pod starts with the optimal resource allocation from the beginning.
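
As a rough picture of what the admission step does, the Python sketch below copies recommended values into a pod spec before it is scheduled. It is only an illustration: the function name `inject_requests` and the dictionary layout are assumptions made for this example, and the real component runs as an admission webhook rather than a Python function.

```python
import copy

def inject_requests(pod_spec, recommendations):
    """Return a copy of pod_spec with recommended requests set per container.

    pod_spec        -- dict with a "containers" list (simplified pod spec)
    recommendations -- dict mapping container name to {"cpu": ..., "memory": ...}
    """
    patched = copy.deepcopy(pod_spec)  # leave the caller's object untouched
    for container in patched["containers"]:
        rec = recommendations.get(container["name"])
        if rec:
            container.setdefault("resources", {})["requests"] = dict(rec)
    return patched

# Sample values for illustration only.
pod = {"containers": [{"name": "nginx", "image": "nginx:latest", "resources": {}}]}
recs = {"nginx": {"cpu": "25m", "memory": "262144k"}}
print(inject_requests(pod, recs)["containers"][0]["resources"])
```

Containers without a matching recommendation are left as they are.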

In essence:

- The VPA recommender gathers resource utilization data from the Kubernetes metrics server. This data typically includes CPU usage, memory usage, and other relevant metrics depending on your configuration.

- Based on this data, the recommender analyzes historical usage patterns for each pod. It considers factors like peak usage, average usage, and resource constraints.

- The recommender then calculates the optimal resource requests and limits for each pod. Resource requests define the minimum amount of resources a pod needs to function properly, while limits set the maximum resources a pod can consume.
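
The idea of turning usage history into a request can be sketched in a few lines of Python. This is a simplified illustration and not the recommender's actual algorithm: the percentile, the safety margin, and the function name `recommend_request` are all assumptions made for the example.

```python
import math

def recommend_request(samples, percentile=90, margin_percent=15):
    """Derive a request from usage samples (hypothetical heuristic).

    samples        -- observed usage values, e.g. CPU in millicores
    percentile     -- share of samples the request should cover (assumed)
    margin_percent -- extra headroom added on top (assumed)
    """
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    # Nearest-rank percentile: the smallest value covering `percentile`% of samples.
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    base = ordered[rank - 1]
    return math.ceil(base * (100 + margin_percent) / 100)

# Made-up CPU usage samples in millicores, including one short spike.
cpu_usage_m = [20, 22, 25, 30, 28, 120, 26, 24, 23, 27]
print(recommend_request(cpu_usage_m))  # 35
```

Using a percentile rather than the maximum keeps a single spike (120m here) from inflating the request.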

VPA vs. HPA: Understanding the Differences

When you deploy your Kubernetes application, you may estimate its resource requirements and probably set the initial CPU and memory requests/limits. But what happens when the workload fluctuates? Two things can happen:

- Over-provisioning: You may overestimate the resource requirements leading to wastage and higher cloud costs.

- Under-provisioning: Underestimating resource requirements can result in pod performance degradation and potential application outages.

With HPA, you have to manually define the resource (CPU and memory) requests and limits, which may lead to the situations above. This is what VPA aims to solve by automatically adjusting resource requests and limits for individual pods based on their actual usage. As such, it is suitable for controlling resource requirements for workloads with fluctuating resource demands.
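
The two failure modes can be expressed as a simple check. The Python sketch below is a hypothetical helper, not part of VPA; the "less than half used" threshold for waste is an assumption picked for illustration.

```python
def provisioning_state(request_m, peak_usage_m):
    """Classify a CPU request against observed peak usage (both in millicores)."""
    if peak_usage_m > request_m:
        return "under-provisioned"
    # Assumed threshold: using less than half of the request counts as waste.
    if peak_usage_m * 2 < request_m:
        return "over-provisioned"
    return "reasonable"

print(provisioning_state(500, 100))   # over-provisioned
print(provisioning_state(100, 1127))  # under-provisioned
print(provisioning_state(200, 150))   # reasonable
```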

While Kubernetes natively supports HPA, you need to manually install VPA using custom resource definitions (CRDs) to utilize it.

Getting Started with VPA in Kubernetes Cluster

So, how can you implement VPA in your Kubernetes cluster to control workload resources?

Install and Setup Kubernetes cluster

You can check any of our guides below to install and set up a Kubernetes cluster.

Install and Setup Kubernetes Cluster on Ubuntu 24.04

Setup Highly Available Kubernetes Cluster with Haproxy and Keepalived

Install and Setup Metrics Server

You need a Metrics Server to collect resource metrics from Pods and make them available to the VPA recommender.

Install Kubernetes Metrics Server on a Kubernetes Cluster

Install VPA Controller

VPA (Vertical Pod Autoscaler) is not natively available in Kubernetes like HPA (Horizontal Pod Autoscaler), so you have to install it.

To do so, install git and clone the kubernetes/autoscaler git repo;

sudo apt install git

git clone https://github.com/kubernetes/autoscaler.git

Then navigate to the VPA directory and install it as follows;

cd autoscaler/vertical-pod-autoscaler/

./hack/vpa-up.sh

Sample installation output;

customresourcedefinition.apiextensions.k8s.io/verticalpodautoscalercheckpoints.autoscaling.k8s.io created

customresourcedefinition.apiextensions.k8s.io/verticalpodautoscalers.autoscaling.k8s.io created

clusterrole.rbac.authorization.k8s.io/system:metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:vpa-actor created

clusterrole.rbac.authorization.k8s.io/system:vpa-status-actor created

clusterrole.rbac.authorization.k8s.io/system:vpa-checkpoint-actor created

clusterrole.rbac.authorization.k8s.io/system:evictioner created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/system:vpa-actor created

clusterrolebinding.rbac.authorization.k8s.io/system:vpa-status-actor created

clusterrolebinding.rbac.authorization.k8s.io/system:vpa-checkpoint-actor created

clusterrole.rbac.authorization.k8s.io/system:vpa-target-reader created

clusterrolebinding.rbac.authorization.k8s.io/system:vpa-target-reader-binding created

clusterrolebinding.rbac.authorization.k8s.io/system:vpa-evictioner-binding created

serviceaccount/vpa-admission-controller created

serviceaccount/vpa-recommender created

serviceaccount/vpa-updater created

clusterrole.rbac.authorization.k8s.io/system:vpa-admission-controller created

clusterrolebinding.rbac.authorization.k8s.io/system:vpa-admission-controller created

clusterrole.rbac.authorization.k8s.io/system:vpa-status-reader created

clusterrolebinding.rbac.authorization.k8s.io/system:vpa-status-reader-binding created

deployment.apps/vpa-updater created

deployment.apps/vpa-recommender created

Generating certs for the VPA Admission Controller in /tmp/vpa-certs.

Certificate request self-signature ok

subject=CN = vpa-webhook.kube-system.svc

Uploading certs to the cluster.

secret/vpa-tls-certs created

Deleting /tmp/vpa-certs.

deployment.apps/vpa-admission-controller created

service/vpa-webhook created

Confirm that the main VPA components have been installed under the kube-system namespace;

kubectl get pod -n kube-system

...

vpa-admission-controller-c5c5b4fcc-lcb5p 1/1 Running 0 5m38s

vpa-recommender-6c4585968-l4jnk 1/1 Running 0 5m39s

vpa-updater-7686fd5bf9-kmgxt 1/1 Running 0 5m39s

Create VPA Custom Resource

Let’s create a VPA resource to specify which deployments or pods VPA should manage.

In my example setup, we have an Nginx app deployment in the apps namespace.

kubectl get deployment -n apps

NAME        READY   UP-TO-DATE   AVAILABLE   AGE
nginx-app   1/1     1            1           17d

kubectl get deployment nginx-app -n apps -o yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "8"
  creationTimestamp: "2024-06-17T07:28:33Z"
  generation: 15
  labels:
    app: nginx-app
  name: nginx-app
  namespace: apps
  resourceVersion: "3811115"
  uid: d0437a23-6574-4d3f-b1b9-d9995f7188ca
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: nginx-app
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx-app
    spec:
      containers:
      - image: nginx:latest
        imagePullPolicy: Always
        name: nginx
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: html-volume
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
      - configMap:
          defaultMode: 420
          name: html-page
        name: html-volume
status:
  availableReplicas: 1
  conditions:
  - lastTransitionTime: "2024-07-01T20:29:21Z"
    lastUpdateTime: "2024-07-01T20:29:21Z"
    message: Deployment has minimum availability.
    reason: MinimumReplicasAvailable
    status: "True"
    type: Available
  - lastTransitionTime: "2024-06-17T07:28:33Z"
    lastUpdateTime: "2024-07-04T17:16:31Z"
    message: ReplicaSet "nginx-app-6ff7b5d8f6" has successfully progressed.
    reason: NewReplicaSetAvailable
    status: "True"
    type: Progressing
  observedGeneration: 15
  readyReplicas: 1
  replicas: 1
  updatedReplicas: 1

As you can see from our Deployment details above, we haven’t defined the resource requests and limits because we are going to create an Auto mode VPA.

This is our VPA custom resource manifest, named nginx-app-vpa, for auto-scaling the Nginx deployment workload;

cat vpa-auto.yaml

apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: nginx-app-vpa
  namespace: apps
spec:
  targetRef:
    apiVersion: "apps/v1"
    kind: Deployment
    name: nginx-app
  updatePolicy:
    updateMode: "Auto"

Apply the manifest file;

kubectl apply -f vpa-auto.yaml

Confirm;

kubectl get vpa -n apps

NAME            MODE   CPU   MEM   PROVIDED   AGE
nginx-app-vpa   Auto                          6s

You can describe it to see more details;

kubectl describe vpa nginx-app-vpa -n apps

Name:         nginx-app-vpa
Namespace:    apps
Labels:       <none>
Annotations:  <none>
API Version:  autoscaling.k8s.io/v1
Kind:         VerticalPodAutoscaler
Metadata:
  Creation Timestamp:  2024-07-04T17:47:23Z
  Generation:          1
  Resource Version:    3816539
  UID:                 5ea19dff-6bd8-43cc-b758-bf7c1487b8c4
Spec:
  Target Ref:
    API Version:  apps/v1
    Kind:         Deployment
    Name:         nginx-app
  Update Policy:
    Update Mode:  Auto
Status:
  Conditions:
    Last Transition Time:  2024-07-04T17:48:21Z
    Status:                True
    Type:                  RecommendationProvided
  Recommendation:
    Container Recommendations:
      Container Name:  nginx
      Lower Bound:
        Cpu:     25m
        Memory:  262144k
      Target:
        Cpu:     25m
        Memory:  262144k
      Uncapped Target:
        Cpu:     25m
        Memory:  262144k
      Upper Bound:
        Cpu:     25m
        Memory:  262144k
Events:  <none>

As you can see, the VPA will monitor and adjust the resource requests of the pods managed by the nginx-app Deployment. It is set to automatically update the resource requests of the pods (nginx-app Deployment) based on their resource usage patterns.

From the description output above, the VPA recommender has already analyzed the resource usage of the current Deployment pods.

Status:
  Conditions:
    Last Transition Time:  2024-07-04T17:48:21Z
    Status:                True
    Type:                  RecommendationProvided

The recommendation suggests allocating 25 millicores of CPU and 262144k of memory (262,144,000 bytes, which is 250 MiB) for the nginx container;

Recommendation:
  Container Recommendations:
    Container Name:  nginx
    Lower Bound:
      Cpu:     25m
      Memory:  262144k
    Target:
      Cpu:     25m
      Memory:  262144k
    Uncapped Target:
      Cpu:     25m
      Memory:  262144k
    Upper Bound:
      Cpu:     25m
      Memory:  262144k

Where:

- Lower Bound: Minimum recommended resource allocation (25m CPU, 262144k memory).

- Target: Ideal resource allocation based on observed usage (25m CPU, 262144k memory).

- Uncapped Target: Same as Target in this case.

- Upper Bound: Maximum recommended resource allocation (25m CPU, 262144k memory).
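
If you want to read these values programmatically rather than from the describe output, the same data is available under status.recommendation.containerRecommendations in the VPA object. The Python sketch below parses a hand-written sample shaped like the JSON form of the object; the sample is trimmed to the recommendation and copies the values shown above, and the helper name `targets_by_container` is hypothetical.

```python
import json

# Hand-written sample, trimmed to the recommendation; values copied from
# the describe output above. A real object carries many more fields.
SAMPLE = """
{
  "status": {
    "recommendation": {
      "containerRecommendations": [
        {
          "containerName": "nginx",
          "lowerBound": {"cpu": "25m", "memory": "262144k"},
          "target": {"cpu": "25m", "memory": "262144k"},
          "uncappedTarget": {"cpu": "25m", "memory": "262144k"},
          "upperBound": {"cpu": "25m", "memory": "262144k"}
        }
      ]
    }
  }
}
"""

def targets_by_container(vpa_json):
    """Map each container name to its Target recommendation."""
    vpa = json.loads(vpa_json)
    recs = (
        vpa.get("status", {})
        .get("recommendation", {})
        .get("containerRecommendations", [])
    )
    return {rec["containerName"]: rec["target"] for rec in recs}

print(targets_by_container(SAMPLE))
```

A VPA that has no recommendation yet simply yields an empty mapping.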

If you check the VPA, now it shows the recommended resources;

kubectl get vpa -n apps

NAME            MODE   CPU   MEM       PROVIDED   AGE
nginx-app-vpa   Auto   25m   262144k   True       40m

As you can see:

- MODE: Indicates the mode in which the VPA is operating. In this case, it is set to Auto, which means the VPA automatically adjusts resource requests based on observed usage patterns.

- CPU: Specifies the recommended CPU request in milliCPU (m). In this case, it recommends 25 milliCPU, which is equivalent to 0.025 CPU cores.

- MEM: Specifies the recommended memory request. The k suffix is the decimal kilo (1000 bytes), so 262144k is 262,144,000 bytes, which is 262144000 / (1024 × 1024) = 250 mebibytes (Mi).

- PROVIDED: Indicates whether the VPA has a recommendation available (True), mirroring the RecommendationProvided condition. If False, the recommender has not yet produced a recommendation for the target.

- AGE: Represents the age of the VPA resource, that is, how long ago it was created.
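
Kubernetes quantity suffixes are easy to misread: a lowercase k is a decimal multiplier (1000), while Ki, Mi and Gi are binary (powers of 1024). The Python sketch below converts the values from the output above. It handles only a handful of suffixes and is meant as an illustration, not a full quantity parser; the function names are our own.

```python
DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9}
BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}

def memory_to_bytes(quantity):
    """Convert a memory quantity such as '262144k' or '56Mi' to bytes."""
    # Binary suffixes are checked first so that "Mi" is not read as "M".
    for suffix, factor in {**BINARY, **DECIMAL}.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # plain number of bytes

def cpu_to_cores(quantity):
    """Convert a CPU quantity such as '25m' or '2' to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)

print(memory_to_bytes("262144k"))          # 262144000
print(memory_to_bytes("262144k") / 2**20)  # 250.0
print(cpu_to_cores("25m"))                 # 0.025
```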

Simulating Events to Trigger Vertical Scaling

Now, let’s see VPA in action. How does it react to increased load on the Deployment workload?

We will use ApacheBench (ab) to perform load testing on our web app.

while true; do sleep 0.01; ab -n 500000 -c 1000 http://192.168.122.62:30833/; done

Each iteration of the loop sends 500,000 HTTP requests to http://192.168.122.62:30833/ with a concurrency level of 1000 requests at a time, and the loop pauses for 0.01 seconds between iterations.

Before we run the stress test command above, let’s watch the Pod resource usage;

watch -n 1 'kubectl top pod -n apps -l app=nginx-app'

Next, execute the stress test command above.

After a short while, this is the output of the watch command above;

Every 1.0s: kubectl top pod -n apps -l app=nginx-app master-02: Thu Jul 4 19:27:00 2024

NAME CPU(cores) MEMORY(bytes)

nginx-app-6ff7b5d8f6-km5j5 1127m 6Mi

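As you can see, the Pod's CPU usage has climbed to about 1127m under load. Note that kubectl top reports actual usage rather than requests; the recommender takes this usage into account, and you can check the updated recommendation with kubectl describe vpa.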

Defining Resource Requests and Limits for a VPA

If you understand the resource requirements of your app, you can bound the values VPA is allowed to set, either for all the containers in the Pods of a Deployment or for a specific container only.

See our updated VPA resource manifest yaml.

cat vpa-auto.yaml

apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: nginx-app-vpa
  namespace: apps
spec:
  targetRef:
    apiVersion: "apps/v1"
    kind: Deployment
    name: nginx-app
  updatePolicy:
    updateMode: "Auto"
  resourcePolicy:
    containerPolicies:
      - containerName: '*'
        minAllowed:
          cpu: 100m
          memory: 56Mi
        maxAllowed:
          cpu: 200m
          memory: 512Mi
        controlledResources: ["cpu", "memory"]

minAllowed specifies the lower bounds (minimum resource requests). maxAllowed specifies the upper bounds (maximum resource requests).

You can then apply;

kubectl apply -f vpa-auto.yaml

Or edit the VPA directly and update;

kubectl edit vpa nginx-app-vpa -n apps

And add the minAllowed and maxAllowed bounds;

...
  name: nginx-app-vpa
  namespace: apps
  resourceVersion: "3842404"
  uid: 5ea19dff-6bd8-43cc-b758-bf7c1487b8c4
spec:
  resourcePolicy:
    containerPolicies:
    - containerName: '*'
      controlledResources:
      - cpu
      - memory
      maxAllowed:
        cpu: 200m
        memory: 512Mi
      minAllowed:
        cpu: 100m
        memory: 56Mi
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-app
...

Note that minAllowed and maxAllowed bound the recommendation that VPA applies. Based on its continuous analysis of resource usage, VPA may compute a value outside this range, which is reported as the Uncapped Target, but the Target that is actually applied to the pods is capped to stay within the configured bounds. This keeps resource utilization in check while guarding against resource contention or performance degradation.
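
As a rough illustration of how an uncapped value relates to a capped one, the Python sketch below clamps a CPU value to the bounds from the manifest above. It assumes plain clamping and ignores anything else VPA may take into account; the function name `cap_to_policy` is hypothetical.

```python
def cap_to_policy(uncapped_m, min_allowed_m, max_allowed_m):
    """Clamp an uncapped CPU value (millicores) to [min_allowed, max_allowed]."""
    return max(min_allowed_m, min(uncapped_m, max_allowed_m))

# Bounds from the manifest above: minAllowed 100m, maxAllowed 200m.
print(cap_to_policy(25, 100, 200))    # 100 (raised to the lower bound)
print(cap_to_policy(1127, 100, 200))  # 200 (cut to the upper bound)
print(cap_to_policy(150, 100, 200))   # 150 (already within bounds)
```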

You can simulate the app stress test to check how VPA will respond to application load.

Updating the VPA Configuration

If you want to make changes to your VPA configuration, there are two ways;

- Edit the VPA manifest file, make the changes, and then apply the manifest file.

- Edit the VPA directly using the kubectl edit command and make the changes. See the section above.

Deleting the VPA in Kubernetes Cluster

You can get a list of available VPAs across namespaces;

kubectl get vpa -A

This lists VPAs in all the namespaces.

You can then delete a specific VPA in its namespace;

kubectl delete vpa <name-of-vpa> -n <namespace>

E.g;

kubectl delete vpa nginx-app-vpa -n apps

Or, if you created the VPA using a manifest file, run;

kubectl delete -f <manifest.yaml>

Best Practices when using VPA

- Before enabling Auto Mode in production, thoroughly test the impact of VPA recommendations in a staging environment. Monitor metrics closely during and after updates to ensure they align with expected outcomes.

- Implement rolling updates or other deployment strategies to mitigate downtime. Kubernetes provides mechanisms like Deployment strategies and PodDisruptionBudgets (PDBs) to manage updates and maintain application availability.

- Continuously monitor the recommendations provided by the VPA. Validate these recommendations against actual application performance and adjust VPA configurations as necessary to optimize resource allocation.

Further Reading

You can read more about Kubernetes Autoscaling on the Documentation page.

Conclusion

VPA is a powerful tool for optimizing resource management in Kubernetes. By dynamically adjusting resource allocation for individual pods, VPA helps reduce costs, improve application performance, and achieve a more efficient and cost-effective containerized environment.