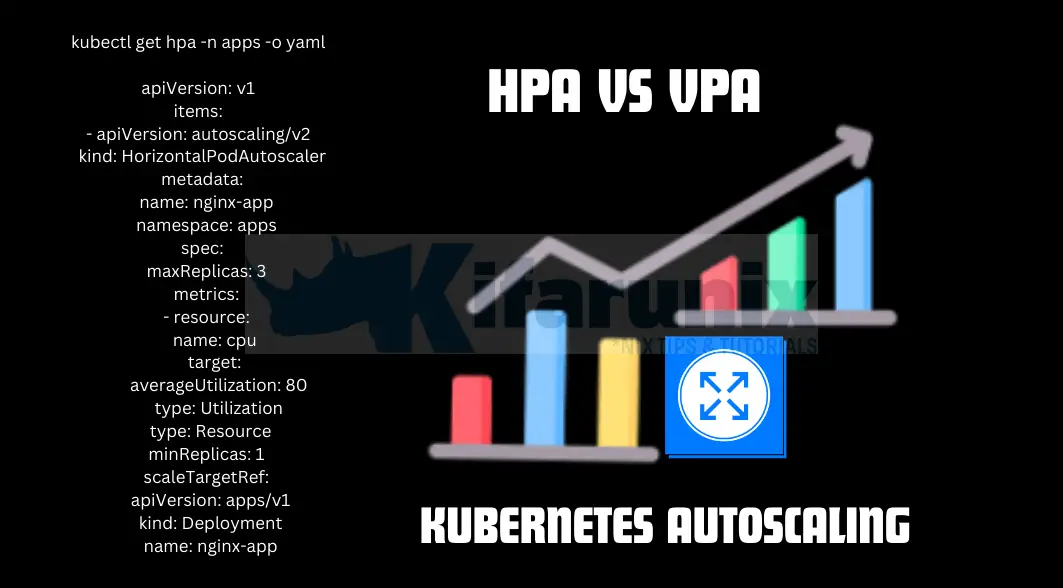

Ensuring optimal resource utilization is crucial for cloud applications built with Kubernetes. However, fluctuating workloads can quickly turn manual scaling into a tedious and inefficient task. This is where Kubernetes autoscaling comes in, offering a dynamic approach to resource management. This tutorial is a guide to mastering Kubernetes autoscaling. We’ll explore its two main techniques, horizontal scaling and vertical scaling, which Kubernetes implements through the Horizontal Pod Autoscaler (HPA) and the Vertical Pod Autoscaler (VPA), respectively. By understanding both techniques and the tools behind them, you’ll be equipped to achieve peak performance and efficient resource management for your containerized applications. Let’s get started.


Kubernetes Autoscaling: HPA vs VPA

What is Autoscaling?

Autoscaling is a technique for automatically adjusting the resources allocated to an application based on predefined metrics. A workload such as a Deployment or StatefulSet can be scaled up or down dynamically to meet fluctuating demand, ensuring efficient resource utilization and optimal application performance.

Benefits of Autoscaling

Autoscaling is needed in cloud-native environments like Kubernetes for several reasons:

- Dynamic Workload Demands: Applications often experience fluctuating traffic patterns throughout the day or across different seasons. Autoscaling allows resources to be added or removed automatically based on these variations, ensuring that the application can handle peak loads without being over-provisioned during quieter periods.

- Optimal Resource Utilization: Without autoscaling, resources may be provisioned based on peak loads, leading to underutilization during off-peak times and increased costs. Autoscaling adjusts resources dynamically, optimizing resource utilization and reducing unnecessary expenses.

- Improved Performance and Availability: By scaling resources in response to workload changes, autoscaling helps maintain consistent performance levels and availability. It ensures that applications remain responsive even under heavy traffic conditions, enhancing user experience and minimizing downtime.

- Cost Efficiency: Autoscaling helps control infrastructure costs by scaling resources up only when needed and scaling down during periods of lower demand. This elasticity allows organizations to pay for resources based on actual usage rather than maintaining fixed-capacity infrastructure.

- Operational Efficiency: Manual scaling processes can be time-consuming and prone to human error. Autoscaling automates the scaling process, reducing the burden on operations teams and enabling faster response to workload changes.

Types of Autoscaling in Kubernetes

There are different types of workload autoscaling in Kubernetes:

- Horizontal workload autoscaling

- Vertical workload autoscaling

- Cluster-size based autoscaling

- Event-driven autoscaling

- Autoscaling based on schedules

This guide focuses on horizontal and vertical workload autoscaling.

Horizontal Scaling with Horizontal Pod Autoscaler (HPA)

In Kubernetes, horizontal scaling means increasing or decreasing the number of replicas (instances) of a workload to meet demand, based on metrics such as CPU utilization, memory usage, or custom metrics (CPU utilization is the most common choice). This is done with the Horizontal Pod Autoscaler (HPA), a Kubernetes API resource and controller that automatically adjusts the number of Pods in a Deployment, ReplicaSet, StatefulSet, or ReplicationController.

How does horizontal scaling work in Kubernetes?

First, you enable horizontal scaling by defining an HPA resource that specifies the target workload (Deployment, ReplicaSet, StatefulSet, etc.) and the metrics on which scaling decisions are based (e.g., CPU utilization, memory usage).

When the observed metrics rise above the configured targets, the HPA increases the number of replicas (Pods) to spread the workload across more instances, keeping the application responsive. Conversely, when the workload decreases and the metrics fall below the targets, it reduces the number of replicas to conserve resources and reduce costs.

The HPA API resource and its controller are available out of the box in every Kubernetes cluster; the controller runs as part of the kube-controller-manager.
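The Kubernetes documentation describes the core of the HPA calculation as `desiredReplicas = ceil[currentReplicas * (currentMetricValue / desiredMetricValue)]`, with no change made while the ratio stays within a tolerance (0.1 by default). The Python sketch below mirrors that formula. `desired_replicas` is a hypothetical helper for illustration, and it deliberately ignores details the real controller handles, such as unready Pods, missing metrics, and the min/max replica bounds.

```python
import math


def desired_replicas(current_replicas: int, current_value: float,
                     target_value: float, tolerance: float = 0.1) -> int:
    """Replica count from ceil(current_replicas * current_value / target_value).

    Hypothetical helper for illustration; the real HPA controller also
    handles unready Pods, missing metrics and min/max bounds.
    """
    ratio = current_value / target_value
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# 1 replica at 124% of its CPU request with an 80% target -> ceil(1.55) = 2
print(desired_replicas(1, 124, 80))
```

Because the result is rounded up, the HPA errs on the side of one extra replica rather than one too few.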

Vertical Scaling with Vertical Pod Autoscaler (VPA)

In Kubernetes, vertical scaling refers to dynamically adjusting the CPU and memory requests (and limits) of the Pods in a Deployment, StatefulSet, or ReplicaSet so that they have sufficient resources to meet their workload requirements.

Vertical scaling is achieved with the Vertical Pod Autoscaler (VPA). Kubernetes does not ship VPA by default, so you need to install it separately: its Custom Resource Definitions (CRDs) plus the recommender, updater, and admission controller components.

So, how does vertical scaling work in Kubernetes?

Unlike HPA, which adds or removes Pods to meet demand, VPA analyzes the resource usage (CPU, memory) of individual Pods and recommends optimal requests and limits. Requests are the resources reserved for a container when it is scheduled, while limits cap what it can consume. VPA can also apply its recommendations automatically (typically by evicting Pods so that they are recreated with the new values), ensuring Pods have the resources they need to function effectively.
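To make usage-based recommendation concrete, here is a deliberately simplified Python sketch: take a high percentile of observed CPU usage samples and add a safety margin. The 90th percentile, the 15% margin, the helper name and the sample data are all assumptions chosen for illustration. The real VPA recommender keeps decaying histograms and uses more inputs, so treat this as a toy model, not VPA's algorithm.

```python
def recommend_request(samples_m: list[int], percentile_pct: int = 90,
                      margin_pct: int = 15) -> int:
    """Toy CPU request recommendation in millicores (hypothetical helper)."""
    if not samples_m:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples_m)
    # Nearest-rank percentile: 1-based rank = ceil(percentile_pct * n / 100).
    rank = max(1, (percentile_pct * len(ordered) + 99) // 100)
    base = ordered[rank - 1]
    # Add the safety margin, rounding up to a whole millicore.
    return (base * (100 + margin_pct) + 99) // 100


usage_m = [40, 55, 60, 62, 70, 75, 80, 90, 120, 300]  # hypothetical samples
print(f"recommended CPU request: {recommend_request(usage_m)}m")
```

Using a high percentile rather than the peak keeps one short spike (300m in the sample) from inflating the request, while the margin leaves some headroom above typical usage.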

Read more about vertical scaling in our guide, Kubernetes Resource Optimization with Vertical Pod Autoscaler (VPA).


Prerequisites for Enabling Autoscaling in Kubernetes

Enabling autoscaling in Kubernetes typically requires several prerequisites to ensure effective operation:

- Metrics Server: A functional Kubernetes Metrics Server is essential for gathering resource utilization metrics such as CPU and memory usage from cluster nodes and Pods. This server provides the data necessary for autoscaling controllers to make scaling decisions.

Follow the guide below to install and configure Metrics Server in Kubernetes:

Install Kubernetes Metrics Server on a Kubernetes Cluster

- Resource Metrics: CPU and memory metrics for Pods are collected from the kubelets by Metrics Server, so your applications need no extra instrumentation for them. If you want to scale on application-level (custom) metrics, your applications must expose them, and you need a metrics provider compatible with the Kubernetes custom metrics API (e.g., Prometheus with Prometheus Adapter).

- Autoscaler Installation: Depending on the type of autoscaling (horizontal or vertical), install the appropriate autoscaler components:

  - Horizontal Pod Autoscaler (HPA): Included by default in Kubernetes clusters, but verify that it is enabled.

  - Vertical Pod Autoscaler (VPA): Install the VPA CRDs and components if they are not already included in your Kubernetes distribution.

- A running Kubernetes cluster: Of course, you need a running Kubernetes cluster, e.g., Minikube, GKE, EKS, etc.

Creating Horizontal Pod Autoscalers (HPA) in a Kubernetes Cluster

To horizontally scale your workloads (Deployments, StatefulSets, ReplicaSets, and so on), you need to create a Horizontal Pod Autoscaler (HPA).

Install Kubernetes Metrics Server

Before you proceed, ensure Metrics Server is installed, as outlined in the guide Install Kubernetes Metrics Server on a Kubernetes Cluster.

To confirm that Metrics Server is up and running, check the resource usage of the Pods in the default namespace:

```bash
kubectl top pod
```

Sample output:

```
NAME                                  CPU(cores)   MEMORY(bytes)
example-deployment-77d66d9f6f-h9nwn   0m           2Mi
example-deployment-77d66d9f6f-hhq4t   0m           2Mi
example-deployment-77d66d9f6f-lnpm8   0m           2Mi
mysql-0                               9m           350Mi
mysql-1                               9m           350Mi
```

If Metrics Server is not installed yet, the command fails with `error: Metrics API not available`.

Deploy an Application

If you already have an application in place that you want to autoscale, then you are good to go.

We already have a sample Nginx app running under a namespace called apps.

```bash
kubectl get deployment -n apps
```

```
NAME        READY   UP-TO-DATE   AVAILABLE   AGE
nginx-app   3/3     3            3           13d
operator    1/1     1            1           11d
```

This is the deployment that we will scale horizontally. It currently has three replicas, so first of all, let’s scale it down to a single Pod:

```bash
kubectl scale deployment nginx-app --replicas=1 -n apps
```

Confirm:

```bash
kubectl get deployment -n apps
```

```
NAME        READY   UP-TO-DATE   AVAILABLE   AGE
nginx-app   1/1     1            1           13d
operator    1/1     1            1           11d
```

Define Resource Requests and Limits in Pod Specifications

When deploying your application, specify CPU and memory requests and limits in the Pod’s container specifications. This helps the Kubernetes scheduler make placement decisions based on available resources and ensures fair resource allocation among Pods. Requests are also required for utilization-based autoscaling, because the HPA measures CPU and memory utilization as a percentage of the containers’ requests.

Requests specify the resources (CPU and memory) reserved for a container when it is scheduled, while limits define the maximum resources the container can consume.
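The values in these fields use Kubernetes quantity notation: CPU in cores or millicores (`100m` is 0.1 CPU) and memory in bytes with binary suffixes such as `Mi` (2^20 bytes). The hypothetical Python helper below converts the few suffixes used in this guide into exact numbers. It is a sketch that covers only `m`, `Ki`, `Mi` and `Gi`, not the full Kubernetes quantity grammar (which also has decimal suffixes and exponents).

```python
from fractions import Fraction

# Multipliers for the quantity suffixes used in this guide only (assumption:
# the full Kubernetes grammar also supports k, M, G, exponents, and more).
_SUFFIXES = {
    "m": Fraction(1, 1000),  # millicores, e.g. 100m = 0.1 CPU
    "Ki": Fraction(2**10),
    "Mi": Fraction(2**20),
    "Gi": Fraction(2**30),
}


def parse_quantity(text: str) -> Fraction:
    """Convert a quantity such as '500m' or '256Mi' into an exact number."""
    for suffix, factor in _SUFFIXES.items():
        if text.endswith(suffix):
            return Fraction(text[: -len(suffix)]) * factor
    return Fraction(text)  # plain number, e.g. '1' CPU or '1048576' bytes


print(float(parse_quantity("500m")))  # CPU limit in cores
print(parse_quantity("256Mi"))        # memory request in bytes
```

Using `Fraction` keeps values such as `100m` exact, which avoids floating-point rounding when comparing usage against requests.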

To check whether resource requests and limits are set for a deployment, use the kubectl get command. For example, to check the resource definition of the nginx-app deployment in the apps namespace:

```bash
kubectl get deployment nginx-app -n apps -o yaml
```

Under the deployment’s Pod template, you should see the resource requests and limits for the containers:

```yaml
template:
  metadata:
    creationTimestamp: null
    labels:
      app: nginx-app
  spec:
    containers:
    - image: nginx:latest
      imagePullPolicy: Always
      name: nginx
      resources:
        limits:
          cpu: 500m
          memory: 512Mi
        requests:
          cpu: 100m
          memory: 256Mi
      terminationMessagePath: /dev/termination-log
      terminationMessagePolicy: File
      volumeMounts:
      - mountPath: /usr/share/nginx/html
        name: html-volume
```

If you don’t see a resources section in your Deployment, no requests or limits are defined.

You can edit the deployment to set them:

```bash
kubectl edit deployment nginx-app -n apps
```

Then add your resource requests and limits as shown above.

You can also use the kubectl set resources command to set the CPU and memory requests and limits.

To set the requests:

```bash
kubectl set resources deployment nginx-app -n apps --requests=cpu=100m
kubectl set resources deployment nginx-app -n apps --requests=memory=256Mi
```

Limits:

```bash
kubectl set resources deployment nginx-app -n apps --limits=cpu=500m
kubectl set resources deployment nginx-app -n apps --limits=memory=512Mi
```

Or you can combine the resources:

```bash
kubectl set resources deployment nginx-app -n apps --requests=cpu=100m,memory=256Mi
kubectl set resources deployment nginx-app -n apps --limits=cpu=500m,memory=512Mi
```

Without resource requests defined, your HPA may show `<unknown>` for the target metric being checked:

```bash
kubectl get hpa -n apps
```

```
NAME        REFERENCE              TARGETS              MINPODS   MAXPODS   REPLICAS   AGE
nginx-app   Deployment/nginx-app   cpu: <unknown>/80%   1         3         1          5m29s
```

Similarly, when you describe the HPA, you may see that it was unable to compute the replica count (`failed to get cpu utilization: missing request for cpu in container`):

```bash
kubectl describe hpa nginx-app -n apps
```

```
Name:                                                 nginx-app
Namespace:                                            apps
Labels:                                               <none>
Annotations:                                          <none>
CreationTimestamp:                                    Sun, 30 Jun 2024 13:17:00 +0000
Reference:                                            Deployment/nginx-app
Metrics:                                              ( current / target )
  resource cpu on pods (as a percentage of request):  <unknown> / 80%
Min replicas:                                         1
Max replicas:                                         3
Deployment pods:                                      1 current / 0 desired
Conditions:
  Type           Status  Reason                   Message
  ----           ------  ------                   -------
  AbleToScale    True    SucceededGetScale        the HPA controller was able to get the target's current scale
  ScalingActive  False   FailedGetResourceMetric  the HPA was unable to compute the replica count: failed to get cpu utilization: missing request for cpu in container nginx of Pod nginx-app-6ff7b5d8f6-k5k48
Events:
  Type     Reason                        Age                    From                       Message
  ----     ------                        ----                   ----                       -------
  Warning  FailedComputeMetricsReplicas  4m3s (x12 over 6m48s)  horizontal-pod-autoscaler  invalid metrics (1 invalid out of 1), first error is: failed to get cpu resource metric value: failed to get cpu utilization: missing request for cpu in container nginx of Pod nginx-app-6ff7b5d8f6-k5k48
  Warning  FailedGetResourceMetric       108s (x21 over 6m48s)  horizontal-pod-autoscaler  failed to get cpu utilization: missing request for cpu in container nginx of Pod nginx-app-6ff7b5d8f6-k5k48
```

Once CPU requests are defined for the Deployment’s containers, the HPA can compute utilization and shows the correct current and target values:

```bash
kubectl get hpa -n apps
```

```
NAME        REFERENCE              TARGETS       MINPODS   MAXPODS   REPLICAS   AGE
nginx-app   Deployment/nginx-app   cpu: 0%/80%   1         3         1          27s
```
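The `<unknown>` target and the `missing request for cpu` error shown above follow from how utilization targets work: CPU utilization is a percentage of the Pods’ CPU requests, so there is nothing to divide by when a container has no request. The Python sketch below illustrates that arithmetic under simplifying assumptions: usage and requests are already in millicores, the dictionary layout, function name and Pod names are hypothetical, and utilization is taken as total usage over total requests across the Pods.

```python
def cpu_utilization_percent(pods: dict[str, dict]) -> int:
    """Average CPU utilization across Pods, as a percentage of requests.

    `pods` maps a Pod name to {"usage_m": <millicores used>,
    "requests_m": {<container>: <millicores requested, or None>}}.
    """
    total_usage = 0
    total_requests = 0
    for pod_name, pod in pods.items():
        for container, request in pod["requests_m"].items():
            if request is None:
                # No request means there is no baseline for a percentage.
                raise ValueError(
                    f"missing request for cpu in container {container} of Pod {pod_name}"
                )
            total_requests += request
        total_usage += pod["usage_m"]
    # Total usage over total requests, truncated to a whole percentage.
    return total_usage * 100 // total_requests


pods = {
    "nginx-app-1": {"usage_m": 50, "requests_m": {"nginx": 100}},
    "nginx-app-2": {"usage_m": 30, "requests_m": {"nginx": 100}},
}
print(cpu_utilization_percent(pods))
```

With two Pods using 50m and 30m against 100m requests each, the sketch reports 40%, which the HPA would compare against its 80% target.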

Create HorizontalPodAutoscaler (HPA) Resource

You can create an HPA resource declaratively, using a YAML manifest, or imperatively, via the kubectl autoscale command.

Let’s see how to create an HPA imperatively via the kubectl autoscale command.

Check the available options:

```bash
kubectl autoscale --help
```

Here is a sample command that creates an HPA for the nginx-app deployment in the apps namespace:

```bash
kubectl autoscale deployment nginx-app --cpu-percent=80 --min=1 --max=3 -n apps
```

- --cpu-percent=80: Sets the target CPU utilization, measured as a percentage of the Pods’ CPU requests. The HPA adds or removes replicas to keep the average CPU utilization across all Pods in the deployment close to 80%.

- --min=1: Sets the minimum number of replicas. Even if CPU usage suggests scaling down further, the HPA won’t go below 1 replica.

- --max=3: Sets the maximum number of replicas. The HPA won’t scale the deployment beyond 3 replicas, no matter how high CPU usage climbs.

If you don’t specify a name, the HPA takes the name of the deployment. Use `--name <name>` to set a custom HPA name:

```bash
kubectl autoscale deployment nginx-app --name nginx-app --cpu-percent=80 --min=1 --max=3 -n apps
```

In essence:

- Scaling Up: When the average CPU utilization across the Pods rises above the 80% target, the HPA scales up on its next evaluation (by default, the controller checks metrics every 15 seconds, and no stabilization window delays scale-up). It adds replicas to distribute the workload and bring the average CPU utilization back down towards 80%.

- Scaling Down: The --cpu-percent flag doesn’t define a separate threshold for scaling down; instead, the HPA applies built-in logic that considers several factors, including:

  - Target CPU Utilization: The HPA aims to keep the average CPU utilization around the 80% target. When utilization stays well below the target, the computed replica count drops and the HPA may scale down.

  - Minimum Replicas: The HPA won’t scale the deployment below the minimum number of replicas, set by the --min=1 flag in this case.

  - Stabilization Window: Even if CPU usage falls below 80%, the HPA doesn’t scale down immediately. By default, it acts on the highest replica recommendation from the last 5 minutes, so a temporary dip in usage doesn’t remove Pods.
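Kubernetes calls this scale-down delay the stabilization window: by default, the HPA remembers the replica recommendations it computed over the last 300 seconds and, when scaling down, uses the highest of them. The Python class below is a minimal sketch of that rule; the class name and the plain second-based timestamps are assumptions for illustration, and it models only the default scale-down window.

```python
from collections import deque


class ScaleDownStabilizer:
    """Hypothetical helper: remember recent recommendations, act on the highest."""

    def __init__(self, window_seconds: float = 300.0) -> None:
        self.window_seconds = window_seconds
        self._history = deque()  # (timestamp_seconds, recommended_replicas)

    def stabilized(self, now: float, recommendation: int) -> int:
        self._history.append((now, recommendation))
        # Drop recommendations that fall outside the window.
        while self._history and self._history[0][0] < now - self.window_seconds:
            self._history.popleft()
        # The highest recent recommendation wins, so brief dips don't remove Pods.
        return max(replicas for _, replicas in self._history)


stabilizer = ScaleDownStabilizer()
print(stabilizer.stabilized(0, 3))    # load is high: 3 replicas
print(stabilizer.stabilized(60, 1))   # still 3: the earlier 3 is inside the window
print(stabilizer.stabilized(301, 1))  # 1: the recommendation of 3 has expired
```

Taking the maximum over the window is what prevents "flapping", where replicas are removed during a short lull and added again moments later.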

To create an HPA declaratively, create a manifest file:

```bash
cat hpa.yaml
```

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-app
  namespace: apps
spec:
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        averageUtilization: 80
        type: Utilization
  minReplicas: 1
  maxReplicas: 3
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-app
```

Then apply it:

```bash
kubectl apply -f hpa.yaml
```

If you want to scale the Deployment’s Pods based on both CPU and memory usage, edit the manifest file and define both resources:

```bash
cat hpa.yaml
```

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-app
  namespace: apps
spec:
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        averageUtilization: 80
        type: Utilization
  - type: Resource
    resource:
      name: memory
      target:
        type: Utilization
        averageUtilization: 80
  minReplicas: 1
  maxReplicas: 3
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-app
```

Once you apply, you should then see both metrics shown on the HPA.
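According to the Kubernetes documentation, when an HPA has several metrics it computes a proposed replica count for each metric and uses the largest. The Python sketch below applies the `ceil(currentReplicas * current / target)` formula per metric and keeps the maximum; the function name and the utilization readings are hypothetical, and the sketch ignores the tolerance band and min/max bounds.

```python
import math


def replicas_for_metrics(current_replicas: int,
                         utilization_pct: dict[str, float],
                         target_pct: dict[str, float]) -> int:
    """Compute ceil(current * usage / target) per metric and keep the maximum."""
    proposals = {
        name: math.ceil(current_replicas * utilization_pct[name] / target_pct[name])
        for name in target_pct
    }
    return max(proposals.values())


# Hypothetical reading: CPU is under target but memory is above it,
# so memory drives the decision (CPU proposes 1, memory proposes 3).
print(replicas_for_metrics(2, {"cpu": 40, "memory": 120}, {"cpu": 80, "memory": 80}))
```

Taking the maximum means adding a second metric can only make the HPA scale out sooner, never later.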

List available HPAs:

Use the command below to list HPAs. Without -n <namespace>, kubectl uses your current namespace; add -A to list HPAs across all namespaces.

```bash
kubectl get hpa -n apps
```

```
NAME        REFERENCE              TARGETS       MINPODS   MAXPODS   REPLICAS   AGE
nginx-app   Deployment/nginx-app   cpu: 0%/80%   1         3         1          12m
```

If you defined both CPU and memory, the TARGETS column shows both metrics.

Get details of an HPA:

```bash
kubectl describe hpa <name> -n <namespace>
```

Simulating Events to Trigger Horizontal Scaling

Now, let’s stress our app to test horizontal scaling.

We will use ApacheBench (ab) to load-test our web app:

```bash
while true; do sleep 0.01; ab -n 500000 -c 1000 http://192.168.122.62:30833/; done
```

Each ab run sends 500,000 HTTP requests to http://192.168.122.62:30833/ (the address where our app is exposed; replace it with your own) with a concurrency level of 1,000. The loop repeats the run indefinitely, pausing 0.01 seconds between iterations, until you stop it with Ctrl+C.

Before you execute the load test, run the command below in a separate terminal (we used the control plane node) to watch how the HPA responds to the load on the web app:

```bash
kubectl get hpa -n apps -w
```

Before we executed the load-testing command, this was the status of the HPA:

```
NAME        REFERENCE              TARGETS       MINPODS   MAXPODS   REPLICAS   AGE
nginx-app   Deployment/nginx-app   cpu: 0%/80%   1         3         1          27m
```

Now, execute the load-testing command and see what happens.

Sample output of the load-testing command:

```
This is ApacheBench, Version 2.3 <$Revision: 1903618 $>
Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking 192.168.122.62 (be patient)
Completed 50000 requests
Completed 100000 requests
Completed 150000 requests
Completed 200000 requests
Completed 250000 requests
Completed 300000 requests
Completed 350000 requests
Completed 400000 requests
Completed 450000 requests
apr_pollset_poll: The timeout specified has expired (70007)
Total of 499996 requests completed
```


HPA response to the load:

```
NAME        REFERENCE              TARGETS         MINPODS   MAXPODS   REPLICAS   AGE
nginx-app   Deployment/nginx-app   cpu: 0%/80%     1         3         1          27m
nginx-app   Deployment/nginx-app   cpu: 124%/80%   1         3         1          44m
nginx-app   Deployment/nginx-app   cpu: 64%/80%    1         3         2          44m
nginx-app   Deployment/nginx-app   cpu: 135%/80%   1         3         2          44m
nginx-app   Deployment/nginx-app   cpu: 179%/80%   1         3         3          44m
nginx-app   Deployment/nginx-app   cpu: 160%/80%   1         3         3          45m
nginx-app   Deployment/nginx-app   cpu: 159%/80%   1         3         3          45m
nginx-app   Deployment/nginx-app   cpu: 157%/80%   1         3         3          45m
nginx-app   Deployment/nginx-app   cpu: 30%/80%    1         3         3          45m
nginx-app   Deployment/nginx-app   cpu: 0%/80%     1         3         3          46m
nginx-app   Deployment/nginx-app   cpu: 70%/80%    1         3         3          46m
nginx-app   Deployment/nginx-app   cpu: 156%/80%   1         3         3          46m
nginx-app   Deployment/nginx-app   cpu: 154%/80%   1         3         3          46m
nginx-app   Deployment/nginx-app   cpu: 153%/80%   1         3         3          47m
nginx-app   Deployment/nginx-app   cpu: 118%/80%   1         3         3          47m
nginx-app   Deployment/nginx-app   cpu: 31%/80%    1         3         3          47m
nginx-app   Deployment/nginx-app   cpu: 14%/80%    1         3         3          47m
nginx-app   Deployment/nginx-app   cpu: 98%/80%    1         3         3          48m
nginx-app   Deployment/nginx-app   cpu: 158%/80%   1         3         3          48m
nginx-app   Deployment/nginx-app   cpu: 160%/80%   1         3         3          48m
nginx-app   Deployment/nginx-app   cpu: 156%/80%   1         3         3          48m
nginx-app   Deployment/nginx-app   cpu: 103%/80%   1         3         3          49m
nginx-app   Deployment/nginx-app   cpu: 0%/80%     1         3         3          49m
nginx-app   Deployment/nginx-app   cpu: 96%/80%    1         3         3          49m
nginx-app   Deployment/nginx-app   cpu: 151%/80%   1         3         3          49m
nginx-app   Deployment/nginx-app   cpu: 100%/80%   1         3         3          50m
nginx-app   Deployment/nginx-app   cpu: 1%/80%     1         3         3          50m
nginx-app   Deployment/nginx-app   cpu: 0%/80%     1         3         3          50m
nginx-app   Deployment/nginx-app   cpu: 0%/80%     1         3         3          55m
nginx-app   Deployment/nginx-app   cpu: 0%/80%     1         3         1          55m
```

In summary, the Horizontal Pod Autoscaler (HPA) for the nginx-app deployment responded dynamically to changing CPU utilization. Initially, with low CPU usage, it maintained 1 replica. When CPU utilization jumped to 124% of the requested CPU, the HPA scaled to 2 replicas, and as utilization rose again (135%, then 179%), it reached 3 replicas. Because maxReplicas is 3, the deployment stayed at 3 replicas even though CPU utilization repeatedly exceeded the 80% target under sustained load. Throughout, the HPA adjusted replicas against the configured target of 80% CPU utilization, demonstrating its ability to scale the deployment automatically to handle increased workload and to scale it back down once demand dropped.

After the ab load test was stopped, CPU utilization dropped to 0% (around the 50m mark), and the HPA scaled the deployment back to 1 replica about five minutes later (the 55m entries). That delay is consistent with the default 5-minute scale-down stabilization window.
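As a rough cross-check, the Python sketch below replays a few (replicas, CPU %) readings from the watch output above through the documented formula `ceil(currentReplicas * current / target)`, clamped to minReplicas=1 and maxReplicas=3. It is a simplification: it ignores the tolerance band and the scale-down stabilization window, and it assumes the HPA acted on the values kubectl displayed, which may differ slightly from the samples the controller actually used. The `recommend` function is a hypothetical helper.

```python
import math

MIN_REPLICAS, MAX_REPLICAS, TARGET_CPU = 1, 3, 80  # from the HPA in this guide


def recommend(current_replicas: int, cpu_percent: int) -> int:
    """ceil(current_replicas * cpu_percent / target), clamped to [min, max]."""
    raw = math.ceil(current_replicas * cpu_percent / TARGET_CPU)
    return max(MIN_REPLICAS, min(raw, MAX_REPLICAS))


# (replicas, CPU %) pairs taken from the `kubectl get hpa -w` output above.
observed = [(1, 124), (2, 64), (2, 135), (3, 179), (3, 0)]
for replicas, cpu in observed:
    print(f"{replicas} replica(s) at {cpu}% -> recommend {recommend(replicas, cpu)}")
```

The replay shows why the deployment never exceeded 3 replicas: at 135% with 2 replicas the raw result is ceil(3.375) = 4, which maxReplicas caps at 3. The final reading recommends 1, but the actual scale-down only happened after the stabilization window had passed.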

Using VPA to Autoscale Kubernetes Containers

In our next guide, we will learn how to install and use VPA to control the resource usage of workloads in a Kubernetes cluster.

Kubernetes Resource Optimization with Vertical Pod Autoscaler (VPA)

Conclusion

In this blog post, you have learned about the two major types of workload autoscaling in Kubernetes, horizontal and vertical scaling, along with the API resources that implement them, HPA and VPA. As the simulation above shows, Kubernetes provides robust autoscaling capabilities through the Horizontal Pod Autoscaler (HPA), which scales the number of Pod replicas based on metrics like CPU utilization or custom metrics, ensuring applications can handle varying workloads efficiently.