In this tutorial, you will learn how to provision block storage for Kubernetes on a Rook Ceph cluster. Rook is an open-source platform for deploying, managing and operating a Ceph storage cluster within Kubernetes. Kubernetes is currently the de facto standard for automating the deployment, scaling, and management of containerized applications. As organizations adopt Kubernetes, the demand for resilient and scalable storage to support stateful workloads grows. This is where Ceph block storage comes in.

Configure Block Storage for Kubernetes

Deploy Ceph Storage Cluster in Kubernetes using Rook

To begin with, ensure you have a Ceph storage cluster running in Kubernetes.

You can check our previous guide on how to deploy a Ceph cluster in Kubernetes using Rook by following the link below.

Deploy Ceph Storage Cluster in Kubernetes using Rook

Configuring Block Storage for Kubernetes on Rook Ceph Cluster

Once the cluster is up, you can proceed to provision block storage for use by Kubernetes applications.

Rook provides a manifest called storageclass.yaml in the ~/rook/deploy/examples/csi/rbd/ directory. This manifest defines the configuration options used to provision persistent block storage volumes for Kubernetes application pods.

By default, with the comment lines removed, this is how it is defined;

cat ~/rook/deploy/examples/csi/rbd/storageclass.yaml

apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool
  namespace: rook-ceph # namespace:cluster
spec:
  failureDomain: host
  replicated:
    size: 3
    requireSafeReplicaSize: true
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-ceph-block
provisioner: rook-ceph.rbd.csi.ceph.com
parameters:
  clusterID: rook-ceph # namespace:cluster
  pool: replicapool
  imageFormat: "2"
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph # namespace:cluster
  csi.storage.k8s.io/controller-expand-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph # namespace:cluster
  csi.storage.k8s.io/node-stage-secret-name: rook-csi-rbd-node
  csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph # namespace:cluster
  csi.storage.k8s.io/fstype: ext4
allowVolumeExpansion: true
reclaimPolicy: Delete

The YAML file defines two resources: a CephBlockPool and a StorageClass, both related to block storage in a Rook Ceph cluster. Here’s a breakdown of each resource:

- CephBlockPool:
  - apiVersion: ceph.rook.io/v1: Specifies the API version for the Rook CephBlockPool resource.
  - kind: CephBlockPool: Declares the type of resource as a CephBlockPool.
  - metadata: Contains the metadata of the CephBlockPool, including its name and namespace.
  - spec: Defines the specifications for the CephBlockPool.
  - failureDomain: host: Specifies the failure domain as “host,” indicating that the replicas of the pool should be distributed across different hosts.
  - replicated: Indicates that the pool is replicated.
  - size: 3: Sets the replication size to 3, meaning each piece of data will be stored on three different nodes.
  - requireSafeReplicaSize: true: Guards against unsafe replica settings. Rook requires this to be set to false before it will create a pool with a replica size of 1.

- StorageClass:
  - apiVersion: storage.k8s.io/v1: Specifies the API version for the Kubernetes StorageClass resource.
  - kind: StorageClass: Declares the type of resource as a StorageClass.
  - metadata: Contains the metadata of the StorageClass, including its name.
  - provisioner: rook-ceph.rbd.csi.ceph.com: Specifies the Rook Ceph RBD CSI provisioner, where the rook-ceph prefix is the namespace in which the Rook operator runs.
  - parameters: Defines parameters for the StorageClass.
  - pool: replicapool: Specifies the CephBlockPool to use.
  - imageFormat: "2": Sets the RBD image format to version 2.
  - imageFeatures: layering: Enables RBD image layering.
  - csi.storage.k8s.io/*secret*: These parameters define the names and namespaces of the secrets required by the provisioner and the CSI driver, ensuring secure access to cluster credentials and resources.
  - csi.storage.k8s.io/fstype: ext4: Specifies the filesystem type as ext4.
  - allowVolumeExpansion: true: Allows volumes created from this StorageClass to be expanded.
  - reclaimPolicy: Delete: Specifies the reclaim policy as “Delete,” meaning that when a PersistentVolume (PV) using this StorageClass is released, the associated volume and data will be deleted. If you want the images to exist even after the PersistentVolume has been deleted, you can use the Retain policy. Note that you will then need to remove the images manually using the rbd rm Ceph command.

Note that the failure domain is set to host and the replica size to 3. This setup therefore requires at least 3 hosts/nodes, each with at least 1 OSD.
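The sizing rule above can be sanity-checked with a little shell arithmetic. The sketch below is illustrative only: the function names are hypothetical (they are not part of Rook or Ceph), and the capacity estimate simply divides raw capacity by the replica count, ignoring Ceph overhead and full-ratio headroom.

```shell
#!/bin/sh
# Hypothetical helpers; not part of Rook or Ceph.

# Succeeds when there are enough OSD-bearing hosts to hold one replica each
# (failureDomain: host places every replica on a different host).
enough_hosts() {
    hosts_with_osds=$1
    replica_size=$2
    [ "$hosts_with_osds" -ge "$replica_size" ]
}

# Rough usable capacity: raw capacity divided by the replica count.
usable_capacity_gib() {
    raw_gib=$1
    replica_size=$2
    echo $(( raw_gib / replica_size ))
}

if enough_hosts 3 3; then
    echo "3 hosts can satisfy size=3"
fi
echo "approx usable: $(usable_capacity_gib 300 3) GiB"
```

With three 100 GiB OSDs (300 GiB raw) and three replicas, you should therefore expect roughly 100 GiB of usable space, not 300 GiB.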

In this demo guide, we will provision the block storage using the default manifest provided. Take note of the namespace: if your Rook cluster runs in a namespace other than rook-ceph, be sure to adjust the manifest accordingly.

Verify the cluster status before you proceed.

kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph status

cluster:

id: e1466372-9f01-42af-8ad7-0bfcfa71ef78

health: HEALTH_OK

services:

mon: 3 daemons, quorum a,b,c (age 38h)

mgr: a(active, since 16m), standbys: b

osd: 3 osds: 3 up (since 38h), 3 in (since 38h)

data:

pools: 1 pools, 1 pgs

objects: 2 objects, 449 KiB

usage: 81 MiB used, 300 GiB / 300 GiB avail

pgs: 1 active+clean
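If you script these steps, it may help to stop early when the cluster is not healthy. The following is a minimal sketch, assuming the status text looks like the output above; ceph_is_healthy is a hypothetical helper name, and it only inspects the health line.

```shell
#!/bin/sh
# Hypothetical helper: reads `ceph status` text on stdin and succeeds
# only if the health line reports HEALTH_OK.
ceph_is_healthy() {
    grep -Eq 'health:[[:space:]]*HEALTH_OK'
}

# Sample input copied from the status output above.
sample='cluster:
id: e1466372-9f01-42af-8ad7-0bfcfa71ef78
health: HEALTH_OK'

if printf '%s\n' "$sample" | ceph_is_healthy; then
    echo "cluster healthy, safe to continue"
else
    echo "cluster not healthy, stop here" >&2
fi

# Against a live cluster (assumes the rook-ceph-tools deployment exists):
#   kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph status | ceph_is_healthy
```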

Next, proceed to configure block storage for Kubernetes. Start by creating the storage class.

cd ~/rook/deploy/examples

kubectl create -f csi/rbd/storageclass.yaml

When executed, the command will create a Ceph pool called replicapool;

kubectl -n rook-ceph exec -it rook-ceph-tools-564c8446db-xh6qp -- ceph osd pool ls

.mgr

replicapool

To get the details of the pools;

kubectl -n rook-ceph exec -it rook-ceph-tools-564c8446db-xh6qp -- ceph osd pool ls detail

You can also show the output in pretty JSON format;

kubectl -n rook-ceph exec -it rook-ceph-tools-564c8446db-xh6qp -- ceph osd pool ls detail -f json-pretty

[

{

"pool_id": 1,

"pool_name": ".mgr",

"create_time": "2023-12-14T21:08:22.463543+0000",

"flags": 1,

"flags_names": "hashpspool",

"type": 1,

"size": 3,

"min_size": 2,

"crush_rule": 0,

"peering_crush_bucket_count": 0,

"peering_crush_bucket_target": 0,

"peering_crush_bucket_barrier": 0,

"peering_crush_bucket_mandatory_member": 2147483647,

"object_hash": 2,

"pg_autoscale_mode": "on",

"pg_num": 1,

"pg_placement_num": 1,

"pg_placement_num_target": 1,

"pg_num_target": 1,

"pg_num_pending": 1,

"last_pg_merge_meta": {

"source_pgid": "0.0",

"ready_epoch": 0,

"last_epoch_started": 0,

"last_epoch_clean": 0,

"source_version": "0'0",

"target_version": "0'0"

},

"last_change": "16",

"last_force_op_resend": "0",

"last_force_op_resend_prenautilus": "0",

"last_force_op_resend_preluminous": "0",

"auid": 0,

"snap_mode": "selfmanaged",

"snap_seq": 0,

"snap_epoch": 0,

"pool_snaps": [],

"removed_snaps": "[]",

"quota_max_bytes": 0,

"quota_max_objects": 0,

"tiers": [],

"tier_of": -1,

"read_tier": -1,

"write_tier": -1,

"cache_mode": "none",

"target_max_bytes": 0,

"target_max_objects": 0,

"cache_target_dirty_ratio_micro": 400000,

"cache_target_dirty_high_ratio_micro": 600000,

"cache_target_full_ratio_micro": 800000,

"cache_min_flush_age": 0,

"cache_min_evict_age": 0,

"erasure_code_profile": "",

"hit_set_params": {

"type": "none"

},

"hit_set_period": 0,

"hit_set_count": 0,

"use_gmt_hitset": true,

"min_read_recency_for_promote": 0,

"min_write_recency_for_promote": 0,

"hit_set_grade_decay_rate": 0,

"hit_set_search_last_n": 0,

"grade_table": [],

"stripe_width": 0,

"expected_num_objects": 0,

"fast_read": false,

"options": {

"pg_num_max": 32,

"pg_num_min": 1

},

"application_metadata": {

"mgr": {}

},

"read_balance": {

"score_acting": 3,

"score_stable": 3,

"optimal_score": 1,

"raw_score_acting": 3,

"raw_score_stable": 3,

"primary_affinity_weighted": 1,

"average_primary_affinity": 1,

"average_primary_affinity_weighted": 1

}

},

{

"pool_id": 3,

"pool_name": "replicapool",

"create_time": "2023-12-16T11:29:26.186490+0000",

"flags": 8193,

"flags_names": "hashpspool,selfmanaged_snaps",

"type": 1,

"size": 3,

"min_size": 2,

"crush_rule": 2,

"peering_crush_bucket_count": 0,

"peering_crush_bucket_target": 0,

"peering_crush_bucket_barrier": 0,

"peering_crush_bucket_mandatory_member": 2147483647,

"object_hash": 2,

"pg_autoscale_mode": "on",

"pg_num": 32,

"pg_placement_num": 32,

"pg_placement_num_target": 32,

"pg_num_target": 32,

"pg_num_pending": 32,

"last_pg_merge_meta": {

"source_pgid": "0.0",

"ready_epoch": 0,

"last_epoch_started": 0,

"last_epoch_clean": 0,

"source_version": "0'0",

"target_version": "0'0"

},

"last_change": "60",

"last_force_op_resend": "0",

"last_force_op_resend_prenautilus": "0",

"last_force_op_resend_preluminous": "58",

"auid": 0,

"snap_mode": "selfmanaged",

"snap_seq": 3,

"snap_epoch": 55,

"pool_snaps": [],

"removed_snaps": "[]",

"quota_max_bytes": 0,

"quota_max_objects": 0,

"tiers": [],

"tier_of": -1,

"read_tier": -1,

"write_tier": -1,

"cache_mode": "none",

"target_max_bytes": 0,

"target_max_objects": 0,

"cache_target_dirty_ratio_micro": 400000,

"cache_target_dirty_high_ratio_micro": 600000,

"cache_target_full_ratio_micro": 800000,

"cache_min_flush_age": 0,

"cache_min_evict_age": 0,

"erasure_code_profile": "",

"hit_set_params": {

"type": "none"

},

"hit_set_period": 0,

"hit_set_count": 0,

"use_gmt_hitset": true,

"min_read_recency_for_promote": 0,

"min_write_recency_for_promote": 0,

"hit_set_grade_decay_rate": 0,

"hit_set_search_last_n": 0,

"grade_table": [],

"stripe_width": 0,

"expected_num_objects": 0,

"fast_read": false,

"options": {},

"application_metadata": {

"rbd": {}

},

"read_balance": {

"score_acting": 1.1299999952316284,

"score_stable": 1.1299999952316284,

"optimal_score": 1,

"raw_score_acting": 1.1299999952316284,

"raw_score_stable": 1.1299999952316284,

"primary_affinity_weighted": 1,

"average_primary_affinity": 1,

"average_primary_affinity_weighted": 1

}

}

]

After a few moments, the pool and its placement groups (PGs) should be created.

Confirm the status of the cluster;

kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph status

cluster:

id: e1466372-9f01-42af-8ad7-0bfcfa71ef78

health: HEALTH_OK

services:

mon: 3 daemons, quorum a,b,c (age 38h)

mgr: a(active, since 21m), standbys: b

osd: 3 osds: 3 up (since 38h), 3 in (since 38h)

data:

pools: 2 pools, 33 pgs

objects: 3 objects, 449 KiB

usage: 81 MiB used, 300 GiB / 300 GiB avail

pgs: 33 active+clean

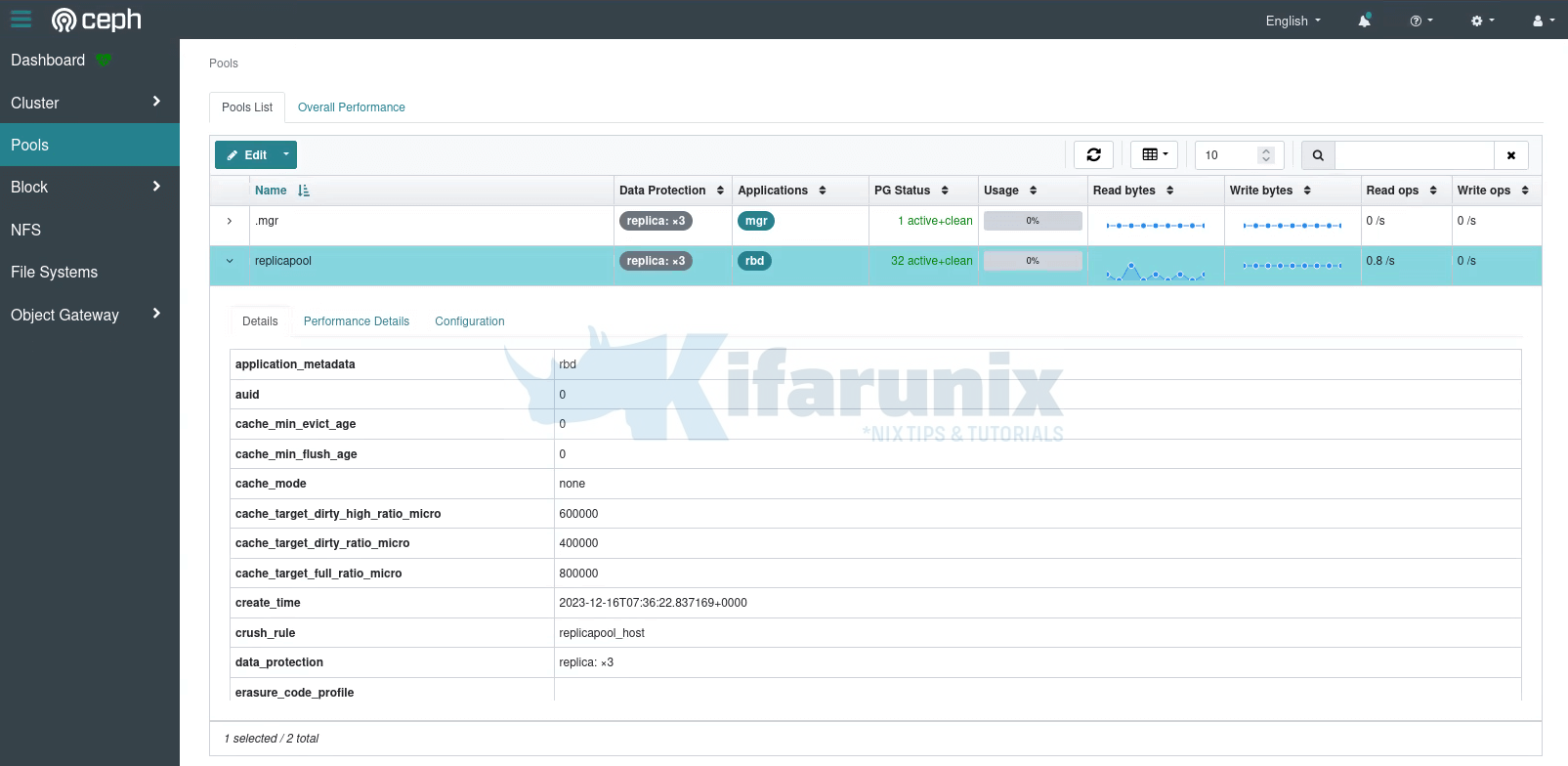

You can also see the details on the Ceph dashboard;

The RBD pools for block devices are now ready for use.

Configure Kubernetes Applications to use Ceph Block Storage

You can then configure your Kubernetes applications to use the provisioned block storage.

Rook ships with sample manifest files for MySQL and WordPress applications that you can deploy to demo the use of block storage.

cd ~/rook/deploy/examples

cat mysql.yaml

apiVersion: v1
kind: Service
metadata:
  name: wordpress-mysql
  labels:
    app: wordpress
spec:
  ports:
    - port: 3306
  selector:
    app: wordpress
    tier: mysql
  clusterIP: None
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pv-claim
  labels:
    app: wordpress
spec:
  storageClassName: rook-ceph-block
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress-mysql
  labels:
    app: wordpress
    tier: mysql
spec:
  selector:
    matchLabels:
      app: wordpress
      tier: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: mysql
    spec:
      containers:
        - image: mysql:5.6
          name: mysql
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: changeme
          ports:
            - containerPort: 3306
              name: mysql
          volumeMounts:
            - name: mysql-persistent-storage
              mountPath: /var/lib/mysql
      volumes:
        - name: mysql-persistent-storage
          persistentVolumeClaim:
            claimName: mysql-pv-claim

cat wordpress.yaml

apiVersion: v1
kind: Service
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  ports:
    - port: 80
  selector:
    app: wordpress
    tier: frontend
  type: LoadBalancer
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: wp-pv-claim
  labels:
    app: wordpress
spec:
  storageClassName: rook-ceph-block
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress
  labels:
    app: wordpress
    tier: frontend
spec:
  selector:
    matchLabels:
      app: wordpress
      tier: frontend
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: frontend
    spec:
      containers:
        - image: wordpress:4.6.1-apache
          name: wordpress
          env:
            - name: WORDPRESS_DB_HOST
              value: wordpress-mysql
            - name: WORDPRESS_DB_PASSWORD
              value: changeme
          ports:
            - containerPort: 80
              name: wordpress
          volumeMounts:
            - name: wordpress-persistent-storage
              mountPath: /var/www/html
      volumes:
        - name: wordpress-persistent-storage
          persistentVolumeClaim:
            claimName: wp-pv-claim

The manifest files define the Service, the PersistentVolumeClaim and the Deployment of each app.

Each app is configured with a PersistentVolumeClaim (PVC) that requests 20 GiB of storage from the rook-ceph-block storage class.

You can list available storage classes using the command;

kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

rook-ceph-block rook-ceph.rbd.csi.ceph.com Delete Immediate true 20m

The PVC also defines the access mode for the block storage. In this case, the access mode is ReadWriteOnce (RWO), which means the volume can be mounted read-write by a single node at a time (pods running on that same node can share it). Other access modes are ReadWriteMany (RWX), where many nodes can mount the volume read-write simultaneously, and ReadOnlyMany (ROX), which is used when many nodes need read-only access to the storage.

You can create your own apps and define the required volumes from the block storage!
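As a starting point for your own apps, the sketch below prints a minimal PVC manifest that requests storage from the rook-ceph-block storage class. The render_pvc function, the claim name demo-claim and the 5Gi size are all hypothetical placeholders; adjust them to your application.

```shell
#!/bin/sh
# Hypothetical helper: prints a PersistentVolumeClaim manifest that requests
# storage from the rook-ceph-block StorageClass created earlier.
render_pvc() {
    claim_name=$1
    size=$2
    cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${claim_name}
spec:
  storageClassName: rook-ceph-block
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: ${size}
EOF
}

# Print a 5Gi claim named demo-claim (both values are placeholders).
render_pvc demo-claim 5Gi

# To create it in a live cluster, pipe it to kubectl:
#   render_pvc demo-claim 5Gi | kubectl -n rook-ceph apply -f -
```

Your pod or deployment would then reference the claim by name under volumes, the same way the MySQL and WordPress manifests do.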

Using the default MySQL and WordPress manifests, let’s start them;

Start the MySQL app in the rook-ceph namespace;

kubectl create -n rook-ceph -f mysql.yaml

After a short while, the Pod should be running;

kubectl get pods -n rook-ceph | grep mysql

wordpress-mysql-688ddf7f77-nwshk 1/1 Running 0 56s

You can also list pods based on their labels.

kubectl -n <name-space> get pod -l app=<app-label>

For example;

kubectl -n rook-ceph get pods -l app=wordpress

You can get labels using;

kubectl -n <name-space> get pod --show-labels

Get the persistent volume claims;

kubectl get pvc -n rook-ceph

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mysql-pv-claim Bound pvc-9daf6557-5a94-4878-9b5b-9c35342abd38 20Gi RWO rook-ceph-block 119s

Similarly, start WordPress;

kubectl create -n rook-ceph -f wordpress.yaml

Once it starts, you can check the PVC;

kubectl get pvc -n rook-ceph

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mysql-pv-claim Bound pvc-9daf6557-5a94-4878-9b5b-9c35342abd38 20Gi RWO rook-ceph-block 3m55s

wp-pv-claim Bound pvc-9d962a97-808f-4ca6-8f6d-576e9b302884 20Gi RWO rook-ceph-block 49s
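When scripting a deployment, you may want to check that every claim has reached the Bound state before moving on. Here is a minimal sketch that filters the table printed by kubectl get pvc; unbound_claims is a hypothetical helper, and the Pending row in the sample is invented purely to show what a waiting claim would look like.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get pvc` table output on stdin and
# prints the names of claims whose STATUS column is not "Bound".
unbound_claims() {
    awk 'NR > 1 && $2 != "Bound" { print $1 }'
}

# Sample table; the last row is invented to show a claim still waiting.
sample='NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
mysql-pv-claim Bound pvc-9daf6557-5a94-4878-9b5b-9c35342abd38 20Gi RWO rook-ceph-block 3m55s
example-claim Pending rook-ceph-block 5s'

pending=$(printf '%s\n' "$sample" | unbound_claims)
if [ -n "$pending" ]; then
    echo "still waiting on: $pending"
fi

# Against a live cluster:
#   kubectl get pvc -n rook-ceph | unbound_claims
```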

The two apps are now running;

kubectl get svc -n rook-ceph

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

...

wordpress LoadBalancer 10.107.77.47 <pending> 80:30689/TCP 7m48s

wordpress-mysql ClusterIP None <none> 3306/TCP 10m

Since no external load balancer has assigned an address (EXTERNAL-IP is still <pending>), WordPress is reachable via the Kubernetes cluster nodes’ IPs on node port 30689/TCP.
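The node port is the number after the colon in the PORT(S) column. As a small illustration, the sketch below pulls it out with plain shell parameter expansion; node_port_of is a hypothetical helper and <node-ip> is a placeholder for the address of any of your cluster nodes.

```shell
#!/bin/sh
# Hypothetical helper: extracts the node port from a PORT(S) value such as
# "80:30689/TCP" (format: <service-port>:<node-port>/<protocol>).
node_port_of() {
    ports=$1
    after_colon=${ports#*:}      # "30689/TCP"
    echo "${after_colon%%/*}"    # "30689"
}

port=$(node_port_of "80:30689/TCP")
# <node-ip> is a placeholder for the address of any cluster node.
echo "WordPress should answer at http://<node-ip>:${port}/"
```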

Check Block Devices on Ceph RBD Pool

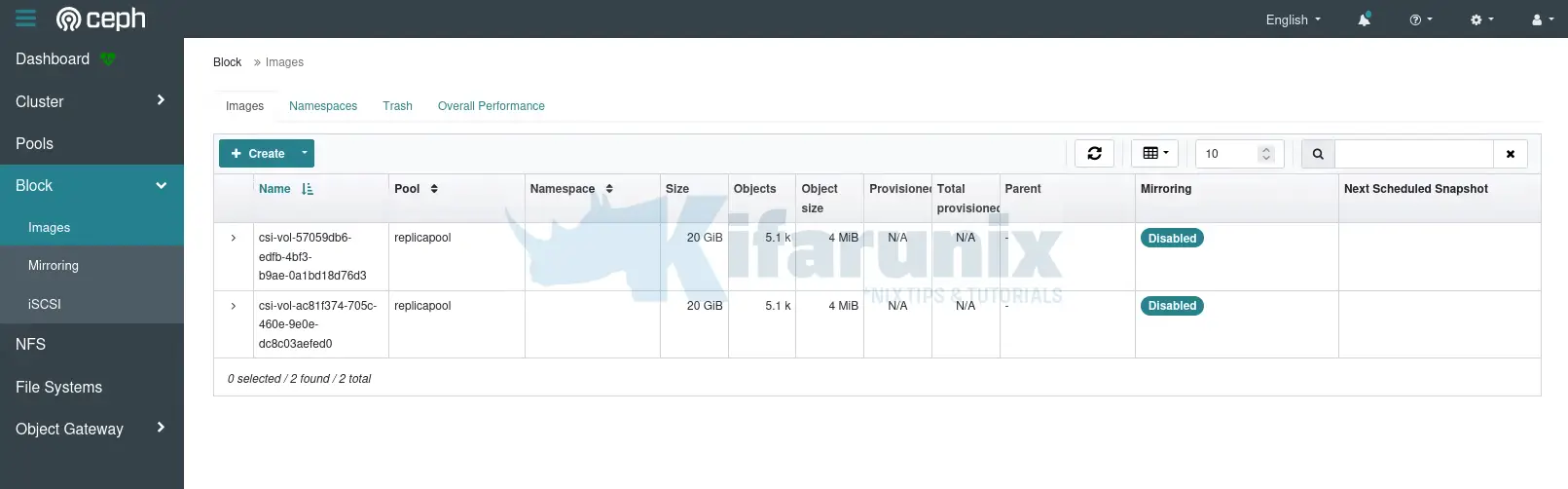

You can check the block devices on the Ceph pool using the Rook toolbox (rbd -p replicapool ls) or from the Ceph dashboard;

kubectl -n rook-ceph exec -it rook-ceph-tools-564c8446db-xh6qp -- rbd -p replicapool ls

csi-vol-57059db6-edfb-4bf3-b9ae-0a1bd18d76d3

csi-vol-ac81f374-705c-460e-9e0e-dc8c03aefed0

Check the usage size;

kubectl -n rook-ceph exec -it rook-ceph-tools-564c8446db-xh6qp -- rbd -p replicapool du

NAME PROVISIONED USED

csi-vol-57059db6-edfb-4bf3-b9ae-0a1bd18d76d3 20 GiB 232 MiB

csi-vol-ac81f374-705c-460e-9e0e-dc8c03aefed0 20 GiB 140 MiB

<TOTAL> 40 GiB 372 MiB

Get block device image information (rbd --image <IMAGE_NAME> info -p <poolname>).

kubectl -n rook-ceph exec -it rook-ceph-tools-564c8446db-xh6qp -- rbd --image csi-vol-57059db6-edfb-4bf3-b9ae-0a1bd18d76d3 info -p replicapool

Sample output;

rbd image 'csi-vol-57059db6-edfb-4bf3-b9ae-0a1bd18d76d3':

size 20 GiB in 5120 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 1810dae9feeeb

block_name_prefix: rbd_data.1810dae9feeeb

format: 2

features: layering

op_features:

flags:

create_timestamp: Sat Dec 16 12:11:16 2023

access_timestamp: Sat Dec 16 12:11:16 2023

modify_timestamp: Sat Dec 16 12:11:16 2023
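The "20 GiB in 5120 objects" and "order 22 (4 MiB objects)" lines are related: the image is striped over objects of 2^order bytes each. The arithmetic can be reproduced with a short sketch (rbd_object_count is a hypothetical helper name, not an rbd subcommand).

```shell
#!/bin/sh
# Hypothetical helper: number of RADOS objects backing an RBD image,
# given its size in GiB and its object order (object size = 2^order bytes).
rbd_object_count() {
    size_gib=$1
    order=$2
    size_bytes=$(( size_gib * 1024 * 1024 * 1024 ))
    object_bytes=$(( 1 << order ))
    echo $(( size_bytes / object_bytes ))
}

rbd_object_count 20 22    # prints 5120
```

Because RBD images are thinly provisioned, these objects are only created as data is written, which is why the USED column above is far smaller than the provisioned 20 GiB.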

So, how can you know which persistent volume belongs to which pod? Well, you can list the available persistent volumes;

kubectl get pv -n rook-ceph

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-9d962a97-808f-4ca6-8f6d-576e9b302884 20Gi RWO Delete Bound rook-ceph/wp-pv-claim rook-ceph-block 24m

pvc-9daf6557-5a94-4878-9b5b-9c35342abd38 20Gi RWO Delete Bound rook-ceph/mysql-pv-claim rook-ceph-block 27m

You can describe each one of them;

kubectl describe pv pvc-9d962a97-808f-4ca6-8f6d-576e9b302884

Name: pvc-9d962a97-808f-4ca6-8f6d-576e9b302884

Labels: <none>

Annotations: pv.kubernetes.io/provisioned-by: rook-ceph.rbd.csi.ceph.com

volume.kubernetes.io/provisioner-deletion-secret-name: rook-csi-rbd-provisioner

volume.kubernetes.io/provisioner-deletion-secret-namespace: rook-ceph

Finalizers: [kubernetes.io/pv-protection]

StorageClass: rook-ceph-block

Status: Bound

Claim: rook-ceph/wp-pv-claim

Reclaim Policy: Delete

Access Modes: RWO

VolumeMode: Filesystem

Capacity: 20Gi

Node Affinity: <none>

Message:

Source:

Type: CSI (a Container Storage Interface (CSI) volume source)

Driver: rook-ceph.rbd.csi.ceph.com

FSType: ext4

VolumeHandle: 0001-0009-rook-ceph-0000000000000003-ac81f374-705c-460e-9e0e-dc8c03aefed0

ReadOnly: false

VolumeAttributes: clusterID=rook-ceph

imageFeatures=layering

imageFormat=2

imageName=csi-vol-ac81f374-705c-460e-9e0e-dc8c03aefed0

journalPool=replicapool

pool=replicapool

storage.kubernetes.io/csiProvisionerIdentity=1702728513475-2507-rook-ceph.rbd.csi.ceph.com

Events: <none>

Volume attributes will also show the block device image name.
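In the output above, the trailing UUID of the VolumeHandle is the same as the UUID in the imageName attribute. Assuming that pattern holds (it is an observation from this output, not a documented contract), a rough sketch for deriving the image name from a handle could look like this; image_name_from_handle is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: derives the RBD image name from a CSI VolumeHandle by
# taking the trailing UUID (the last five dash-separated fields) and adding
# the csi-vol- prefix. This mirrors the pattern seen in the output above and
# is an assumption, not a documented contract.
image_name_from_handle() {
    printf '%s\n' "$1" |
        awk -F- '{ printf "csi-vol-%s-%s-%s-%s-%s\n", $(NF-4), $(NF-3), $(NF-2), $(NF-1), $NF }'
}

image_name_from_handle \
    "0001-0009-rook-ceph-0000000000000003-ac81f374-705c-460e-9e0e-dc8c03aefed0"
# prints csi-vol-ac81f374-705c-460e-9e0e-dc8c03aefed0
```

When in doubt, prefer the imageName value reported under VolumeAttributes, since that is stated explicitly.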

From the dashboard, you can see the images under Block > Images.

Verify Block Device Image Usage on the Pod

You can log in to the pod and check the attached block devices;

WordPress, for example;

kubectl -n rook-ceph exec -it wordpress-658d46dddd-zpmfh -- bash

Check block devices;

lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

loop1 7:1 0 63.5M 1 loop

rbd0 251:0 0 20G 0 disk /var/www/html

vdb 252:16 0 100G 0 disk

loop4 7:4 0 40.9M 1 loop

loop2 7:2 0 112M 1 loop

loop5 7:5 0 63.9M 1 loop

vda 252:0 0 100G 0 disk

|-vda2 252:2 0 2G 0 part

|-vda3 252:3 0 98G 0 part

`-vda1 252:1 1M 0 part

loop3 7:3 0 49.9M 1 loop

The RBD block device (rbd0) is mounted at /var/www/html.

Manually Create Block Device Image and Mount in a Pod

It is also possible to create a block device image manually in the Kubernetes Ceph cluster and mount it directly on a pod, similar to what we did in our previous guide on how to Configure and Use Ceph Block Device on Linux Clients.

This, however, is not recommended for production environments and should be used for testing purposes only. If the pod dies, the mount dies with it.

Create Direct Mount Pod

So, create and start the direct mount pod provided by Rook;

cd ~/rook/deploy/examples

kubectl create -f direct-mount.yaml

This will create and start the rook-direct-mount-* pod.

Create Block Device Image of specific Size

List the pods to get the name of the direct mount pod and log in to it;

kubectl -n rook-ceph exec -it rook-direct-mount-57b6f954d9-lgqkb -- bash

You can now run the RBD commands to create the image and mount it!

rbd create <image-name> --size <megabytes> --pool <pool-name>

e.g;

rbd create disk01 --size 10G --pool replicapool

Get Block Device Image Information

To list the images in your pool;

rbd ls -l replicapool

NAME SIZE PARENT FMT PROT LOCK

csi-vol-57059db6-edfb-4bf3-b9ae-0a1bd18d76d3 20 GiB 2

csi-vol-ac81f374-705c-460e-9e0e-dc8c03aefed0 20 GiB 2

disk01 10 GiB 2
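The disk01 image was created with --size 10G even though the generic syntax lists the size in megabytes. As far as we know, rbd treats a bare number as megabytes and also accepts M, G and T suffixes, so the two forms are interchangeable. The sketch below shows the conversion; size_to_mib is a hypothetical helper and the suffix handling is our assumption about that convention.

```shell
#!/bin/sh
# Hypothetical helper: converts a size such as 10G into mebibytes, following
# the M/G/T suffix convention (a bare number is treated as megabytes).
size_to_mib() {
    value=$1
    case $value in
        *T) echo $(( ${value%T} * 1024 * 1024 )) ;;
        *G) echo $(( ${value%G} * 1024 )) ;;
        *M) echo "${value%M}" ;;
        *)  echo "$value" ;;
    esac
}

size_to_mib 10G      # prints 10240
size_to_mib 10240    # prints 10240
```

In other words, --size 10G and --size 10240 should request the same 10 GiB image.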

To retrieve information about the image created, run the command;

rbd --image disk01 -p replicapool info

Map Block Device Image to Local Device

After creating an image, you can map it to a local block device node accessible within your system/Pod.

rbd map disk01 --pool replicapool

You will see an output like;

/dev/rbd1

To show the block device images currently mapped through the kernel RBD module, use the rbd showmapped command;

rbd showmapped

id pool namespace image snap device

0 replicapool csi-vol-57059db6-edfb-4bf3-b9ae-0a1bd18d76d3 - /dev/rbd0

1 replicapool disk01 - /dev/rbd1

Create Filesystem on Mapped Block Device Image

The mapped Ceph block device is now ready. All that is left is to create a file system on it and mount it to make it usable.

For example, to create an XFS file system on it (you can use your preferred filesystem type);

mkfs.xfs /dev/rbd1 -L ceph-disk01

Mount/Unmount Block Device Image

You can now mount the block device. For example, to mount it under the /mnt/ceph directory;

mkdir /mnt/ceph

mount /dev/rbd1 /mnt/ceph

Check mounted filesystems;

df -hT -P /dev/rbd1

Filesystem Type Size Used Avail Use% Mounted on

/dev/rbd1 xfs 10G 105M 9.9G 2% /mnt/ceph

There you go.

You can now start using the volume.

When done using it, you can unmount and unmap it!

umount /dev/rbd1

Unmap;

rbd unmap /dev/rbd1

And that pretty much summarizes our guide on how to configure block storage for Kubernetes on a Rook Ceph cluster.

You can also check our guide on how to provision a shared filesystem;

Configuring Shared Filesystem for Kubernetes on Rook Ceph Storage