In this blog post, you will learn how to integrate OpenStack with a Ceph storage cluster. This is the first part of our three-part series on integrating OpenStack with a Ceph cluster. In cloud computing, OpenStack and Ceph stand as two prominent pillars, each offering distinct yet complementary capabilities: OpenStack provides a comprehensive cloud computing platform, while Ceph delivers distributed and scalable storage services.

When integrating OpenStack with Ceph storage, make sure the network connecting OpenStack and Ceph has enough bandwidth. Network requirements depend on several factors, including but not limited to the size of the cluster, the workload, and the desired performance, but in every case the connection must not degrade performance or lead to data inconsistencies. Consider using a dedicated network for Ceph traffic; this isolates Ceph traffic from other traffic on your network and helps ensure optimal performance.

Part 2: Integrate OpenStack with Ceph Storage Cluster

Part 3: Integrate OpenStack with Ceph Storage Cluster


Integrating OpenStack with Ceph Storage Cluster

Note: We are integrating a fresh installation of OpenStack with a Ceph storage cluster.

Three parts of OpenStack integrate with Ceph storage:

- OpenStack Glance: Glance is one of the core components of OpenStack. It is responsible for discovering, registering, and retrieving virtual machine (VM) images.

- OpenStack Cinder: Cinder is the OpenStack Block Storage service that provides volumes (block devices) to Nova virtual machines, Ironic bare metal hosts, containers, etc. OpenStack uses volumes to boot VMs or attaches them to running VMs. Cinder volumes offer persistent storage that can be attached to and detached from OpenStack instances.

- Guest Disks: Guest disks are guest operating system disks. By default, when you boot a virtual machine, its disk appears as a file on the file system of the hypervisor (usually under /var/lib/nova/instances/<uuid>/, or under /var/lib/docker/volumes/nova_compute/_data/instances/<uuid> if you used Kolla-ansible for deployment). In this guide, we won't store instance disk images on Ceph; they stay on the compute nodes on which the instances are scheduled to run.

Install and Setup Ceph Storage Cluster

Similarly, you need a Ceph storage cluster up and running. In our previous guides, we have set up and deployed Ceph on various Linux distros.

How to deploy Ceph Storage Cluster on Linux

Create Ceph Storage Pools

To configure the Ceph cluster to store OpenStack images and volumes, you need to create storage pools. A Ceph pool is a logical partition used to store objects in a Ceph cluster. It serves as the basic unit for data placement and management. Each pool is associated with a specific application or use case, and administrators can configure parameters for each pool to meet the requirements of that particular workload.

In this guide, we will create three pools for the following OpenStack services:

- Glance:

  - images (glance-api)

- Cinder:

  - volumes (cinder-volume)

  - volume backups (cinder-backup)

Ensure Cluster is in Good Health

Before you proceed to create the pools, ensure the cluster is healthy (HEALTH_OK) and all placement groups are in the active+clean state.

sudo ceph -s

Sample output:

cluster:

id: 1e266088-9480-11ee-a7e1-738d8527cddc

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-mon-osd01,osd02,osd03 (age 4h)

mgr: ceph-mon-osd01.ibdekn(active, since 4h), standbys: osd02.lfiiww

osd: 6 osds: 6 up (since 3h), 6 in (since 3h)

data:

pools: 1 pools, 1 pgs

objects: 2 objects, 449 KiB

usage: 959 MiB used, 299 GiB / 300 GiB avail

pgs: 1 active+clean
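If you script the steps in this guide, it can help to stop early when the cluster is not healthy. The function below is a minimal sketch, assuming the ceph CLI is installed and has admin access (run as root or via sudo); the name ceph_is_healthy is hypothetical. It relies on `ceph health` printing a one-line summary that starts with HEALTH_OK on a healthy cluster.

```shell
# Hypothetical helper: succeed only if the cluster reports HEALTH_OK.
# Assumes the ceph CLI is installed and has admin access.
ceph_is_healthy() {
    # "ceph health" prints a summary such as "HEALTH_OK" or
    # "HEALTH_WARN <reason>"; a failing command counts as unhealthy.
    health_summary=$(ceph health 2>/dev/null) || return 1
    case "$health_summary" in
        HEALTH_OK*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example usage:
#   ceph_is_healthy && echo "Cluster is healthy" || echo "Check 'ceph -s' first"
```

Checking the summary prefix rather than parsing the full `ceph -s` output keeps the check short; `ceph -s` remains the place to look when the function reports a problem.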

Create Ceph Storage Pools

Ceph has different ways of ensuring that data is safe when an OSD fails or gets corrupted. When you create a Ceph pool, it can be one of two types:

- replicated pool or

- erasure-coded pool

In a replicated pool, which is the default pool type, data is copied from the primary OSD to multiple other OSDs in the cluster. By default, Ceph creates two replicas of an object (a total of three copies, or a pool size of 3). As a result, replicated pools require more raw storage.

On the other hand, in an erasure-coded pool, Ceph uses erasure code algorithms to break object data into two types of chunks that are written across different OSDs:

- data chunks, also known as data blocks. The number of data chunks is denoted by k.

- parity chunks, also known as parity blocks. The number of parity chunks is denoted by m. If a drive fails or becomes corrupted, the parity chunks are used to rebuild the data, so m specifies how many OSDs can fail without causing data loss.

Ceph creates a default erasure code profile when initializing a cluster. The default profile defines k=2 and m=2, meaning Ceph spreads each object's data over four OSDs (k+m=4) and can lose up to two of those OSDs (m=2) without losing data. This gives the same fault tolerance as a replicated pool of size 3, but it needs only 2 TB of raw capacity to store 1 TB of data instead of 3 TB.

Erasure-coded pools reduce the amount of disk space required to ensure data durability, but they are computationally more expensive than replicated pools.
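To make the storage trade-off concrete, the two hypothetical helpers below compute how much raw capacity is consumed to store a given amount of data. This is a minimal sketch of the arithmetic only: a replicated pool multiplies the data by its replica count, while an erasure-coded pool multiplies it by (k+m)/k. It ignores metadata and other overheads.

```shell
# Hypothetical helpers: raw capacity consumed to store a given amount of data.
# Units are whatever you pass in (e.g. TB in, TB out); overheads are ignored.

# usage: raw_for_replicated <usable> <replica_count>
raw_for_replicated() {
    awk -v u="$1" -v size="$2" 'BEGIN { printf "%g\n", u * size }'
}

# usage: raw_for_ec <usable> <k> <m>
# Each object is split into k data chunks plus m parity chunks of equal size.
raw_for_ec() {
    awk -v u="$1" -v k="$2" -v m="$3" 'BEGIN { printf "%g\n", u * (k + m) / k }'
}
```

For example, `raw_for_replicated 1 3` prints 3 and `raw_for_ec 1 2 2` prints 2: storing 1 TB in a three-copy pool takes 3 TB of raw space, while a k=2, m=2 erasure-coded pool takes 2 TB.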

To create a Ceph pool, use the command below:

ceph osd pool create {pool-name} pg_num pgp_num

Where:

- {pool-name} is the name of the Ceph pool you are creating. The rbd tool uses a pool named rbd by default, but you can use your preferred name.

- pg_num is the total number of placement groups for the pool. It determines the number of actual placement groups (PGs) that data objects will be divided into. See how to determine the number of PGs.

- pgp_num specifies the total number of placement groups for placement purposes. It should be equal to pg_num.

So, let's see how to calculate the number of PGs. You can use the formula:

Total PGs = (No. of OSDs x 100) / pool size

Where pool size refers to:

- number of replicas for replicated pools or

- the K+M sum for erasure-coded pools.

The value of PGs should be rounded UP to the nearest power of two (2^x). Rounding up is optional, but it is recommended so that CRUSH can evenly balance the number of objects among placement groups.
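The rule of thumb above can be sketched as two small shell functions. This is a minimal illustration using integer arithmetic; the names next_pow2 and total_pgs are hypothetical. Ceiling division is used so that a fractional result is rounded up before the power-of-two step.

```shell
# Hypothetical helpers implementing the PG rule of thumb with integer math.

# Print the smallest power of two that is >= $1 (assumes $1 >= 1).
next_pow2() {
    pow=1
    while [ "$pow" -lt "$1" ]; do
        pow=$((pow * 2))
    done
    echo "$pow"
}

# usage: total_pgs <num_osds> <pool_size>
# pool_size is the replica count, or k+m for an erasure-coded pool.
total_pgs() {
    # Ceiling division: (a + b - 1) / b rounds a fractional result up.
    target=$(( ($1 * 100 + $2 - 1) / $2 ))
    next_pow2 "$target"
}
```

For example, `total_pgs 6 3` prints 256 and `total_pgs 6 4` also prints 256.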

With the default replicated pool size of 3 (two replicas plus the original, i.e. three copies) and the 6 OSDs in our cluster, the total number of PGs is:

PGs = (6 x 100) / 3 = 200

Rounded up to the nearest power of 2, this gives 256 PGs.

You can always get the replica size (replicated pool size) using the command ceph osd dump | grep 'replicated size'.
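If you want just the pool names and their replica counts, the hypothetical helper below filters the same `ceph osd dump` output with awk. It is a sketch that assumes pool lines have the usual form `pool <id> '<name>' replicated size <n> ...`; if your Ceph release formats the dump differently, adjust the field positions.

```shell
# Hypothetical helper: print "<pool> <size>" for each replicated pool.
# Assumes `ceph osd dump` prints pool lines such as:
#   pool 2 'glance-images' replicated size 3 min_size 2 ...
replicated_sizes() {
    ceph osd dump | awk -v q="'" '$1 == "pool" && $4 == "replicated" {
        name = $3
        gsub(q, "", name)               # strip the quotes around the pool name
        for (i = 5; i < NF; i++)
            if ($i == "size") { print name, $(i + 1); break }
    }'
}
```

Running `replicated_sizes` on a cluster like the one in this guide would list each replicated pool followed by its size, for example `.mgr 3`.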

To calculate the total number of PGs for an erasure-coded pool, you need the k and m values from its erasure code profile. Get them as follows.

List your erasure code profiles:

sudo ceph osd erasure-code-profile ls

Output:

default

Next, get the erasure code profile information using the command ceph osd erasure-code-profile get <profile>.

sudo ceph osd erasure-code-profile get default

Output:

k=2

m=2

plugin=jerasure

technique=reed_sol_van

Now, the sum of K and M is 2+2=4.

Hence, as per the PG formula above, PGs = (6 x 100) / 4 = 600/4 = 150. Rounding up to the next power of 2 gives 2^8 = 256, so our PGs should be 256.
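Instead of reading k and m off the profile by eye, you could let awk sum them. The hypothetical helper below is a minimal sketch that assumes `ceph osd erasure-code-profile get` prints one key=value pair per line, as in the output above; it defaults to the profile named default.

```shell
# Hypothetical helper: print k+m (the "pool size" used in the PG formula)
# for an erasure code profile. Defaults to the profile named "default".
ec_pool_size() {
    ceph osd erasure-code-profile get "${1:-default}" |
        awk -F= '$1 == "k" { k = $2 }; $1 == "m" { m = $2 }; END { print k + m }'
}
```

With the default profile shown above (k=2, m=2), `ec_pool_size` prints 4.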

If you are going to create multiple pools, you can get the maximum number of PGs per pool using the formula:

Total PGs per pool = ((No. of OSDs x 100) / pool size) / No. of pools

For example, in this guide we will create three pools, so to get the maximum number of PGs per pool:

- No of OSDs =6

- replicated pool size =3

- No of Pools =3

Hence:

PGs = ((6 x 100) / 3) / 3 = 600/9 = 66.67

Rounding this value up to the nearest power of 2:

2^0 = 1, 2^1 = 2, 2^2 = 4, 2^3 = 8, 2^4 = 16, 2^5 = 32, 2^6 = 64, 2^7 = 128, 2^8 = 256, ...

Since 66.67 is greater than 2^6 = 64, the next power of 2 is 2^7 = 128, so we will use 128 PGs per pool.
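The per-pool calculation can be sketched the same way. This is a self-contained illustration with hypothetical function names; it divides the PG budget by both the pool size and the number of pools, rounds the result up to an integer, and then rounds up to a power of two.

```shell
# Hypothetical helpers: per-pool PG count when the PG budget is shared by pools.

# Print the smallest power of two that is >= $1 (assumes $1 >= 1).
next_pow2() {
    pow=1
    while [ "$pow" -lt "$1" ]; do
        pow=$((pow * 2))
    done
    echo "$pow"
}

# usage: pgs_per_pool <num_osds> <pool_size> <num_pools>
pgs_per_pool() {
    divisor=$(( $2 * $3 ))
    # ((OSDs x 100) / pool size) / pools, rounded up to an integer first.
    target=$(( ($1 * 100 + divisor - 1) / divisor ))
    next_pow2 "$target"
}
```

With the values from this guide, `pgs_per_pool 6 3 3` computes 600/9, rounds it up to 67, and prints 128.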

Hence, let's create the Glance images pool:

sudo ceph osd pool create glance-images 128 128

Similarly, create the pools for the block devices (volumes) and volume backups:

sudo ceph osd pool create cinder-volume 128 128

sudo ceph osd pool create cinder-backup 128 128

Ceph will start to gradually create the PGs. After a short while, you can list the OSD pools using the command:

sudo ceph osd pool ls

Or:

ceph osd lspools

Note that you might see a discrepancy between the number of PGs you requested in the creation command and the actual number reported by Ceph. This is because, if the Ceph PG autoscaler is enabled (it usually is by default), Ceph automatically adjusts the number of placement groups (PGs) based on the current OSD count and usage. It aims to optimize the distribution of PGs to improve performance.

For example, the cluster status output we checked earlier showed 1 pool and 1 placement group.

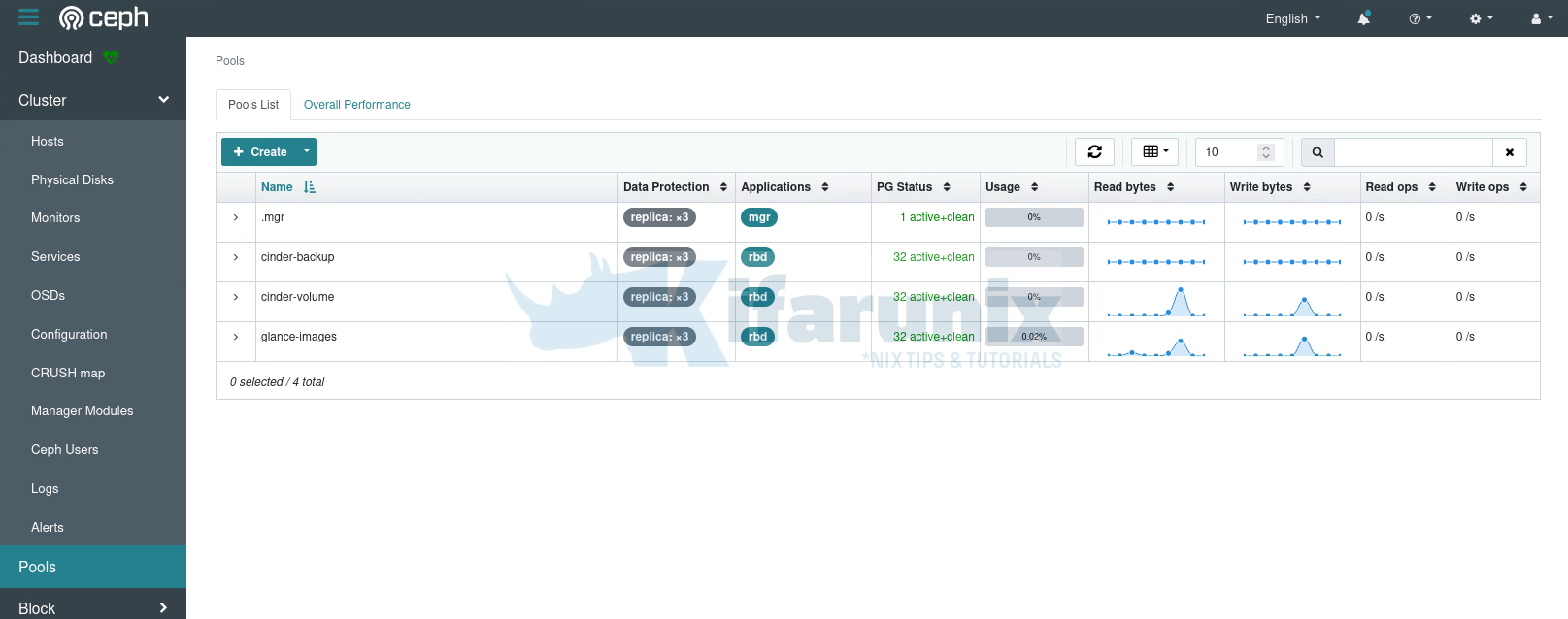

After we added our three pools with 128 placement groups each, we now have 4 pools (including the .mgr pool) and 97 placement groups (32 for each of the three pools we created, plus 1 for the default .mgr pool).

sudo ceph -s

cluster:

id: 1e266088-9480-11ee-a7e1-738d8527cddc

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-mon-osd01,osd02,osd03 (age 21h)

mgr: ceph-mon-osd01.ibdekn(active, since 21h), standbys: osd02.lfiiww

osd: 6 osds: 6 up (since 21h), 6 in (since 3d)

data:

pools: 4 pools, 97 pgs

objects: 2 objects, 449 KiB

usage: 1.2 GiB used, 299 GiB / 300 GiB avail

pgs: 97 active+clean

To check the number of placement groups on a Ceph pool, e.g. our glance-images pool:

sudo ceph osd pool get glance-images pg_num

Sample output:

pg_num: 32

As you can see, the pool has 32 PGs rather than the 128 we requested when creating it.

To check whether the PG autoscaler is enabled for a pool, e.g. our glance-images pool:

sudo ceph osd pool get glance-images pg_autoscale_mode

Output:

pg_autoscale_mode: on

You can also get the pool autoscale status as well as the PG recommendations:

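sudo ceph osd pool autoscale-status

Sample output:

| POOL | SIZE | TARGET SIZE | RATE | RAW CAPACITY | RATIO | TARGET RATIO | EFFECTIVE RATIO | BIAS | PG_NUM | NEW PG_NUM | AUTOSCALE | BULK |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| .mgr | 448.5k | | 3.0 | 300.0G | 0.0000 | | | 1.0 | 1 | | on | False |
| glance-images | 0 | | 3.0 | 300.0G | 0.0000 | | | 1.0 | 32 | | on | False |
| cinder-volume | 0 | | 3.0 | 300.0G | 0.0000 | | | 1.0 | 32 | | on | False |
| cinder-backup | 0 | | 3.0 | 300.0G | 0.0000 | | | 1.0 | 32 | | on | False |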
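To compare the actual PG count and autoscale mode of several pools at once, you could wrap the two `ceph osd pool get` calls shown above in a loop. This is a minimal sketch; the function name pg_report is hypothetical, and it assumes each call prints a single `<key>: <value>` line, as in the sample outputs above.

```shell
# Hypothetical helper: show pg_num and autoscale mode for each pool given.
# Relies on `ceph osd pool get <pool> <key>` printing "<key>: <value>".
pg_report() {
    for pool in "$@"; do
        pg_num=$(ceph osd pool get "$pool" pg_num | awk '{ print $2 }')
        mode=$(ceph osd pool get "$pool" pg_autoscale_mode | awk '{ print $2 }')
        echo "$pool pg_num=$pg_num autoscale=$mode"
    done
}

# Example usage:
#   pg_report glance-images cinder-volume cinder-backup
```

On the cluster in this guide, each of the three pools would be reported with pg_num=32 and autoscale=on.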

Read more on PG scaling recommendations.

Pool names beginning with a period (.) are reserved for use by Ceph's internal operations. Do not create or manipulate them.

Initialize OpenStack Ceph OSD Pool

Before use, you need to initialize the Ceph OSD pools for RBD. An OSD pool can be initialized using the rbd pool init <pool-name> command.

To initialize our OpenStack pools:

sudo rbd pool init glance-images

sudo rbd pool init cinder-volume

sudo rbd pool init cinder-backup

You can check the statistics of each pool:

sudo ceph osd pool stats <pool_name>

Enable Applications for OpenStack OSD Pools

Associate the pools you created with their respective application to prevent unauthorized types of clients from writing data to them. An application can be:

- CephFS (Ceph File System): Enabling CephFS on a pool lets you create CephFS file systems on it, so that files and directories can be shared across the cluster.

- RBD (RADOS Block Device): Enabling RBD on a pool allows attaching RBD volumes to virtual machines, which provides persistent storage for the VMs.

- RGW (RADOS Gateway): Enabling RGW on a pool allows creating S3-compatible object storage interfaces, which enables using cloud storage services with Ceph. Suitable for storing large amounts of unstructured data, backups, and multimedia files.

- Custom applications: Enabling a custom application on a pool allows using the pool with specific third-party applications that might have special requirements.

Enabling an application for a pool in Ceph serves several important purposes:

- Prevents pool misuse: Avoids using the pool for unintended purposes, promoting efficient resource allocation and preventing conflicts between different types of clients.

- Identifies the pool's purpose: Ceph tools, such as the dashboard, use the application tag to show what each pool is used for.

- Avoids health warnings: Ceph raises a POOL_APP_NOT_ENABLED health warning for pools that are in use but have no application enabled.

- Supports access control: Client capabilities can be restricted by application tag, so that clients only access pools tagged for their application.

- Helps ensure compatibility: Ties the pool to the client type expected to use it, which matters when dealing with third-party applications that have specific prerequisites.

To associate the pools created above with RBD, execute the command ceph osd pool application enable <pool> <app> [--yes-i-really-mean-it], replacing the pool name accordingly.

sudo ceph osd pool application enable glance-images rbd

sudo ceph osd pool application enable cinder-volume rbd

sudo ceph osd pool application enable cinder-backup rbd

You can log in to the Ceph dashboard and confirm the pools.
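If you prefer to run the pool steps from this guide in one go, the function below is a minimal sketch that creates, initializes, and tags the three OpenStack pools. The pool names and the PG count of 128 are the ones used in this guide; the variable and function names are hypothetical. It assumes the ceph and rbd CLIs with admin access (run as root or add sudo), and it only skips the create step for pools that already exist.

```shell
# Minimal sketch: create, RBD-initialize and tag the OpenStack pools in one pass.
# Pool names and PG count come from this guide; adjust them for your cluster.
OPENSTACK_POOLS="glance-images cinder-volume cinder-backup"
PG_COUNT=128

setup_openstack_pools() {
    for pool in $OPENSTACK_POOLS; do
        # Only create the pool if `ceph osd pool ls` does not already list it.
        if ! ceph osd pool ls | grep -qxF "$pool"; then
            ceph osd pool create "$pool" "$PG_COUNT" "$PG_COUNT" || return 1
        fi
        rbd pool init "$pool" || return 1
        ceph osd pool application enable "$pool" rbd || return 1
    done
}

# Example usage:
#   setup_openstack_pools && ceph osd pool ls detail
```

Keep in mind that, with the PG autoscaler enabled, the final pg_num of each pool may still differ from PG_COUNT.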

You can now proceed to the second part of this tutorial to continue with the OpenStack integration with Ceph.