Welcome to our tutorial on how to set up a three-node Ceph storage cluster on Ubuntu 18.04. Ceph is a scalable distributed storage system designed for cloud infrastructure and web-scale object storage. It can also provide block storage (Ceph Block Device) and file system storage (Ceph File System).

You can check an updated guide on how to deploy Ceph cluster on Ubuntu 22.04.

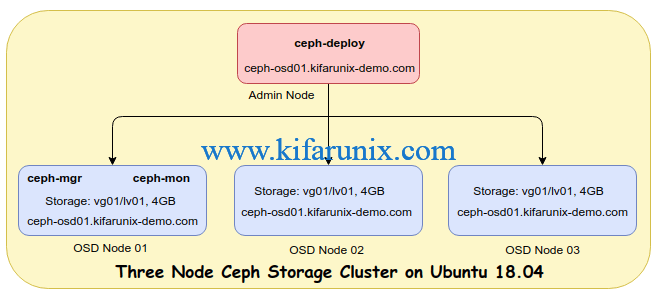

Setup Three Node Ceph Storage Cluster on Ubuntu 18.04

A Ceph Storage Cluster requires at least one Ceph Monitor, one Ceph Manager, and one Ceph OSD (Object Storage Daemon). A Ceph Metadata Server is also needed if you want to provide Ceph File System storage.

Architecture of our deployment

The deployment above uses the following Ceph components;

- Ceph Object Storage Daemon (OSD, ceph-osd)
  - Provides the Ceph object data store.
  - Performs data replication, data recovery and rebalancing, and provides storage information to the Ceph Monitor.
- Ceph Monitor (ceph-mon)
  - Maintains maps of the entire Ceph cluster state, including the monitor map, manager map, OSD map, and CRUSH map.
  - Manages authentication between daemons and clients.
- Ceph Deploy/Admin node (ceph-deploy)
  - The node on which the Ceph deployment script (ceph-deploy) is installed.
- Ceph Manager (ceph-mgr)
  - Keeps track of runtime metrics and the current state of the Ceph cluster, including storage utilization, current performance metrics, and system load.
  - Manages and exposes the Ceph cluster web dashboard and API.
  - At least two managers are required for high availability (HA).

Prepare Ceph Nodes for Ceph Storage Cluster Deployment on Ubuntu 18.04

Attach Storage Disks to Ceph OSD Nodes

Each Ceph OSD node in our architecture above has unallocated LVM logical volumes of 4 GB each.

lvs

LV   VG   Attr       LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

lv01 vg01 -wi-a----- 3.99g

Run System Update

On all the nodes, update your system packages.

apt update

Update Hosts File

To begin with, set up your nodes, assign their IP addresses, and ensure that they can reach each other by name by updating the hosts file. For example, in our setup, the hosts file on each node should contain the lines below;

less /etc/hosts

...

192.168.2.114 ceph-admin.kifarunix-demo.com ceph-admin

192.168.2.115 ceph-osd01.kifarunix-demo.com ceph-osd01

192.168.2.116 ceph-osd02.kifarunix-demo.com ceph-osd02

192.168.2.117 ceph-osd03.kifarunix-demo.com ceph-osd03

Set Time Synchronization

Ensure that the time on all the nodes is synchronized. To do this, install Chrony on each node and configure all the nodes to use the same NTP server.

apt install chrony

Edit the Chrony configuration and set your NTP server by replacing the default NTP server pools with your NTP server address.

vim /etc/chrony/chrony.conf

...

# pool ntp.ubuntu.com iburst maxsources 4

# pool 0.ubuntu.pool.ntp.org iburst maxsources 1

# pool 1.ubuntu.pool.ntp.org iburst maxsources 1

# pool 2.ubuntu.pool.ntp.org iburst maxsources 2

pool ntp.kifarunix-demo.com iburst

...

Restart chronyd.

systemctl restart chronyd

Install SSH Server

Deploying Ceph with the ceph-deploy utility requires that an SSH server is installed on all the nodes.

Ubuntu 18.04 usually comes with the SSH server already installed. If not, install and start it as follows;

apt install openssh-server

systemctl enable --now ssh

Install Python 2

Python 2 is required to deploy Ceph on Ubuntu 18.04. You can install Python 2 by executing the command below on all the Ceph nodes;

apt install python-minimal

Create Ceph Deployment User

On all the Ceph nodes, create a deployment user with passwordless sudo rights, which ceph-deploy needs in order to install Ceph packages and write configuration files. Do not use the username ceph as it is reserved.

Replace the cephadmin username accordingly.

useradd -m -s /bin/bash cephadmin

passwd cephadmin

echo "cephadmin ALL=(ALL:ALL) NOPASSWD:ALL" >> /etc/sudoers.d/cephadmin

chmod 0440 /etc/sudoers.d/cephadmin

Setup Password-less SSH login

To run the Ceph configuration steps on the Ceph nodes with the ceph-deploy utility without being prompted for passwords, you need to set up password-less SSH login from the Ceph admin node.

On the ceph-admin node, switch to the cephadmin user created above;

su - cephadmin

Next, generate SSH keys, leaving the passphrase empty.

ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/cephadmin/.ssh/id_rsa):

Created directory '/home/cephadmin/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/cephadmin/.ssh/id_rsa.

Your public key has been saved in /home/cephadmin/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:hCX07NgcOmFx7gJrS8QCphNBqdjToPe73AtRNaC+f9k [email protected]

The key's randomart image is:

+---[RSA 2048]----+

|=+. .=.= |

|o+.. . @ . |

|=o.o= = * |

|+.++.= X . |

| . o* = S |

| o.+ o |

| +. o |

| ..+ o E |

| o.+o |

+----[SHA256]-----+

Next, copy the public key to all other nodes. To make the copying easy, update your ~/.ssh/config file as shown below;

vim ~/.ssh/config

Host ceph-osd01
    Hostname ceph-osd01
    User cephadmin

Host ceph-osd02
    Hostname ceph-osd02
    User cephadmin

Host ceph-osd03
    Hostname ceph-osd03
    User cephadmin

After that, copy the SSH keys.

for i in ceph-osd01 ceph-osd02 ceph-osd03; do ssh-copy-id $i; done

Enter the password at each prompt to copy the generated SSH key, enabling password-less authentication from the Ceph admin node to the Ceph nodes.
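Before moving on, it can help to confirm that key-based login really works for every node. The following is a minimal sketch, not part of ceph-deploy: the function name check_ssh and the SSH_CMD override variable are illustrative assumptions. It relies on the standard ssh option BatchMode=yes, which makes ssh fail instead of prompting for a password.

```shell
# Sketch: confirm key-based SSH login works for each node without prompting.
# SSH_CMD is a hypothetical override hook; it defaults to the real ssh client.
SSH_CMD="${SSH_CMD:-ssh}"

# check_ssh NODE...
# Prints one result line per node; returns non-zero if any node fails.
check_ssh() {
    failed=0
    for node in "$@"; do
        # BatchMode=yes disables password prompts, so a missing key is an error.
        if "$SSH_CMD" -o BatchMode=yes -o ConnectTimeout=5 "$node" true </dev/null; then
            echo "$node: ok"
        else
            echo "$node: FAILED"
            failed=1
        fi
    done
    return "$failed"
}
```

Run as the cephadmin user on the admin node, for example: check_ssh ceph-osd01 ceph-osd02 ceph-osd03. Any node reported as FAILED needs its key copied again before ceph-deploy can reach it.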

Setup Ceph Storage Cluster on Ubuntu 18.04

Install ceph-deploy Utility on Ceph Admin Node

On the Ceph admin node, you need to install the ceph-deploy utility. To install ceph-deploy utility and other Ceph packages, you need to create the Ceph repositories.

Create Ceph repository on Ubuntu 18.04

Install Ceph repository signing key. Execute these commands as root user.

wget -q -O- 'https://download.ceph.com/keys/release.asc' | apt-key add -

Check the latest stable release of Ceph and replace {ceph-stable-release} with the release name.

echo deb https://download.ceph.com/debian-{ceph-stable-release}/ $(lsb_release -sc) main | tee /etc/apt/sources.list.d/ceph.list

For example, to install the repos for Ceph Mimic on Ubuntu 18.04;

echo deb https://download.ceph.com/debian-mimic/ $(lsb_release -sc) main | tee /etc/apt/sources.list.d/ceph.list

Resynchronize the package index so that the newly added repository is picked up.

apt update

Install the ceph-deploy utility.

apt install ceph-deploy

Setup Ceph Cluster Monitor

Your nodes are now ready to deploy a Ceph storage cluster. To begin with, switch to cephadmin user created above.

su - cephadmin

NOTE: Do not run ceph-deploy with sudo or as root.

Create a directory in the cephadmin home directory on the Ceph admin node for storing the configuration files and keys generated by the ceph-deploy command;

mkdir kifarunix-cluster

Navigate to the directory created above.

cd kifarunix-cluster

Initialize the Ceph cluster monitor. Our Ceph cluster monitor runs on Ceph OSD 01.

ceph-deploy new ceph-osd01

...

[ceph_deploy.new][DEBUG ] Creating new cluster named ceph

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[ceph-osd01][DEBUG ] connected to host: ceph-admin.kifarunix-demo.com

[ceph-osd01][INFO ] Running command: ssh -CT -o BatchMode=yes ceph-osd01

[ceph-osd01][DEBUG ] connection detected need for sudo

[ceph-osd01][DEBUG ] connected to host: ceph-osd01

[ceph-osd01][DEBUG ] detect platform information from remote host

[ceph-osd01][DEBUG ] detect machine type

[ceph-osd01][DEBUG ] find the location of an executable

[ceph-osd01][INFO ] Running command: sudo /bin/ip link show

[ceph-osd01][INFO ] Running command: sudo /bin/ip addr show

[ceph-osd01][DEBUG ] IP addresses found: [u'192.168.2.114', u'10.0.2.15']

[ceph_deploy.new][DEBUG ] Resolving host ceph-osd01

[ceph_deploy.new][DEBUG ] Monitor ceph-osd01 at 192.168.2.114

[ceph_deploy.new][DEBUG ] Monitor initial members are ['ceph-osd01']

[ceph_deploy.new][DEBUG ] Monitor addrs are ['192.168.2.114']

[ceph_deploy.new][DEBUG ] Creating a random mon key...

[ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...

[ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf

Install Ceph Packages on Ceph Nodes

Next, install Ceph packages on all the nodes by executing the ceph-deploy install command on the Ceph Admin node as shown below.

By default, the ceph-deploy tool installs the latest stable release version of Ceph, which is Ceph Mimic as of this writing.

ceph-deploy install ceph-osd01 ceph-osd02 ceph-osd03

Deploy Ceph Cluster Monitor

The Ceph cluster monitor has been initialized above. To deploy it, execute the command below on the Ceph Admin node.

ceph-deploy mon create-initial

This command will generate a number of keys in the current working directory.

...

[ceph_deploy.gatherkeys][INFO ] Storing ceph.client.admin.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mds.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mgr.keyring

[ceph_deploy.gatherkeys][INFO ] keyring 'ceph.mon.keyring' already exists

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-osd.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-rgw.keyring

[ceph_deploy.gatherkeys][INFO ] Destroy temp directory /tmp/tmpIzZYbV

Copy the Ceph Configuration files and Keys

Next, copy the configuration file and admin keys gathered above to all your Ceph nodes so that you can use the ceph CLI without having to specify the monitor address and ceph.client.admin.keyring each time you execute a command.

ceph-deploy admin ceph-osd01 ceph-osd02 ceph-osd03

...

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-osd01

[ceph-osd01][DEBUG ] connection detected need for sudo

[ceph-osd01][DEBUG ] connected to host: ceph-osd01

[ceph-osd01][DEBUG ] detect platform information from remote host

[ceph-osd01][DEBUG ] detect machine type

[ceph-osd01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-osd02

[ceph-osd02][DEBUG ] connection detected need for sudo

[ceph-osd02][DEBUG ] connected to host: ceph-osd02

[ceph-osd02][DEBUG ] detect platform information from remote host

[ceph-osd02][DEBUG ] detect machine type

[ceph-osd02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-osd03

[ceph-osd03][DEBUG ] connection detected need for sudo

[ceph-osd03][DEBUG ] connected to host: ceph-osd03

[ceph-osd03][DEBUG ] detect platform information from remote host

[ceph-osd03][DEBUG ] detect machine type

[ceph-osd03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

Deploy Ceph Manager Daemon

Once you have copied the Ceph configuration files and keys to all the nodes, deploy a Ceph manager daemon by executing the command below.

Note that our Ceph Manager resides on Ceph node 01, ceph-osd01.

ceph-deploy mgr create ceph-osd01

Attach Logical Storage Volumes to Ceph OSD Nodes

In our setup, each OSD node has an unallocated logical volume of 4 GB to be used as the backing store for its OSD daemon.

To attach the logical volume to an OSD node, run the command below. Replace vg01/lv01 with your volume group and logical volume accordingly.

ceph-deploy osd create --data vg01/lv01 ceph-osd01

...

[ceph-osd01][WARNIN] --> ceph-volume lvm activate successful for osd ID: 0

[ceph-osd01][WARNIN] --> ceph-volume lvm create successful for: vg01/lv01

[ceph-osd01][INFO ] checking OSD status...

[ceph-osd01][DEBUG ] find the location of an executable

[ceph-osd01][INFO ] Running command: sudo /usr/bin/ceph --cluster=ceph osd stat --format=json

[ceph_deploy.osd][DEBUG ] Host ceph-osd01 is now ready for osd use.

Repeat the same for the other OSD nodes.

ceph-deploy osd create --data vg01/lv01 ceph-osd02

ceph-deploy osd create --data vg01/lv01 ceph-osd03

The Ceph Nodes are now ready for OSD use.
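When every OSD node uses the same volume group and logical volume name, as in this setup, the per-node commands above can be wrapped in a loop. This is only a sketch: the function name create_osds and the CEPH_DEPLOY override variable are illustrative assumptions, while the ceph-deploy osd create --data invocation is the same one used above.

```shell
# Sketch: create one OSD per node from the same VG/LV name.
# CEPH_DEPLOY is a hypothetical override hook; it defaults to the real tool.
CEPH_DEPLOY="${CEPH_DEPLOY:-ceph-deploy}"

# create_osds VG/LV NODE...
# Runs "osd create" once per node and stops at the first failure.
create_osds() {
    data="$1"
    shift
    for node in "$@"; do
        "$CEPH_DEPLOY" osd create --data "$data" "$node" || return 1
    done
}
```

Run it as cephadmin from the cluster directory, for example: create_osds vg01/lv01 ceph-osd01 ceph-osd02 ceph-osd03. Stopping at the first failure keeps the error for that node at the end of the output.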

Check Ceph Cluster Health

To verify the health status of the ceph cluster, simply execute the command ceph health on each OSD node.

To check Ceph cluster health status from the admin node;

cephadmin@ceph-admin:~$ ssh ceph-osd01 sudo ceph health

HEALTH_OK

Repeat the same for other nodes.
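Repeating the check by hand on each node can be scripted. The sketch below runs sudo ceph health over SSH on each node and maps the leading status word (HEALTH_OK, HEALTH_WARN or HEALTH_ERR) to an exit code. The function names health_rc and check_health, the SSH_CMD override variable and the exit-code mapping are illustrative choices, not part of Ceph.

```shell
# Sketch: run "ceph health" on several nodes and summarize the worst result.
# SSH_CMD is a hypothetical override hook; it defaults to the real ssh client.
SSH_CMD="${SSH_CMD:-ssh}"

# health_rc STATUS_TEXT
# Maps the leading status word to an exit code: 0 = OK, 1 = WARN, 2 = anything else.
health_rc() {
    case "$1" in
        HEALTH_OK*)   return 0 ;;
        HEALTH_WARN*) return 1 ;;
        *)            return 2 ;;   # HEALTH_ERR, empty or unexpected output
    esac
}

# check_health NODE...
# Prints "node: status" per node; returns the worst code seen.
check_health() {
    worst=0
    for node in "$@"; do
        # A failed SSH call leaves the status empty, which counts as an error.
        status="$("$SSH_CMD" "$node" sudo ceph health </dev/null)" || status=""
        rc=0
        health_rc "$status" || rc=$?
        echo "$node: ${status:-unreachable}"
        if [ "$rc" -gt "$worst" ]; then worst="$rc"; fi
    done
    return "$worst"
}
```

For example, check_health ceph-osd01 ceph-osd02 ceph-osd03 from the admin node prints one line per node and returns 0 only when every node reports HEALTH_OK.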

You can also check the cluster health status directly on the Ceph nodes.

cephadmin@ceph-osd01:~$ sudo ceph health

HEALTH_OK

To check the complete cluster status, run ceph -s, ceph --status or ceph status.

From the Ceph Admin node;

cephadmin@ceph-admin:~$ ssh ceph-osd01 sudo ceph -s

cluster:

id: ecc4e749-830a-4ec5-8af9-22fcb5cadbca

health: HEALTH_OK

services:

mon: 1 daemons, quorum ceph-osd01

mgr: ceph-osd01(active)

osd: 3 osds: 3 up, 3 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 9.0 GiB / 12 GiB avail

pgs:

To check the complete cluster status from the Ceph nodes;

cephadmin@ceph-osd01:~$ sudo ceph -s

cluster:

id: ecc4e749-830a-4ec5-8af9-22fcb5cadbca

health: HEALTH_OK

services:

mon: 1 daemons, quorum ceph-osd01

mgr: ceph-osd01(active)

osd: 3 osds: 3 up, 3 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 9.0 GiB / 12 GiB avail

pgs:

Uninstalling the Ceph Packages

If for some reason you want to restart the Ceph deployment, remove the Ceph packages and delete the Ceph configuration data on all the nodes by executing the commands below on the Ceph Admin node.

Replace the Ceph nodes accordingly.

ceph-deploy purge ceph-osd01 ceph-osd02 ceph-osd03

ceph-deploy purgedata ceph-osd01 ceph-osd02 ceph-osd03

ceph-deploy forgetkeys

rm ceph.*

Expanding Ceph Cluster on Ubuntu 18.04

Now that your basic Ceph cluster is up and running, you can expand it to ensure reliability and high availability of the Ceph cluster.

Deploying Additional Ceph Monitors on Ceph Cluster

You can add more Ceph monitor daemons (ceph-mon) to your Ceph cluster nodes.
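Monitors establish a quorum by majority, so the number of monitors determines how many monitor failures the cluster can survive. The arithmetic can be sketched as follows; the function names are illustrative and not part of Ceph.

```shell
# Sketch: majority arithmetic for a set of N monitors.

# mon_quorum_size N
# Smallest number of monitors that forms a majority of N.
mon_quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# mon_failures_tolerated N
# How many monitors can be down while a majority is still up.
mon_failures_tolerated() {
    echo $(( $1 - ($1 / 2 + 1) ))
}
```

With two monitors, mon_failures_tolerated 2 prints 0, so both must stay up. With three monitors it prints 1, which is why odd monitor counts of three or more are commonly used.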

For example, to add a Ceph Monitor to Ceph node 02, ceph-osd02, run the commands below from the Ceph Admin node.

su - cephadmin

cd kifarunix-cluster/

Add your public network to the ceph.conf configuration file, even if your nodes have only a single network interface.

Note the line; public network = 192.168.2.0/24

vim ceph.conf

[global]

fsid = ecc4e749-830a-4ec5-8af9-22fcb5cadbca

mon_initial_members = ceph-osd01

mon_host = 192.168.2.114

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

public network = 192.168.2.0/24

Next, add the Ceph monitor to your Ceph node.

ceph-deploy mon add ceph-osd02

You can check the Ceph monitor quorum status by running the command below from the Ceph OSD nodes.

ceph quorum_status --format json-pretty

Deploying Additional Ceph Managers on Ceph Cluster

The Ceph manager daemon (ceph-mgr) runs alongside the monitor daemons to provide additional monitoring and interfaces to external monitoring and management systems. Managers operate in an active/standby arrangement, so that if the active daemon or its host fails, a standby can take over without interrupting service.
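To find out in a script which manager is currently active, one option is to read the mgr: line of the ceph -s output. The sketch below assumes the Mimic-style format seen in this guide, mgr: NAME(active); other Ceph releases may print this line differently, and the function name active_mgr is illustrative.

```shell
# Sketch: print the name of the active manager from "ceph -s" text on stdin.
# Assumes a line of the form "mgr: NAME(active)" as printed by Ceph Mimic.
active_mgr() {
    # In basic regular expressions the unescaped parentheses in "(active)"
    # match literally; \( \) captures the manager name.
    sed -n 's/^[[:space:]]*mgr:[[:space:]]*\([^ (]*\)(active).*/\1/p'
}
```

For example, ssh ceph-osd01 sudo ceph -s | active_mgr prints the active manager's name, and prints nothing if no line in the expected format is found.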

You can add more Ceph managers to the Cluster by running the command;

ceph-deploy mgr create {ceph-node1} {ceph-node2}

For example, to deploy an extra Ceph Manager on our Ceph node 2, ceph-osd02, from the Ceph Admin node;

su - cephadmin

cd kifarunix-cluster/

ceph-deploy mgr create ceph-osd02

If you check the Ceph cluster status, you should see that one of the managers is active while the other is on standby.

cephadmin@ceph-admin:~/kifarunix-cluster$ ssh ceph-osd01 sudo ceph -s

cluster:

id: ecc4e749-830a-4ec5-8af9-22fcb5cadbca

health: HEALTH_OK

services:

mon: 2 daemons, quorum ceph-osd01,ceph-osd02

mgr: ceph-osd01(active), standbys: ceph-osd02

osd: 3 osds: 3 up, 3 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 9.0 GiB / 12 GiB avail

pgs:

There you go. Your Ceph storage cluster is now ready to provide object storage, block storage or file system storage.

Install and Configure Ceph Block Device on Ubuntu 18.04

That marks the end of our guide on how to install and set up a three-node Ceph Storage Cluster on Ubuntu 18.04.
