In this guide, you will learn how to configure and use the Ceph Block Device on Linux clients. Ceph provides various interfaces through which clients can access storage, including Ceph Object Storage (for object storage), the Ceph File System (a distributed, POSIX-compliant file system) and the RADOS Block Device (RBD, for block-based storage).

Ceph RBD (RADOS Block Device) block storage stripes virtual disks over objects within a Ceph storage cluster, distributing data and workload across all available devices for extreme scalability and performance. RBD disk images are thinly provisioned, support both read-only snapshots and writable clones, and can be asynchronously mirrored to remote Ceph clusters in other data centers for disaster recovery or backup, making Ceph RBD the leading choice for block storage in public/private cloud and virtualization environments.

RBD integrates well with hypervisors such as KVM/QEMU, and with cloud computing platforms such as OpenStack and CloudStack, which rely on libvirt and QEMU to integrate with Ceph block devices.


Configure and Use Ceph Block Device on Linux Clients

Deploy Ceph Storage Cluster

Before you can proceed, ensure that you have a running Ceph storage cluster.

In our previous guide, we learned how to deploy a three-node Ceph storage cluster. Check the link below;

Install and Setup Ceph Storage Cluster on Ubuntu

Setup Linux Client for Ceph Block Device Storage Use

Copy SSH Keys to Linux Client

Copy the Ceph SSH public key generated by the bootstrap command to the Linux client that will use the Ceph block device. Ensure that root login over SSH is permitted on the client.

sudo ssh-copy-id -f -i /etc/ceph/ceph.pub root@linux-client-ip-or-hostname

Install Ceph Packages on the Client

Log in to the client and install the Ceph command line tools.

ssh root@linux-client-ip-or-hostname

Install the Ceph client packages;

Ubuntu/Debian;

sudo apt install apt-transport-https \
  ca-certificates \
  curl \
  gnupg-agent \
  software-properties-common -y

wget -q -O- 'https://download.ceph.com/keys/release.asc' | \
  gpg --dearmor -o /etc/apt/trusted.gpg.d/cephadm.gpg

echo deb https://download.ceph.com/debian-reef/ $(lsb_release -sc) main \
  > /etc/apt/sources.list.d/cephadm.list

apt update

apt install ceph-common

CentOS/RHEL based distros;

Check which Ceph release your cluster is running (ceph versions on the admin node lists the versions of the running daemons). For example, I am running Ceph Reef, 18.2.0.

Install the EPEL repository;

dnf install epel-release

Install the Ceph repository. Replace {ceph-release} with your Ceph release version, e.g. 18.2.0. Similarly, replace {distro} with your OS release string, e.g. el9.

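cat > /etc/yum.repos.d/ceph.repo << 'EOL'
[ceph]
name=Ceph packages for $basearch
baseurl=https://download.ceph.com/rpm-{ceph-release}/{distro}/$basearch
enabled=1
priority=2
gpgcheck=1
gpgkey=https://download.ceph.com/keys/release.asc
EOL

Quoting the 'EOL' delimiter stops the shell from expanding $basearch, which must be written literally so that dnf can substitute it.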

You can get the {distro} string for your system using the command below;

echo "el$(grep VERSION_ID /etc/os-release | cut -d= -f2 | awk -F. '{print $1}' | tr -d '"')"

Your RHEL Ceph repo file should look like the one below;

cat /etc/yum.repos.d/ceph.repo

[ceph]
name=Ceph packages for $basearch
baseurl=https://download.ceph.com/rpm-18.2.0/el9/$basearch
enabled=1
priority=2
gpgcheck=1
gpgkey=https://download.ceph.com/keys/release.asc
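If you prefer to generate the repository file rather than edit the placeholders by hand, the snippet below is a minimal sketch of the two steps above. It assumes a RHEL-family client with a standard /etc/os-release and the download.ceph.com URL layout used in this guide; distro_tag and ceph_repo are hypothetical helper names, not part of any Ceph tooling.

```shell
# Hypothetical helpers: derive the {distro} string and print a ceph.repo file.

# Print the "elN" string from an os-release file (default: /etc/os-release).
distro_tag() {
  local osrel="${1:-/etc/os-release}" major
  # VERSION_ID="9.2" -> 9
  major=$(grep '^VERSION_ID=' "$osrel" | cut -d= -f2 | tr -d '"' | cut -d. -f1)
  printf 'el%s\n' "$major"
}

# Print ceph.repo contents for a Ceph release (e.g. 18.2.0) and distro (e.g. el9).
# $basearch is escaped so that dnf, not the shell, expands it.
ceph_repo() {
  local release="$1" distro="$2"
  cat <<EOF
[ceph]
name=Ceph packages for \$basearch
baseurl=https://download.ceph.com/rpm-${release}/${distro}/\$basearch
enabled=1
priority=2
gpgcheck=1
gpgkey=https://download.ceph.com/keys/release.asc
EOF
}
```

On an el9 client, ceph_repo 18.2.0 "$(distro_tag)" > /etc/yum.repos.d/ceph.repo would write the same file as the one shown above.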

Next, install the Ceph packages;

dnf install ceph-common -y

Configure Ceph on Linux Client

Copy the Ceph configuration file from a Ceph monitor or the Ceph admin node.

Similarly, you need to copy the Ceph client keyring. This is necessary to authenticate the client to the Ceph cluster.

cephadmin@ceph-admin:~$ sudo scp /etc/ceph/{ceph.conf,ceph.client.admin.keyring} root@linux-client-ip-or-hostname:/etc/ceph/

These are the contents of the Ceph configuration file.

cat /etc/ceph/ceph.conf

# minimal ceph.conf for 70d227de-83e3-11ee-9dda-ff8b7941e415

[global]

fsid = 70d227de-83e3-11ee-9dda-ff8b7941e415

mon_host = [v2:192.168.122.240:3300/0,v1:192.168.122.240:6789/0] [v2:192.168.122.45:3300/0,v1:192.168.122.45:6789/0] [v2:192.168.122.231:3300/0,v1:192.168.122.231:6789/0] [v2:192.168.122.49:3300/0,v1:192.168.122.49:6789/0]

- fsid: Specifies the Universally Unique Identifier (UUID) of the Ceph cluster. In this case, it’s 70d227de-83e3-11ee-9dda-ff8b7941e415.

- mon_host: Lists the monitor (MON) nodes in the cluster and their addresses. Each entry gives a monitor's IP address in both the v2 and v1 messenger formats, along with port numbers (3300 for v2 and 6789 for v1).
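To see how the mon_host value breaks down, the sketch below extracts just the v2 endpoints from it. It assumes IPv4 addresses in the bracketed format shown above; mon_v2_endpoints is a hypothetical helper name.

```shell
# Hypothetical helper: print one "IP:port" line per v2 monitor endpoint
# found in a mon_host value (IPv4 only, format as in the sample ceph.conf).
mon_v2_endpoints() {
  # Keep only the "v2:IP:port" part of each entry, then drop the "v2:" prefix.
  printf '%s\n' "$1" | grep -o 'v2:[0-9.]*:[0-9]*' | sed 's/^v2://'
}
```

For example, mon_v2_endpoints "$(grep mon_host /etc/ceph/ceph.conf)" prints four lines for the sample cluster, one per monitor, each on port 3300.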

Create Block Device Pools

In order to use the Ceph block device on your clients, you need to create a pool for the RADOS Block Device (RBD) and initialize it.

- A pool is a logical group for storing objects. Pools manage placement groups, replicas and the CRUSH rule for the pool.

- A placement group (PG) is a fragment of a logical object pool that places objects as a group into OSDs. The Ceph client calculates which placement group an object should be stored in.

There are two types of Ceph OSD pools;

- replicated pool or

- erasure-coded pool

In a replicated pool, which is usually the default pool type, data is copied from the primary OSD to multiple other OSDs in the cluster. By default, Ceph creates two replicas of an object (a total of three copies, or a pool size of 3). As a result, replicated pools require more raw storage.

On the other hand, in an erasure-coded pool, Ceph uses erasure code algorithms to break object data into two types of chunks that are written across different OSDs;

- data chunks, also known as data blocks. The number of data chunks is denoted by k.

- parity chunks, also known as parity blocks. The number of parity chunks is denoted by m. If a drive fails or becomes corrupted, the parity chunks are used to rebuild the data; as such, m specifies how many OSDs can fail without causing data loss.

Ensure Cluster is in Good Health

Before you proceed further, ensure the cluster is healthy (HEALTH_OK) and all placement groups are in the active+clean state.

ceph -s

Sample output;

cluster:

id: 70d227de-83e3-11ee-9dda-ff8b7941e415

health: HEALTH_OK

services:

mon: 4 daemons, quorum ceph-admin,ceph-mon,ceph-osd1,ceph-osd2 (age 21h)

mgr: ceph-admin.ykkdly(active, since 22h), standbys: ceph-mon.grwzmv

osd: 3 osds: 3 up (since 21h), 3 in (since 21h)

data:

pools: 1 pools, 1 pgs

objects: 2 objects, 449 KiB

usage: 80 MiB used, 300 GiB / 300 GiB avail

pgs: 1 active+clean
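If you script this check, a minimal sketch could parse the ceph -s text shown above and succeed only when the health is HEALTH_OK and every PG is reported as active+clean. It relies on the output layout of this Reef cluster, which may differ in other releases, and it conservatively treats any other PG state (including active+clean+scrubbing) as not ready; cluster_ready is a hypothetical function name.

```shell
# Hypothetical helper: read `ceph -s` output on stdin; return 0 only if the
# cluster is HEALTH_OK and all PGs are active+clean.
cluster_ready() {
  local status total clean
  status=$(cat)
  printf '%s\n' "$status" | grep -q 'health: HEALTH_OK' || return 1
  # "pools:   1 pools, 1 pgs" -> total number of PGs
  total=$(printf '%s\n' "$status" | sed -n 's/.*pools: *[0-9]* pools, *\([0-9]*\) pgs.*/\1/p')
  # "pgs:     1 active+clean" -> number of PGs in the active+clean state
  clean=$(printf '%s\n' "$status" | sed -n 's/.*pgs: *\([0-9]*\) active+clean *$/\1/p')
  [ -n "$total" ] && [ "$total" = "$clean" ]
}
```

For example, ceph -s | cluster_ready && echo ready, or until ceph -s | cluster_ready; do sleep 10; done to wait for the cluster to settle.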

Create Ceph Block Device Pool on Admin Node

Ceph creates a default erasure code profile when initializing a cluster. The default profile defines k=2 and m=2, meaning Ceph will spread the object data over four OSDs (k+m=4) and can lose any two of those OSDs without losing data. This is comparable to the redundancy of a replicated pool of size 3, but it needs only 2 TB of raw capacity to store 1 TB of data instead of 3 TB.

Erasure-coded pools reduce the amount of disk space required to ensure data durability, but they are computationally more expensive than replication.
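The capacity difference is simple arithmetic: a replicated pool stores size full copies of the data, while an erasure-coded pool stores (k+m)/k times the data. The sketch below, with hypothetical function names and integer GB values for simplicity, compares the default replicated size of 3 with a k=2, m=2 profile.

```shell
# Hypothetical helpers: raw capacity (GB) consumed to store DATA_GB of data.
raw_replicated() { # args: data_gb replica_size
  echo $(( $1 * $2 ))
}
raw_ec() { # args: data_gb k m; overhead factor is (k+m)/k
  echo $(( $1 * ($2 + $3) / $2 ))
}
```

Here raw_replicated 1000 3 prints 3000 while raw_ec 1000 2 2 prints 2000, i.e. about one third less raw capacity for the same 1 TB of data.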

To create Ceph pool use the command below;

ceph osd pool create {pool-name} pg_num pgp_num

Where:

- {pool-name} is the name of the Ceph pool you are creating. The name rbd is recommended, and we will use rbd in this guide.

- pg_num is the total number of placement groups for the pool. It determines the number of actual placement groups (PGs) that data objects will be divided into. See below for how to determine the number of PGs.

- pgp_num specifies the total number of placement groups for placement purposes. It should be equal to pg_num.

So, let’s see how to calculate the number of PGs. You can use the formula;

Total PGs = (No. of OSDs x 100) / pool size

Where pool size refers to:

- number of replicas for replicated pools or

- the K+M sum for erasure-coded pools.

The value of PGs should be rounded up to the next power of two (2^x). Rounding up is optional, but it is recommended because it helps CRUSH balance objects evenly across placement groups.
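The formula and the rounding rule can be sketched as a small shell function. pg_count is a hypothetical name, and it only reproduces the rule of thumb described here; it does not account for other pools sharing the same OSDs.

```shell
# Hypothetical helper: Total PGs = (OSDs x 100) / pool size, rounded up to the
# next power of two. pool_size is the replica count, or k+m for EC pools.
pg_count() {
  local osds=$1 pool_size=$2 target pgs=1
  # Integer ceiling division, so a fractional result is rounded up.
  target=$(( (osds * 100 + pool_size - 1) / pool_size ))
  # Double until we reach or pass the target.
  while [ "$pgs" -lt "$target" ]; do
    pgs=$(( pgs * 2 ))
  done
  echo "$pgs"
}
```

For example, pg_count 6 3 prints 256 and pg_count 3 4 prints 128, matching the worked examples in this section.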

By default, Ceph creates two replicas of an object (a total of three copies, i.e. a pool size of 3).

To calculate the number of PGs using the default replica size, for example on a cluster with 6 OSDs;

PGs = (6 x 100)/3 = 200

Rounded up to the next power of two, this gives 256 PGs.

You can always get the replica size using the command, ceph osd dump | grep 'replicated size'.

To calculate the total number of PGs for an erasure-coded pool, you need the K and M values of its erasure code profile, as follows.

Get your erasure coded pool profile;

sudo ceph osd erasure-code-profile ls

Output;

default

Next, get the erasure code profile information using the command ceph osd erasure-code-profile get <profile>.

sudo ceph osd erasure-code-profile get default

k=2

m=2

plugin=jerasure

technique=reed_sol_van

Now, the sum of K and M is 2+2=4.
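If you want to read K and M straight from the profile rather than by eye, a sketch like the one below sums them from the key=value output shown above. ec_pool_size is a hypothetical helper name, and it assumes the profile output contains k= and m= lines as in this example.

```shell
# Hypothetical helper: read `ceph osd erasure-code-profile get <profile>`
# output on stdin and print k+m.
ec_pool_size() {
  awk -F= '$1 == "k" { k = $2 } $1 == "m" { m = $2 } END { print k + m }'
}
```

For the default profile above, sudo ceph osd erasure-code-profile get default | ec_pool_size should print 4.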

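Hence, for our cluster of 3 OSDs, PGs = (3 × 100)/4 = 300/4 = 75. Rounding up to the next power of two gives 2^7 = 128, so our pool should have 128 PGs. (The pool created below is a replicated pool with the default size of 3, for which (3 × 100)/3 = 100 also rounds up to 128.)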

Now, let’s create the block device pool;

ceph osd pool create rbd 128 128

You can list OSD pools using the command;

ceph osd pool ls

Or;

ceph osd lspools

Initialize Block Device OSD Pool on Admin Node

Once you have created the OSD pool, you can initialize it using the command, rbd pool init <pool-name>;

sudo rbd pool init rbd

Enable RADOS Block Device Application for OSD Pool

Associate the pool created with the respective application to prevent unauthorized types of clients from writing data to the pool. An application can be;

- cephfs (Ceph File System).

- rbd (Ceph Block Device).

- rgw (Ceph Object Gateway).

To associate the pool created above with RBD, simply execute the command, ceph osd pool application enable <pool> <app> [--yes-i-really-mean-it]. Replace the name of the pool accordingly.

sudo ceph osd pool application enable rbd rbd

Command output;

enabled application 'rbd' on pool 'rbd'

Creating Block Device Images on Client

Log in to the client to execute the following commands.

Before you can add a block device to a node, you need to create an image (virtual block device) for it in the Ceph storage cluster. Run the command below on a Ceph client;

rbd create <image-name> --size <megabytes> --pool <pool-name>

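(The size is given in megabytes by default; unit suffixes such as G are also accepted, as in the example below. For more options, run rbd help create.)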

For example, to create a block device image of 10GB in the pool created above, rbd, simply run the command;

sudo rbd create disk01 --size 10G --pool rbd

To list the images in your pool;

sudo rbd ls -l rbd

NAME SIZE PARENT FMT PROT LOCK

disk01 10 GiB 2

To retrieve information about the image created, run the command;

sudo rbd --image disk01 -p rbd info

rbd image 'disk01':

size 10 GiB in 2560 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 5f31272f93d8

block_name_prefix: rbd_data.5f31272f93d8

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Thu Nov 16 18:17:08 2023

access_timestamp: Thu Nov 16 18:17:08 2023

modify_timestamp: Thu Nov 16 18:17:08 2023
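The "size 10 GiB in 2560 objects" line follows from the object size: order 22 means objects of 2^22 bytes (4 MiB), so the image is striped over size / 2^order objects. Because RBD images are thin-provisioned, this is the maximum number of objects; they are created only as data is written. rbd_object_count is a hypothetical helper that illustrates the arithmetic.

```shell
# Hypothetical helper: number of RADOS objects backing an RBD image of
# SIZE_BYTES with a given object order (default 22, i.e. 4 MiB objects).
rbd_object_count() {
  local size_bytes=$1 order=${2:-22} obj
  obj=$(( 1 << order ))
  # Round up so a partial last object is still counted.
  echo $(( (size_bytes + obj - 1) / obj ))
}
```

For example, rbd_object_count $((10 * 1024 * 1024 * 1024)) prints 2560, matching the rbd info output above.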

To remove an image from the pool;

sudo rbd rm disk01 -p rbd

To move it to trash for later deletion;

sudo rbd trash move rbd/disk01

To restore an image from the trash to the pool, first obtain the ID assigned to the image in the trash, then restore the image using that ID;

sudo rbd trash list rbd

38c8adcf4ca disk01

Where rbd is the name of the pool.

sudo rbd trash restore rbd/38c8adcf4ca

To permanently delete an image from the trash;

rbd trash remove rbd/38c8adcf4ca
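Copying the ID by hand works, but when scripting you could look it up from the rbd trash list output, which prints one image per line as the ID followed by the name. trash_id_for is a hypothetical helper; if several trashed images share the same name, it prints every matching ID.

```shell
# Hypothetical helper: read `rbd trash list <pool>` output on stdin and
# print the trash ID(s) of the image with the given name.
trash_id_for() {
  awk -v name="$1" '$2 == name { print $1 }'
}
```

For example, sudo rbd trash list rbd | trash_id_for disk01 prints 38c8adcf4ca for the trash contents shown above.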

Mapping Images to Block Devices

After creating an image, you can map it to a block device on the client.

sudo rbd map disk01 --pool rbd

You will see an output like;

/dev/rbd0

To show the block device images mapped through the kernel module, use the rbd command;

sudo rbd showmapped

id pool namespace image snap device

0 rbd disk02 - /dev/rbd0
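When several images are mapped, you can pick the device for a given pool and image out of the rbd showmapped output. The sketch below assumes the column order shown above (id, pool, namespace, image, snap, device); because the namespace column is blank for images outside a namespace, it reads the image and device relative to the end of each line. rbd_device_for is a hypothetical helper name.

```shell
# Hypothetical helper: read `rbd showmapped` output on stdin and print the
# device (e.g. /dev/rbd0) for POOL/IMAGE. The namespace column may be empty,
# so the image and device are taken relative to the last field.
rbd_device_for() {
  awk -v p="$1" -v i="$2" 'NR > 1 && $2 == p && $(NF - 2) == i { print $NF }'
}
```

For example, sudo rbd showmapped | rbd_device_for rbd disk01 prints the device node to pass to mkfs or mount.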

To unmap a block device image, use the command, rbd unmap /dev/rbd/{poolname}/{imagename} for example;

sudo rbd unmap /dev/rbd/rbd/disk01

Create File System on Ceph Block Device

The mapped Ceph block device is now ready (if you unmapped the image above, map it again first). All that is left is to create a file system on it and mount it to make it usable.

For example, to create an XFS file system on it (you can use your preferred filesystem type);

sudo mkfs.xfs /dev/rbd0 -L cephdisk01

meta-data=/dev/rbd0 isize=512 agcount=16, agsize=163840 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1 bigtime=0 inobtcount=0

data = bsize=4096 blocks=2621440, imaxpct=25

= sunit=16 swidth=16 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=16 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

Discarding blocks...Done.

Mounting Ceph Block Device on Linux Client

You can now mount the block device. For example, to mount it under /media/ceph directory;

sudo mkdir /media/ceph

sudo mount /dev/rbd0 /media/ceph

Alternatively, you can mount it using its /dev/rbd/{poolname}/{imagename} path;

sudo mount /dev/rbd/rbd/disk01 /media/ceph/

Check mounted Filesystems;

df -hT -P /dev/rbd0

Filesystem Type Size Used Avail Use% Mounted on

/dev/rbd0 xfs 10G 105M 9.9G 2% /media/ceph

There you go.

If you check the Ceph cluster health;

ceph --status

cluster:

id: 70d227de-83e3-11ee-9dda-ff8b7941e415

health: HEALTH_OK

services:

mon: 4 daemons, quorum ceph-admin,ceph-mon,ceph-osd1,ceph-osd2 (age 23h)

mgr: ceph-admin.ykkdly(active, since 24h), standbys: ceph-mon.grwzmv

osd: 3 osds: 3 up (since 23h), 3 in (since 23h)

data:

pools: 2 pools, 33 pgs

objects: 45 objects, 14 MiB

usage: 236 MiB used, 300 GiB / 300 GiB avail

pgs: 33 active+clean

progress:

Global Recovery Event (15s)

[===========================.]

If the Global Recovery Event persists even though the cluster health is HEALTH_OK, you can try to clear the progress events;

ceph progress clear

Then check the status again;

ceph -s

cluster:

id: 70d227de-83e3-11ee-9dda-ff8b7941e415

health: HEALTH_OK

services:

mon: 4 daemons, quorum ceph-admin,ceph-mon,ceph-osd1,ceph-osd2 (age 23h)

mgr: ceph-admin.ykkdly(active, since 24h), standbys: ceph-mon.grwzmv

osd: 3 osds: 3 up (since 23h), 3 in (since 23h)

data:

pools: 2 pools, 33 pgs

objects: 45 objects, 14 MiB

usage: 236 MiB used, 300 GiB / 300 GiB avail

pgs: 33 active+clean

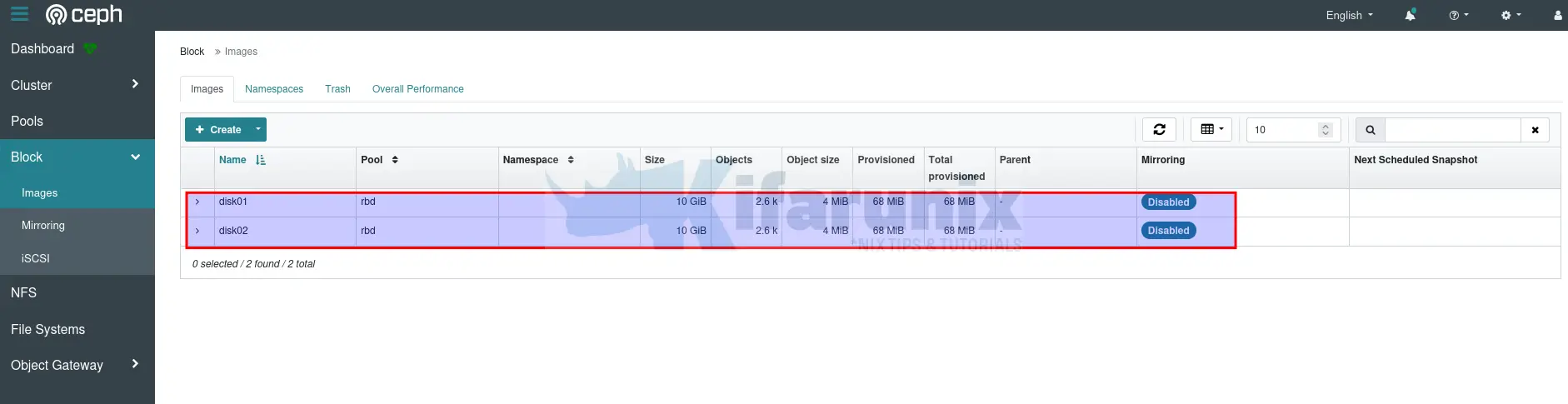

Check the Image Status on Ceph Dashboard

Log in to the Ceph dashboard and check the status of the virtual block devices.

On the dashboard, navigate to Block > Images.

Click the drop-down arrow next to each disk to expand it and view more details.

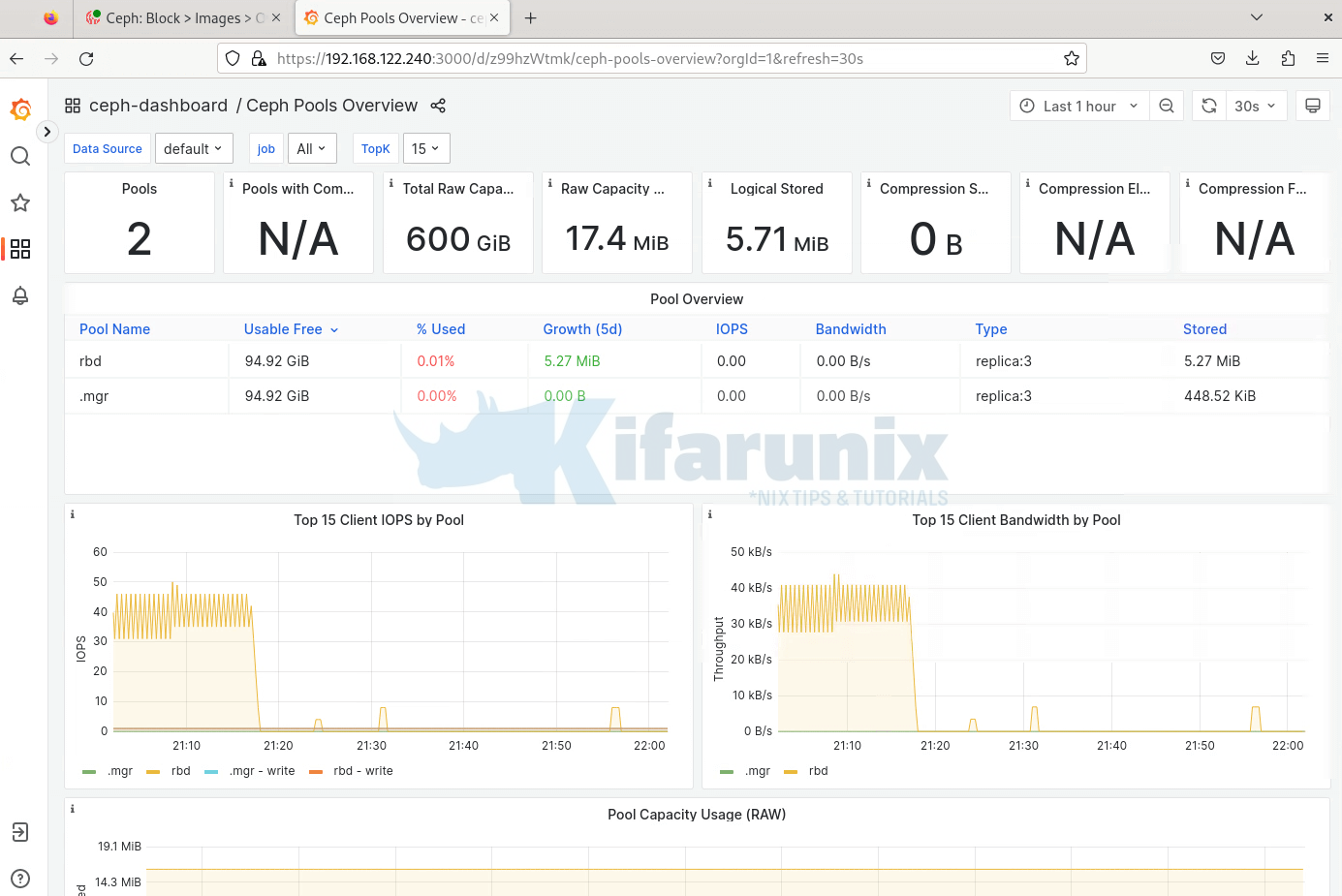

You can also access the Grafana dashboards (https://ceph-admin:3000). Replace the address accordingly.

That marks the end of our guide on how to configure and use Ceph Block Device on Linux.
