In this blog post, you’ll learn how to deploy a multinode OpenShift cluster using UPI (User Provisioned Infrastructure). UPI is one of the deployment methods provided by OpenShift that gives you complete control over your infrastructure setup. Unlike Installer Provisioned Infrastructure (IPI), where the installer handles everything, UPI requires you to manually provision and configure all components such as cluster machines/servers, networking, load balancers, and DNS. This method is ideal for custom environments, on-premises setups, or situations where automation is limited. By the end of this guide, you’ll have a fully functional, production-grade OpenShift cluster running across multiple nodes, tailored to your specific environment.

How to Deploy Multinode OpenShift Cluster Using UPI

Different Methods of Deploying Red Hat OpenShift

OpenShift Container Platform provides four primary methods for deploying a cluster, each suited to different environments and levels of automation:

- Interactive Installation (Assisted Installer): Uses a web-based installer, ideal for internet-connected setups. It offers smart defaults, pre-flight validations, and a REST API for automation.

- Local Agent-based Installation: Designed for air-gapped or restricted environments. It uses a downloadable CLI agent and offers similar benefits to the Assisted Installer.

- Automated Installation (Installer-Provisioned Infrastructure / IPI): The installer automatically provisions and manages infrastructure using technologies like BMC. Works in both connected and restricted environments.

- Full Control (User-Provisioned Infrastructure / UPI): You manually set up and manage all infrastructure components. Ideal for custom, complex, or tightly controlled environments.

In this guide, we’ll explore the UPI method, where you provision and configure the infrastructure yourself.

Basic Architecture Overview

Below is a basic architecture depicting our OpenShift Container Platform cluster and its core components.

                                                            ┌───────────────────┐
                                                            │   Bastion Host    │
                                                      ┌─────┤   10.184.10.75    │
                                                      │     └───────────────────┘
                                                      │
                                                      │     ┌─────────────────────┐
                                                      │     │     DNS Server      │
                             ┌─────────────────┐      ├─────┤    10.184.10.51     │
                             │     Gateway     │      │     │  ns1.kifarunix.com  │
                             │ 192.168.122.79  │      │     └─────────────────────┘
                             │  10.184.10.10   ├──────┘
                             │  10.185.10.10   │            ┌─────────────────────┐
                             └────────┬────────┘            │   DHCP/PXE Server   │
                                      ├─────────────────────┤    10.185.10.52     │
                                      │                     │ dhcp.kifarunix.com  │
                           ┌──────────▼───────────┐         └─────────────────────┘
                           │     External LB      │
                           │    (API/Ingress)     │
                           │ lb.ocp.kifarunix.com │
                           │    10.185.10.120     │
                           └──────────┬───────────┘
                                      │
        ┌────────────────────┬────────┴────────┬─────────────────┐
        │                    │                 │                 │
┌───────▼───────┐    ┌───────▼───────┐ ┌───────▼───────┐ ┌───────▼───────┐
│   bootstrap   │    │   Master-01   │ │   Master-02   │ │   Master-03   │
│   temporary   │    │   (Control)   │ │   (Control)   │ │   (Control)   │
└───────┬───────┘    └───────┬───────┘ └───────┬───────┘ └───────┬───────┘
        │                    │                 │                 │
        └────────────────────┴───────┬─────────┴─────────────────┘
                                     │
                     ┌───────────────┼───────────────┐
                     │               │               │
             ┌───────▼───────┐ ┌─────▼─────┐ ┌───────▼───────┐
             │   Worker-01   │ │ Worker-02 │ │   Worker-03   │
             │   (Compute)   │ │ (Compute) │ │   (Compute)   │
             └───────────────┘ └───────────┘ └───────────────┘

Prerequisites for Deploying OpenShift Cluster on UPI

Internet Access

Internet connectivity is required to:

- Access OpenShift Cluster Manager for downloading the installer and managing subscriptions.

- Pull required container images from Quay.io container registry.

- Perform cluster updates.

Registering for OpenShift to Access Required Files

To get started with OpenShift and deploy your cluster, you’ll need to register for access to the necessary installation files and tools. In this guide, we’ll walk you through the process of obtaining these resources using the OpenShift Self-Managed Trial version. The trial version grants you a 60-day evaluation period, allowing you to explore OpenShift’s full capabilities and configure clusters for evaluation purposes.

Steps to Register for OpenShift and access required files:

- Create a Red Hat Account

  Before you can register for the OpenShift trial, you need a valid Red Hat account. If you don’t already have one, visit Red Hat’s registration page to create an account.

- Log in to Your Red Hat Account

  Once you have a valid account, log in to Red Hat’s OpenShift Container Platform page. From here, you can start a trial or buy OpenShift licenses. Since we’re setting up OpenShift just to explore it, we will go with the trial version.

- Start Your Trial

  After logging in, click the Try button. This takes you to the OCP self-managed trial page. Click the Start your Trial button to begin the process of accessing OpenShift. This initiates the 60-day evaluation period, giving you temporary access to the full range of OpenShift features.

- Next Steps

  After signing up for the trial, follow the Next Steps and select the first option, Install Red Hat OpenShift Container Platform. Click the Red Hat Hybrid Cloud Console link to create your first cluster.

- Cluster Management

  In the Hybrid Cloud Console, go to Cluster Management and select Create Cluster.

  - Choose an OpenShift Cluster Type to create.
  - Under Data Center, select Other data center options.
  - For the Infrastructure Provider, choose Bare Metal (x86_64).
  - Select the installation type that best fits your needs. For this guide, choose Full Control (for User-Provisioned Infrastructure).

- Install OpenShift on Bare Metal with User-Provisioned Infrastructure

  You’ll now be directed to the Install OpenShift on Bare Metal with User-Provisioned Infrastructure page. Here’s what you need to download:

  - Download OpenShift Installer

    Download and extract the OpenShift installer for your operating system. Place the file in the directory where you will store the installation configuration files.

    Note: The OpenShift install program is only available for Linux and macOS at this time.

  - Download Pull Secret

    Download or copy your pull secret. You’ll be prompted for this secret during installation to access Red Hat’s container images.

  - Command Line Interface (CLI)

    Download the OpenShift command-line tools and add them to your PATH. These tools will help you interact with the OpenShift cluster during the setup process.

  - Red Hat Enterprise Linux CoreOS (RHCOS)

    Download the Red Hat Enterprise Linux CoreOS (RHCOS) images. You’ll need these to create the machines for your OpenShift cluster. Since we are using PXE boot in this guide, download the kernel, initramfs, and rootfs images. These will be used to set up the OpenShift nodes. Alternatively, you can download the installer ISO if you’re not using PXE.

Required Machines

Minimum required hosts for a basic UPI installation:

- One bootstrap machine (temporary, can be removed after installation).

- Three control plane machines.

- At least two compute (worker) machines.

All control plane and bootstrap nodes must use RHCOS. Compute nodes can use RHCOS or RHEL 8.6+.

Minimum Hardware Requirements

- Bootstrap and Control Plane: 4 vCPUs, 16 GB RAM, 100 GB storage, 300 IOPS

- Compute: 2 vCPUs, 8 GB RAM, 100 GB storage, 300 IOPS

You can use the fio command to measure the IOPS your disks deliver.
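As a rough sketch of such a check, the helper below runs a short 4k random-write job with fio and a second helper compares a measured figure against the 300 IOPS minimum. It assumes fio is installed on a Linux host; the function names, the test directory and the job parameters are illustrative choices, not an official benchmark profile.

```shell
# Hypothetical helper: run a short 4k random-write job in a test directory.
# Assumes fio is installed; /var/tmp/fio-test is only an example path.
run_iops_test() {
  local target_dir="${1:-/var/tmp/fio-test}"
  mkdir -p "$target_dir"
  fio --name=iops-check --directory="$target_dir" \
      --rw=randwrite --bs=4k --size=256m \
      --ioengine=libaio --direct=1 --iodepth=16 \
      --runtime=30 --time_based --group_reporting
}

# Succeed if the measured IOPS value meets the minimum (300 by default).
meets_min_iops() {
  local measured="$1" minimum="${2:-300}"
  [ "$measured" -ge "$minimum" ]
}
```

Run `run_iops_test` on the storage that will back the VM disks, read the write IOPS from the fio summary, and pass that number to `meets_min_iops`.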

Operating System Notes

- RHCOS is based on RHEL 9.6 in OpenShift 4.19.

- RHEL 7 is no longer supported.

- Compute nodes running RHEL require manual lifecycle management.

Certificate Signing Requests (CSRs)

- You must manage CSRs manually after installation.

- CSRs for serving certificates require manual validation and approval.
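A minimal sketch of how pending CSRs can be listed and approved with the oc client is shown below. The function names are hypothetical, and approving everything in one go is a shortcut for lab clusters only; on a real cluster, inspect each request before approving it.

```shell
# List CSRs that have no status yet, i.e. are still pending.
pending_csrs() {
  oc get csr -o go-template='{{range .items}}{{if not .status}}{{.metadata.name}}{{"\n"}}{{end}}{{end}}'
}

# Approve every pending CSR. Review the output of pending_csrs first.
approve_pending_csrs() {
  pending_csrs | while read -r csr; do
    if [ -n "$csr" ]; then
      oc adm certificate approve "$csr" < /dev/null
    fi
  done
}
```

New nodes usually submit a client CSR first and a serving CSR shortly after, so you may need to run the approval more than once.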

Networking Requirements

- Networking must be configured in initramfs at boot for all RHCOS nodes. For this deployment, a PXE boot server will be used to serve the initramfs, kernel, and Ignition files to the cluster nodes during the initial boot process.

- Machines must fetch Ignition configs via HTTP(S) after acquiring an IP (via DHCP or static config).

- Each node must be able to resolve and reach all others by hostname.

- Hostnames can be set via DHCP or static configuration.

- Persistent IPs and DNS info should be provided via DHCP or kernel args.

It is recommended to use a DHCP server to configure networking for OpenShift cluster nodes. DHCP simplifies provisioning by automatically assigning persistent IP addresses, DNS server information, and hostnames.

- If using DHCP:

- Reserve static IPs for each node by binding MAC addresses to IPs.

- Ensure the DHCP server provides the DNS server IP to all nodes.

- Configure hostnames through DHCP for consistency.

- If not using DHCP:

- You must manually configure each node’s IP address, DNS server, and optionally its hostname at install time.

Required Network Ports

OpenShift nodes must be able to communicate across specific ports for the platform to function correctly.

| Source to Destination | Protocol | Port(s) | Purpose |
|---|---|---|---|
| All nodes to All nodes | ICMP | N/A | Network reachability tests (e.g. ping) |
| All nodes to All nodes | TCP | 1936 | Metrics access (Node Exporter, etc.) |
| All nodes to All nodes | TCP | 9000–9999 | Node-level services (e.g. CVO on 9099, Node Exporter on 9100–9101) |
| All nodes to All nodes | TCP | 10250–10259 | Kubernetes internal communication (kubelet, etc.) |
| All nodes to All nodes | UDP | 4789 | VXLAN overlay networking (OVN-Kubernetes or OpenShift SDN) |
| All nodes to All nodes | UDP | 6081 | Geneve overlay networking (for OVN-Kubernetes) |
| All nodes to All nodes | TCP/UDP | 30000–32767 | Kubernetes NodePort services (external access to workloads) |
| All nodes to All nodes | UDP | 123 | Network Time Protocol (NTP); required for clock sync across nodes |
| All nodes to All nodes | UDP | 500 | IPsec IKE (VPN or encrypted overlays) |
| All nodes to All nodes | UDP | 4500 | IPsec NAT Traversal |
| All nodes to All nodes | ESP | N/A | IPsec Encapsulating Security Payload (ESP) |
| All nodes to Control plane nodes | TCP | 6443 | Kubernetes API server access |
| Control plane to Control plane nodes | TCP | 2379–2380 | etcd peer communication (cluster state replication) |

Ensure all required network ports are open on your firewalls to allow necessary cluster communications.
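One way to spot-check that TCP ports are reachable through the firewalls is a small probe loop like the sketch below. It assumes a netcat binary (nc) that supports the -z and -w options; the function name and the host name in the usage line are illustrative. UDP ports and ESP cannot be verified this way.

```shell
# Probe each TCP port on a host and report whether it accepts connections.
# Returns non-zero if at least one port is closed or filtered.
check_ports() {
  local host="$1"
  shift
  local port rc=0
  for port in "$@"; do
    if nc -z -w 2 "$host" "$port" >/dev/null 2>&1; then
      echo "open   $host:$port"
    else
      echo "closed $host:$port"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, `check_ports api.ocp.kifarunix.com 6443 22623` can be run from a node once the load balancer and its backends are up.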

NTP Configuration

- Use public or internal NTP servers.

- Chrony can read NTP info from DHCP if configured.

DNS Requirements

In OpenShift Container Platform deployments, DNS plays a central role in ensuring that all components can reliably discover and communicate with each other. Properly configured forward and reverse DNS records are essential for the cluster’s API access, node identification, and certificate generation.

The following records must be resolvable by all nodes in the environment:

- Forward and reverse DNS resolution is required for:
  - api.<cluster>.<domain> and api-int.<cluster>.<domain> (Kubernetes API)
  - bootstrap.<cluster>.<domain> (bootstrap node)
  - Control plane and compute nodes (<node-name><n>.<cluster>.<domain>)

- Forward resolution only is required for:
  - *.apps.<cluster>.<domain> (application routes, a wildcard record)

When possible, use a DHCP server that provides hostnames and integrates with DNS, as this can simplify configuration and reduce the likelihood of errors.

Load Balancer Requirements

- API Load Balancer:

- Layer 4 (TCP) only, ensure no session persistence configured.

- Required ports: 6443 (Kubernetes API), 22623 (machine config server)

- Ingress Load Balancer:

- Layer 4 (TCP) preferred.

- Connection/session persistence recommended.

- Required ports: 443 (HTTPS), 80 (HTTP)

If you are using HAProxy as a load balancer, you can check that the haproxy process is listening on ports 6443, 22623, 443, and 80. We will be using HAProxy in this guide.
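The listening-port check can be sketched as a small filter over the output of ss. The function name is hypothetical, and the parsing assumes the column layout of `ss -Htln` (or `ss -Htlnp`), where the fourth field is the local address and port.

```shell
# Read `ss -Htln` output on stdin and print every required port that has
# no listening socket. Exit status is non-zero if any port is missing.
missing_listen_ports() {
  local listening port rc=0
  # Field 4 is "Local Address:Port"; keep the part after the last colon.
  listening=$(awk '{ n = split($4, a, ":"); print a[n] }')
  for port in "$@"; do
    if ! printf '%s\n' "$listening" | grep -qx "$port"; then
      echo "not listening: $port"
      rc=1
    fi
  done
  return "$rc"
}
```

On the load balancer host this could be used as `sudo ss -Htlnp | grep haproxy | missing_listen_ports 6443 22623 443 80`, which prints nothing when all four ports are bound.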

Prepare OCP User Provisioned Infrastructure

Before installing OpenShift Container Platform on a User-Provisioned Infrastructure (UPI), you must first set up the core infrastructure services required by the cluster. These services include networking, PXE provisioning, DNS, load balancing, and firewall configuration.

In this guide, we will virtualize the entire OpenShift environment using KVM. All OpenShift nodes, including bootstrap, control plane, and worker nodes, will run as virtual machines created and managed on a KVM hypervisor.

Here are the key infrastructure components we will provision:

- Create KVM virtual machines for the bootstrap, master, and worker nodes

- DNS server with OpenShift-specific records

- Generate SSH Key pairs for accessing RHCOS cluster nodes

- DHCP and PXE boot server to provision RHCOS nodes

- Firewall rules to open required cluster ports

- HAProxy load balancer for API and Ingress traffic

- Bastion/provisioning node running Ubuntu 24.04 OS

If you noticed, we are also setting up a bastion/provisioning node. This node will be used as a central management point for the OpenShift installation, hosting tools like the OpenShift CLI (oc), the OpenShift installer, and other utilities required to generate Ignition configs, manage the cluster, and serve configuration files via HTTP for PXE booting, since RHCOS nodes are immutable and cannot run these commands directly.

We have already created this VM on KVM.

Create KVM VMs for OpenShift (Without Starting)

Since we are using KVM in this guide, we have created seven VMs: one bootstrap node, three master nodes, and three worker nodes.

These are the nodes:

for node in ocp-bootstrap ocp-ms-01 ocp-ms-02 ocp-ms-03 ocp-wk-01 ocp-wk-02 ocp-wk-03; do
  sudo virsh list --all | grep "$node"
done

Sample output:

- ocp-bootstrap shut off

- ocp-ms-01 shut off

- ocp-ms-02 shut off

- ocp-ms-03 shut off

- ocp-wk-01 shut off

- ocp-wk-02 shut off

- ocp-wk-03 shut off

The other nodes (load balancer, DHCP server, and DNS server) are already running.

Setup/Update DNS Server with OCP Records

We have updated our DNS server with the required OCP records. We are using BIND9 DNS server.

If you are looking for a guide on how to configure BIND DNS server, check the link below;

How to setup BIND DNS server on Linux

Below are our sample forward records:

cat /etc/bind/forward.kifarunix.com

...

;client records

ms-01 IN A 10.185.10.210

ms-02 IN A 10.185.10.211

ms-03 IN A 10.185.10.212

wk-01 IN A 10.185.10.213

wk-02 IN A 10.185.10.214

wk-03 IN A 10.185.10.215

bootstrap IN A 10.185.10.200

pxe IN A 10.185.10.100

web IN A 10.185.10.100

dhcp IN A 10.185.10.100

lb IN A 10.185.10.120

*.apps IN A 10.185.10.120

api IN A 10.185.10.120

api-int IN A 10.185.10.120

Sample reverse records:

cat /etc/bind/10.185.10.kifarunix.com

...

; client PTR records

100 IN PTR web.ocp.kifarunix.com.

100 IN PTR dhcp.ocp.kifarunix.com.

100 IN PTR pxe.ocp.kifarunix.com.

120 IN PTR lb.ocp.kifarunix.com.

120 IN PTR api.ocp.kifarunix.com.

120 IN PTR api-int.ocp.kifarunix.com.

200 IN PTR bootstrap.ocp.kifarunix.com.

210 IN PTR ms-01.ocp.kifarunix.com.

211 IN PTR ms-02.ocp.kifarunix.com.

212 IN PTR ms-03.ocp.kifarunix.com.

213 IN PTR wk-01.ocp.kifarunix.com.

214 IN PTR wk-02.ocp.kifarunix.com.

215 IN PTR wk-03.ocp.kifarunix.com.

...

Since we have already set up our bastion/provisioning node, let’s run a few DNS checks from it.

Check the external API address:

dig +noall +answer @10.184.10.51 api.ocp.kifarunix.com

api.ocp.kifarunix.com. 86400 IN A 10.185.10.120

It resolves to the LB IP address, which is okay.

Check the internal API record name:

dig +noall +answer api-int.ocp.kifarunix.com

api-int.ocp.kifarunix.com. 86400 IN A 10.185.10.120

Verify the wildcard DNS using a random app name:

dig +noall +answer app1.apps.ocp.kifarunix.com

app1.apps.ocp.kifarunix.com. 86400 IN A 10.185.10.120

Check reverse lookup:

dig -x 10.185.10.210 +short

ms-01.ocp.kifarunix.com.

DNS looks fine.
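To repeat these checks for every node rather than a few samples, a small helper along the following lines can be used. It is only a sketch: the function name is hypothetical, it relies on dig being installed, and it merely confirms that some PTR record exists for the address (several names share 10.185.10.120, so it does not insist on a specific one).

```shell
# Check that NAME resolves to EXPECTED_IP and that the IP has a PTR record.
check_dns_pair() {
  local name="$1" expected_ip="$2" got_ip got_ptr
  got_ip=$(dig +short "$name" A | head -n 1)
  got_ptr=$(dig +short -x "$expected_ip" | head -n 1)
  if [ "$got_ip" != "$expected_ip" ]; then
    echo "FAIL $name: forward lookup returned '$got_ip', expected $expected_ip"
    return 1
  fi
  if [ -z "$got_ptr" ]; then
    echo "FAIL $name: no PTR record for $expected_ip"
    return 1
  fi
  echo "OK   $name -> $got_ip -> $got_ptr"
}
```

For example, `check_dns_pair ms-01.ocp.kifarunix.com 10.185.10.210` should print an OK line in this environment, and the same call can be looped over the bootstrap, master, and worker records.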

Generate SSH Key Pair for Accessing RHCOS Nodes

On the Bastion host, generate an SSH key pair. The public key is used during the OpenShift installation to configure the RHCOS nodes for password-less SSH access by adding it to the core user’s authorized_keys.

- Public Key: Included in the Ignition config to enable SSH access.

- Private Key: Used on your local machine to SSH into the nodes.

This key is also required for tasks like installation debugging and using the gather command.

Execute the command below to generate the key pairs:

ssh-keygen

Leave the passphrase empty for password-less authentication.

Sample output;

Generating public/private ed25519 key pair.

Enter file in which to save the key (/home/kifarunix/.ssh/id_ed25519):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/kifarunix/.ssh/id_ed25519

Your public key has been saved in /home/kifarunix/.ssh/id_ed25519.pub

The key fingerprint is:

SHA256:BB8bgct7UZmRXqo5s9n7njIjkaLYVvb0QhSgEveMZL4 kifarunix@ocp-bastion

The key's randomart image is:

+--[ED25519 256]--+

| . + oo+..= |

| * =.o.+= . |

| . +.o.++ o |

| . .o.o o |

| E oS= |

| = @ |

| o + * O |

| . + * * . |

| . o.B+ |

+----[SHA256]-----+

We will use the keys later in the guide.

Install OpenShift CLI Tools and Pull Secret

In this step, you’ll download the necessary OpenShift tools and files to the Bastion host and prepare them for installation.

Therefore, create a directory on the Bastion Host where you’ll store all your OpenShift installation configuration files:

mkdir ~/ocp-upi

Files to download:

- OpenShift Installer (installer for your OS)
- OpenShift CLI (oc)
- Pull Secret

So if you’ve already obtained these files in the previous steps, you can skip the download process and transfer them to the Bastion host.

tree .

.
├── openshift-client-linux.tar.gz
├── openshift-install-linux.tar.gz
└── pull-secret

1 directory, 3 files

Extract the installer and the CLI tools:

cd ~/ocp-upi
tar xf openshift-install-linux.tar.gz
tar xf openshift-client-linux.tar.gz

We now have the installer and the oc and kubectl commands.

Move kubectl and oc to a directory in your PATH:

sudo mv {kubectl,oc} /usr/local/bin/

Verify the CLI tools are working as expected:

oc version

Client Version: 4.19.5
Kustomize Version: v5.5.0

kubectl version

Client Version: v1.32.1
Kustomize Version: v5.5.0
The connection to the server localhost:8080 was refused - did you specify the right host or port?

The connection error is expected at this point because no cluster is running yet.

Create OpenShift Installation Configuration File

The OpenShift installation configuration file, typically named install-config.yaml, defines the configuration parameters for your OpenShift Container Platform cluster deployment. This includes infrastructure details, networking settings, node configurations, and platform-specific options. The OpenShift installer uses this file to provision and configure your cluster on various platforms such as AWS, Azure, GCP, VMware, or bare metal.

Typically, you generate this file using:

./openshift-install create install-config --dir=<installation_directory>

This starts an interactive prompt and writes an install-config.yaml file that you then customize for your deployment.

For KVM virtualization, you must set platform: none in your configuration. However, the current installer command doesn’t provide this option in the interactive prompts. Therefore, you must manually create your install-config.yaml file for KVM deployments.

The file contains sensitive information (credentials, pull secrets) and is consumed by the installer during cluster creation. Always back up your configuration before proceeding with installation.

Create a directory to store your configuration:

mkdir -p ~/ocp-upi/ocp-upi-installation

Here is our sample install-config.yaml file, saved in that directory:

cat ~/ocp-upi/ocp-upi-installation/install-config.yaml

apiVersion: v1
baseDomain: <set your base domain>
compute:
- hyperthreading: Enabled
  name: worker
  replicas: 0
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
metadata:
  name: <cluster>
networking:
  clusterNetwork:
  - cidr: 10.128.0.0/14
    hostPrefix: 23
  networkType: OVNKubernetes
  serviceNetwork:
  - 172.30.0.0/16
platform:
  none: {}
fips: false
pullSecret: '<PASTE YOUR PULL SECRET>'
sshKey: '<PASTE YOUR SSH PUB KEY>'

- Worker replicas = 0: For User Provisioned Infrastructure (UPI) with platform: none, you manually create and manage worker VMs. The cluster does not create or manage worker machines when UPI is used. The installer just generates Ignition configs for you to use.

- Control plane replicas = 3: A three-node control plane is required for high availability and etcd quorum. As with the workers, the installer does not create these machines in UPI mode; the value tells it how many control plane nodes the cluster expects, and you provision them yourself.

You can use the sample template above, replacing the placeholder values accordingly:

- <set your base domain>: Your organization’s domain (e.g., kifarunix.com)
- <cluster>: Your cluster name (e.g., ocp, from ocp.kifarunix.com)
- <PASTE YOUR PULL SECRET>: Your Red Hat pull secret from the OpenShift Cluster Manager
- <PASTE YOUR SSH PUB KEY>: Your SSH public key for node access.
- The network blocks must not overlap with existing physical networks.
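If you prefer not to paste the secrets by hand, the placeholders can be filled in from files. The sketch below prints the same install-config.yaml to stdout; the function name and its argument order are hypothetical, and it assumes the pull secret and the public key are each stored in a file of their own.

```shell
# Print an install-config.yaml for platform "none" to stdout.
# Arguments: base domain, cluster name, pull-secret file, SSH public key file.
render_install_config() {
  local base_domain="$1" cluster="$2" pull_secret_file="$3" ssh_key_file="$4"
  cat <<EOF
apiVersion: v1
baseDomain: ${base_domain}
compute:
- hyperthreading: Enabled
  name: worker
  replicas: 0
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
metadata:
  name: ${cluster}
networking:
  clusterNetwork:
  - cidr: 10.128.0.0/14
    hostPrefix: 23
  networkType: OVNKubernetes
  serviceNetwork:
  - 172.30.0.0/16
platform:
  none: {}
fips: false
pullSecret: '$(tr -d '\n' < "$pull_secret_file")'
sshKey: '$(tr -d '\n' < "$ssh_key_file")'
EOF
}
```

A possible invocation is `render_install_config kifarunix.com ocp ~/ocp-upi/pull-secret ~/.ssh/id_ed25519.pub > ~/ocp-upi/ocp-upi-installation/install-config.yaml`.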

The installer consumes install-config.yaml when it generates the manifests, so back it up by storing a copy outside the installation directory:

cp ~/ocp-upi/ocp-upi-installation/install-config.yaml ~/ocp-upi

Create the Kubernetes Manifest and Ignition Config Files

Once you have your install-config.yaml file ready, the next step is to generate the configuration files needed to bootstrap your OpenShift cluster nodes. This involves creating two types of files in sequence:

- Kubernetes Manifests: These are YAML files that define the desired state of OpenShift resources (e.g., pods, services, deployments), which the control plane uses to manage cluster components.

- Ignition Config Files: These are machine-specific configurations (e.g., bootstrap.ign, master.ign, worker.ign) that are generated to set up RHCOS nodes on their first boot. They include certificates, keys, and other configuration data such as networking, storage, and SSH access.

To generate Kubernetes Manifests and Ignition Files, navigate to the OpenShift directory containing the installer utility.

cd ~/ocp-upi

- Generate the Kubernetes Manifests by running the command below, replacing <installation_directory> with the directory containing the install-config.yaml file:

./openshift-install create manifests --dir <installation_directory>

For example:

./openshift-install create manifests --dir ocp-upi-installation/

This will generate a number of configuration manifests:

tree ocp-upi-installation/

Sample output:

ocp-upi-installation/
├── 000_capi-namespace.yaml
├── manifests
│   ├── cluster-config.yaml
│   ├── cluster-dns-02-config.yml
│   ├── cluster-infrastructure-02-config.yml
│   ├── cluster-ingress-02-config.yml
│   ├── cluster-network-02-config.yml
│   ├── cluster-proxy-01-config.yaml
│   ├── cluster-scheduler-02-config.yml
│   ├── cvo-overrides.yaml
│   ├── kube-cloud-config.yaml
│   ├── kube-system-configmap-root-ca.yaml
│   ├── machine-config-server-ca-configmap.yaml
│   ├── machine-config-server-ca-secret.yaml
│   ├── machine-config-server-tls-secret.yaml
│   └── openshift-config-secret-pull-secret.yaml
└── openshift
    ├── 99_feature-gate.yaml
    ├── 99_kubeadmin-password-secret.yaml
    ├── 99_openshift-cluster-api_master-user-data-secret.yaml
    ├── 99_openshift-cluster-api_worker-user-data-secret.yaml
    ├── 99_openshift-machineconfig_99-master-ssh.yaml
    ├── 99_openshift-machineconfig_99-worker-ssh.yaml
    └── openshift-install-manifests.yaml

Now, remember we are running a multi-node cluster. As such, we need to ensure that workloads are not scheduled on the master/control plane nodes. Therefore, edit the <installation_directory>/manifests/cluster-scheduler-02-config.yml file, locate the mastersSchedulable parameter, and set its value to false:

sed -i '/mastersSchedulable/s/true/false/' ocp-upi-installation/manifests/cluster-scheduler-02-config.yml

You can confirm it is set to false by using the command below:

grep mastersSchedulable ocp-upi-installation/manifests/cluster-scheduler-02-config.yml

- Generate the Ignition Files:

./openshift-install create ignition-configs --dir ocp-upi-installation/

Confirm the files:

tree ocp-upi-installation/

Sample output:

ocp-upi-installation/
├── 000_capi-namespace.yaml
├── auth
│   ├── kubeadmin-password
│   └── kubeconfig
├── bootstrap.ign
├── master.ign
├── metadata.json
└── worker.ign

2 directories, 7 files

As you can see, the OpenShift installer generates several critical files during the ignition config creation process:

- Ignition Config Files are created in the installation directory for each node type:
  - bootstrap.ign – Bootstraps the initial cluster setup
  - master.ign – Configures control plane nodes
  - worker.ign – Configures compute/worker nodes

- Authentication Files are automatically created in the ./auth subdirectory:
  - kubeadmin-password – Contains the temporary admin password
  - kubeconfig – Client configuration for cluster access

These files provide everything needed to boot your VMs and access your cluster once installation is complete. You will need to copy the three Ignition files to the web root directory of your web server.

In this setup, our PXE boot server doubles as the web server that serves the files needed for the OCP deployment.

Hence:

rsync -avP ocp-upi-installation/*.ign root@webserver:/var/www/html/autoinstall/

It is recommended to use the Ignition config files within 12 hours of their generation. This helps avoid potential installation failures caused by certificate rotation occurring during the installation.

If the cluster does not complete bootstrap within 24 hours, you must regenerate the certificates and Ignition config files, then restart the installation process.
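A quick way to see whether the Ignition files are still inside that window is to compare their modification time with the current time. The helper below is a sketch with a hypothetical name; it uses GNU stat (`-c %Y`), so on BSD or macOS the equivalent `stat -f %m` would be needed.

```shell
# Succeed if FILE was modified less than MAX_HOURS ago (default: 12).
# Returns 2 if the file cannot be read.
ignition_is_fresh() {
  local file="$1" max_hours="${2:-12}" now mtime age_hours
  now=$(date +%s)
  mtime=$(stat -c %Y "$file") || return 2
  age_hours=$(( (now - mtime) / 3600 ))
  [ "$age_hours" -lt "$max_hours" ]
}
```

For example, `ignition_is_fresh ocp-upi-installation/bootstrap.ign || echo "regenerate the Ignition files"` before powering on the nodes.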

Prepare DHCP and PXE Boot Server Configuration

We have already set up the DHCP server and PXE boot server to provision RHCOS nodes.

We used the guide below to setup PXE boot server.

How to Set Up PXE Boot Server on Ubuntu 24.04: Step-by-Step Guide

Also, we have already downloaded the necessary files required for provisioning RHCOS nodes:

- Kernel (rhcos-live-kernel.x86_64)
- Initramfs (rhcos-live-initramfs.x86_64.img)
- Root filesystem (rhcos-live-rootfs.x86_64.img)

We will serve the kernel and initramfs via TFTP and the rootfs image via HTTP.

In typical PXE boot environments, the DHCP server is configured with a single boot filename, and clients are presented with a general PXE boot menu where the user selects which node role (e.g., bootstrap, master, worker) to install.

Sample DHCP Server configuration with static IP address mapping based on the node MAC addresses:

cat /etc/dhcp/dhcpd.conf

option domain-name "kifarunix.com";

option domain-name-servers 192.168.122.110;

default-lease-time 3600;

max-lease-time 86400;

authoritative;

option pxe-arch code 93 = unsigned integer 16;

# No DHCP here

subnet 10.184.10.0 netmask 255.255.255.0 {

}

# PXE Subnet

subnet 10.185.10.0 netmask 255.255.255.0 {

range 10.185.10.100 10.185.10.199;

option routers 10.185.10.10;

option domain-name-servers 10.184.10.51;

option domain-name "ocp.kifarunix.com";

option subnet-mask 255.255.255.0;

option broadcast-address 10.185.10.255;

# PXE Boot server (TFTP)

next-server 10.185.10.52;

# Match PXE boot client type

if option pxe-arch = 00:07 or option pxe-arch = 00:09 {

# UEFI x64

filename "grub/grubnetx64.efi";

} else {

# BIOS clients

filename "pxelinux.0";

}

}

host bootstrap {

hardware ethernet 52:54:00:b9:89:e7;

fixed-address 10.185.10.200;

option host-name "bootstrap.ocp.kifarunix.com";

}

host ms-01 {

hardware ethernet 52:54:00:75:5e:54;

fixed-address 10.185.10.210;

option host-name "ms-01.ocp.kifarunix.com";

}

host ms-02 {

hardware ethernet 52:54:00:26:fe:66;

fixed-address 10.185.10.211;

option host-name "ms-02.ocp.kifarunix.com";

}

host ms-03 {

hardware ethernet 52:54:00:5a:3d:87;

fixed-address 10.185.10.212;

option host-name "ms-03.ocp.kifarunix.com";

}

host wk-01 {

hardware ethernet 52:54:00:40:7e:95;

fixed-address 10.185.10.213;

option host-name "wk-01.ocp.kifarunix.com";

}

host wk-02 {

hardware ethernet 52:54:00:fe:ed:86;

fixed-address 10.185.10.214;

option host-name "wk-02.ocp.kifarunix.com";

}

host wk-03 {

hardware ethernet 52:54:00:22:7d:aa;

fixed-address 10.185.10.215;

option host-name "wk-03.ocp.kifarunix.com";

}
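The host entries above all follow the same pattern, so they can be generated rather than typed. The following is a minimal sketch; the function name is hypothetical and the output is meant to be reviewed before it is appended to dhcpd.conf.

```shell
# Print an ISC dhcpd host entry in the same shape as the ones above.
# Arguments: short host name, MAC address, fixed IP, DNS domain.
dhcp_host_entry() {
  local name="$1" mac="$2" ip="$3" domain="$4"
  printf 'host %s {\n' "$name"
  printf '    hardware ethernet %s;\n' "$mac"
  printf '    fixed-address %s;\n' "$ip"
  printf '    option host-name "%s.%s";\n' "$name" "$domain"
  printf '}\n'
}
```

For example, `dhcp_host_entry wk-01 52:54:00:40:7e:95 10.185.10.213 ocp.kifarunix.com` prints the wk-01 entry shown above.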

Check the configuration for syntax errors:

sudo dhcpd -t -cf /etc/dhcp/dhcpd.conf

In such a setup, all nodes receive the same PXE configuration and boot into a common PXE menu. Here’s a sample PXE boot menu used in that default scenario:

cat /var/lib/tftp/pxelinux.cfg/default

DEFAULT menu.c32
PROMPT 0
TIMEOUT 100
MENU TITLE RHCOS Installation Menu
LABEL bootstrap
MENU LABEL ^1) Install RHCOS on Bootstrap Node

KERNEL ocp/rhcos/rhcos-live-kernel.x86_64

APPEND initrd=ocp/rhcos/rhcos-live-initramfs.x86_64.img coreos.inst.install_dev=/dev/vda cloud-config-url=/dev/null coreos.inst.ignition_url=http://10.185.10.52/autoinstall/ocp/rhcos/bootstrap.ign coreos.live.rootfs_url=http://10.185.10.52/autoinstall/ocp/rhcos/rhcos-live-rootfs.x86_64.img

LABEL master

MENU LABEL ^2) Install RHCOS on Master Node

KERNEL ocp/rhcos/rhcos-live-kernel.x86_64

APPEND initrd=ocp/rhcos/rhcos-live-initramfs.x86_64.img coreos.inst.install_dev=/dev/vda cloud-config-url=/dev/null coreos.inst.ignition_url=http://10.185.10.52/autoinstall/ocp/rhcos/master.ign coreos.live.rootfs_url=http://10.185.10.52/autoinstall/ocp/rhcos/rhcos-live-rootfs.x86_64.img

LABEL worker

MENU LABEL ^3) Install RHCOS on Compute/Worker Node

KERNEL ocp/rhcos/rhcos-live-kernel.x86_64

APPEND initrd=ocp/rhcos/rhcos-live-initramfs.x86_64.img coreos.inst.install_dev=/dev/vda cloud-config-url=/dev/null coreos.inst.ignition_url=http://10.185.10.52/autoinstall/ocp/rhcos/worker.ign coreos.live.rootfs_url=http://10.185.10.52/autoinstall/ocp/rhcos/rhcos-live-rootfs.x86_64.img

In this case, you need to manually select the appropriate entry from the boot menu at the VM/server console to boot into the respective OS.

In this guide, we automate OS selection during PXE boot, removing the need to manually choose an option from the PXE boot menu. Instead of using a shared boot menu for all systems, we create individual PXE configuration files for each node role: bootstrap, master, and worker. Each file is linked to a specific node’s MAC address using PXE’s built-in MAC address resolution.

The DHCP configuration remains unchanged. All hosts continue to use the standard “pxelinux.0” filename.

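When pxelinux.0 loads, it searches pxelinux.cfg/ for a configuration file in this order and uses the first match:

- A file named after the client UUID, if the firmware provides one
- A file named after the node’s MAC address (in the format 01-xx-xx-xx-xx-xx-xx, where 01 is the hardware type for Ethernet)
- A file named after the node’s IPv4 address in uppercase hexadecimal, tried with progressively fewer trailing digits
- If none of these is found, the fallback configuration file named default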

By naming the PXE boot configuration files after each node’s MAC address (for example, 01-52-54-00-b9-89-e7 for the bootstrap node), each node automatically loads its specific installer configuration.

This approach is simpler and completely avoids manual selection from the boot menu.

Therefore, create a PXE boot configuration file for each node, named after its MAC address (in the format 01-xx-xx-xx-xx-xx-xx), based on its role (bootstrap, master, or worker). Remove all node-specific boot entries from pxelinux.cfg/default, since each node will now load its own config automatically.

Let’s back up the default config (we only used this to boot OCP nodes, just FYI);

mv /var/lib/tftp/pxelinux.cfg/default{,.bak}

This is how we have created the individual PXE config files for our nodes:

ls -1 /var/lib/tftp/pxelinux.cfg/

01-52-54-00-22-7d-aa (worker - wk-03)

01-52-54-00-26-fe-66 (master - ms-02)

01-52-54-00-40-7e-95 (worker - wk-01)

01-52-54-00-5a-3d-87 (master - ms-03)

01-52-54-00-75-5e-54 (master - ms-01)

01-52-54-00-b9-89-e7 (bootstrap)

01-52-54-00-fe-ed-86 (worker - wk-02)

default.bak

Below are examples of the contents for each type of node configuration file:

Example: Bootstrap node (01-52-54-00-b9-89-e7):

cat /var/lib/tftp/pxelinux.cfg/01-52-54-00-b9-89-e7

DEFAULT bootstrap

PROMPT 0

TIMEOUT 100

MENU TITLE Bootstrap Node Installation

UI menu.c32

LABEL bootstrap

MENU LABEL Install RHCOS on Bootstrap Node

KERNEL ocp/rhcos/rhcos-live-kernel.x86_64

APPEND initrd=ocp/rhcos/rhcos-live-initramfs.x86_64.img coreos.inst.install_dev=/dev/vda cloud-config-url=/dev/null coreos.inst.ignition_url=http://10.185.10.52/autoinstall/ocp/rhcos/bootstrap.ign coreos.live.rootfs_url=http://10.185.10.52/autoinstall/ocp/rhcos/rhcos-live-rootfs.x86_64.img

Example: Master node (01-52-54-00-5a-3d-87), same for the three master nodes:

cat /var/lib/tftp/pxelinux.cfg/01-52-54-00-5a-3d-87

DEFAULT master

PROMPT 0

TIMEOUT 100

MENU TITLE Master Node Installation

UI menu.c32

LABEL master

MENU LABEL Install RHCOS on Master Node

KERNEL ocp/rhcos/rhcos-live-kernel.x86_64

APPEND initrd=ocp/rhcos/rhcos-live-initramfs.x86_64.img coreos.inst.install_dev=/dev/vda cloud-config-url=/dev/null coreos.inst.ignition_url=http://10.185.10.52/autoinstall/ocp/rhcos/master.ign coreos.live.rootfs_url=http://10.185.10.52/autoinstall/ocp/rhcos/rhcos-live-rootfs.x86_64.img

Example: Worker node (01-52-54-00-22-7d-aa), same for all worker nodes:

cat /var/lib/tftp/pxelinux.cfg/01-52-54-00-22-7d-aa

DEFAULT worker

PROMPT 0

TIMEOUT 100

MENU TITLE Worker Node Installation

UI menu.c32

LABEL worker

MENU LABEL Install RHCOS on Worker Node

KERNEL ocp/rhcos/rhcos-live-kernel.x86_64

APPEND initrd=ocp/rhcos/rhcos-live-initramfs.x86_64.img coreos.inst.install_dev=/dev/vda cloud-config-url=/dev/null coreos.inst.ignition_url=http://10.185.10.52/autoinstall/ocp/rhcos/worker.ign coreos.live.rootfs_url=http://10.185.10.52/autoinstall/ocp/rhcos/rhcos-live-rootfs.x86_64.img
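Since the three files differ only in the role name, they can be generated instead of written by hand. The following is a sketch under stated assumptions: render_pxe_config, BASE_URL and INSTALL_DEV are hypothetical names introduced here, with defaults taken from the examples above.

```shell
#!/usr/bin/env bash
# Defaults mirror the values used in this guide; override them via the environment.
BASE_URL="${BASE_URL:-http://10.185.10.52/autoinstall/ocp/rhcos}"
INSTALL_DEV="${INSTALL_DEV:-/dev/vda}"

# Hypothetical helper: print a per-node PXE config for bootstrap, master or worker.
render_pxe_config() {
    local role="$1" title
    case "$role" in
        bootstrap) title="Bootstrap" ;;
        master)    title="Master" ;;
        worker)    title="Worker" ;;
        *) echo "unknown role: $role" >&2; return 1 ;;
    esac
    cat <<EOF
DEFAULT $role
PROMPT 0
TIMEOUT 100
MENU TITLE $title Node Installation
UI menu.c32
LABEL $role
  MENU LABEL Install RHCOS on $title Node
  KERNEL ocp/rhcos/rhcos-live-kernel.x86_64
  APPEND initrd=ocp/rhcos/rhcos-live-initramfs.x86_64.img coreos.inst.install_dev=$INSTALL_DEV cloud-config-url=/dev/null coreos.inst.ignition_url=$BASE_URL/$role.ign coreos.live.rootfs_url=$BASE_URL/rhcos-live-rootfs.x86_64.img
EOF
}

# Example:
#   render_pxe_config worker > /var/lib/tftp/pxelinux.cfg/01-52-54-00-22-7d-aa
```

Generating the files this way keeps the kernel arguments identical across nodes, so a change such as a different install disk only has to be made in one place.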

As such, ensure that the kernel and initramfs files are copied to the respective TFTP directory, and that the rootfs image is available and accessible via the web server.

tree /var/lib/tftp/ocp/rhcos/

/var/lib/tftp/ocp/rhcos/

├── rhcos-live-initramfs.x86_64.img

└── rhcos-live-kernel.x86_64

1 directory, 2 files

Similarly, copy ignition files from the bastion server or where you have them to the web root directory of the PXE server (if you are using PXE server to host your installation boot files). We have already copied them in this setup.
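A small sketch of the copy step, assuming the ignition files have already been transferred to the PXE server (for example with scp). The helper name publish_ignition and the web root path in the example are hypothetical. The files are installed with mode 0644 because the installer may write them with restrictive permissions that the web server user cannot read.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy every *.ign file from SRC into DEST and make
# the copies world-readable so the web server can serve them.
publish_ignition() {
    local src="$1" dest="$2" f
    mkdir -p "$dest" || return 1
    for f in "$src"/*.ign; do
        # If the glob matched nothing, $f is the literal pattern and does not exist.
        [ -e "$f" ] || { echo "no .ign files found in $src" >&2; return 1; }
        install -m 0644 "$f" "$dest/" || return 1
    done
}

# Example (the destination path is an assumption about the web root layout):
#   sudo bash -c "$(declare -f publish_ignition); publish_ignition /tmp/ign /var/www/html/autoinstall/ocp/rhcos"
```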

After the configuration, restart the services;

sudo systemctl restart isc-dhcp-server tftpd-hpa.service apache2

Confirming access to the rootfs image and ignition files on the web server:

curl -s http://10.185.10.52/autoinstall/ocp/rhcos/ | grep -Po '(?<=href=")[^"]*' | grep -vE '^\?C=|^/' | sort

bootstrap.ign

master.ign

rhcos-live-rootfs.x86_64.img

worker.ign

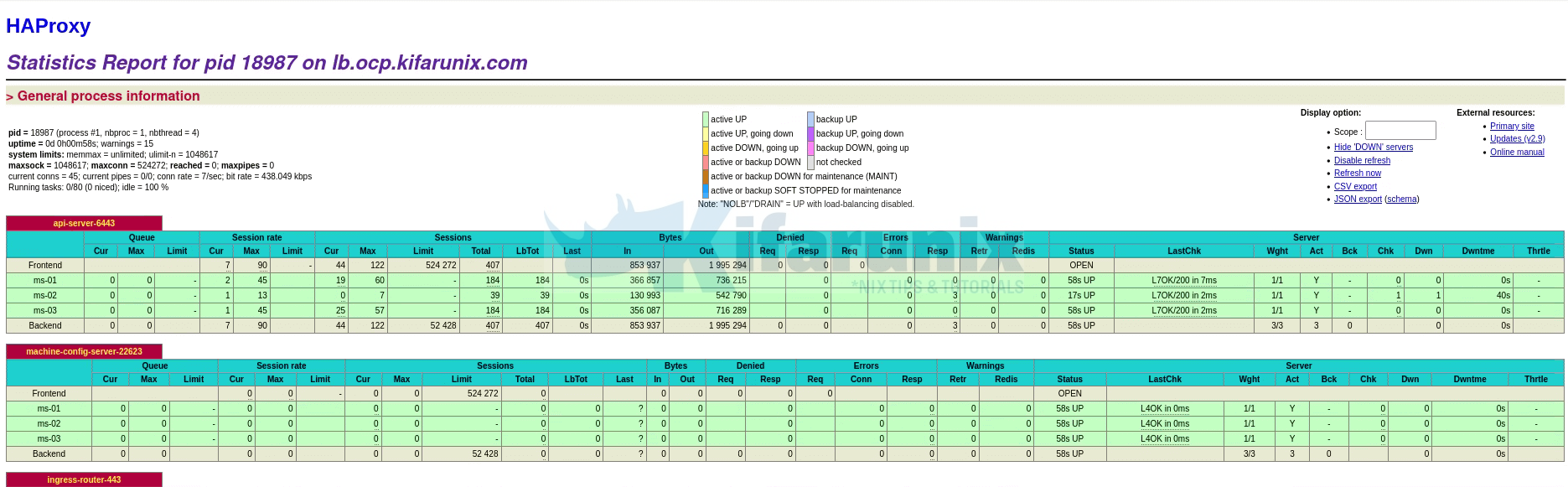

Configure Load Balancer for OCP

We are using HAProxy in our setup. You can refer to our previous guides on how to set up HAProxy.

We are running HAProxy on an Ubuntu 24.04 LTS server, and here is our OCP configuration.

cat /etc/haproxy/haproxy.cfg

global

log /var/log/haproxy.log local0 info

log-send-hostname

chroot /var/lib/haproxy

stats socket /run/haproxy/admin.sock mode 660 level admin

stats timeout 30s

user haproxy

group haproxy

daemon

maxconn 524272

defaults

log global

mode http

option httplog

option dontlognull

timeout connect 5000

timeout client 50000

timeout server 50000

errorfile 400 /etc/haproxy/errors/400.http

errorfile 403 /etc/haproxy/errors/403.http

errorfile 408 /etc/haproxy/errors/408.http

errorfile 500 /etc/haproxy/errors/500.http

errorfile 502 /etc/haproxy/errors/502.http

errorfile 503 /etc/haproxy/errors/503.http

errorfile 504 /etc/haproxy/errors/504.http

listen api-server-6443

bind *:6443

mode tcp

option tcplog

option httpchk GET /readyz HTTP/1.0

option log-health-checks

balance roundrobin

server bootstrap bootstrap.ocp.kifarunix.com:6443 verify none check check-ssl inter 10s fall 2 rise 3 backup

server ms-01 ms-01.ocp.kifarunix.com:6443 weight 1 verify none check check-ssl inter 10s fall 2 rise 3

server ms-02 ms-02.ocp.kifarunix.com:6443 weight 1 verify none check check-ssl inter 10s fall 2 rise 3

server ms-03 ms-03.ocp.kifarunix.com:6443 weight 1 verify none check check-ssl inter 10s fall 2 rise 3

listen machine-config-server-22623

bind *:22623

mode tcp

option tcplog

server bootstrap bootstrap.ocp.kifarunix.com:22623 check inter 1s backup

server ms-01 ms-01.ocp.kifarunix.com:22623 check inter 1s

server ms-02 ms-02.ocp.kifarunix.com:22623 check inter 1s

server ms-03 ms-03.ocp.kifarunix.com:22623 check inter 1s

listen ingress-router-443

bind *:443

mode tcp

option tcplog

balance source

server wk-01 wk-01.ocp.kifarunix.com:443 check inter 1s

server wk-02 wk-02.ocp.kifarunix.com:443 check inter 1s

server wk-03 wk-03.ocp.kifarunix.com:443 check inter 1s

listen ingress-router-80

bind *:80

mode tcp

option tcplog

balance source

server wk-01 wk-01.ocp.kifarunix.com:80 check inter 1s

server wk-02 wk-02.ocp.kifarunix.com:80 check inter 1s

server wk-03 wk-03.ocp.kifarunix.com:80 check inter 1s

listen stats

bind *:8443

stats enable # enable statistics reports

stats hide-version # Hide the version of HAProxy

stats refresh 30s # HAProxy refresh time

stats show-node # Shows the hostname of the node

stats auth haadmin:P@ssword # Enforce Basic authentication for Stats page

stats uri /stats # Statistics URL
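Every server line in the configuration above refers to a node by host name, and by default HAProxy resolves those names when it starts, so a missing DNS record can keep the service from starting. The sketch below, with the hypothetical helper name list_backend_hosts, extracts the backend host names so they can be checked before a restart.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the unique host names used on "server" lines
# of an HAProxy config. Commented-out lines (e.g. "#server ...") are skipped
# because their first field is not exactly "server".
list_backend_hosts() {
    awk '$1 == "server" { split($3, a, ":"); print a[1] }' "$1" | sort -u
}

# Example: report names that do not resolve, then validate the config syntax.
#   list_backend_hosts /etc/haproxy/haproxy.cfg | while read -r h; do
#       getent hosts "$h" >/dev/null || echo "unresolved: $h"
#   done
#   haproxy -c -f /etc/haproxy/haproxy.cfg
```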

Bootstrapping OpenShift Nodes

Once the infrastructure is prepared and the ignition configuration files are generated, the next step is to bootstrap the cluster. This phase initiates the installation process and brings the control plane (master nodes) online.

The bootstrap process begins with starting the bootstrap node, which plays a temporary but critical role in setting up the cluster. It runs essential services such as a temporary etcd member and the initial OpenShift control plane components.

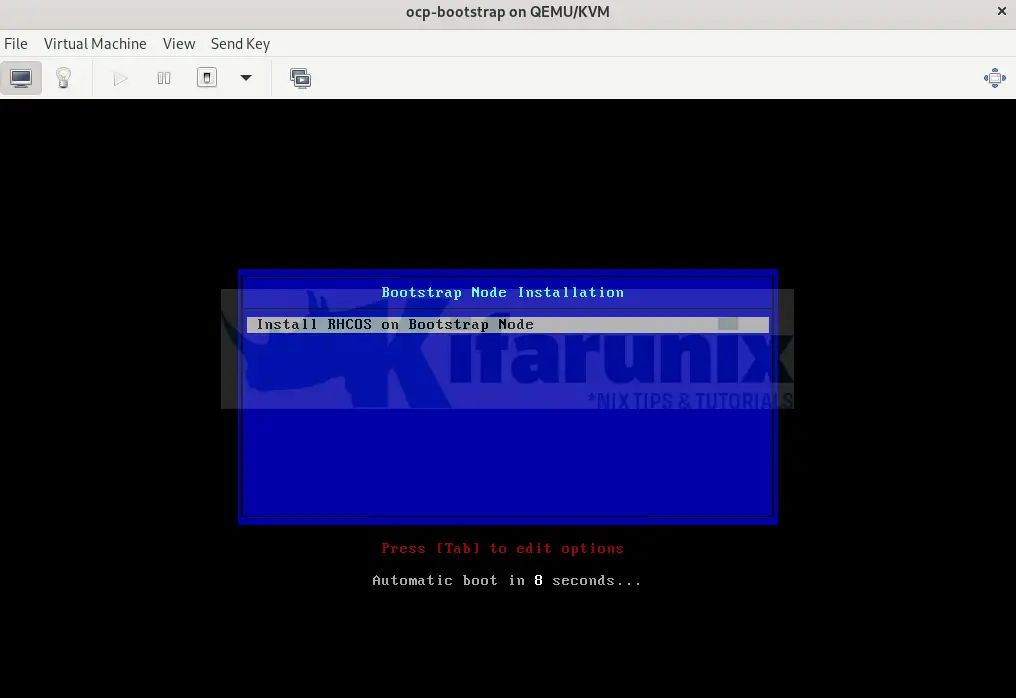

Start the Bootstrap Node

Since our infrastructure is already set up, navigate to KVM (if you are following along with the same infrastructure setup) and start the bootstrap node.

This is the console of the bootstrap node.

In a few minutes, the bootstrap node should be up and running.

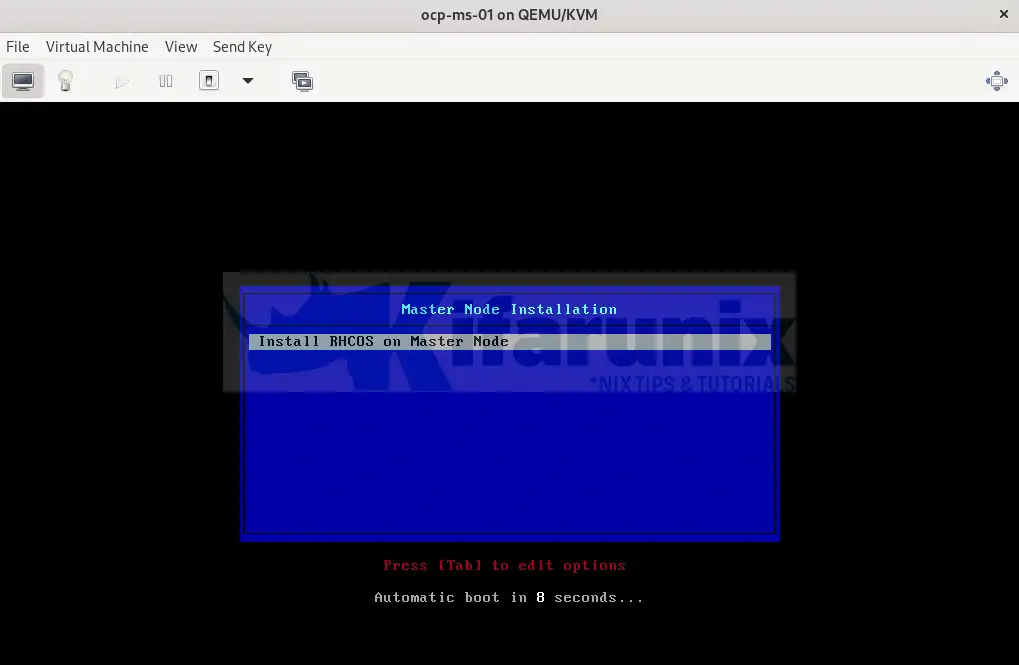

Start the Master Nodes

Once the bootstrap node is active and functional, the master nodes can be started. The master nodes use the information provided by the bootstrap node and their own ignition files to configure themselves and join the cluster.

Start all the master nodes. This is a sample console PXE boot menu.

After a few minutes, the nodes should be up.

As you wait for the control plane/master nodes to be fully initialized, you can monitor the whole process from the bastion host.

Navigate to where you placed the installer and run the command below:

./openshift-install --dir ocp-upi-installation/ wait-for bootstrap-complete --log-level=info

Sample output:

INFO Waiting up to 20m0s (until 8:13AM UTC) for the Kubernetes API at https://api.ocp.kifarunix.com:6443...

INFO API v1.32.6 up

INFO Waiting up to 45m0s (until 8:41AM UTC) for bootstrapping to complete...

You can also log in to the bootstrap node and run the journalctl command to view logs in real time.

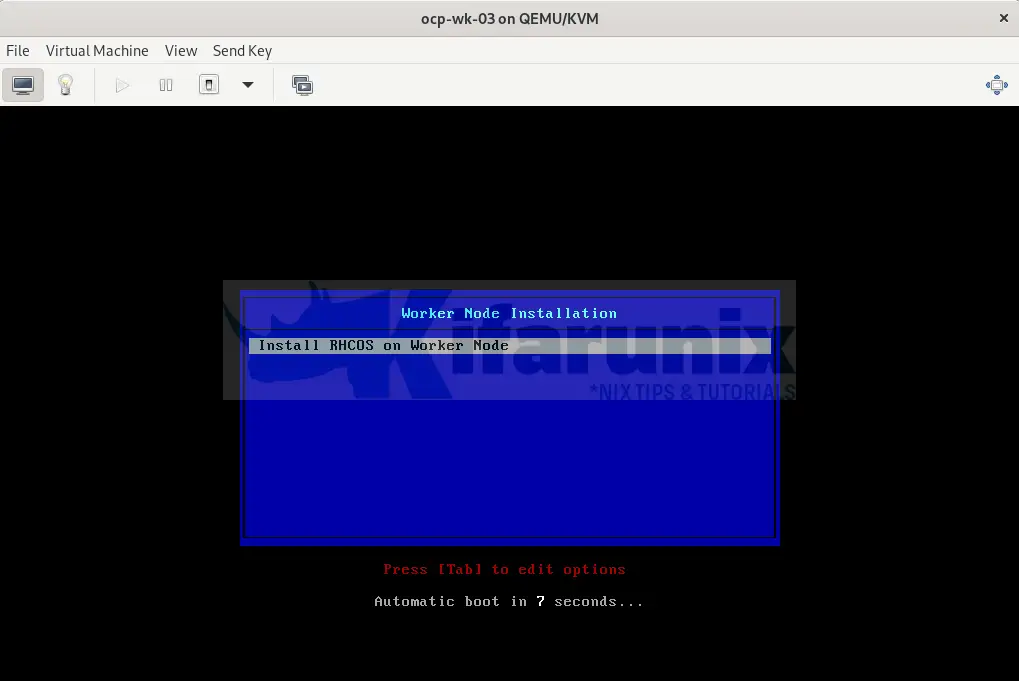

sudo journalctl -f

Start the Worker Nodes

In the meantime, start all the worker nodes.

Similarly, you can also monitor installation logs from the installation directory;

tail -f ~/<installation_directory>/.openshift_install.log

For example:

tail -f ocp-upi-installation/.openshift_install.log

...

time="2025-08-06T08:10:40Z" level=info msg="Waiting for the bootstrap etcd member to be removed..."

time="2025-08-06T08:11:41Z" level=debug msg="Error getting etcd operator singleton, retrying: the server was unable to return a response in the time allotted, but may still be processing the request (get etcds.operator.openshift.io cluster)"

time="2025-08-06T08:12:41Z" level=debug msg="Error getting etcd operator singleton, retrying: the server was unable to return a response in the time allotted, but may still be processing the request (get etcds.operator.openshift.io cluster)"

time="2025-08-06T08:12:46Z" level=info msg="Bootstrap etcd member has been removed"

time="2025-08-06T08:12:46Z" level=info msg="It is now safe to remove the bootstrap resources"

time="2025-08-06T08:12:46Z" level=debug msg="Time elapsed per stage:"

time="2025-08-06T08:12:46Z" level=debug msg="Bootstrap Complete: 19m14s"

time="2025-08-06T08:12:46Z" level=debug msg=" API: 3m23s"

time="2025-08-06T08:12:46Z" level=info msg="Time elapsed: 19m14s"

Remove Bootstrap Node

Once the control plane nodes have joined the cluster and the control plane becomes self-hosted, the bootstrap node is no longer needed and can be safely shut down and removed. When that happens, the monitoring command prints output like the following:

INFO Waiting up to 20m0s (until 8:13AM UTC) for the Kubernetes API at https://api.ocp.kifarunix.com:6443...

INFO API v1.32.6 up

INFO Waiting up to 45m0s (until 8:41AM UTC) for bootstrapping to complete...

INFO Waiting for the bootstrap etcd member to be removed...

INFO Bootstrap etcd member has been removed

INFO It is now safe to remove the bootstrap resources

INFO Time elapsed: 19m14s
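If you script the teardown, the same message can be checked in the installer log before touching the bootstrap node. This is only a sketch: bootstrap_removable is a hypothetical helper name, and it assumes the log wording shown in the sample output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit status 0) only if the installer log
# already contains the "safe to remove" message.
bootstrap_removable() {
    grep -q 'It is now safe to remove the bootstrap resources' "$1"
}

# Example:
#   if bootstrap_removable ocp-upi-installation/.openshift_install.log; then
#       echo "bootstrap node can be shut down"
#   fi
```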

After the bootstrap process is complete, remove the bootstrap machine from the load balancer.

To do so, log in to the load balancer and comment out the bootstrap entries:

sudo sed -i '/bootstrap/s/^/#/' /etc/haproxy/haproxy.cfg

Restart HAProxy:

sudo systemctl restart haproxy

Check the status:

systemctl status haproxy

● haproxy.service - HAProxy Load Balancer

Loaded: loaded (/usr/lib/systemd/system/haproxy.service; enabled; preset: enabled)

Active: active (running) since Wed 2025-08-06 08:26:59 UTC; 5s ago

Docs: man:haproxy(1)

file:/usr/share/doc/haproxy/configuration.txt.gz

Main PID: 18983 (haproxy)

Status: "Ready."

Tasks: 5 (limit: 2266)

Memory: 74.9M (peak: 76.0M)

CPU: 403ms

CGroup: /system.slice/haproxy.service

├─18983 /usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -S /run/haproxy-master.sock

└─18987 /usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -S /run/haproxy-master.sock

Aug 06 08:26:59 lb.ocp.kifarunix.com haproxy[18987]: [WARNING] (18987) : Server ingress-router-443/wk-02 is DOWN, reason: Layer4 connection problem, info: "Connection refused", check duration: 0ms. 1 acti>

Aug 06 08:27:00 lb.ocp.kifarunix.com haproxy[18987]: [WARNING] (18987) : Server ingress-router-443/wk-03 is DOWN, reason: Layer4 connection problem, info: "Connection refused", check duration: 0ms. 0 acti>

Aug 06 08:27:00 lb.ocp.kifarunix.com haproxy[18987]: [ALERT] (18987) : proxy 'ingress-router-443' has no server available!

Aug 06 08:27:00 lb.ocp.kifarunix.com haproxy[18987]: [WARNING] (18987) : Server ingress-router-80/wk-01 is DOWN, reason: Layer4 connection problem, info: "Connection refused", check duration: 0ms. 2 activ>

Aug 06 08:27:00 lb.ocp.kifarunix.com haproxy[18987]: [WARNING] (18987) : Server ingress-router-80/wk-02 is DOWN, reason: Layer4 connection problem, info: "Connection refused", check duration: 0ms. 1 activ>

Aug 06 08:27:00 lb.ocp.kifarunix.com haproxy[18987]: [WARNING] (18987) : Health check for server api-server-6443/ms-02 failed, reason: Layer7 wrong status, code: 500, info: "Internal Server Error", check >

Aug 06 08:27:00 lb.ocp.kifarunix.com haproxy[18987]: [WARNING] (18987) : Server api-server-6443/ms-02 is DOWN. 2 active and 0 backup servers left. 7 sessions active, 0 requeued, 0 remaining in queue.

Aug 06 08:27:00 lb.ocp.kifarunix.com haproxy[18987]: [WARNING] (18987) : Server ingress-router-80/wk-03 is DOWN, reason: Layer4 connection problem, info: "Connection refused", check duration: 0ms. 0 activ>

Aug 06 08:27:00 lb.ocp.kifarunix.com haproxy[18987]: [ALERT] (18987) : proxy 'ingress-router-80' has no server available!

Aug 06 08:27:01 lb.ocp.kifarunix.com haproxy[18987]: [WARNING] (18987) : Health check for server api-server-6443/ms-03 succeeded, reason: Layer7 check passed, code: 200, check duration: 4ms, status: 2/2
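The sed command used earlier comments out every line that contains the word bootstrap, and running it twice adds a second # to each of them. A narrower variant, sketched below with the hypothetical helper name comment_out_bootstrap, touches only server lines whose server name is bootstrap, keeps a .bak copy of the file, and can be run repeatedly without changing the result.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out only "server bootstrap ..." lines.
# Lines that are already commented start with '#' and no longer match,
# so repeated runs leave the file unchanged.
comment_out_bootstrap() {
    local cfg="$1"
    sed -i.bak -E 's/^([[:space:]]*)(server[[:space:]]+bootstrap[[:space:]])/\1#\2/' "$cfg"
}

# Example:
#   sudo bash -c "$(declare -f comment_out_bootstrap); comment_out_bootstrap /etc/haproxy/haproxy.cfg"
#   sudo haproxy -c -f /etc/haproxy/haproxy.cfg && sudo systemctl restart haproxy
```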

You can also check HAProxy stats:

Logging in to the OpenShift cluster

The OpenShift cluster should now be up and ready. From the bastion host, where you ran the OpenShift installer and installed the oc CLI, you can log in using the CLI by exporting the kubeconfig file that was generated during installation.

To login:

- You can export the kubeconfig:

export KUBECONFIG=<installation_directory>/auth/kubeconfig

For example:

export KUBECONFIG=ocp-upi-installation/auth/kubeconfig

- Or you can log in using the kubeadmin credentials:

oc login -u kubeadmin -p <kubeadmin-password>

The kubeadmin password is located in:

<installation_directory>/auth/kubeadmin-password

- Verify cluster access:

oc get node

Sample output:

NAME STATUS ROLES AGE VERSION

ms-01.ocp.kifarunix.com Ready control-plane,master 55m v1.32.6

ms-02.ocp.kifarunix.com Ready control-plane,master 54m v1.32.6

ms-03.ocp.kifarunix.com Ready control-plane,master 54m v1.32.6

Approving Machine Certificate Signing Requests (CSRs)

When new machines are added to an OpenShift cluster, two CSRs are generated for each: one for the client certificate and one for the server (kubelet serving) certificate. These must be approved before the nodes appear as Ready in the cluster.

Important considerations

- Approve CSRs within one hour of adding machines. After this, new CSRs may be generated, and all must be approved.

- On user-provisioned infrastructure (e.g. bare metal), you must implement a method to automatically approve server CSRs. Until the kubelet serving certificates are approved, commands like oc exec, oc logs, and oc rsh will fail.

Until the CSRs are approved, worker nodes might not appear in the output of:

oc get nodes

Therefore, list all CSRs and filter those in Pending state:

oc get csr

Sample output:

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

csr-5rklg 70m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-72wwj 70m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-7bfkp 9m9s kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-7bs9t 55m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-8b477 85m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-9s9f5 39m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-jc8gf 70m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-ll47v 24m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-lxzz4 9m9s kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-mk29m 39m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-mpbhz 55m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-mqkh2 85m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-qst6c 9m8s kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-t8lvj 55m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-tfhw8 24m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-vblcc 39m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-vjdzf 85m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-wj96h 24m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

You can approve the CSRs individually or all at once.

In the meantime, you can run the installation monitoring command while you sort out the CSRs:

./openshift-install --dir ocp-upi-installation/ wait-for install-complete --log-level=info

To approve individual CSRs, run the command below, replacing <csr_name> with the actual name of the CSR:

oc adm certificate approve <csr_name>

To approve all the pending client CSRs, run the command below. Note that client CSRs must be approved before server CSRs appear. Once the client CSR is approved, the kubelet submits a server CSR, which also requires approval.

oc get csr -o go-template='{{range .items}}{{if not .status}}{{.metadata.name}}{{"\n"}}{{end}}{{end}}' | xargs --no-run-if-empty oc adm certificate approve

Or use a loop:

for csr in `oc get csr --no-headers | grep -i pending | awk '{ print $1 }'`; do oc adm certificate approve $csr; done

Or simply approve every CSR that is listed:

oc get csr -o name | xargs oc adm certificate approve

Verify again:

oc get csr | grep -i pending

You will now see the server CSRs for the worker nodes appearing as pending:

csr-nzwln 4m kubernetes.io/kubelet-serving system:node:wk-01.ocp.kifarunix.com <none> Pending

csr-p6dkf 3m57s kubernetes.io/kubelet-serving system:node:wk-02.ocp.kifarunix.com <none> Pending

csr-q7sk4 3m58s kubernetes.io/kubelet-serving system:node:wk-03.ocp.kifarunix.com <none> Pending

Thus run;

oc get csr -o go-template='{{range .items}}{{if not .status}}{{.metadata.name}}{{"\n"}}{{end}}{{end}}' | xargs oc adm certificate approve

Or just use the for loop command used above.

All CSRs should now have been approved.

oc get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

csr-5rklg 82m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-6rn7f 5m47s kubernetes.io/kube-apiserver-client system:node:wk-02.ocp.kifarunix.com 24h Approved,Issued

csr-72wwj 82m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-7bfkp 21m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-7bs9t 67m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-8b477 97m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-9s9f5 52m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-hn5hg 5m52s kubernetes.io/kube-apiserver-client system:node:wk-01.ocp.kifarunix.com 24h Approved,Issued

csr-jc8gf 82m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-jvft5 6m10s kubernetes.io/kube-apiserver-client system:node:wk-02.ocp.kifarunix.com 24h Approved,Issued

csr-ldvfj 5m46s kubernetes.io/kube-apiserver-client system:node:wk-01.ocp.kifarunix.com 24h Approved,Issued

csr-ll47v 36m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-lxzz4 21m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-mhxs8 5m47s kubernetes.io/kube-apiserver-client system:node:wk-03.ocp.kifarunix.com 24h Approved,Issued

csr-mk29m 52m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-mpbhz 67m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-mqkh2 97m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-nzwln 7m21s kubernetes.io/kubelet-serving system:node:wk-01.ocp.kifarunix.com <none> Approved,Issued

csr-p2nh2 6m14s kubernetes.io/kube-apiserver-client system:node:wk-03.ocp.kifarunix.com 24h Approved,Issued

csr-p6dkf 7m18s kubernetes.io/kubelet-serving system:node:wk-02.ocp.kifarunix.com <none> Approved,Issued

csr-q7sk4 7m19s kubernetes.io/kubelet-serving system:node:wk-03.ocp.kifarunix.com <none> Approved,Issued

csr-qst6c 21m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-t8lvj 67m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-tfhw8 36m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-vblcc 52m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-vjdzf 97m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-wj96h 36m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued
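The considerations listed earlier call for an automated way to approve server CSRs on user-provisioned infrastructure. The sketch below shows one possible filtering step, not a complete or official approver: the hypothetical helper pending_serving_csrs reads the output of oc get csr --no-headers and prints only Pending kubelet-serving requests whose requestor is a node on an allowlist you supply. A production approver would normally also inspect the contents of each request.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `oc get csr --no-headers` output on stdin and print
# the names of Pending kubelet-serving CSRs requested by an allowed node.
# Arguments: the node names that are allowed to request serving certificates.
pending_serving_csrs() {
    local allowed=" $* "
    awk -v allowed="$allowed" '
        $3 == "kubernetes.io/kubelet-serving" && $NF == "Pending" && $4 ~ /^system:node:/ {
            node = $4
            sub(/^system:node:/, "", node)
            # Exact match against the space-delimited allowlist.
            if (index(allowed, " " node " ") > 0) print $1
        }'
}

# Example (could be run periodically, e.g. from cron, while nodes are joining):
#   oc get csr --no-headers \
#     | pending_serving_csrs wk-01.ocp.kifarunix.com wk-02.ocp.kifarunix.com wk-03.ocp.kifarunix.com \
#     | xargs --no-run-if-empty oc adm certificate approve
```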

After both client and server CSRs are approved, the nodes will transition to Ready. Verify with:

oc get nodes

NAME STATUS ROLES AGE VERSION

ms-01.ocp.kifarunix.com Ready control-plane,master 114m v1.32.6

ms-02.ocp.kifarunix.com Ready control-plane,master 113m v1.32.6

ms-03.ocp.kifarunix.com Ready control-plane,master 113m v1.32.6

wk-01.ocp.kifarunix.com Ready worker 9m55s v1.32.6

wk-02.ocp.kifarunix.com Ready worker 9m53s v1.32.6

wk-03.ocp.kifarunix.com Ready worker 9m54s v1.32.6

Initial Operator Configuration

After the OpenShift Container Platform control plane initializes, Operators are configured to ensure all components become available.

My cluster operators are all online:

oc get co

Sample output:

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE MESSAGE

authentication 4.19.5 True False False 26m

baremetal 4.19.5 True False False 137m

cloud-controller-manager 4.19.5 True False False 140m

cloud-credential 4.19.5 True False False 142m

cluster-autoscaler 4.19.5 True False False 137m

config-operator 4.19.5 True False False 137m

console 4.19.5 True False False 32m

control-plane-machine-set 4.19.5 True False False 136m

csi-snapshot-controller 4.19.5 True False False 136m

dns 4.19.5 True False False 127m

etcd 4.19.5 True False False 135m

image-registry 4.19.5 True False False 127m

ingress 4.19.5 True False False 34m

insights 4.19.5 True False False 137m

kube-apiserver 4.19.5 True False False 132m

kube-controller-manager 4.19.5 True False False 132m

kube-scheduler 4.19.5 True False False 134m

kube-storage-version-migrator 4.19.5 True False False 127m

machine-api 4.19.5 True False False 136m

machine-approver 4.19.5 True False False 137m

machine-config 4.19.5 True False False 136m

marketplace 4.19.5 True False False 136m

monitoring 4.19.5 True False False 28m

network 4.19.5 True False False 138m

node-tuning 4.19.5 True False False 36m

olm 4.19.5 True False False 127m

openshift-apiserver 4.19.5 True False False 127m

openshift-controller-manager 4.19.5 True False False 133m

openshift-samples 4.19.5 True False False 126m

operator-lifecycle-manager 4.19.5 True False False 136m

operator-lifecycle-manager-catalog 4.19.5 True False False 136m

operator-lifecycle-manager-packageserver 4.19.5 True False False 127m

service-ca 4.19.5 True False False 137m

storage 4.19.5 True False False 137m

If some Operators are unavailable, it may indicate issues such as misconfigurations, resource constraints, or component failures. To troubleshoot:

- Check Operator Logs: Review the logs for the affected Operators to identify specific errors. Use the oc adm must-gather command to collect diagnostic data, as detailed in the OpenShift documentation on gathering bootstrap data.

- Review Troubleshooting Resources: For detailed steps on resolving Operator-specific issues, refer to the OpenShift documentation on troubleshooting Operator issues. This includes verifying Operator subscription status, checking pod health, and gathering Operator logs.
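With more than thirty operators, it helps to print only the ones that need attention. The sketch below uses a hypothetical helper, unhealthy_operators, which reads the output of oc get co --no-headers and lists operators that are not Available=True, Progressing=False and Degraded=False. It assumes the column layout shown in the sample output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `oc get co --no-headers` output on stdin and print
# the names of operators that are not in the healthy True/False/False state.
unhealthy_operators() {
    awk 'NF > 0 {
        # The VERSION column can be empty early in an install, which shifts the
        # fields left, so check whether field 2 already holds a condition value.
        i = ($2 ~ /^(True|False|Unknown)$/) ? 2 : 3
        if ($i != "True" || $(i + 1) != "False" || $(i + 2) != "False") print $1
    }'
}

# Example:
#   oc get co --no-headers | unhealthy_operators
```

An empty result means every operator reports the healthy state. Any name that is printed is a candidate for the troubleshooting steps above.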

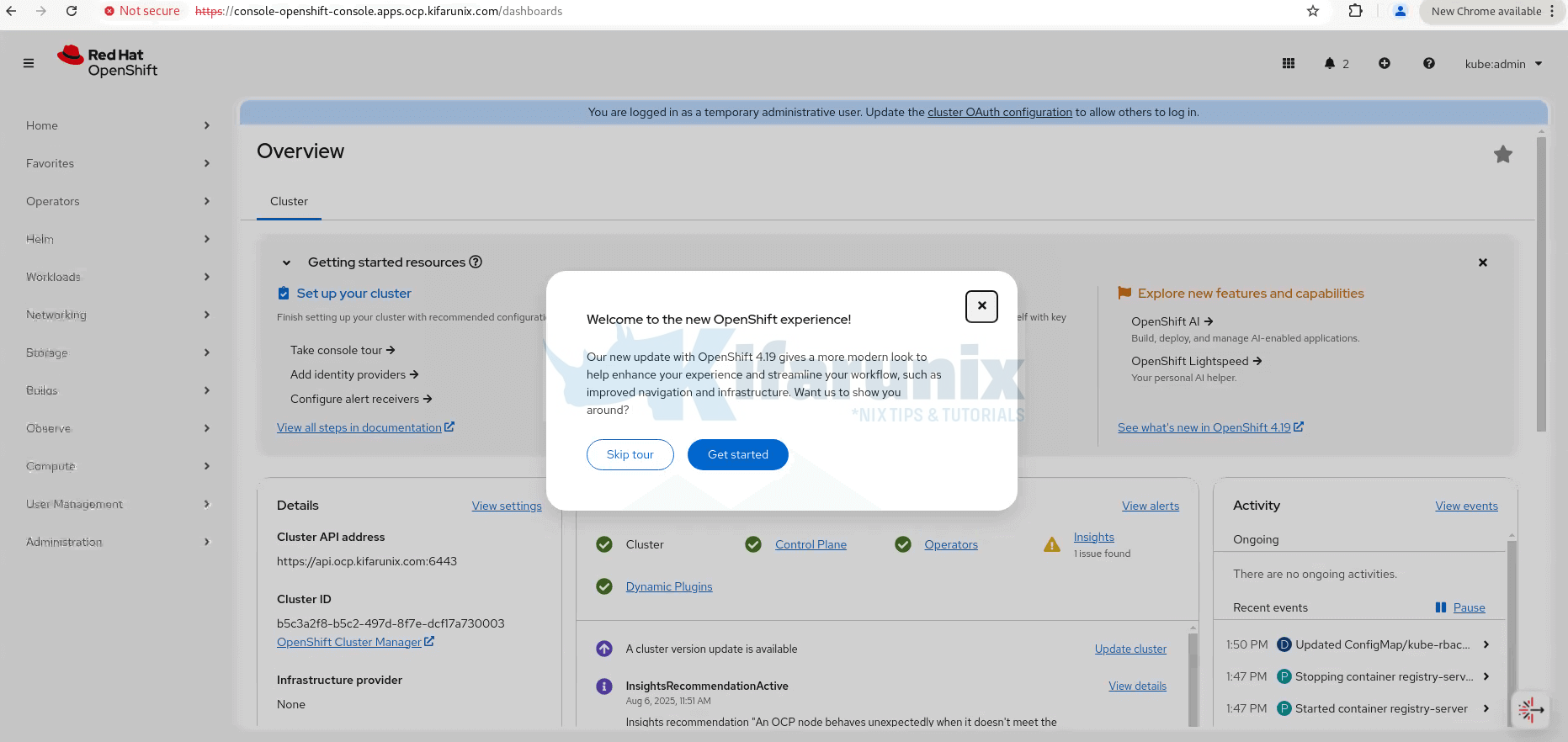

At this point, the install-complete monitoring command should have finished:

INFO Waiting up to 40m0s (until 10:44AM UTC) for the cluster at https://api.ocp.kifarunix.com:6443 to initialize...

INFO Waiting up to 30m0s (until 10:34AM UTC) to ensure each cluster operator has finished progressing...

INFO All cluster operators have completed progressing

INFO Checking to see if there is a route at openshift-console/console...

INFO Install complete!

INFO To access the cluster as the system:admin user when using 'oc', run

INFO export KUBECONFIG=/home/kifarunix/ocp-upi/ocp-upi-installation/auth/kubeconfig

INFO Access the OpenShift web-console here: https://console-openshift-console.apps.ocp.kifarunix.com

INFO Login to the console with user: "kubeadmin", and password: "wqWAA-9do3x-EwZKV-WF7Go"

INFO Time elapsed: 3m35s

And there we go!

The cluster is now ready. You can check all the pods in the cluster;

oc get pod -A

Key things from the install-complete output above:

- CLI Access: Run the following to access the cluster as system:admin using the oc CLI:

export KUBECONFIG=<installation_directory>/auth/kubeconfig

- Web Console URL: Access the OpenShift web console at https://console-openshift-console.apps.ocp.kifarunix.com

- Initial Login Credentials:

  - Username: kubeadmin

  - Password: wqWAA-9do3x-EwZKV-WF7Go

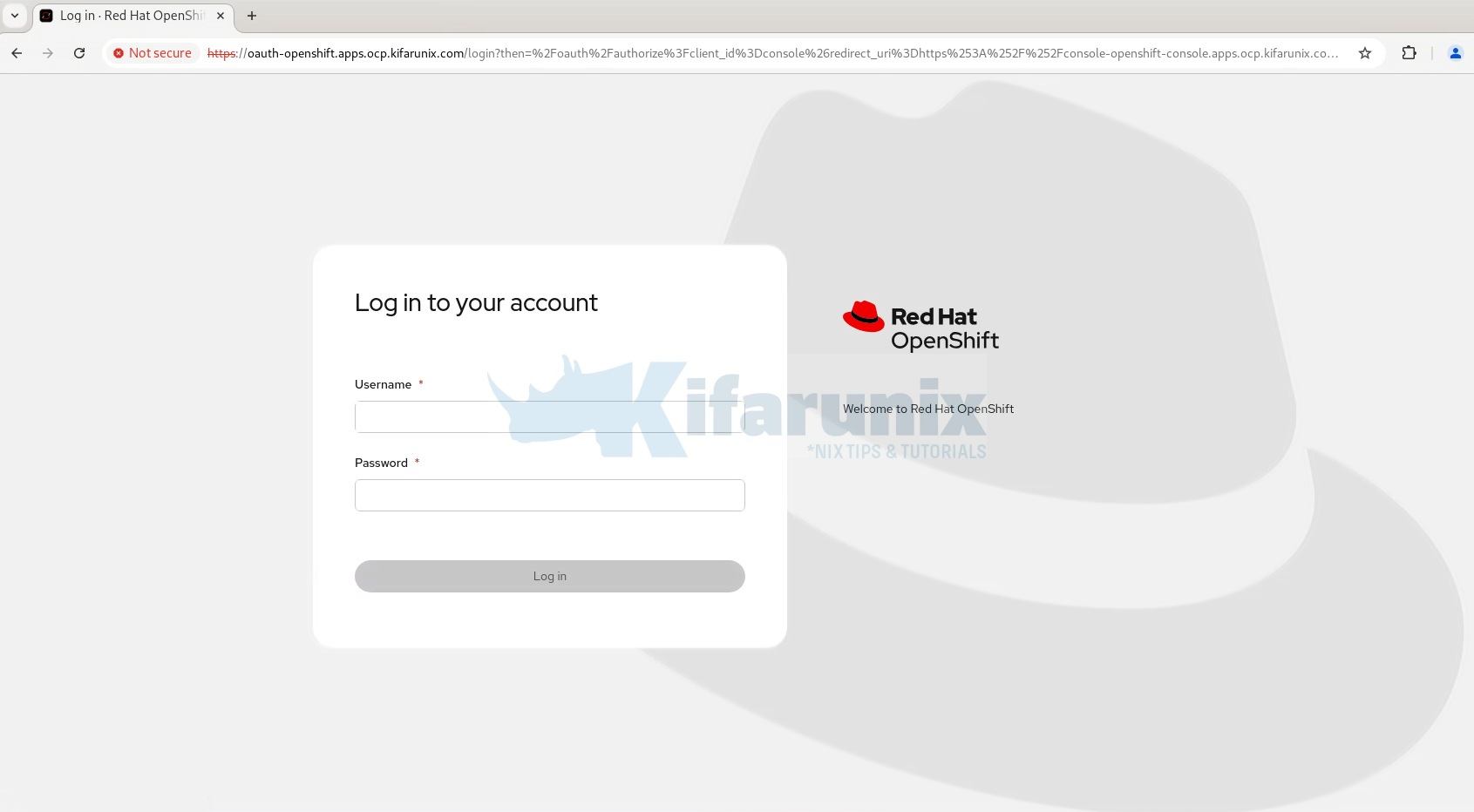

Accessing the OpenShift Cluster via Web Console

You can access the OpenShift web console using a URL provided at the end of a successful installation. It typically looks like: https://console-openshift-console.apps.ocp.kifarunix.com

If you’re not sure what your web console URL is, you can:

- Check the install-complete output. The URL is displayed there.

- Or, retrieve it from the CLI using the following command:

oc whoami --show-console

To access the OpenShift web console and related services, the console URL and other cluster subdomains must be resolvable from your local machine or bastion host. If you don’t have DNS set up, you can manually map the required hostnames to the load balancer IP address in your /etc/hosts file. The hosts file does not support wildcard DNS, so you must add each relevant hostname individually.

Some of the entries you can add to hosts file:

- api.ocp.kifarunix.com

- console-openshift-console.apps.ocp.kifarunix.com

- oauth-openshift.apps.ocp.kifarunix.com

- downloads-openshift-console.apps.ocp.kifarunix.com

- alertmanager-main-openshift-monitoring.apps.ocp.kifarunix.com

- grafana-openshift-monitoring.apps.ocp.kifarunix.com

- prometheus-k8s-openshift-monitoring.apps.ocp.kifarunix.com

- thanos-querier-openshift-monitoring.apps.ocp.kifarunix.com

cat /etc/hosts

...

10.185.10.120 api.ocp.kifarunix.com console-openshift-console.apps.ocp.kifarunix.com oauth-openshift.apps.ocp.kifarunix.com downloads-openshift-console.apps.ocp.kifarunix.com alertmanager-main-openshift-monitoring.apps.ocp.kifarunix.com grafana-openshift-monitoring.apps.ocp.kifarunix.com prometheus-k8s-openshift-monitoring.apps.ocp.kifarunix.com thanos-querier-openshift-monitoring.apps.ocp.kifarunix.com

...
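Because the hosts file needs every name spelled out, the line can be assembled from the load balancer IP and the cluster domain. This is a sketch with a hypothetical helper name, hosts_line. The three default route names are taken from the list above, and any additional route prefixes are passed as extra arguments.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one /etc/hosts line that maps the load balancer IP
# to the API name and to the given route prefixes under apps.<domain>.
# Usage: hosts_line LB_IP CLUSTER_DOMAIN [route-prefix...]
hosts_line() {
    local lb_ip="$1" domain="$2" name line
    shift 2
    # Fall back to the routes needed for console login when none are given.
    [ "$#" -gt 0 ] || set -- console-openshift-console oauth-openshift downloads-openshift-console
    line="$lb_ip api.$domain"
    for name in "$@"; do
        line="$line $name.apps.$domain"
    done
    printf '%s\n' "$line"
}

# Example:
#   hosts_line 10.185.10.120 ocp.kifarunix.com | sudo tee -a /etc/hosts
```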

For production or shared environments, it’s best to configure proper DNS records that point all required OpenShift subdomains to your load balancer. This makes management easier, supports scaling, and avoids manual updates.

Accessing OpenShift web console.

If you’re using self-signed SSL certificates, some browsers, especially Firefox, may block access due to HSTS (HTTP Strict Transport Security). If you encounter issues, try accessing the console in Incognito/Private mode.

Use the default login credentials for now:

- Username: kubeadmin

- Password: You can get the password from one of the following:

  - The install-complete output

  - <installation_directory>/auth/kubeadmin-password

  - <installation_directory>/.openshift_install.log

The kubeadmin account is meant for temporary access only. Configure a proper identity provider (such as HTPasswd, LDAP…), add your own admin users, and remove the kubeadmin account once authentication is set up.

If you want, you can click Get started to take a short tour of some of the OpenShift cluster features. Otherwise, click Skip tour to go to the dashboard.

Integrate OpenShift with Windows AD for Authentication

If you want to control OpenShift authentication with a centralized identity provider, check our guide on how to integrate with Windows Active Directory:

Integrate OpenShift with Active Directory for Authentication

Conclusion

And that brings us to the end of this tutorial on Deploying a Multinode OpenShift Cluster Using UPI (User-Provisioned Infrastructure). Throughout this guide, we walked through each stage of the deployment process from preparing infrastructure to accessing the OpenShift web console, giving you full control over your OpenShift environment. You now have a fully functional, multi-node OpenShift cluster deployed via UPI, with deep insights into each manual step involved in the process.