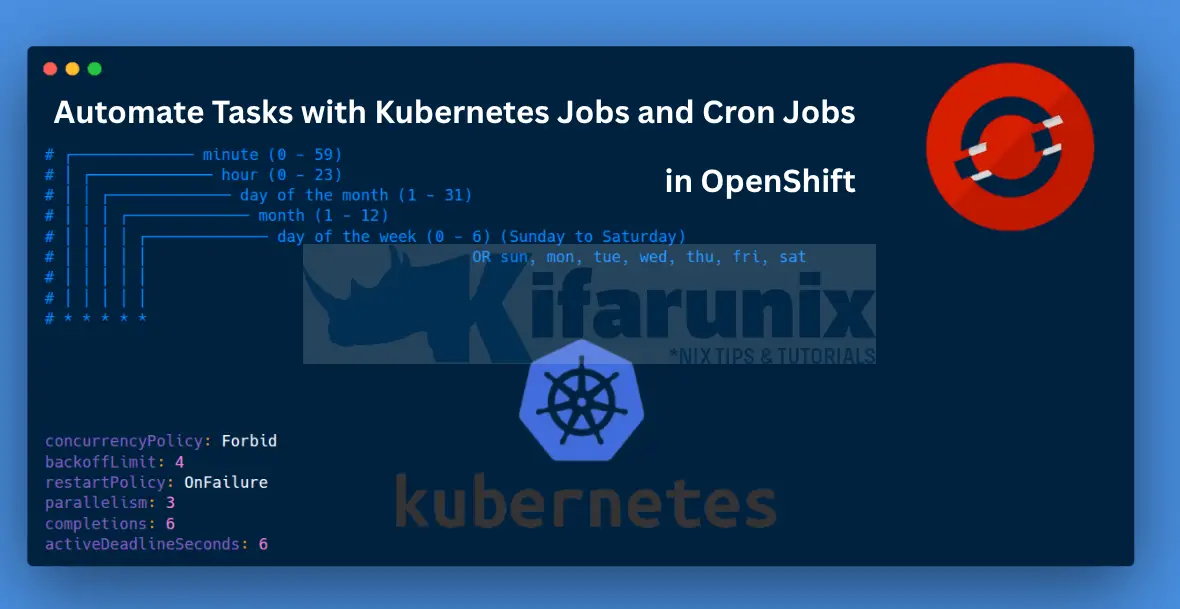

In this guide, we’ll explore how to set up and automate tasks in OpenShift with Kubernetes Jobs and Cron Jobs. Managing tasks manually in a Kubernetes-based environment like Red Hat OpenShift can be challenging, time-consuming, and error-prone. Whether it’s database backups, log rotations, or periodic health checks, repetitive tasks can quickly become a burden. With Kubernetes Jobs and Cron Jobs, you can automate these routine tasks and keep your systems running smoothly and efficiently.


Automate Tasks in OpenShift with Kubernetes Jobs and Cron Jobs

What Are Kubernetes Jobs and Cron Jobs?

Kubernetes provides two key resources for task automation:

- Kubernetes Job: A resource that runs a task until it completes successfully. Ideal for one-off operations like database migrations or batch processing.

- Kubernetes Cron Job: A resource that runs tasks on a recurring schedule. Perfect for recurring tasks like backups or report generation. Cron Jobs are essentially Jobs with a schedule attached: just like the Linux cron scheduler, they create Jobs at specified intervals using the familiar cron syntax.

For example, you can use a Cron Job to back up a database every night, or a Job to initialize a new application environment.

Why Use Kubernetes Jobs and Cron Jobs on OpenShift?

Using Kubernetes Jobs and Cron Jobs on OpenShift offers several advantages:

- Efficiency: Save time by eliminating manual interventions.

- Consistency: Ensure tasks run predictably with predefined configurations.

- Scalability: Spread work across many pods in parallel, even in large clusters.

- Reliability: Reduce human error with automated scheduling.

Scheduling One-Off Tasks with Kubernetes Jobs on OpenShift

Creating a Kubernetes Job on OpenShift

A Kubernetes Job creates one or more pods to execute a task and ensures it completes successfully. Here’s how to create a Job in OpenShift/K8s.

Step 1: Create a Job Specification

While it is possible to create a Job imperatively from the CLI, it is generally better to use the declarative method with YAML manifests. Here is our sample manifest for creating a Job on OpenShift/Kubernetes.

cat job.yaml

Sample Job YAML content:

apiVersion: batch/v1
kind: Job
metadata:
  name: example-job
spec:
  template:
    spec:
      containers:
      - name: example-job-container
        image: busybox
        command:
        - sh
        - -c
        - echo Processing task && sleep 5
      restartPolicy: Never
  backoffLimit: 4
  activeDeadlineSeconds: 60

From the job manifest above, some of the key fields to note are:

- restartPolicy: Never: If the container fails, it is not restarted in place; instead, the Job controller creates a replacement pod, up to the backoffLimit. A Job’s pod template must use either Never or OnFailure.

- backoffLimit and activeDeadlineSeconds. We will discuss this further below.

Step 2: Deploy the Job

Apply the Job to your OpenShift cluster using the OpenShift CLI (oc):

oc apply -f job.yaml

The Job is created in the current project (namespace). To target a specific project, set metadata.namespace in the manifest or pass -n <project> to oc apply.
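As noted in Step 1, Jobs can also be created imperatively. A practical middle ground is to let oc create job generate the manifest with --dry-run=client -o yaml, save it, and then add fields such as backoffLimit and activeDeadlineSeconds by hand, since oc create job has no flags for them. The sketch below wraps this in a hypothetical helper, scaffold_job; the OC variable is an assumption added so you can substitute echo to preview the command without a cluster.

```shell
#!/bin/sh
# Hypothetical helper: print a Job manifest generated by "oc create job"
# without creating anything on the cluster (--dry-run=client).
# Usage: scaffold_job NAME IMAGE COMMAND [ARGS...]
# Set OC=echo to print the oc command line instead of running it.
scaffold_job() (
  name="$1"
  image="$2"
  shift 2
  "${OC:-oc}" create job "$name" --image="$image" \
    --dry-run=client -o yaml -- "$@"
)
```

For example, scaffold_job example-job busybox sh -c 'echo Processing task && sleep 5' > job.yaml gives a starting point that you can then extend to match the manifest above.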

Step 3: Verify Execution

Check the Job’s status and output:

oc get jobs

Sample output:

NAME          STATUS     COMPLETIONS   DURATION   AGE
example-job   Complete   1/1           4s         20s

oc get pods --selector=job-name=example-job

Sample output:

NAME                READY   STATUS      RESTARTS   AGE
example-job-psqq7   0/1     Completed   0          99s

Check the logs:

oc logs job/example-job

Sample output:

Processing task

Or view the logs of the pod directly:

oc logs pod/example-job-psqq7

Sample output:

Processing task

Managing Failures with backoffLimit

The backoffLimit field specifies how many times Kubernetes retries a failed pod before marking the Job as failed. In the example, backoffLimit: 4 allows up to four retries if the pod encounters errors (e.g., a network issue). Note that the default value for backoffLimit is 6, allowing up to 6 retry attempts.

Always choose a backoffLimit based on task reliability. For tasks prone to transient failures (e.g., API calls), 4-6 retries are common. For critical tasks requiring immediate alerts, use a lower value.

The retry mechanism follows an exponential backoff strategy, starting with a 10-second delay and doubling with each subsequent failure, up to a maximum delay of 6 minutes.

For instance, if backoffLimit is set to 4, the retry schedule would be:

- 1st retry: after 10 seconds

- 2nd retry: after 20 seconds

- 3rd retry: after 40 seconds

- 4th retry: after 80 seconds

If all retries fail, the Job is marked as failed.
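The retry delays above follow a simple formula: 10 × 2^(n-1) seconds before retry n, capped at 360 seconds. The following idealized sketch computes that schedule; backoff_delay is a hypothetical helper, and real timings can drift slightly because the controller also has to notice the failure and schedule a new pod.

```shell
#!/bin/sh
# Idealized model of the Job retry delay: 10 s before the 1st retry,
# doubling for each later retry, capped at 360 s (6 minutes).
# backoff_delay is a hypothetical helper; $1 is the 1-based retry number.
backoff_delay() (
  retry="$1"
  delay=10
  i=1
  # Stop doubling once the cap is reached (also avoids integer overflow)
  while [ "$i" -lt "$retry" ] && [ "$delay" -lt 360 ]; do
    delay=$((delay * 2))
    i=$((i + 1))
  done
  if [ "$delay" -gt 360 ]; then
    delay=360
  fi
  echo "$delay"
)

# Schedule for backoffLimit: 4
for n in 1 2 3 4; do
  echo "retry $n: after $(backoff_delay "$n") seconds"
done
# retry 1: after 10 seconds
# retry 2: after 20 seconds
# retry 3: after 40 seconds
# retry 4: after 80 seconds
```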

Enforcing Timeouts with activeDeadlineSeconds

The activeDeadlineSeconds field sets a maximum duration for the Job. If the Job exceeds this limit (e.g., 60 seconds in the example), Kubernetes terminates it, regardless of remaining retries.

Set activeDeadlineSeconds to accommodate typical task duration plus a buffer. For short tasks, 60-120 seconds is often sufficient; for longer tasks, adjust accordingly to prevent premature termination.

Note that a Job’s activeDeadlineSeconds takes precedence over its backoffLimit. Therefore, a Job that is retrying one or more failed Pods will not deploy additional Pods once it reaches the time limit specified by activeDeadlineSeconds, even if the backoffLimit is not yet reached.
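To see how activeDeadlineSeconds can cut the retry budget short, here is an idealized sketch. It assumes every attempt fails after running for a fixed time, uses the 10-second doubling backoff described above, and ignores pod scheduling and image pull time, so a real cluster will usually fit fewer attempts. attempts_before_deadline is a hypothetical helper.

```shell
#!/bin/sh
# Idealized sketch: how many pod attempts start before activeDeadlineSeconds
# is reached, assuming every attempt fails after task_s seconds and the
# retry delay starts at 10 s, doubles, and is capped at 360 s.
# Pod scheduling and image pull time are ignored.
# attempts_before_deadline is a hypothetical helper.
attempts_before_deadline() (
  task_s="$1"        # how long each (failing) attempt runs
  deadline_s="$2"    # activeDeadlineSeconds
  backoff_limit="$3" # backoffLimit (retries after the first attempt)
  start=0
  attempts=0
  delay=10
  while [ "$start" -lt "$deadline_s" ] && [ "$attempts" -le "$backoff_limit" ]; do
    attempts=$((attempts + 1))
    # The next attempt starts after this one fails plus the backoff delay
    start=$((start + task_s + delay))
    delay=$((delay * 2))
    if [ "$delay" -gt 360 ]; then
      delay=360
    fi
  done
  echo "$attempts"
)

attempts_before_deadline 5 60 4    # prints 3
attempts_before_deadline 5 600 4   # prints 5 (1 attempt + 4 retries)
```

So with a task that fails after about 5 seconds, a 60-second deadline allows only 3 of the 5 attempts that backoffLimit: 4 would otherwise permit.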

Read more on job termination and cleanup.

Running Tasks Concurrently with Parallelism and Completions

For tasks that can be divided into smaller units, the parallelism and completions fields enable concurrent execution across multiple pods, reducing total processing time.

- parallelism: defines the number of pods to run simultaneously.

- completions: defines the total number of successful task executions required.

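This is useful when processing large datasets, such as resizing 100 images, where each pod handles a subset. Note that with the default completion mode the pods are interchangeable; to give each pod a distinct slice of the work, set completionMode: Indexed, which exposes each pod’s index in the JOB_COMPLETION_INDEX environment variable (or have pods pull work items from a queue).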
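With completionMode: Indexed, each pod receives a JOB_COMPLETION_INDEX value from 0 to completions - 1, which a container script can use to pick its share of the work. Below is a minimal sketch of a round-robin split; shard_items is a hypothetical helper, and the item numbers stand in for whatever your real work units are (file names, image IDs, and so on). With 100 images and completions: 6, indexes 0 to 3 get 17 images each and indexes 4 and 5 get 16.

```shell
#!/bin/sh
# Hypothetical helper: list the work items (numbered 0..total-1) that belong
# to one pod of an Indexed Job, using a round-robin split: item i goes to
# the pod whose index equals i mod completions.
shard_items() (
  index="$1"        # this pod's JOB_COMPLETION_INDEX
  completions="$2"  # the Job's completions value
  total="$3"        # total number of work items
  [ "$completions" -gt 0 ] || exit 1
  i="$index"
  out=""
  while [ "$i" -lt "$total" ]; do
    out="$out $i"
    i=$((i + completions))
  done
  echo "${out# }"
)

# Inside an Indexed Job pod, JOB_COMPLETION_INDEX is set by Kubernetes;
# default to 0 so the script can also be tried locally.
shard_items "${JOB_COMPLETION_INDEX:-0}" 6 100
```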

Creating an Example Parallel Job

cat parallel-job.yaml

apiVersion: batch/v1
kind: Job
metadata:
  name: parallel-job
spec:
  template:
    spec:
      containers:
      - name: parallel-job-container
        image: busybox
        command: ["sh", "-c", "echo Processing task $$ && sleep 5"]
      restartPolicy: Never
  parallelism: 3
  completions: 6
  backoffLimit: 4
  activeDeadlineSeconds: 60

This YAML file will cause Kubernetes to create and run three pods concurrently (parallelism: 3) until six tasks complete successfully (completions: 6). As each pod finishes, a new one starts.
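Since at most parallelism pods run at once, a rough lower bound on the Job’s wall-clock time is ceil(completions / parallelism) × task duration, and activeDeadlineSeconds should leave clear headroom above that figure. A minimal sketch; min_job_seconds is a hypothetical helper, and the estimate ignores scheduling, image pulls, and retries.

```shell
#!/bin/sh
# Rough lower bound on a parallel Job's wall-clock time: at most
# "parallelism" pods run at once, so with equal-length tasks the Job needs
# at least ceil(completions / parallelism) rounds of task_seconds each.
# Ignores scheduling, image pulls, and retries.
# min_job_seconds is a hypothetical helper.
min_job_seconds() (
  completions="$1"; parallelism="$2"; task_seconds="$3"
  [ "$parallelism" -gt 0 ] || exit 1
  rounds=$(( (completions + parallelism - 1) / parallelism ))  # ceiling division
  echo $(( rounds * task_seconds ))
)

min_job_seconds 6 3 5   # parallel-job example: prints 10
```

For the parallel-job example (6 completions, parallelism 3, about 5 seconds per task), that is at least 10 seconds before any overhead.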

Deploy and monitor the Job:

oc apply -f parallel-job.yaml
oc get pods
oc get jobs

Balance parallelism with available cluster capacity. It’s recommended to set resource requests and limits for each pod to ensure fair scheduling and avoid resource contention. For example, in the container spec:

resources:
  limits:
    cpu: "500m"
    memory: "512Mi"
  requests:
    cpu: "200m"
    memory: "256Mi"

Scheduling Recurring Tasks with Kubernetes Cron Jobs

Kubernetes Cron Jobs automate tasks on a schedule, creating a Job at each interval. This section covers scheduling, preventing overlaps, and applying Job configurations like failure handling and parallelism.

Step 1: Define a Cron Job Specification

Create a YAML file (cronjob.yaml) to log a timestamp every 5 minutes:

cat cronjob.yaml

apiVersion: batch/v1
kind: CronJob
metadata:
  name: example-cronjob
spec:
  schedule: "*/5 * * * *"
  concurrencyPolicy: Forbid
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: cron-container
            image: busybox
            command: ["sh", "-c", "date; echo Task executed"]
          restartPolicy: OnFailure
      backoffLimit: 4
      activeDeadlineSeconds: 60

Key fields:

- schedule: “*/5 * * * *”: Runs every 5 minutes. Use Crontab Guru to validate cron expressions.

- concurrencyPolicy: Forbid: Prevents new Jobs from starting if a previous Job is still running.

- backoffLimit: 4 and activeDeadlineSeconds: 60: Apply to the generated Jobs, as explained in the Job section.

- restartPolicy: OnFailure: Restarts a failed container in place within the same pod instead of creating a new pod. With OnFailure, these container restarts count toward the Job’s backoffLimit.
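In the schedule “*/5 * * * *”, the minute field */5 matches every minute of the hour that is divisible by 5 (0, 5, ..., 55), measured on the clock rather than from when the Cron Job was created. The hypothetical helper below expands a */N minute field to make that concrete; it handles only this one form and is not a full cron parser.

```shell
#!/bin/sh
# Hypothetical helper: expand a cron minute field of the form "*/N" into
# the minutes of each hour it matches. Only this one form is supported.
expand_minute_step() (
  field="$1"
  case "$field" in
    '*/'*) step=${field#\*/} ;;
    *) echo "unsupported field: $field" >&2; exit 1 ;;
  esac
  # Reject empty, non-numeric, or zero steps
  case "$step" in
    ''|*[!0-9]*) echo "invalid step: $step" >&2; exit 1 ;;
  esac
  [ "$step" -gt 0 ] || { echo "invalid step: $step" >&2; exit 1; }
  m=0
  out=""
  while [ "$m" -le 59 ]; do
    out="$out $m"
    m=$((m + step))
  done
  echo "${out# }"
)

expand_minute_step '*/5'   # prints: 0 5 10 15 20 25 30 35 40 45 50 55
expand_minute_step '*/7'   # prints: 0 7 14 21 28 35 42 49 56
```

The */7 case shows a subtlety: the last match in an hour is minute 56 and the next is minute 0, so the gap at the top of the hour is only 4 minutes.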

Step 2: Deploy the Cron Job

Apply the configuration:

oc apply -f cronjob.yaml

Step 3: Monitor Execution

Verify the Cron Job and its spawned Jobs:

oc get cronjobs
oc get jobs
oc get pods
oc logs <pod-name>

Example:

oc logs pod/example-cronjob-29129590-m5ldn

Sample output:

Tue May 20 21:10:01 UTC 2025
Task executed

Preventing Overlaps with concurrencyPolicy: Forbid

The concurrencyPolicy: Forbid setting ensures that if a scheduled Job is still running when the next interval arrives, Kubernetes skips the new Job. This prevents resource contention and ensures sequential execution.

This can be used in critical tasks like database backups, where running multiple instances simultaneously could cause data inconsistencies.

Use Forbid for tasks requiring strict order.

Other policies that can be used include:

- Allow (the default if none is defined): The Cron Job allows concurrently running Jobs; a new Job starts even if the previous one is still running.

- Replace: When it is time to run a new Job and the previous one is still running, the Cron Job deletes the running Job and starts the new one.
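The three policies can be summarized as a small decision table. The sketch below is a simplified model of the behavior described above, not the CronJob controller’s actual code; on_schedule is a hypothetical helper.

```shell
#!/bin/sh
# Simplified model of what happens when a Cron Job's schedule fires.
# $1 = concurrencyPolicy (empty means the default, Allow)
# $2 = "yes" if a Job from an earlier run is still active
# on_schedule is a hypothetical helper that prints the resulting action.
on_schedule() (
  policy="${1:-Allow}"
  previous_running="$2"
  if [ "$previous_running" != "yes" ]; then
    echo "start new Job"
    exit 0
  fi
  case "$policy" in
    Allow)   echo "start new Job alongside the running one" ;;
    Forbid)  echo "skip this run" ;;
    Replace) echo "delete running Job, then start new Job" ;;
    *)       echo "invalid policy: $policy" >&2; exit 1 ;;
  esac
)

on_schedule Forbid yes   # prints: skip this run
```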

The Job created by the Cron Job can use parallelism and completions for concurrent task processing within a single scheduled run. Add these fields to jobTemplate.spec as shown in the parallel Job example.

Real-World Applications

Kubernetes Jobs and Cron Jobs are essential for automating tasks in OpenShift.

In our OpenShift cluster, we have a 3-tier web app: a Node.js frontend and API, with a MySQL database as the backend.

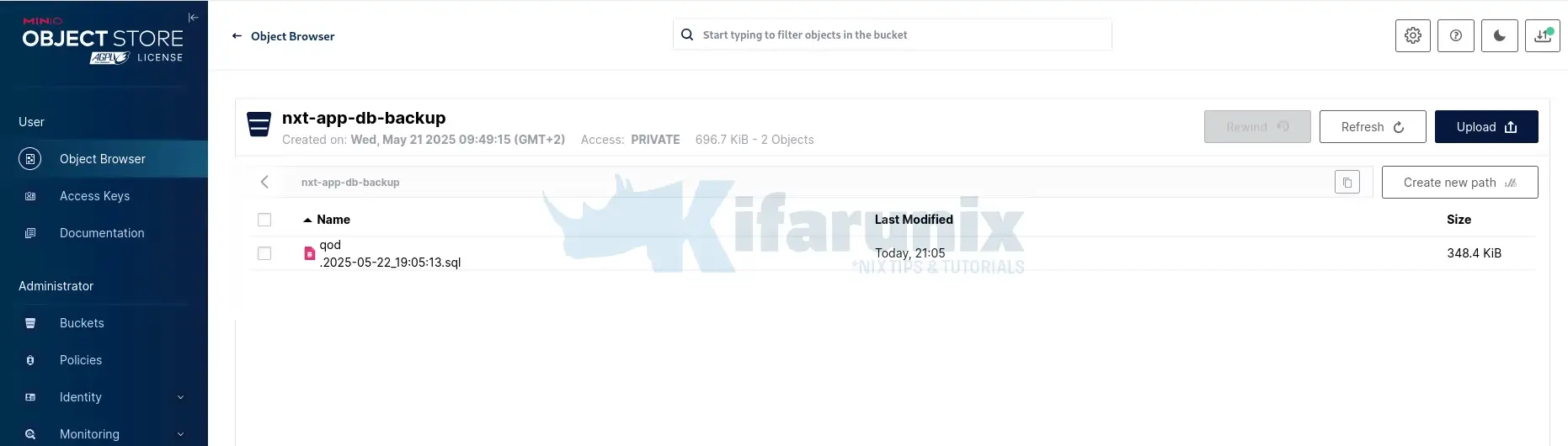

We also have a MinIO S3 bucket where we will store the database backups.

Example: Backing Up MySQL Database to MinIO s3 Bucket

If your web application relies on a database to store critical data, such as user accounts or transaction records, then regular backups are necessary to protect this data. Below, we show how to use a Kubernetes Job for a one-time backup (e.g., before a schema migration) and a Cron Job for nightly backups at 11 PM.

If you need to set up MinIO, check the guides below:

MinIO S3 Object storage guides

One-Time Backup with a Kubernetes Job

A Job is ideal for creating a one-time backup of a workload, for example before an application update, as it runs the task once and ensures completion.

Create a YAML file, e.g., mysql-backup-job.yaml:

cat mysql-backup-job.yaml

apiVersion: batch/v1
kind: Job
metadata:
  name: nxt-app-db-backup
spec:
  template:
    spec:
      serviceAccountName: db-backup
      containers:
      - name: mysqldump
        image: mariadb
        env:
        - name: DB_HOST
          valueFrom:
            secretKeyRef:
              name: qod-db-credentials
              key: DB_HOST
        - name: DB_USER
          valueFrom:
            secretKeyRef:
              name: qod-db-credentials
              key: DB_USER
        - name: DB_NAME
          valueFrom:
            secretKeyRef:
              name: qod-db-credentials
              key: DB_NAME
        - name: DB_PASS
          valueFrom:
            secretKeyRef:
              name: qod-db-credentials
              key: DB_PASS
        - name: AWS_ENDPOINT
          valueFrom:
            secretKeyRef:
              name: s3-nxt-app-backup
              key: AWS_ENDPOINT
        - name: AWS_ACCESS_KEY_ID
          valueFrom:
            secretKeyRef:
              name: s3-nxt-app-backup
              key: AWS_ACCESS_KEY_ID
        - name: AWS_SECRET_ACCESS_KEY
          valueFrom:
            secretKeyRef:
              name: s3-nxt-app-backup
              key: AWS_SECRET_ACCESS_KEY
        - name: BUCKET_NAME
          valueFrom:
            secretKeyRef:
              name: s3-nxt-app-backup
              key: BUCKET_NAME
        command:
        - sh
        - -c
        - |
          set -e
          apt update;apt install curl -y
          curl -so /usr/local/bin/mc https://dl.min.io/client/mc/release/linux-amd64/mc
          chmod +x /usr/local/bin/mc
          DUMP_TIME="$(date +%F_%T)"
          DUMP_FILE="$DB_NAME.$DUMP_TIME.sql"
          mariadb-dump -h $DB_HOST -u $DB_USER -p$DB_PASS $DB_NAME --skip-ssl > "$DUMP_FILE"
          mc alias set minio "$AWS_ENDPOINT" "$AWS_ACCESS_KEY_ID" "$AWS_SECRET_ACCESS_KEY"
          mc cp "$DUMP_FILE" "minio/$BUCKET_NAME/$DUMP_FILE"
      restartPolicy: Never
  backoffLimit: 3

This YAML defines a Kubernetes Job named nxt-app-db-backup that backs up the database using the mariadb container image, which runs as the root user by default (root is also needed here to install packages with apt). To allow this, the Job uses the db-backup service account, which must be granted the anyuid Security Context Constraint (SCC) so the container can run as root. The Job:

- Retrieves database credentials (DB_HOST, DB_USER, DB_NAME, DB_PASS) and AWS S3-compatible storage details (AWS_ENDPOINT, AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, BUCKET_NAME) from Kubernetes secrets.

- Installs curl and the MinIO client (mc).

- Creates a timestamped SQL dump file using mariadb-dump.

- Uploads the dump file to an S3-compatible bucket using mc.

- Runs with a Never restart policy and up to 3 retries (backoffLimit: 3).
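The Job assumes that the db-backup service account and the two Secrets already exist in the project. A sketch of creating them with oc follows; all secret values in angle brackets are placeholders (the database and bucket names are taken from the sample logs in this guide), and create_backup_prereqs is a hypothetical helper. Using --from-literal stores each value exactly as typed, without the trailing newline that values created from echo output can pick up. Set OC=echo to print the commands instead of running them.

```shell
#!/bin/sh
# Hypothetical helper: create the service account and Secrets that the
# backup Job references, in the current project. Replace the <...>
# placeholders with your own values. Set OC=echo for a dry preview.
create_backup_prereqs() {
  # Service account allowed to run the root-based mariadb image
  "${OC:-oc}" create serviceaccount db-backup
  "${OC:-oc}" adm policy add-scc-to-user anyuid -z db-backup

  # Database connection details
  "${OC:-oc}" create secret generic qod-db-credentials \
    --from-literal='DB_HOST=<mysql-service-host>' \
    --from-literal='DB_USER=<db-user>' \
    --from-literal='DB_NAME=qod' \
    --from-literal='DB_PASS=<db-password>'

  # S3-compatible (MinIO) backup target
  "${OC:-oc}" create secret generic s3-nxt-app-backup \
    --from-literal='AWS_ENDPOINT=<minio-endpoint-url>' \
    --from-literal='AWS_ACCESS_KEY_ID=<access-key>' \
    --from-literal='AWS_SECRET_ACCESS_KEY=<secret-key>' \
    --from-literal='BUCKET_NAME=nxt-app-db-backup'
}
```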

Deploy and Verify:

oc apply -f mysql-backup-job.yaml

Next, check the pods:

oc get pods --selector=job-name=nxt-app-db-backup -o wide

Sample output:

NAME                      READY   STATUS    RESTARTS   AGE   IP           NODE                               NOMINATED NODE   READINESS GATES
nxt-app-db-backup-zqkhw   1/1     Running   0          4s    10.131.0.3   k8s-wk-02.ocp.kifarunix-demo.com

Check the Job:

oc get jobs --selector=job-name=nxt-app-db-backup

Sample output:

NAME                STATUS    COMPLETIONS   DURATION   AGE
nxt-app-db-backup   Running   0/1           57s        8s

If all goes well, the Job should complete shortly.

Check the logs:

oc logs pod/<podname>
oc logs job/<jobname>

For example:

oc logs job/nxt-app-db-backup

Sample output:

...
Setting up curl (8.5.0-2ubuntu10.6) ...
Processing triggers for libc-bin (2.39-0ubuntu8.4) ...
Added `minio` successfully.
`/qod
.2025-05-22_19:05:13.sql` -> `minio/nxt-app-db-backup/qod
.2025-05-22_19:05:13.sql`
┌────────────┬─────────────┬──────────┬────────────┐
│ Total      │ Transferred │ Duration │ Speed      │
│ 348.35 KiB │ 348.35 KiB  │ 00m00s   │ 5.27 MiB/s │
└────────────┴─────────────┴──────────┴────────────┘

And it successfully backed up the DB.

Confirm on the MinIO bucket that you can see the DB dump.
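You can also check from the command line. Below is a small sketch using the MinIO client; it assumes mc is installed on your workstation with an alias named minio set up the same way as in the Job, and that object names contain no spaces. list_backups and latest_backup are hypothetical helpers, and MC can be pointed at a stub (such as echo) to preview the command.

```shell
#!/bin/sh
# Sketch: inspect backups from a workstation with the MinIO client.
# Assumes an mc alias named "minio" configured like the one in the Job
# (mc alias set minio ...). BUCKET_NAME defaults to the bucket seen in the
# Job logs. Set MC to another command (e.g. echo) to preview.
list_backups() {
  "${MC:-mc}" ls "minio/${BUCKET_NAME:-nxt-app-db-backup}/"
}

# Dump names embed a %F_%T timestamp, so a plain lexical sort is also a
# chronological sort; the object name is the last field of each mc ls line.
latest_backup() {
  list_backups | awk '{print $NF}' | sort | tail -n 1
}
```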

And there you go.

Nightly Backups with a Kubernetes Cron Job

To ensure the DB is backed up regularly, let’s create a Cron Job that backs up the DB every night at 11:00 PM.

cat nightly-db-backup.yaml

apiVersion: batch/v1
kind: CronJob
metadata:
  name: nxt-app-db-nightly-backup
spec:
  schedule: "0 23 * * *"
  concurrencyPolicy: Forbid
  jobTemplate:
    spec:
      template:
        spec:
          serviceAccountName: db-backup
          containers:
          - name: mysqldump
            image: mariadb
            imagePullPolicy: IfNotPresent
            env:
            - name: DB_HOST
              valueFrom:
                secretKeyRef:
                  name: qod-db-credentials
                  key: DB_HOST
            - name: DB_USER
              valueFrom:
                secretKeyRef:
                  name: qod-db-credentials
                  key: DB_USER
            - name: DB_NAME
              valueFrom:
                secretKeyRef:
                  name: qod-db-credentials
                  key: DB_NAME
            - name: DB_PASS
              valueFrom:
                secretKeyRef:
                  name: qod-db-credentials
                  key: DB_PASS
            - name: AWS_ENDPOINT
              valueFrom:
                secretKeyRef:
                  name: s3-nxt-app-backup
                  key: AWS_ENDPOINT
            - name: AWS_ACCESS_KEY_ID
              valueFrom:
                secretKeyRef:
                  name: s3-nxt-app-backup
                  key: AWS_ACCESS_KEY_ID
            - name: AWS_SECRET_ACCESS_KEY
              valueFrom:
                secretKeyRef:
                  name: s3-nxt-app-backup
                  key: AWS_SECRET_ACCESS_KEY
            - name: BUCKET_NAME
              valueFrom:
                secretKeyRef:
                  name: s3-nxt-app-backup
                  key: BUCKET_NAME
            command:
            - sh
            - -c
            - |
              set -e
              apt update;apt install curl -y
              curl -so /usr/local/bin/mc https://dl.min.io/client/mc/release/linux-amd64/mc
              chmod +x /usr/local/bin/mc
              DUMP_TIME="$(date +%F_%T)"
              DUMP_FILE="$DB_NAME.$DUMP_TIME.sql"
              mariadb-dump -h $DB_HOST -u $DB_USER -p$DB_PASS $DB_NAME --skip-ssl > "$DUMP_FILE"
              mc alias set minio "$AWS_ENDPOINT" "$AWS_ACCESS_KEY_ID" "$AWS_SECRET_ACCESS_KEY"
              mc cp "$DUMP_FILE" "minio/$BUCKET_NAME/$DUMP_FILE"
          restartPolicy: Never
      backoffLimit: 3

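Just like the previous configuration, this YAML file defines a Kubernetes CronJob, named nxt-app-db-nightly-backup, that schedules a daily database backup at 23:00 (11 PM). The rest of the settings are the same as in the one-time Job. Note that unless spec.timeZone is set, the schedule is interpreted in the time zone of the kube-controller-manager, which is usually UTC.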

Deploy the Cron Job:

oc apply -f nightly-db-backup.yaml

To demonstrate this quickly, I adjusted the Cron Job’s schedule to a time close to the current time, so I didn’t have to wait until 11 PM to confirm that it works.
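Alternatively, instead of editing the schedule, you can start a one-off Job from the Cron Job’s jobTemplate with oc create job --from=cronjob/NAME. This runs the same backup immediately and leaves the schedule untouched. The sketch wraps it in a hypothetical helper, run_cronjob_now, which appends a timestamp to the Job name so repeated runs don’t collide; OC=echo previews the command.

```shell
#!/bin/sh
# Hypothetical helper: start a one-off Job from a Cron Job's jobTemplate.
# The epoch-seconds suffix keeps Job names unique across repeated runs.
# Set OC=echo to print the command instead of running it.
run_cronjob_now() {
  cronjob="$1"
  "${OC:-oc}" create job "${cronjob}-manual-$(date +%s)" --from=cronjob/"$cronjob"
}

# Example (requires a logged-in oc session):
# run_cronjob_now nxt-app-db-nightly-backup
```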

Verify:

oc get pods

Sample output:

...
nxt-app-db-nightly-backup-29132385-6nz6p 0/1 Completed 0 68s

Check the Jobs:

oc get jobs

Sample output:

...
nxt-app-db-nightly-backup-29132385 Complete 1/1 14s 74s

And the logs:

oc logs nxt-app-db-nightly-backup-29132385-6nz6p

Sample output:

...
Processing triggers for libc-bin (2.39-0ubuntu8.4) ...
Added `minio` successfully.
`/qod
.2025-05-22_19:45:11.sql` -> `minio/nxt-app-db-backup/qod
.2025-05-22_19:45:11.sql`
┌────────────┬─────────────┬──────────┬────────────┐
│ Total      │ Transferred │ Duration │ Speed      │
│ 348.35 KiB │ 348.35 KiB  │ 00m00s   │ 9.63 MiB/s │
└────────────┴─────────────┴──────────┴────────────┘

And there you go!

You can verify backups in the MinIO bucket.

Best Practices for Robust Automation

To ensure reliable task automation:

- Monitor Performance: Use OpenShift’s monitoring tools (e.g., Prometheus) to track Job execution and resource usage.

- Validate Schedules: Test cron expressions with Crontab Guru to avoid errors.

- Secure Credentials: Store database passwords in Kubernetes Secrets, as shown in the backup example.

- Manage Storage: Regularly check the backup storage (in this guide, the MinIO bucket) and remove or expire old dumps so it doesn’t fill up.
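Since the backups in this guide are stored in a MinIO bucket, one way to keep storage in check is to delete dumps older than a retention period with mc rm. This is a hedged sketch, assuming the minio alias from the backup Job and a hypothetical 30-day default retention; prune_backups is a hypothetical helper. Verify the flags with mc rm --help for your mc version before running it, since it deletes data. A bucket lifecycle (expiration) rule in MinIO is an alternative that needs no client-side schedule.

```shell
#!/bin/sh
# Hypothetical helper: delete backup objects older than a retention period.
# $1 = retention as an mc duration string (default 30d, an assumption).
# Assumes the "minio" alias from the backup Job is configured locally.
# Set MC=echo to print the command instead of deleting anything.
prune_backups() (
  retention="${1:-30d}"
  "${MC:-mc}" rm --recursive --force --older-than "$retention" \
    "minio/${BUCKET_NAME:-nxt-app-db-backup}/"
)
```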

Common Challenges and Solutions

Address these common pitfalls to ensure reliable and efficient automation:

- Resource Contention: Define resource requests and limits to avoid overloading the cluster and ensure fair scheduling.

- Permission Errors: Use appropriate ServiceAccounts and Security Context Constraints (SCCs) to grant necessary permissions without overexposing access.

- Failed Backups: Configure backoffLimit and enable detailed logging to help diagnose and recover from transient failures.

Conclusion

Kubernetes Jobs and Cron Jobs enable efficient automation of OpenShift tasks, such as backing up an application database before updates or as part of regular maintenance. By configuring backoffLimit, activeDeadlineSeconds, parallelism, completions, and concurrencyPolicy: Forbid, you can build reliable automation workflows.

That concludes our guide on how to set up and automate tasks in OpenShift with Kubernetes Jobs and Cron Jobs.

Read more about Kubernetes jobs and cronjobs on the documentation page.