In this tutorial, you will learn how to process and visualize ModSecurity logs on ELK Stack. ModSecurity is an open source, cross-platform web application firewall (WAF) module developed by Trustwave’s SpiderLabs. Known as the “Swiss Army Knife” of WAFs, it enables web application defenders to gain visibility into HTTP(S) traffic and provides a powerful rules language and API to implement advanced protections.

ModSecurity utilizes the OWASP Core Rule Set, which can be used to protect web applications against various attacks, including those in the OWASP Top 10 such as SQL Injection, Cross Site Scripting, and Local File Inclusion.

In its OWASP Top 10 2017 report, OWASP included Insufficient Logging and Monitoring as one of the top 10 web application security risks.

“Insufficient logging and monitoring, coupled with missing or ineffective integration with incident response, allows attackers to further attack systems, maintain persistence, pivot to more systems, and tamper, extract, or destroy data. Most breach studies show time to detect a breach is over 200 days, typically detected by external parties rather than internal processes or monitoring”.

ELK/Elastic Stack, on the other hand, is a powerful platform that collects and processes data from multiple sources and stores it in a search and analytics engine, where users can visualize it using charts, tables, and graphs.

Process ModSecurity Logs for Visualizing on ELK Stack

Therefore, as a way of ensuring logging and monitoring of web application logs, we are going to learn how you can process and visualize ModSecurity WAF logs on ELK/Elastic Stack.

Prerequisites

Install and Setup ELK/Elastic Stack

Ensure that you have an ELK stack set up before you proceed. You can follow the links below to install and set up ELK/Elastic Stack on Linux:

Install ELK Stack on Ubuntu 20.04

Installing ELK Stack on CentOS 8

Install and Setup ModSecurity on CentOS/Ubuntu

Install and enable Web application protection with ModSecurity. You can check the guides below to install ModSecurity 3 (libModSecurity);

Configure LibModsecurity with Apache on CentOS 8

Configure LibModsecurity with Nginx on CentOS 8

Install LibModsecurity with Apache on Ubuntu 18.04

Configure Logstash to Process ModSecurity Logs

Logstash is a server-side data processing pipeline that ingests data from multiple sources, transforms it, and then stashes it onto search and data analytics engines such as Elasticsearch.

A Logstash pipeline is made up of three sections:

- the input: collects data from different sources.

- the filter: (optional) performs further processing on the data.

- the output: stashes the received data into a destination datastore such as Elasticsearch.
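The three sections above can be pictured as three chained steps. The Python sketch below is only a conceptual model of that flow, not how Logstash is implemented; the function names and the is_modsec_alert field are hypothetical and exist only for this illustration.

```python
def input_stage(lines):
    """Input: turn raw log lines into events (dicts with a "message" key)."""
    for line in lines:
        yield {"message": line.rstrip("\n")}


def filter_stage(events):
    """Filter (optional): enrich each event; here, flag ModSecurity alerts."""
    for event in events:
        event["is_modsec_alert"] = "ModSecurity:" in event["message"]
        yield event


def output_stage(events, datastore):
    """Output: stash the processed events in a destination (a plain list here)."""
    for event in events:
        datastore.append(event)


# Two made-up lines: one ModSecurity alert and one unrelated entry.
raw_lines = [
    "[client 192.168.56.100:54556] ModSecurity: Warning. Matched ...\n",
    "an unrelated web server error line\n",
]
datastore = []
output_stage(filter_stage(input_stage(raw_lines)), datastore)
print([event["is_modsec_alert"] for event in datastore])
```

Each stage only sees what the previous one hands over, which is why the filter section can be left out without affecting the input or the output.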

Configure Logstash Input plugin

Logstash supports various input plugins that enable it to read event data from various sources.

In this tutorial, we will be using Filebeat to collect and push ModSecurity logs to Logstash. Hence, we will configure Logstash to receive events from the Elastic Beats framework, which in this case is Filebeat.

To configure Logstash to collect events from Filebeat or any Elastic beat, create an input plugin configuration file.

vim /etc/logstash/conf.d/modsecurity-filter.conf

input {

beats {

port => 5044

}

}

Configure Logstash Filter plugin

We will be utilizing Logstash Grok filter to process our ModSecurity logs.

Below is a sample ModSecurity audit log line. An audit log line provides details about a transaction that’s blocked and why it was blocked.

[Sun Jul 12 21:22:47.978339 2020] [:error] [pid 78188:tid 140587634259712] [client 192.168.56.100:54556] ModSecurity: Warning. Matched "Operator `PmFromFile' with parameter `scanners-user-agents.data' against variable `REQUEST_HEADERS:User-Agent' (Value: `Mozilla/5.0 zgrab/0.x' ) [file "/etc/httpd/conf.d/modsecurity.d/owasp-crs/rules/REQUEST-913-SCANNER-DETECTION.conf"] [line "33"] [id "913100"] [rev ""] [msg "Found User-Agent associated with security scanner"] [data "Matched Data: zgrab found within REQUEST_HEADERS:User-Agent: mozilla/5.0 zgrab/0.x"] [severity "2"] [ver "OWASP_CRS/3.2.0"] [maturity "0"] [accuracy "0"] [tag "application-multi"] [tag "language-multi"] [tag "platform-multi"] [tag "attack-reputation-scanner"] [tag "paranoia-level/1"] [tag "OWASP_CRS"] [tag "OWASP_CRS/AUTOMATION/SECURITY_SCANNER"] [tag "WASCTC/WASC-21"] [tag "OWASP_TOP_10/A7"] [tag "PCI/6.5.10"] [hostname "kifarunix-demo.com"] [uri "/"] [unique_id "15945817674.229523"] [ref "o12,5v48,21t:lowercase"]

Every ModSecurity alert conforms to the following format when it appears in the Apache error log:

[Sun Jun 24 10:19:58 2007] [error] [client IP-Address] ModSecurity: ALERT_MESSAGE

To build a good visualization out of such an unstructured log, we need to create filters that extract only the specific fields that make sense and that give information about an attack.

You can utilize the readily available Logstash grok patterns or utilize regular expressions if there is no grok filter for the section of the log you need to extract.

You can test your grok patterns and regular expressions with the Grok Debugger in Kibana (Dev Tools > Grok Debugger), or with the http://grokdebug.herokuapp.com and http://grokconstructor.appspot.com/ apps.

We create Logstash grok patterns to process the ModSecurity audit log and extract the following fields:

- Event time (event_time): Jul 12 21:22:47.978339 2020

- Log severity level (log_level): error

- Client IP address, the source of the attack (src_ip): 192.168.56.100

- Alert message (alert_message):

ModSecurity: Warning. Matched "Operator `PmFromFile' with parameter `scanners-user-agents.data' against variable `REQUEST_HEADERS:User-Agent' (Value: `Mozilla/5.0 zgrab/0.x' ) [file "/etc/httpd/conf.d/modsecurity.d/owasp-crs/rules/REQUEST-913-SCANNER-DETECTION.conf"] [line "33"] [id "913100"] [rev ""] [msg "Found User-Agent associated with security scanner"] [data "Matched Data: zgrab found within REQUEST_HEADERS:User-Agent: mozilla/5.0 zgrab/0.x"] [severity "2"] [ver "OWASP_CRS/3.2.0"] [maturity "0"] [accuracy "0"] [tag "application-multi"] [tag "language-multi"] [tag "platform-multi"] [tag "attack-reputation-scanner"] [tag "paranoia-level/1"] [tag "OWASP_CRS"] [tag "OWASP_CRS/AUTOMATION/SECURITY_SCANNER"] [tag "WASCTC/WASC-21"] [tag "OWASP_TOP_10/A7"] [tag "PCI/6.5.10"] [hostname "kifarunix-demo.com"] [uri "/"] [unique_id "15945817674.229523"] [ref "o12,5v48,21t:lowercase"]

From the alert message (alert_message), we will further extract the following fields:

- Rules file (rules_file): /etc/httpd/conf.d/modsecurity.d/owasp-crs/rules/REQUEST-913-SCANNER-DETECTION.conf

- Attack type based on rules file (attack_type): SCANNER-DETECTION

- Rule ID (rule_id): 913100

- Attack message (alert_msg): Found User-Agent associated with security scanner

- Matched data (matched_data): Matched Data: zgrab found within REQUEST_HEADERS:User-Agent: mozilla/5.0 zgrab/0.x

- User agent (user_agent): mozilla/5.0 zgrab/0.x

- Hostname (dst_host): kifarunix-demo.com

- Request URI (request_uri): /

- Referer, if any (referer)

In order to extract these sections, below is our Logstash Grok filter.

filter {

# Extract event time, log severity level, source of attack (client), and the alert message.

grok {

match => { "message" => "(?<event_time>%{MONTH}\s%{MONTHDAY}\s%{TIME}\s%{YEAR})\] \[\:%{LOGLEVEL:log_level}.*client\s%{IPORHOST:src_ip}:\d+]\s(?<alert_message>.*)" }

}

# Extract Rules File from Alert Message

grok {

match => { "alert_message" => "(?<rulesfile>\[file \"(/.+.conf)\"\])" }

}

grok {

match => { "rulesfile" => "(?<rules_file>/.+.conf)" }

}

# Extract Attack Type from Rules File

grok {

match => { "rulesfile" => "(?<attack_type>[A-Z]+-[A-Z][^.]+)" }

}

# Extract Rule ID from Alert Message

grok {

match => { "alert_message" => "(?<ruleid>\[id \"(\d+)\"\])" }

}

grok {

match => { "ruleid" => "(?<rule_id>\d+)" }

}

# Extract Attack Message (msg) from Alert Message

grok {

match => { "alert_message" => "(?<msg>\[msg \S(.*?)\"\])" }

}

grok {

match => { "msg" => "(?<alert_msg>\"(.*?)\")" }

}

# Extract the User/Scanner Agent from Alert Message

grok {

match => { "alert_message" => "(?<scanner>User-Agent' \SValue: `(.*?)')" }

}

grok {

match => { "scanner" => "(?<user_agent>:(.*?)\')" }

}

grok {

match => { "alert_message" => "(?<matched_data>(Matched Data:+.+))\"\]\s\[severity" }

}

grok {

match => { "alert_message" => "(?<agent>User-Agent: (.*?)\')" }

}

grok {

match => { "agent" => "(?<user_agent>: (.*?)\')" }

}

# Extract the Target Host

grok {

match => { "alert_message" => "(hostname \"%{IPORHOST:dst_host})" }

}

# Extract the Request URI

grok {

match => { "alert_message" => "(uri \"%{URIPATH:request_uri})" }

}

grok {

match => { "alert_message" => "(?<ref>referer: (.*))" }

}

grok {

match => { "ref" => "(?<referer> (.*))" }

}

mutate {

# Remove unnecessary characters from the fields.

gsub => [

"alert_msg", "[\"]", "",

"user_agent", "[:\"'`]", "",

"user_agent", "^\s*", "",

"referer", "^\s*", ""

]

# Remove the Unnecessary fields so we can only remain with

# General message, rules_file, attack_type, rule_id, alert_msg, user_agent, hostname (being attacked), Request URI and Referer.

remove_field => [ "alert_message", "rulesfile", "ruleid", "msg", "scanner", "agent", "ref" ]

}

}

So how does this filter work?

In the first grok filter;

grok {

match => { "message" => "(?<event_time>%{MONTH}\s%{MONTHDAY}\s%{TIME}\s%{YEAR})\] \[\:%{LOGLEVEL:log_level}.*client\s%{IPORHOST:src_ip}:\d+]\s(?<alert_message>.*)" }

}

we extract event time, log severity level, source of attack (client), and the alert message.

{

"src_ip": "192.168.56.100",

"alert_message": "ModSecurity: Warning. Matched \"Operator `PmFromFile' with parameter `scanners-user-agents.data' against variable `REQUEST_HEADERS:User-Agent' (Value: `Mozilla/5.0 zgrab/0.x' ) [file \"/etc/httpd/conf.d/modsecurity.d/owasp-crs/rules/REQUEST-913-SCANNER-DETECTION.conf\"] [line \"33\"] [id \"913100\"] [rev \"\"] [msg \"Found User-Agent associated with security scanner\"] [data \"Matched Data: zgrab found within REQUEST_HEADERS:User-Agent: mozilla/5.0 zgrab/0.x\"] [severity \"2\"] [ver \"OWASP_CRS/3.2.0\"] [maturity \"0\"] [accuracy \"0\"] [tag \"application-multi\"] [tag \"language-multi\"] [tag \"platform-multi\"] [tag \"attack-reputation-scanner\"] [tag \"paranoia-level/1\"] [tag \"OWASP_CRS\"] [tag \"OWASP_CRS/AUTOMATION/SECURITY_SCANNER\"] [tag \"WASCTC/WASC-21\"] [tag \"OWASP_TOP_10/A7\"] [tag \"PCI/6.5.10\"] [hostname \"kifarunix-demo.com\"] [uri \"/\"] [unique_id \"15945817674.229523\"] [ref \"o12,5v48,21t:lowercase\"]",

"log_level": "error",

"event_time": "Jul 12 21:22:47.978339 2020"

}

From the alert_message, we then proceed to extract other fields.
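Before moving on, this first stage can be cross-checked outside Logstash with a few lines of Python. This is a rough sketch, not the grok engine itself: the regular expression uses simplified stand-ins for the MONTH, MONTHDAY, TIME, YEAR, LOGLEVEL and IPORHOST grok patterns (an assumption made for this example), Python's named-group syntax is (?P<name>...) rather than (?<name>...), and the helper name parse_error_line is hypothetical. The sample line is the one from this tutorial with the alert text shortened.

```python
import re

# Simplified stand-ins for the grok patterns used in the first filter
# (assumption: good enough for the sample line, not a full grok equivalent).
FIRST_STAGE = re.compile(
    r"(?P<event_time>[A-Z][a-z]{2}\s\d{1,2}\s\d{2}:\d{2}:\d{2}(?:\.\d+)?\s\d{4})\] "
    r"\[:(?P<log_level>[a-z]+).*client\s(?P<src_ip>[\w.-]+):\d+\]\s"
    r"(?P<alert_message>.*)"
)


def parse_error_line(line):
    """Return the first-stage fields as a dict, or None if the line does not match."""
    match = FIRST_STAGE.search(line)
    return match.groupdict() if match else None


# The sample line from this tutorial, with the alert text shortened.
sample = (
    "[Sun Jul 12 21:22:47.978339 2020] [:error] "
    "[pid 78188:tid 140587634259712] [client 192.168.56.100:54556] "
    'ModSecurity: Warning. [id "913100"] [hostname "kifarunix-demo.com"] [uri "/"]'
)

fields = parse_error_line(sample)
print(fields["event_time"])
print(fields["log_level"], fields["src_ip"])
```

Note that the day of the week (Sun) is not part of event_time, because the pattern starts matching at the month.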

For example, we begin with extracting the rule file using the pattern;

(?<rulesfile>\[file \"(/.+.conf)\"\])

{

"rulesfile": "[file \"/etc/httpd/conf.d/modsecurity.d/owasp-crs/rules/REQUEST-913-SCANNER-DETECTION.conf\"]"

}

This gets us [file \"/etc/httpd/conf.d/modsecurity.d/owasp-crs/rules/REQUEST-913-SCANNER-DETECTION.conf\"].

We need just the file path, /etc/httpd/conf.d/modsecurity.d/owasp-crs/rules/REQUEST-913-SCANNER-DETECTION.conf. Hence, we apply the pattern below to the rulesfile field and store the result in a new field, rules_file.

(?<rules_file>/.+.conf)

{

"rules_file": "/etc/httpd/conf.d/modsecurity.d/owasp-crs/rules/REQUEST-913-SCANNER-DETECTION.conf"

}

The same applies to other fields.
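The wrapper-then-value idea described above can be sketched in Python as follows. This is an illustration under stated assumptions rather than the Logstash implementation: the function name extract_rule_fields is hypothetical, the alert text is shortened, and the dot before conf is escaped here (\.conf), which is slightly stricter than the pattern used in the filter.

```python
import re


def extract_rule_fields(alert_message):
    """Two-step extraction, mirroring the chained grok filters."""
    fields = {}

    # Step 1: grab the whole [file "..."] wrapper (the temporary "rulesfile" field).
    wrapper = re.search(r'\[file "/.+\.conf"\]', alert_message)
    if wrapper:
        rulesfile = wrapper.group(0)
        # Step 2: narrow the wrapper down to the bare path and the attack type.
        fields["rules_file"] = re.search(r"/.+\.conf", rulesfile).group(0)
        attack = re.search(r"[A-Z]+-[A-Z][^.]+", rulesfile)
        if attack:
            fields["attack_type"] = attack.group(0)

    # The rule ID follows the same wrapper-then-value idea.
    ruleid = re.search(r'\[id "\d+"\]', alert_message)
    if ruleid:
        fields["rule_id"] = re.search(r"\d+", ruleid.group(0)).group(0)
    return fields


# Shortened alert text based on the sample log line in this tutorial.
alert = (
    'ModSecurity: Warning. [file "/etc/httpd/conf.d/modsecurity.d/owasp-crs/rules/'
    'REQUEST-913-SCANNER-DETECTION.conf"] [line "33"] [id "913100"] [rev ""]'
)
result = extract_rule_fields(alert)
print(result)
```

The attack type pattern skips REQUEST-913 because it requires an uppercase letter right after the hyphen, so the first match is SCANNER-DETECTION.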

We also used the gsub option of the mutate filter to remove unnecessary characters, such as double quotes and leading whitespace, from some fields. For example, we removed the double quotes from the alert_msg field and the leading whitespace from the user_agent field.

mutate {

# Remove unnecessary characters from the fields.

gsub => [

"alert_msg", "[\"]", "",

"user_agent", "[:\"'`]", "",

"user_agent", "^\s*", "",

"referer", "^\s*", ""

]

We also removed unnecessary fields using the remove_field mutate option.

# Remove the Unnecessary fields so we can only remain with

# General message, rules_file, attack_type, rule_id, alert_msg, user_agent, hostname (being attacked), Request URI and Referer.

remove_field => [ "alert_message", "rulesfile", "ruleid", "msg", "scanner", "agent", "ref" ]

}

Configure Logstash Output Plugin

In this setup, we will forward the data processed by Logstash to Elasticsearch, the search and analytics engine.

Thus, our output plugin is configured as follows:

output {

elasticsearch {

hosts => ["192.168.56.119:9200"]

manage_template => false

index => "modsec-%{+YYYY.MM}"

}

}

Therefore, in general, our Logstash ModSecurity processing configuration file looks like this:

cat /etc/logstash/conf.d/modsecurity-filter.conf

input {

beats {

port => 5044

}

}

filter {

# Extract event time, log severity level, source of attack (client), and the alert message.

grok {

match => { "message" => "(?<event_time>%{MONTH}\s%{MONTHDAY}\s%{TIME}\s%{YEAR})\] \[\:%{LOGLEVEL:log_level}.*client\s%{IPORHOST:src_ip}:\d+]\s(?<alert_message>.*)" }

}

# Extract Rules File from Alert Message

grok {

match => { "alert_message" => "(?<rulesfile>\[file \"(/.+.conf)\"\])" }

}

grok {

match => { "rulesfile" => "(?<rules_file>/.+.conf)" }

}

# Extract Attack Type from Rules File

grok {

match => { "rulesfile" => "(?<attack_type>[A-Z]+-[A-Z][^.]+)" }

}

# Extract Rule ID from Alert Message

grok {

match => { "alert_message" => "(?<ruleid>\[id \"(\d+)\"\])" }

}

grok {

match => { "ruleid" => "(?<rule_id>\d+)" }

}

# Extract Attack Message (msg) from Alert Message

grok {

match => { "alert_message" => "(?<msg>\[msg \S(.*?)\"\])" }

}

grok {

match => { "msg" => "(?<alert_msg>\"(.*?)\")" }

}

# Extract the User/Scanner Agent from Alert Message

grok {

match => { "alert_message" => "(?<scanner>User-Agent' \SValue: `(.*?)')" }

}

grok {

match => { "scanner" => "(?<user_agent>:(.*?)\')" }

}

grok {

match => { "alert_message" => "(?<agent>User-Agent: (.*?)\')" }

}

grok {

match => { "agent" => "(?<user_agent>: (.*?)\')" }

}

# Extract the Target Host

grok {

match => { "alert_message" => "(hostname \"%{IPORHOST:dst_host})" }

}

# Extract the Request URI

grok {

match => { "alert_message" => "(uri \"%{URIPATH:request_uri})" }

}

grok {

match => { "alert_message" => "(?<ref>referer: (.*))" }

}

grok {

match => { "ref" => "(?<referer> (.*))" }

}

mutate {

# Remove unnecessary characters from the fields.

gsub => [

"alert_msg", "[\"]", "",

"user_agent", "[:\"'`]", "",

"user_agent", "^\s*", "",

"referer", "^\s*", ""

]

# Remove the Unnecessary fields so we can only remain with

# General message, rules_file, attack_type, rule_id, alert_msg, user_agent, hostname (being attacked), Request URI and Referer.

remove_field => [ "alert_message", "rulesfile", "ruleid", "msg", "scanner", "agent", "ref" ]

}

}

output {

elasticsearch {

hosts => ["192.168.56.119:9200"]

manage_template => false

index => "modsec-%{+YYYY.MM}"

}

}

Please note that the extracted fields are not exhaustive. Feel free to further process your logs and extract the fields of your interest.
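As one example of going further, a candidate regular expression can be prototyped in Python before it is turned into a grok pattern. The sketch below pulls the severity and the tags out of an alert message. The field names severity and modsec_tags and the function name are hypothetical choices for this illustration, and the alert text is a shortened piece of the sample log line.

```python
import re


def extract_extra_fields(alert_message):
    """Pull the severity and the list of tags out of a ModSecurity alert message."""
    severity = re.search(r'\[severity "(\d+)"\]', alert_message)
    tags = re.findall(r'\[tag "([^"]+)"\]', alert_message)
    return {
        "severity": int(severity.group(1)) if severity else None,
        "modsec_tags": tags,
    }


# Shortened piece of the sample alert message from this tutorial.
alert = (
    '[severity "2"] [ver "OWASP_CRS/3.2.0"] [tag "attack-reputation-scanner"] '
    '[tag "paranoia-level/1"] [tag "OWASP_TOP_10/A7"] [hostname "kifarunix-demo.com"]'
)
extra = extract_extra_fields(alert)
print(extra)
```

A name other than tags is used on purpose, since Logstash events already carry a tags field of their own, as seen in the debugging output later in this guide.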

Forward ModSecurity Logs to Elastic Stack

Once you have installed and set up libModSecurity on your web server, forward the logs to Elastic Stack, in this case, to the Logstash data processing pipeline.

In this setup, we are using Filebeat to collect and push logs to Elastic Stack. Follow the links below to install and set up Filebeat.

Install and Configure Filebeat on CentOS 8

Install Filebeat on Fedora 30/Fedora 29/CentOS 7

Install and Configure Filebeat 7 on Ubuntu 18.04/Debian 9.8

Be sure to configure the right path where ModSecurity logs are being written to.

Debugging Grok Filter

If you need to debug your filter, replace the output section of the Logstash configuration file as shown below to send the processed events to standard output:

output {

#elasticsearch {

# hosts => ["192.168.56.119:9200"]

# manage_template => false

# index => "modsec-%{+YYYY.MM}"

#}

stdout { codec => rubydebug }

}

Stop Logstash:

systemctl stop logstash

Then run Logstash against your filter configuration file:

/usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/modsecurity-filter.conf --path.settings /etc/logstash/

While this runs, ensure that the ModSecurity logs are streaming in so that you can watch them being processed.

Here is a sample debugging output;

{

"ecs" => {

"version" => "1.5.0"

},

"src_ip" => "253.63.240.101",

"dst_host" => "kifarunix-demo.com",

"@timestamp" => 2020-07-31T08:47:36.940Z,

"tags" => [

[0] "beats_input_codec_plain_applied",

[1] "_grokparsefailure"

],

"input" => {

"type" => "log"

},

...

...

},

"rules_file" => "/etc/httpd/conf.d/modsecurity.d/owasp-crs/rules/REQUEST-913-SCANNER-DETECTION.conf",

"log" => {

"offset" => 10787,

"file" => {

"path" => "/var/log/httpd/modsec_audit.log"

}

},

"rule_id" => "913100",

"alert_msg" => "Found User-Agent associated with security scanner",

"user_agent" => "sqlmap/1.2.4#stable (http//sqlmap.org)",

"message" => "[Tue Jul 14 16:39:28.086871 2020] [:error] [pid 83149:tid 139784827672320] [client 253.63.240.101:50102] ModSecurity: Warning. Matched \"Operator `PmFromFile' with parameter `scanners-user-agents.data' against variable `REQUEST_HEADERS:User-Agent' (Value: `sqlmap/1.2.4#stable (http://sqlmap.org)' ) [file \"/etc/httpd/conf.d/modsecurity.d/owasp-crs/rules/REQUEST-913-SCANNER-DETECTION.conf\"] [line \"33\"] [id \"913100\"] [rev \"\"] [msg \"Found User-Agent associated with security scanner\"] [data \"Matched Data: sqlmap found within REQUEST_HEADERS:User-Agent: sqlmap/1.2.4#stable (http://sqlmap.org)\"] [severity \"2\"] [ver \"OWASP_CRS/3.2.0\"] [maturity \"0\"] [accuracy \"0\"] [tag \"application-multi\"] [tag \"language-multi\"] [tag \"platform-multi\"] [tag \"attack-reputation-scanner\"] [tag \"paranoia-level/1\"] [tag \"OWASP_CRS\"] [tag \"OWASP_CRS/AUTOMATION/SECURITY_SCANNER\"] [tag \"WASCTC/WASC-21\"] [tag \"OWASP_TOP_10/A7\"] [tag \"PCI/6.5.10\"] [hostname \"kifarunix-demo.com\"] [uri \"/\"] [unique_id \"159473756862.024448\"] [ref \"o0,6v179,39t:lowercase\"], referer: http://kifarunix-demo.com:80/?p=1006",

"attack_type" => "SCANNER-DETECTION",

"request_uri" => "/",

"event_time" => "Jul 14 16:39:28.086871 2020",

"referer" => "http://kifarunix-demo.com:80/?p=1006",

"log_level" => "error",

"@version" => "1"

}

Once done debugging, you can then re-enable the Elasticsearch output.

Check that your Elasticsearch index has been created:

curl -XGET 192.168.56.119:9200/_cat/indices/modsec-*?

yellow open modsec-2020.07 bKLGOrJ8SFCAnewz89XvUA 1 1 46 0 104.4kb 104.4kb

Visualize ModSecurity Logs on Kibana

Create Kibana Index

On the Kibana dashboard, navigate to Management > Kibana > Index Patterns > Create index pattern. An index pattern can match the name of a single index, or include a wildcard (*) to match multiple indices.
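To see why a single wildcard pattern is enough here, recall that the Logstash output names its indices modsec-%{+YYYY.MM}, so a new index appears each month. The Python sketch below mimics that naming and checks the names against modsec-*. It assumes the date part is taken from the event timestamp in UTC, uses made-up event times, and the helper name monthly_index is hypothetical. Python's fnmatchcase is used as a stand-in for the wildcard match; only the * wildcard matters for this comparison.

```python
from datetime import datetime, timezone
from fnmatch import fnmatchcase


def monthly_index(timestamp, prefix="modsec-"):
    """Mimic the index setting "modsec-%{+YYYY.MM}" for a given event timestamp."""
    return prefix + timestamp.astimezone(timezone.utc).strftime("%Y.%m")


# Made-up event times on either side of a month boundary.
events = [
    datetime(2020, 7, 14, 16, 39, 28, tzinfo=timezone.utc),
    datetime(2020, 8, 1, 0, 5, 0, tzinfo=timezone.utc),
]
indices = sorted({monthly_index(ts) for ts in events})
matching = [name for name in indices if fnmatchcase(name, "modsec-*")]
print(indices)
print(matching)
```

Both monthly indices match modsec-*, so one index pattern keeps covering new months without further changes in Kibana.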

On the next page, select @timestamp as the time filter and click Create index pattern.

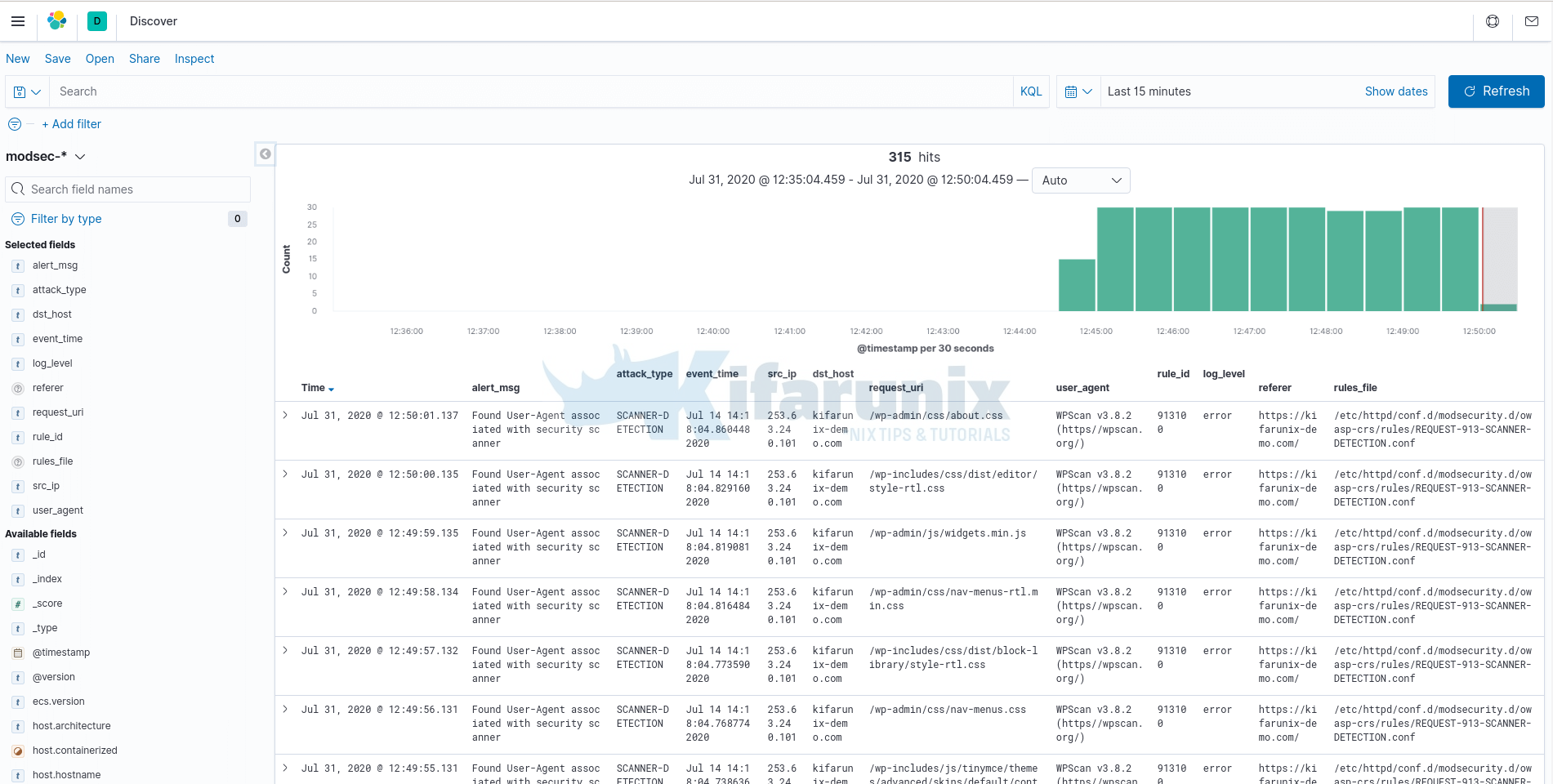

After that, click the Discover tab on the left pane to view the data. Select your index pattern, in this case, modsec-*.

The view below shows our fields selected as per our Logstash filters.

And there you go. You have your ModSecurity log fields populated on Kibana.

To complete this tutorial on how to process ModSecurity Logs and visualize on ELK Stack, you can now proceed to create ModSecurity Kibana visualization dashboards.

Create Kibana Visualization Dashboards for ModSecurity Logs

Follow the link below to create ModSecurity Kibana visualization dashboards.

Further Reading

ModSecurity Logging and Debugging

Related Tutorials

How to Debug Logstash Grok Filters

Install Logstash 7 on Fedora 30/Fedora 29/CentOS 7

Send Windows logs to Elastic Stack using Winlogbeat and Sysmon

Hi. I’m having problems creating index patterns and getting logs. It creates patterns that are different from what I have declared in the configuration. I also see that Filebeat is getting data from the wrong location. Can you help me out?

Thank you

Hi Nguyen,

Configure Filebeat to collect the modsecurity logs and set the output to Logstash.

On Logstash configuration, configure Elasticsearch output and specify your index pattern on the Logstash output config section.

Can you instruct me to configure filebeat properly to push modsecurity logs to logstash?

Hello. Thank you for this article. I suggest that you include your Apache ErrorLogFormat so that it matches the rules.

Also, the grok filters are a little tricky and not state of the art. It would be better to have them rewritten (by a more experienced developer).

Thank you. Fab.

Hi, thanks for the feedback. Would you be kind enough to show how to do that? Thanks.