Welcome to our guide on installing ELK Stack on CentOS 8.

ELK is the acronym for three open source projects: Elasticsearch, Logstash, and Kibana. Elasticsearch is a search and analytics engine. Logstash is a server‑side data processing pipeline that ingests data, transforms it, and then sends it to a “stash” like Elasticsearch. Kibana lets users visualize Elasticsearch data with charts and graphs. Beats, the data shippers, are also part of the stack.

Install ELK Stack on CentOS 8

The order in which you install the Elastic Stack components matters. The usual order is Elasticsearch > Kibana > Logstash > Beats. Also note that all components should be the same version.
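Once the components are installed, one way to confirm they share a version is to compare what RPM reports for each package. The sketch below is a hypothetical helper (the name same_versions is ours, not part of any Elastic tool): it reads one version per line on stdin and succeeds only if all non-empty lines are identical.

```shell
# Hypothetical helper: exit 0 only if every non-empty line on stdin is the
# same version string. Intended input (shown as a comment, not run here):
#   rpm -q --qf '%{VERSION}\n' elasticsearch kibana logstash | same_versions
# A package that is not installed prints a different line, so it also fails.
same_versions() {
  # Count distinct non-empty lines; exactly one means all versions match.
  n=$(sed '/^$/d' | sort -u | wc -l)
  [ "$n" -eq 1 ]
}
```

Counting distinct lines keeps the check independent of how many components you install, so Beats packages can be added to the rpm query as well.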

To install the Elastic Stack components on a CentOS 8 system, you can either create the Elastic RPM repository or install each component from its own RPM package.

Creating Elastic Stack RPM Repo on CentOS 8

Create the Elastic Stack 7.x repository by running the command below.

cat > /etc/yum.repos.d/elasticstack.repo << EOL
[elasticstack]
name=Elastic repository for 7.x packages
baseurl=https://artifacts.elastic.co/packages/7.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
EOL

Run a system package update.

dnf update

Install Elasticsearch on CentOS 8

You can install Elasticsearch on CentOS 8 either from the Elastic RPM repository created above or directly from the RPM package.

dnf install elasticsearch

Alternatively, install Elasticsearch from the RPM package. Version 7.5.2 is the latest stable release as of this writing.

VERSION=7.5.2
dnf install https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-$VERSION-x86_64.rpm

Configuring Elasticsearch

Out of the box, Elasticsearch works well with the default configuration options. In this demo, however, we make a few changes based on Elastic's Important Elasticsearch Configuration guide.

Set the Elasticsearch bind address to a specific IP if you need to allow remote access from Kibana, Logstash, or Beats. Replace the IP, 192.168.56.154, with your server's IP address.

sed -i 's/#network.host: 192.168.0.1/network.host: 192.168.56.154/' /etc/elasticsearch/elasticsearch.yml

Alternatively, leave the default settings to allow only local access to Elasticsearch.
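The sed command above only matches the exact default line `#network.host: 192.168.0.1`; if the line has already been edited, it silently does nothing. A slightly more defensive sketch, assuming GNU sed (the default on CentOS 8) and a hypothetical helper name, set_network_host, replaces the line whether or not it is commented out and appends it if it is missing.

```shell
# Hypothetical helper (not part of Elasticsearch): set network.host in an
# elasticsearch.yml-style file. Assumes GNU sed for "sed -i".
set_network_host() {
  file=${1:-}
  ip=${2:-}
  # Replace "#network.host: <anything>" or "network.host: <anything>".
  sed -i -E "s/^#?[[:space:]]*network\.host:.*/network.host: ${ip}/" "$file"
  # If no such line existed at all, append one.
  grep -q '^network\.host:' "$file" || echo "network.host: ${ip}" >> "$file"
}
# Example (not run here):
#   set_network_host /etc/elasticsearch/elasticsearch.yml 192.168.56.154
```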

When Elasticsearch listens on a non-loopback interface, it expects to join a cluster. Since we are setting up a single-node Elastic Stack, declare this in the Elasticsearch configuration by adding the line discovery.type: single-node under the Discovery section. You can skip this step if Elasticsearch listens only on a loopback interface.
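If you prefer a non-interactive edit to opening the file in vim, the sketch below appends the single-node setting only when no uncommented discovery.type line exists yet, so running it twice does not duplicate the entry. The helper name ensure_single_node is hypothetical; an existing discovery.type with a different value is left untouched.

```shell
# Hypothetical helper: append "discovery.type: single-node" to an
# elasticsearch.yml-style file unless an uncommented discovery.type is set.
ensure_single_node() {
  file=${1:-}
  if ! grep -q '^discovery\.type:' "$file"; then
    # Leading newline guards against a file without a trailing newline.
    printf '\n# Single Node Discovery\ndiscovery.type: single-node\n' >> "$file"
  fi
}
# Example (not run here):
#   ensure_single_node /etc/elasticsearch/elasticsearch.yml
```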

vim /etc/elasticsearch/elasticsearch.yml

# --------------------------------- Discovery ----------------------------------
#
# Pass an initial list of hosts to perform discovery when this node is started:
# The default list of hosts is ["127.0.0.1", "[::1]"]
#
#discovery.seed_hosts: ["host1", "host2"]
#
# Bootstrap the cluster using an initial set of master-eligible nodes:
#
#cluster.initial_master_nodes: ["node-1", "node-2"]

# Single Node Discovery
discovery.type: single-node

Next, set the JVM heap size to no more than half of the system memory. Our test server has 2 GB of RAM, and we set both the minimum and maximum heap size to 512 MB.
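To find that upper bound on your own server, the sketch below reads MemTotal (reported in kB) from /proc/meminfo and prints half of it in MB. The helper name half_ram_heap_mb is hypothetical, and the result is a ceiling rather than a recommendation: the demo above deliberately uses less (512 MB on a 2 GB server).

```shell
# Hypothetical helper: print half of total RAM in MB, a ceiling for -Xms/-Xmx.
# Reads MemTotal (kB) from /proc/meminfo unless a kB value is passed as $1.
half_ram_heap_mb() {
  if [ -n "${1:-}" ]; then
    kb=$1
  else
    kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  fi
  # kB -> MB, then halve; shell integer arithmetic rounds down.
  echo $(( kb / 1024 / 2 ))
}
# Example (not run here): h=$(half_ram_heap_mb); echo "-Xms${h}m"; echo "-Xmx${h}m"
```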

vim /etc/elasticsearch/jvm.options

...
################################################################
# Xms represents the initial size of total heap space
# Xmx represents the maximum size of total heap space
-Xms512m
-Xmx512m
...

Start and enable ES to run on system boot.

systemctl daemon-reload
systemctl enable --now elasticsearch

Verify that Elasticsearch is running as expected.

curl -XGET 192.168.56.154:9200

{
  "name" : "elastic.kifarunix-demo.com",
  "cluster_name" : "elasticsearch",
  "cluster_uuid" : "iyslQrEdTISVdVGsDNDvlA",
  "version" : {
    "number" : "7.5.2",
    "build_flavor" : "default",
    "build_type" : "rpm",
    "build_hash" : "8bec50e1e0ad29dad5653712cf3bb580cd1afcdf",
    "build_date" : "2020-01-15T12:11:52.313576Z",
    "build_snapshot" : false,
    "lucene_version" : "8.3.0",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}
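Because all Elastic Stack components should run the same version, it helps to pull version.number out of this response when scripting the check. The sketch below, with the hypothetical helper name es_version, uses a plain sed match rather than a JSON parser; that works here only because "number" appears once in the pretty-printed response shown above.

```shell
# Hypothetical helper: read the JSON body of "GET /" on Elasticsearch from
# stdin and print version.number. Assumes the key "number" appears once.
es_version() {
  sed -n 's/^[[:space:]]*"number"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}
# Example (not run here): curl -s 192.168.56.154:9200 | es_version
```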

Install Kibana on CentOS 8

The next Elastic Stack component to install is Kibana. Since we already created the Elastic Stack repository, simply run the command below to install it.

yum install kibana

Configuring Kibana

First, configure Kibana to allow remote access. By default, it only accepts local connections on port 5601/tcp. Open the Kibana configuration file for editing, then uncomment and change the following lines:

vim /etc/kibana/kibana.yml

...
#server.port: 5601
...
# To allow connections from remote users, set this parameter to a non-loopback address.
#server.host: "localhost"
...
# The URLs of the Elasticsearch instances to use for all your queries.
#elasticsearch.hosts: ["http://localhost:9200"]

Change them so that they look as shown below:

Replace the Kibana and Elasticsearch IP addresses accordingly. Note that in this demo, all Elastic Stack components run on the same host.

...
server.port: 5601
...
# To allow connections from remote users, set this parameter to a non-loopback address.
server.host: "192.168.56.154"
...
# The URLs of the Elasticsearch instances to use for all your queries.
elasticsearch.hosts: ["http://192.168.56.154:9200"]

Start and enable Kibana to run on system boot.

systemctl enable --now kibana

Open the Kibana port on FirewallD, if it is running:

firewall-cmd --add-port=5601/tcp --permanent
firewall-cmd --reload

Accessing Kibana Interface

You can now access Kibana from your browser by using the URL, http://kibana-server-hostname-OR-IP:5601.

On the Kibana web interface, you can choose to try the sample data, since we are not yet sending any data to Elasticsearch. Once you start sending data to Elasticsearch, you can also explore your own data.

Install Logstash on CentOS 8

Logstash is the Elastic Stack component that further processes event data before sending it to the Elasticsearch data store. For example, you can write custom regular expressions or grok patterns to extract specific fields from the event data.

It is also possible to send data directly to Elasticsearch instead of passing it through Logstash.

To install Logstash on CentOS 8, run:

yum install logstash

Recent Logstash packages ship with a bundled JDK. If your Logstash version requires a separately installed Java, install Java 8 with the command below, provided that version supports it.

yum install -y java-1.8.0-openjdk java-1.8.0-openjdk-devel

Testing Logstash

Once Logstash is installed, verify that it is ready to process event data by running a basic pipeline, as shown below:

cd /usr/share/logstash/
bin/logstash -e 'input { stdin { } } output { stdout {} }'

Press ENTER to execute the command and wait for the pipeline to be ready to receive input data.

...
[INFO ] 2020-02-09 08:37:38.732 [[main]-pipeline-manager] javapipeline - Pipeline started {"pipeline.id"=>"main"}
[INFO ] 2020-02-09 08:37:38.818 [Agent thread] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}
The stdin plugin is now waiting for input:
[INFO ] 2020-02-09 08:37:39.395 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

Type any string, for example, testing Logstash pipeline, and press ENTER.

Logstash processes the input data and adds timestamp and host information to the message.

{
  "host" => "elastic.kifarunix-demo.com",
  "message" => "testing Logstash pipeline",
  "@timestamp" => 2020-02-09T05:42:17.666Z,
  "@version" => "1"
}

You can stop the Logstash pipeline by pressing Ctrl+D.

Configuring Logstash to Collect and Send Events to Elasticsearch

Logstash is now ready to receive and process data. In this demo, we configure a Logstash pipeline to collect events from the local system.

A Logstash pipeline is made up of three sections:

- the input: collects data from different sources.

- the filter: (optional) performs further processing on the data.

- the output: stashes the received data in a destination datastore such as Elasticsearch.

Configure Logstash Input plugin

To collect events from the local system, we use the file input plugin. Many other input plugins are available; see Logstash Input Plugins.

In this demo, we use a single configuration file to define all the pipeline components: input, filter, and output.

vim /etc/logstash/conf.d/local-ssh-events.conf

## Collect System Authentication events from /var/log/secure
input {
  file {
    path => "/var/log/secure"
    type => "ssh_auth"
  }
}

Configure Logstash Filter plugin

Configure the Logstash filter to extract only relevant events, such as the lines below, from the /var/log/secure file.

Mar 28 14:45:12 ssp sshd[10125]: Accepted password for johndoe from 192.168.2.106 port 37292 ssh2

Mar 28 14:45:37 ssp sshd[10199]: Failed password for johndoe from 192.168.2.106 port 37304 ssh2

Mar 28 14:45:59 ssp sshd[10202]: Failed password for invalid user koromicha from 192.168.2.106 port 37310 ssh2

Mar 28 14:46:33 ssp sshd[10230]: Accepted publickey for janedoe from 192.168.2.106 port 37330 ssh2: RSA SHA256:3soC1YLO/OVyp0+aHILa/iaBEBdQBT4L7QrwNheIVCM

We use grok filters to extract these lines from the log file:

vim /etc/logstash/conf.d/local-ssh-events.conf

## Collect System Authentication events from /var/log/secure
input {
  file {
    path => "/var/log/secure"
    type => "ssh_auth"
  }
}

## Design a grok filter to extract useful SSH authentication events.
## "status" is an assumed name for the text captured before "for"; rename as needed.
filter {
  if [type] == "ssh_auth" {
    grok {
      match => { "message" => "%{SYSLOGTIMESTAMP:timestamp}\s+%{IPORHOST:dst_host}\s+%{WORD:syslog_program}\[\d+\]:\s+(?<status>.+)\s+for\s+%{USER:auth_user}\s+from\s+%{SYSLOGHOST:src_host}.*" }
      add_field => { "activity" => "SSH Logins" }
      add_tag => "linux_auth"
    }
    grok {
      match => { "message" => "%{SYSLOGTIMESTAMP:timestamp}\s+%{IPORHOST:dst_host}\s+%{WORD:syslog_program}\[\d+\]:\s+(?<status>.+)\s+for\s+invalid\s+user\s%{USER:auth_user_nonexist}\s+from\s+%{SYSLOGHOST:src_host}.*" }
      add_field => { "activity" => "SSH Logins" }
      add_tag => "linux_auth"
    }
  }

  # Drop any message that doesn't contain the keywords below
  if [message] !~ /(Failed password|Accepted password|Accepted publickey|for invalid)/ { drop { } }
}

Note that the grok filter above only captures password-based and public-key SSH logins. You can test your pattern with the Grok Debugger in Kibana (Dev Tools > Grok Debugger).
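Before reaching for the Grok Debugger, a quick shell preview can show which user and source address each line would yield. The sketch below approximates the two grok patterns above with a single sed -E expression; it is not grok, does not produce grok's fields, and the helper name preview_ssh_fields is hypothetical.

```shell
# Rough approximation of the two grok patterns using sed -E (POSIX ERE).
# NOT grok: for sshd lines of the form
#   "... sshd[PID]: <status> for [invalid user ]<user> from <source> ..."
# it prints "<user> <source>"; other lines print nothing.
preview_ssh_fields() {
  sed -nE 's/.*sshd\[[0-9]+\]: .+ for (invalid user )?([^ ]+) from ([^ ]+).*/\2 \3/p'
}
# Example (not run here, needs root): preview_ssh_fields < /var/log/secure
```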

Also, note the filter:

if [message] !~ /(Failed password|Accepted password|Accepted publickey|for invalid)/ { drop { } }

For the purpose of this demo, and to capture only the logs we need, that line drops any event that does not contain one of the keywords above.
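To see in advance which existing log lines would pass the drop condition above, the same keyword alternation can be run through grep -E. This is a sketch with a hypothetical helper name, ssh_auth_lines; it mirrors only the keyword test, not the grok parsing.

```shell
# Hypothetical helper: print the lines of a log file that contain one of the
# keywords used by the Logstash drop condition (i.e. lines that are kept).
ssh_auth_lines() {
  grep -E 'Failed password|Accepted password|Accepted publickey|for invalid' "${1:-}"
}
# Example (not run here, needs root): ssh_auth_lines /var/log/secure | tail -n 20
```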

Configure Logstash Output Plugin

Next, we send the processed data to Elasticsearch running on the same host.

Define the Elasticsearch output:

vim /etc/logstash/conf.d/local-ssh-events.conf

## Collect System Authentication events from /var/log/secure
input {
  file {
    path => "/var/log/secure"
    type => "ssh_auth"
  }
}

## "status" is an assumed name for the text captured before "for"; rename as needed.
filter {
  if [type] == "ssh_auth" {
    grok {
      match => { "message" => "%{SYSLOGTIMESTAMP:timestamp}\s+%{IPORHOST:dst_host}\s+%{WORD:syslog_program}\[\d+\]:\s+(?<status>.+)\s+for\s+%{USER:auth_user}\s+from\s+%{SYSLOGHOST:src_host}.*" }
      add_field => { "activity" => "SSH Logins" }
      add_tag => "linux_auth"
    }
    grok {
      match => { "message" => "%{SYSLOGTIMESTAMP:timestamp}\s+%{IPORHOST:dst_host}\s+%{WORD:syslog_program}\[\d+\]:\s+(?<status>.+)\s+for\s+invalid\s+user\s%{USER:auth_user_nonexist}\s+from\s+%{SYSLOGHOST:src_host}.*" }
      add_field => { "activity" => "SSH Logins" }
      add_tag => "linux_auth"
    }
  }

  # Drop any message that doesn't contain the keywords below
  if [message] !~ /(Failed password|Accepted password|Accepted publickey|for invalid)/ { drop { } }
}

## Send data to Elasticsearch on the localhost
output {
  elasticsearch {
    hosts => ["192.168.56.154:9200"]
    manage_template => false
    index => "ssh_auth-%{+YYYY.MM}"
  }
}

Ensure that Logstash can read the monitored file, /var/log/secure. By default, this file is owned by root. To let Logstash read it, change the file's group ownership to adm, add the logstash user to the adm group, and grant the group read access.

chown :adm /var/log/secure
usermod -aG adm logstash
chmod g+r /var/log/secure

Verify Logstash Grok Filters

Before sending the data to Elasticsearch, verify your grok filters. Follow our guide below to learn how to debug grok patterns.

How to Debug Logstash Grok Filters

Verify Logstash Configuration

After configuring Logstash, run the command below to verify the configuration before starting it.

sudo -u logstash /usr/share/logstash/bin/logstash --path.settings /etc/logstash -t

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties
[2020-02-09T00:43:10,110][INFO ][org.reflections.Reflections] Reflections took 92 ms to scan 1 urls, producing 20 keys and 40 values
Configuration OK
...

Configuration OK confirms that there is no error in the configuration file.

If you need to debug a specific Logstash pipeline configuration file, run the command below, replacing the path with that of your own configuration file. Ensure that Logstash is not running when you execute this command.

sudo -u logstash /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/local-ssh-events.conf --path.settings /etc/logstash/

Running Logstash

You can start and enable Logstash to run on system boot.

systemctl enable --now logstash

If Logstash did not generate the systemd service file, you will see an error such as: Failed to restart logstash.service: Unit logstash.service not found.

In that case, generate the service file by running:

/usr/share/logstash/bin/system-install /etc/logstash/startup.options systemd

You can then start and enable it to run on boot as shown above.

To check the status:

systemctl status logstash

● logstash.service - logstash
   Loaded: loaded (/etc/systemd/system/logstash.service; enabled; vendor preset: disabled)
   Active: active (running) since Sun 2020-02-09 14:52:12 EAT; 1min 4s ago
 Main PID: 6699 (java)
    Tasks: 32 (limit: 11500)
   Memory: 474.9M
   CGroup: /system.slice/logstash.service
           └─6699 /bin/java -Xms1g -Xmx1g -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Djava.awt.>
...

Visualizing Data on Kibana

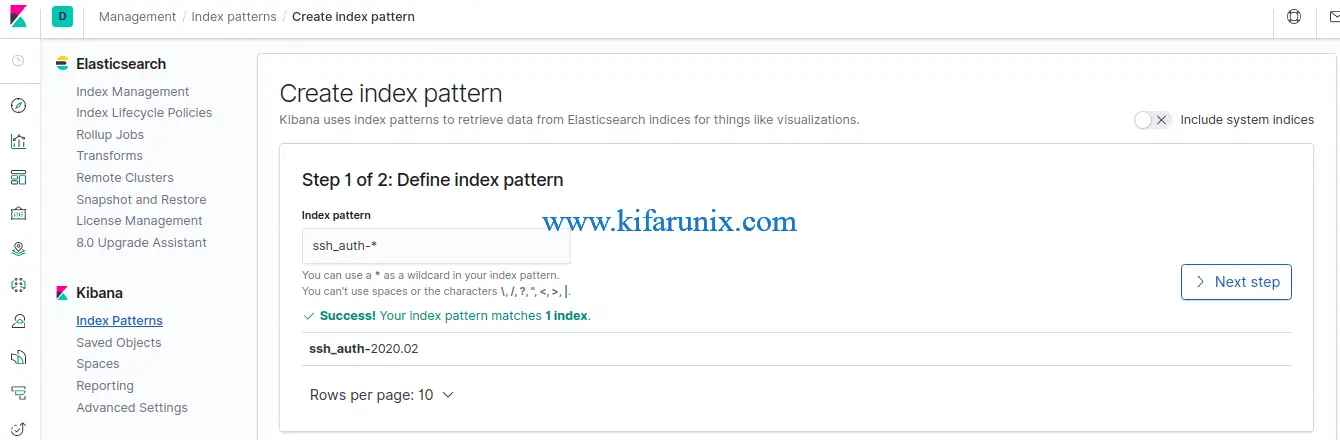

To visualize and explore data in Kibana, you need to create an index pattern to retrieve data from Elasticsearch. In this demo, our index pattern is ssh_auth-*, matching the index defined in the Logstash Elasticsearch output plugin, index => "ssh_auth-%{+YYYY.MM}".
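Before creating the index pattern, it can help to confirm that Logstash has actually created an index. Logstash formats %{+YYYY.MM} from the event's @timestamp in UTC, so the sketch below prints the expected monthly index name with date -u. It assumes GNU date (for -d) and uses a hypothetical helper name, ssh_auth_index.

```shell
# Hypothetical helper: print the monthly index name produced by
# index => "ssh_auth-%{+YYYY.MM}" for a given date (default: now), in UTC.
# Requires GNU date for the -d option.
ssh_auth_index() {
  if [ -n "${1:-}" ]; then
    date -u -d "$1" +'ssh_auth-%Y.%m'
  else
    date -u +'ssh_auth-%Y.%m'
  fi
}
# Example (not run here): list the current month's index, if it exists:
#   curl -s "192.168.56.154:9200/_cat/indices/$(ssh_auth_index)?v"
```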

On the Kibana dashboard, navigate to Management > Kibana > Index Patterns > Create index pattern. An index pattern can match the name of a single index, or include a wildcard (*) to match multiple indices.

Click Next, select @timestamp as the time filter, and click Create index pattern.

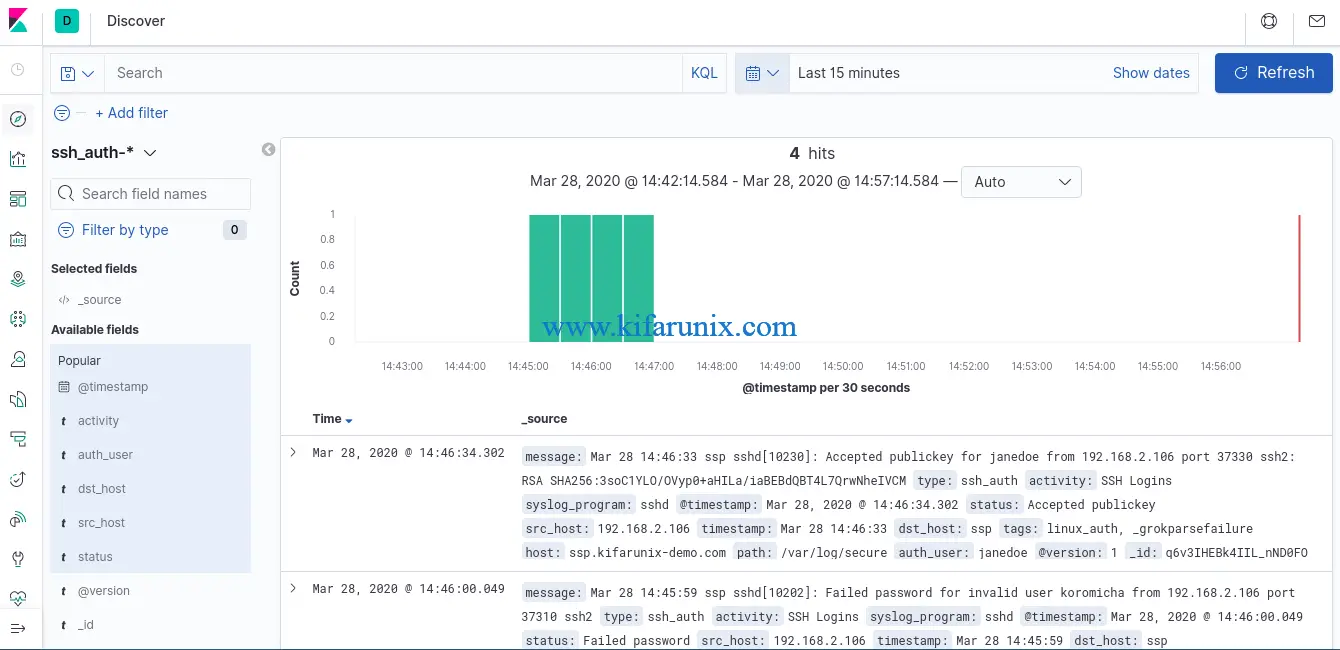

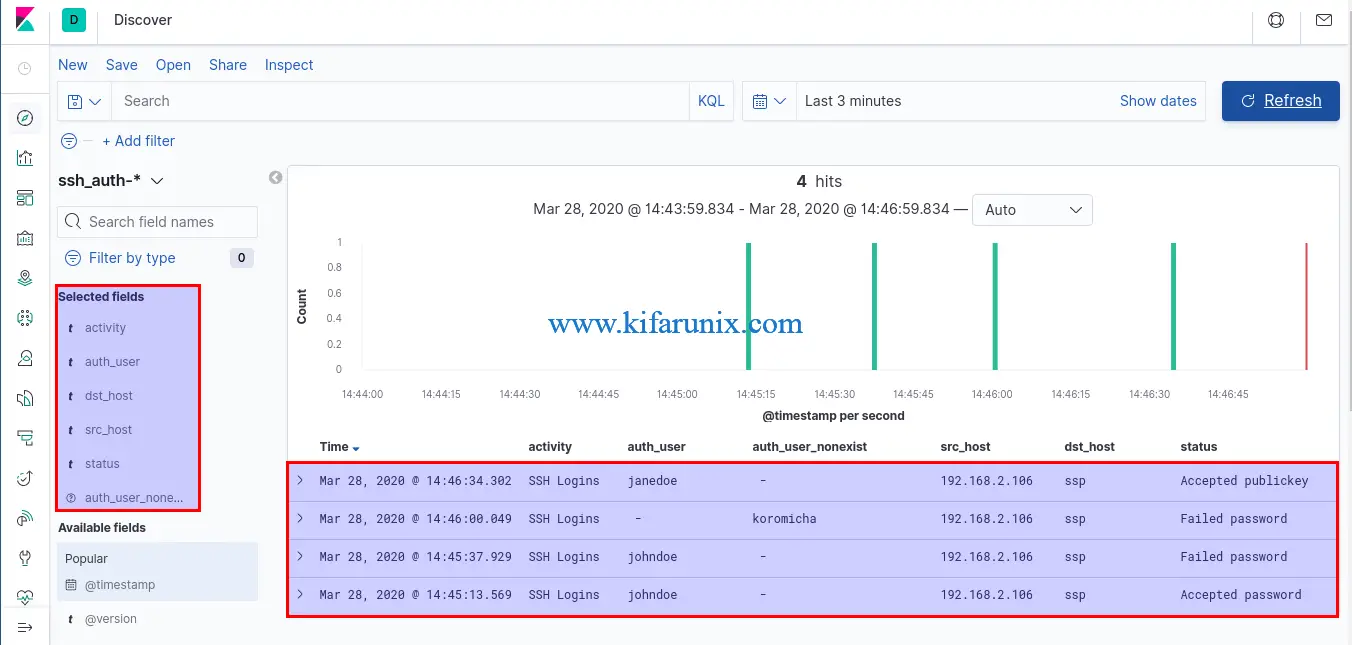

After that, click the Discover tab in the left pane to view the data. Expand the time range as needed.

You can also select the fields you want to view, based on your grok pattern. In the screenshot below, the time range is the last 3 minutes.

Be sure to customize your Grok patterns to your liking if using Logstash as your data processing engine.
