Welcome to our tutorial on how to configure ELK Stack alerting with ElastAlert. Besides enabling you to collect, process/parse, index and visualize various system data, ELK Stack can also be configured to alert on various events. The alerting features enable you to watch for changes or anomalies in your data and perform the necessary actions in response if certain event conditions are met. ELK Stack supports alerting natively, but the feature is available as a paid subscription and requires a license. A 30-day trial version is also available. In this tutorial, we will be using ElastAlert, the open-source alternative to the Elasticsearch X-Pack alerting feature.

Sending ELK Stack Alerts with ElastAlert

ElastAlert is designed to be reliable, highly modular, and easy to set up and configure. It works by combining Elasticsearch with two types of components, rule types and alerts. Elasticsearch is periodically queried and the data is passed to the rule type, which determines when a match is found. When a match occurs, it is given to one or more alerts, which take action based on the match. This is configured by a set of rules, each of which defines a query, a rule type, and a set of alerts.

Several rule types with common monitoring paradigms are included with ElastAlert:

- “Match where there are X events in Y time” (frequency type)

- “Match when the rate of events increases or decreases” (spike type)

- “Match when there are less than X events in Y time” (flatline type)

- “Match when a certain field matches a blacklist/whitelist” (blacklist and whitelist type)

- “Match on any event matching a given filter” (any type)

- “Match when a field has two different values within some time” (change type)
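To make the "X events in Y time" idea behind the frequency type more concrete, here is a minimal Python sketch of a sliding-window check. It is only an illustration of the concept, not ElastAlert's own implementation; the function name `frequency_matches` and the sample timestamps are made up for this example.

```python
from collections import deque
from datetime import datetime, timedelta


def frequency_matches(timestamps, num_events, timeframe):
    """Return the timestamps at which at least num_events events
    fall inside a sliding window of length timeframe."""
    window = deque()
    matches = []
    for ts in sorted(timestamps):
        window.append(ts)
        # Drop events that are older than the window.
        while ts - window[0] > timeframe:
            window.popleft()
        if len(window) >= num_events:
            matches.append(ts)
            window.clear()  # start counting afresh after a match
    return matches


# Hypothetical sample data: three events within 20 seconds, then two stragglers.
start = datetime(2020, 12, 1, 22, 4, 0)
events = [start + timedelta(seconds=s) for s in (0, 10, 20, 200, 400)]
matches = frequency_matches(events, num_events=3, timeframe=timedelta(minutes=1))
print(matches)
```

With the sample data above, only the third event completes a window of three events within one minute, so a single match is reported. A flatline check would be the mirror image: it would match when the window holds fewer than the threshold.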

Currently, ElastAlert has built-in support for these alert types:

- Command

- JIRA

- OpsGenie

- SNS

- HipChat

- Slack

- Telegram

- GoogleChat

- Debug

- Stomp

- theHive

Installing ElastAlert in Linux

ElastAlert has a number of installation requirements, as outlined on its requirements page. These include:

- Elasticsearch

- ISO8601 or Unix timestamped data

- Python 3.6

- pip, see requirements.txt

- Packages on Ubuntu 14.x: python-pip python-dev libffi-dev libssl-dev

Install and Setup Elastic/ELK Stack

Follow the links below to install and setup ELK/Elastic Stack.

Install ELK Stack on Ubuntu 20.04

Installing ELK Stack on CentOS 8

Deploy a Single Node Elastic Stack Cluster on Docker Containers

Install Elastic Stack 7 on Fedora 30/Fedora 29/CentOS 7

Install Elastic Stack 7 on Ubuntu 18.04/Debian 9.8

Of course the log data collected are unix timestamped.

Installing Python 3 on Linux

In this demo, we are installing ElastAlert on our Elastic Stack server running on a CentOS 8 system. Note that you can also install ElastAlert on the client from which you are shipping logs.

As per the requirements above, Python 3.6 is needed for ElastAlert. On CentOS 8, you can install Python 3.6 by executing the command below (if not already installed);

dnf install python36 python3-devel

For other distros, refer to the respective documentation on installing Python 3.6.

Install PIP on Linux

Similarly, on our CentOS 8 system running Python 3, you can install PIP by executing the command below;

dnf install python3-pip

Refer to your OS distro documentation for specifics on installing PIP.

You also need to install the GNU Compiler Collection (gcc);

dnf install gcc

You could instead install the Development Tools group, which provides comprehensive build tools, but installing GCC suffices.

Installing ElastAlert

Once the requirements for installing ElastAlert are in place, you can now install ElastAlert.

You have two options here;

- You can install the latest released version of ElastAlert using pip:

pip3 install elastalert

- or you can clone the ElastAlert repository for the most recent changes:

git clone https://github.com/Yelp/elastalert.git /opt/elastalert
cd /opt/elastalert
pip3 install "setuptools>=11.3" -U
python3 setup.py install

Install Elasticsearch ElastAlert Module

Next, install the ElastAlert Elasticsearch module (for ES version 5 and above).

pip3 install "elasticsearch>=5.0.0"
cd ~

Configuring ELK Stack Alerting with ElastAlert

You can now configure ElastAlert for ELK Stack alerting.

First off, ElastAlert (as per our installation method of cloning its GitHub repo) ships with an example configuration file, /opt/elastalert/config.yaml.example.

Copy this configuration file to a new file without the .example suffix.

cp /opt/elastalert/config.yaml{.example,}

The configuration file is highly commented. By default, without comments and empty lines, this is how it looks;

rules_folder: example_rules
run_every:
  minutes: 1
buffer_time:
  minutes: 15
es_host: elasticsearch.example.com
es_port: 9200
writeback_index: elastalert_status
writeback_alias: elastalert_alerts
alert_time_limit:
  days: 2

- rules_folder is where ElastAlert will load rule configuration files from, which in our case is /opt/elastalert/example_rules.

- run_every is how often ElastAlert will query Elasticsearch.

- buffer_time is the size of the query window, stretching backwards from the time each query is run.

- es_host is the address of an Elasticsearch cluster where ElastAlert will store data about its state, queries run, alerts, and errors. Each rule may also use a different Elasticsearch host to query against.

- es_port is the port corresponding to es_host.

- writeback_index is the name of the index in which ElastAlert will store data. We will create this index later.

- alert_time_limit is the retry window for failed alerts.
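To see how run_every and buffer_time relate to each other, the short Python sketch below computes the time window that would be queried on the first few runs, using the default values shown above. It is a simplified model based only on the descriptions given here (query every run_every, look back buffer_time); the function name `query_windows` and the start time are assumptions made for illustration.

```python
from datetime import datetime, timedelta

# Values taken from the default config.yaml shown above.
RUN_EVERY = timedelta(minutes=1)
BUFFER_TIME = timedelta(minutes=15)


def query_windows(first_run, runs, run_every=RUN_EVERY, buffer_time=BUFFER_TIME):
    """Return the (start, end) window queried on each of the first `runs` runs."""
    windows = []
    for i in range(runs):
        end = first_run + i * run_every       # time at which the query is run
        windows.append((end - buffer_time, end))  # window stretches backwards
    return windows


# Hypothetical first run at 22:00.
windows = query_windows(datetime(2020, 12, 1, 22, 0), runs=3)
for start, end in windows:
    print(f"query from {start:%H:%M} to {end:%H:%M}")
```

With these defaults, consecutive windows overlap by 14 minutes, which is why a larger buffer_time helps catch events that are indexed late.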

Define the Address and the Port for the Elasticsearch node;

Open the ElastAlert configuration file for editing;

vim /opt/elastalert/config.yaml

The main change we are going to make in the default configuration file is the IP address and port for ES.

Find the IP on which ES is listening;

ss -altnp | grep :9200

LISTEN 0 128 [::ffff:192.168.57.30]:9200 *:*

Then;

...

# The Elasticsearch hostname for metadata writeback

# Note that every rule can have its own Elasticsearch host

es_host: 192.168.57.30

# The Elasticsearch port

es_port: 9200

...

If your ES is configured with SSL/authentication, be sure to set the corresponding options in the ElastAlert config file.

Save and exit the config.
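If you want to double check that the es_host and es_port values you just set are reachable from the ElastAlert host, a small Python check like the one below can help. This is just a convenience sketch using the standard library; the helper name `port_is_open` is made up for this example and it only tests TCP connectivity, not whether Elasticsearch itself is healthy.

```python
import socket


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, unreachable hosts and timeouts.
        return False
```

For our setup, you would call it as port_is_open("192.168.57.30", 9200) from a Python 3 shell.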

This is how our config file then looks;

less /opt/elastalert/config.yaml

rules_folder: /opt/elastalert/example_rules
run_every:
  minutes: 1
buffer_time:
  minutes: 15
es_host: 192.168.57.30
es_port: 9200
writeback_index: elastalert_status
writeback_alias: elastalert_alerts
alert_time_limit:
  days: 2

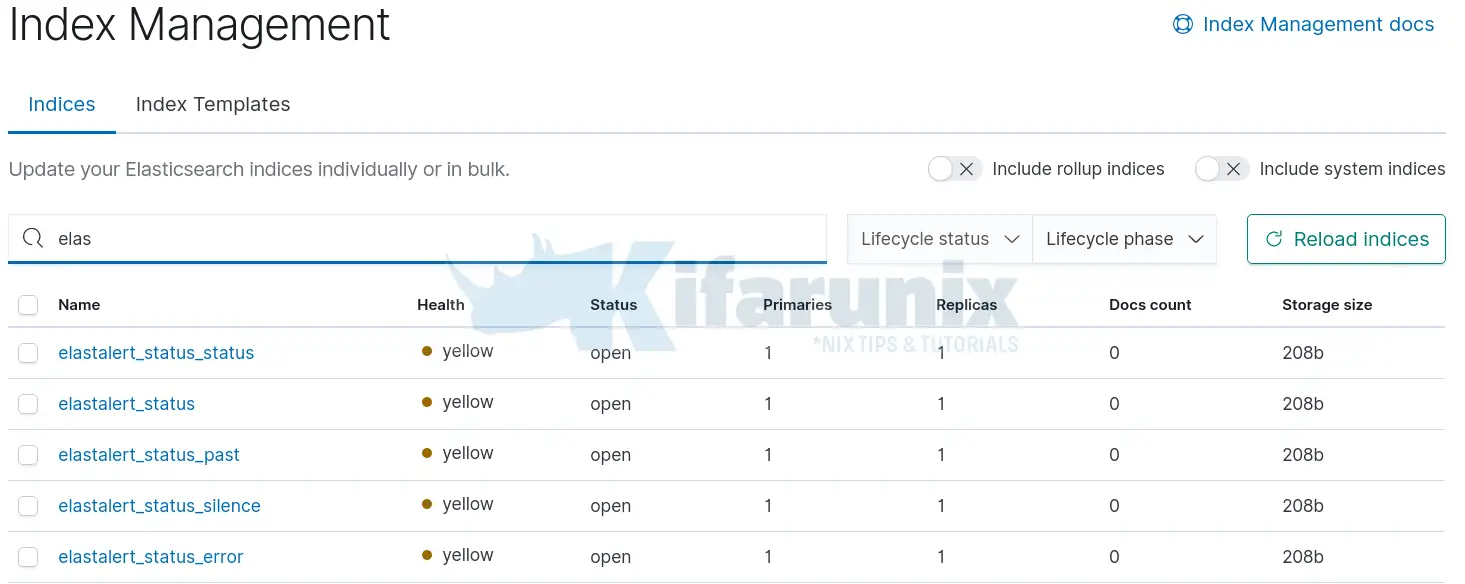

Create ElastAlert Index on Elasticsearch

Create an ElastAlert index on Elasticsearch to enable it to store information and metadata about its queries and alerts.

elastalert-create-index

Elastic Version: 7.8.1

Reading Elastic 6 index mappings:

Reading index mapping 'es_mappings/6/silence.json'

Reading index mapping 'es_mappings/6/elastalert_status.json'

Reading index mapping 'es_mappings/6/elastalert.json'

Reading index mapping 'es_mappings/6/past_elastalert.json'

Reading index mapping 'es_mappings/6/elastalert_error.json'

New index elastalert_status created

Done!

If you encounter the error AttributeError: module 'yaml' has no attribute 'FullLoader' while creating the ElastAlert ES indices, you can reinstall PyYAML;

sudo pip3 install --ignore-installed PyYAML

After creating the indices, if you navigate to Kibana under Stack Management > Elasticsearch > Index Management, you should be able to see the new indices.

Creating ElastAlert Rules and Alerting

As per our setup, the ElastAlert rules are located under the /opt/elastalert/example_rules directory.

ls -1 /opt/elastalert/example_rules/

example_cardinality.yaml

example_change.yaml

example_frequency.yaml

example_new_term.yaml

example_opsgenie_frequency.yaml

example_percentage_match.yaml

example_single_metric_agg.yaml

example_spike_single_metric_agg.yaml

example_spike.yaml

jira_acct.txt

ssh-repeat-offender.yaml

ssh.yaml

ElastAlert supports different types of rules, as explained on the Rule Types page, as well as various alert channel types, as outlined on the ElastAlert Alerts page.

In this setup, we will test the frequency rule type and use email for alerting.

ELK Stack Email Alerting on Multiple Failed SSH Logins

If you check under the example rules directory, there is an SSH rules file, /opt/elastalert/example_rules/ssh.yaml.

In this setup, we are collecting logs from the end points using Filebeat. We would like to be alerted via mail in case there are three or more failed login attempts on an end point within a minute. Hence, below is our sample SSH configuration without comment lines;

vim /opt/elastalert/example_rules/ssh.yaml

name: Sample SSH Rule
type: frequency
num_events: 3
timeframe:
  minutes: 1
filter:
- query:
    query_string:
      query: "event.type:authentication_failure"
index: filebeat-*
realert:
  minutes: 1
query_key:
  - source.ip
include:
  - host.hostname
  - user.name
  - source.ip
include_match_in_root: true
alert_subject: "SSH Bruteforce Attacks Detected on {}"
alert_subject_args:
  - host.hostname
alert_text: |-
  Multiple SSH failed logins detected on {}.
  Details of the event:
  - User: {}
  - Source IP: {}
alert_text_args:
  - host.hostname
  - user.name
  - source.ip
alert:
  - email:
      from_addr: "[email protected]"
      email: "[email protected]"
alert_text_type: alert_text_only

The above rule searches for the authentication failure event type on the index, filebeat-*. It then sends an alert if three failed login attempts from the same source IP are noticed in under a minute. Be sure to set the correct index name and the correct search string for your events.
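The {} placeholders in alert_subject and alert_text are filled, in order, with the values of the fields listed under alert_subject_args and alert_text_args. The Python sketch below illustrates that substitution on a sample event. It is a rough approximation written for this tutorial, not ElastAlert's own code; the helper names `lookup` and `render`, the sample event and the "<MISSING VALUE>" placeholder for absent fields are assumptions.

```python
ALERT_SUBJECT = "SSH Bruteforce Attacks Detected on {}"
ALERT_TEXT = (
    "Multiple SSH failed logins detected on {}.\n"
    "Details of the event:\n"
    "- User: {}\n"
    "- Source IP: {}"
)
ALERT_TEXT_ARGS = ["host.hostname", "user.name", "source.ip"]


def lookup(match, dotted):
    """Resolve a dotted field name such as 'host.hostname' in a nested dict."""
    value = match
    for part in dotted.split("."):
        if not isinstance(value, dict) or part not in value:
            return "<MISSING VALUE>"  # assumed placeholder for absent fields
        value = value[part]
    return value


def render(template, arg_names, match):
    """Fill the {} placeholders with the named fields, in order."""
    return template.format(*(lookup(match, name) for name in arg_names))


# Hypothetical matched event, shaped like a Filebeat document.
event = {
    "host": {"hostname": "solr"},
    "user": {"name": "gen_t00"},
    "source": {"ip": "192.168.57.1"},
}
print(render(ALERT_SUBJECT, ["host.hostname"], event))
print(render(ALERT_TEXT, ALERT_TEXT_ARGS, event))
```

The order of the entries under alert_text_args matters: the first field fills the first {}, the second field the second {}, and so on.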

Save and exit the file once you are done making changes.

Note, for email alerting to work, you need to configure SMTP for email relay. You can check the guide below for setting up Postfix on Ubuntu/Fedora.

Configure Postfix to Use Gmail SMTP on Ubuntu 20.04

Configure Postfix to Use Gmail SMTP on Ubuntu 18.04

Configure Postfix as Send-Only SMTP Server on Fedora 29

Testing ElastAlert Rule

Once you have configured your rule, you need to test whether it actually works. ElastAlert provides a script called elastalert-test-rule for validating the configured rules.

The script is installed under /usr/local/bin;

which elastalert-test-rule

/usr/local/bin/elastalert-test-rule

For example, to test the SSH rule above, run;

elastalert-test-rule --config /opt/elastalert/config.yaml /opt/elastalert/example_rules/ssh.yaml

The script shows output similar to the below;

INFO:elastalert:Note: In debug mode, alerts will be logged to console but NOT actually sent.

To send them but remain verbose, use --verbose instead.

Didn't get any results.

INFO:elastalert:Note: In debug mode, alerts will be logged to console but NOT actually sent.

To send them but remain verbose, use --verbose instead.

1 rules loaded

INFO:apscheduler.scheduler:Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO:elastalert:Queried rule SSH abuse (ElastAlert 3.0.1) - 2 from 2020-12-01 21:13 EAT to 2020-12-01 21:14 EAT: 0 / 0 hits

Would have written the following documents to writeback index (default is elastalert_status):

elastalert_status - {'rule_name': 'SSH abuse (ElastAlert 3.0.1) - 2', 'endtime': datetime.datetime(2020, 12, 1, 18, 14, 56, 92899, tzinfo=tzutc()), 'starttime': datetime.datetime(2020, 12, 1, 18, 13, 55, 492899, tzinfo=tzutc()), 'matches': 0, 'hits': 0, '@timestamp': datetime.datetime(2020, 12, 1, 18, 14, 56, 416136, tzinfo=tzutc()), 'time_taken': 0.08273959159851074}

As you can see, no failed SSH events currently match in our Filebeat index: INFO:elastalert:Queried rule SSH abuse (ElastAlert 3.0.1) - 2 from 2020-12-01 21:13 EAT to 2020-12-01 21:14 EAT: 0 / 0 hits.

Let us simulate multiple failed SSH events and rerun the script.

elastalert-test-rule --config /opt/elastalert/config.yaml /opt/elastalert/example_rules/ssh.yaml

INFO:elastalert:Note: In debug mode, alerts will be logged to console but NOT actually sent.

To send them but remain verbose, use --verbose instead.

Didn't get any results.

INFO:elastalert:Note: In debug mode, alerts will be logged to console but NOT actually sent.

To send them but remain verbose, use --verbose instead.

1 rules loaded

INFO:apscheduler.scheduler:Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO:elastalert:Queried rule Sample SSH Rule from 2020-12-01 22:04 EAT to 2020-12-01 22:05 EAT: 5 / 5 hits

INFO:elastalert:Alert for Sample SSH Rule at 2020-12-01T22:05:06+03:00:

INFO:elastalert:Multiple SSH failed logins detected on solr.

Details of the event:

- User: gen_t00

- Source IP: 192.168.57.1

Would have written the following documents to writeback index (default is elastalert_status):

silence - {'exponent': 0, 'rule_name': 'Sample SSH Rule.192.168.57.1', '@timestamp': datetime.datetime(2020, 12, 1, 19, 5, 17, 14585, tzinfo=tzutc()), 'until': datetime.datetime(2020, 12, 1, 19, 6, 17, 14577, tzinfo=tzutc())}

elastalert_status - {'rule_name': 'Sample SSH Rule', 'endtime': datetime.datetime(2020, 12, 1, 19, 5, 16, 979897, tzinfo=tzutc()), 'starttime': datetime.datetime(2020, 12, 1, 19, 4, 16, 379897, tzinfo=tzutc()), 'matches': 1, 'hits': 5, '@timestamp': datetime.datetime(2020, 12, 1, 19, 5, 17, 15185, tzinfo=tzutc()), 'time_taken': 0.012313127517700195}

As you can see above, we have 5 events in under one minute;

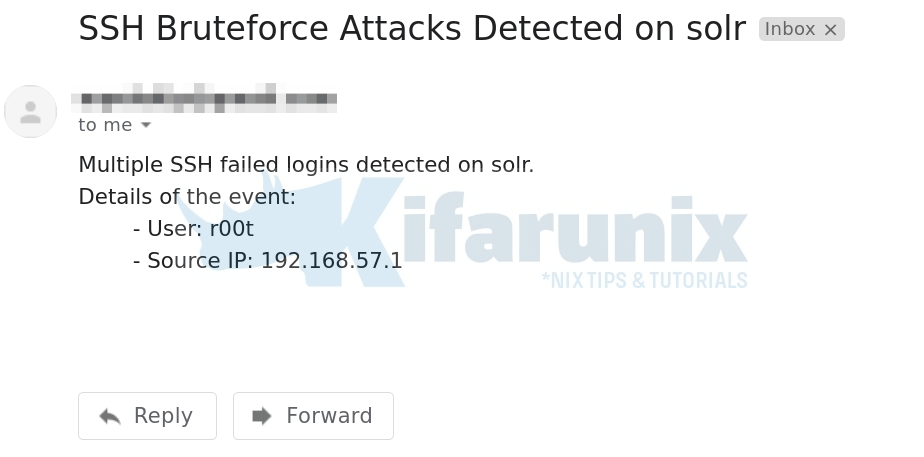

INFO:elastalert:Queried rule Sample SSH Rule from 2020-12-01 21:30 EAT to 2020-12-01 21:31 EAT: 5 / 5 hits

Event Details (Email Body):

Multiple SSH failed logins detected on solr.

Details of the event:

- User: gen_t00

- Source IP: 192.168.57.1

Use the --help option to see the script arguments you can use.

elastalert-test-rule --help

Running ElastAlert

Once you have confirmed that your query is working fine, it is time to run ElastAlert. ElastAlert can be run as a daemon via supervisord or via Python.

You can also run it in the foreground, logging to standard output, using the elastalert binary, /usr/local/bin/elastalert.

For example, to run ElastAlert against all rules defined in the rules directory;

/usr/local/bin/elastalert --verbose --config /opt/elastalert/config.yaml

To specify a specific rules file;

/usr/local/bin/elastalert --verbose --config /opt/elastalert/config.yaml --rule /path/to/rules-file.yaml

E.g.

/usr/local/bin/elastalert --verbose --config /opt/elastalert/config.yaml --rule /opt/elastalert/example_rules/ssh.yaml

1 rules loaded

INFO:elastalert:Starting up

INFO:elastalert:Disabled rules are: []

INFO:elastalert:Sleeping for 59.99993 seconds

INFO:elastalert:Queried rule Sample SSH Rule from 2020-12-01 22:01 EAT to 2020-12-01 22:16 EAT: 8 / 8 hits

INFO:elastalert:Queried rule Sample SSH Rule from 2020-12-01 22:16 EAT to 2020-12-01 22:31 EAT: 0 / 0 hits

INFO:elastalert:Queried rule Sample SSH Rule from 2020-12-01 22:31 EAT to 2020-12-01 22:46 EAT: 0 / 0 hits

INFO:elastalert:Queried rule Sample SSH Rule from 2020-12-01 22:46 EAT to 2020-12-01 23:01 EAT: 0 / 0 hits

INFO:elastalert:Queried rule Sample SSH Rule from 2020-12-01 23:01 EAT to 2020-12-01 23:12 EAT: 0 / 0 hits

INFO:elastalert:Sent email to ['[email protected]']

INFO:elastalert:Ignoring match for silenced rule Sample SSH Rule.192.168.57.1

INFO:elastalert:Ran Sample SSH Rule from 2020-12-01 22:01 EAT to 2020-12-01 23:12 EAT: 0 query hits (0 already seen), 2 matches, 1 alerts sent
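The "Ignoring match for silenced rule Sample SSH Rule.192.168.57.1" line in the output above is the realert setting at work: after an alert fires for a given query_key value, further matches for the same value are suppressed until the realert period has passed. The Python sketch below models that behaviour in a simplified way. The `Silencer` class is made up for this illustration and is not part of ElastAlert; the silence key format (rule name, a dot, then the query_key value) follows what the test output shows.

```python
from datetime import datetime, timedelta


class Silencer:
    """Suppress repeated alerts per rule and query_key value."""

    def __init__(self, rule_name, realert):
        self.rule_name = rule_name
        self.realert = realert
        self.until = {}  # silence key -> time until which alerts are suppressed

    def should_alert(self, query_key_value, now):
        key = f"{self.rule_name}.{query_key_value}"
        if key in self.until and now < self.until[key]:
            return False  # still silenced, the match is ignored
        self.until[key] = now + self.realert
        return True


silencer = Silencer("Sample SSH Rule", realert=timedelta(minutes=1))
t0 = datetime(2020, 12, 1, 22, 5, 17)
first = silencer.should_alert("192.168.57.1", t0)
second = silencer.should_alert("192.168.57.1", t0 + timedelta(seconds=30))
third = silencer.should_alert("192.168.57.1", t0 + timedelta(seconds=61))
print(first, second, third)
```

Because the key includes the source IP, a match from a different IP address is not affected by the silence on 192.168.57.1.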

In this setup, we run ElastAlert as a service;

cat > /etc/systemd/system/elastalert.service << 'EOL'

[Unit]

Description=ELK Stack ElastAlert Service

After=elasticsearch.service

[Service]

Type=simple

WorkingDirectory=/opt/elastalert

ExecStart=/usr/local/bin/elastalert --verbose --config /opt/elastalert/config.yaml

[Install]

WantedBy=multi-user.target

EOL

Reload systemd configurations;

systemctl daemon-reload

Start and enable the service to run on boot;

systemctl enable --now elastalert

Check the status;

systemctl status elastalert

● elastalert.service - ELK Stack ElastAlert Service

Loaded: loaded (/etc/systemd/system/elastalert.service; enabled; vendor preset: disabled)

Active: active (running) since Tue 2020-12-01 23:18:47 EAT; 38s ago

Main PID: 7340 (elastalert)

Tasks: 12 (limit: 17931)

Memory: 43.9M

CGroup: /system.slice/elastalert.service

└─7340 /bin/python3 /usr/local/bin/elastalert --verbose --config /opt/elastalert/config.yaml

Dec 01 23:18:59 elastic.kifarunix-demo.com elastalert[7340]: INFO:elastalert:Queried rule SSH abuse - reapeat offender from 2020-12-01 22:31 EAT to 2020-12-01 22:46 EAT: 0>

Dec 01 23:18:59 elastic.kifarunix-demo.com elastalert[7340]: INFO:elastalert:Queried rule Event spike from 2020-12-01 23:16 EAT to 2020-12-01 23:18 EAT: 0 / 0 hits

...

To run ElastAlert via Python, see Running ElastAlert.

Simulate the events and verify that an alert is sent and received by email.

And that marks the end of our tutorial on how to send ELK stack alerts with ElastAlert via Email. Feel free to explore other alert channels.

Reference

Running ElastAlert for the First time
