Is it possible to add compute nodes to OpenStack using Kolla-Ansible? Yes, definitely. In this step-by-step guide, you will learn how to add compute nodes to an existing OpenStack deployment using Kolla-Ansible. Kolla-Ansible automates most of the work of preparing new compute nodes and integrating them into the existing cluster.


Adding Compute Nodes into OpenStack using Kolla-Ansible

In our previous guide, we learned how to deploy a multinode OpenStack cluster with three nodes (controller, storage and compute) using Kolla-Ansible.

When talking about OpenStack compute, you will often come across two terms that are used interchangeably: compute node and compute host.

Compute Node

- A compute node, also known as a compute server or hypervisor host, is a physical or virtual server that runs virtual machines (VMs). It provides the computational resources, such as CPU, memory, and storage, for running VMs. Compute nodes are responsible for creating, terminating, and managing VMs, including tasks like scheduling and resource allocation.

- In OpenStack, a compute node runs the Nova Compute service and is part of the compute resource pool available for launching and managing VMs.

Compute Host

- A compute host is a term that can be used interchangeably with a compute node in some contexts. However, in other cases, a compute host might refer to the physical or virtual machine that acts as a host for multiple compute nodes.

- For example, in a scenario where multiple compute nodes run on a single physical server or hypervisor, that physical server could be referred to as the compute host.

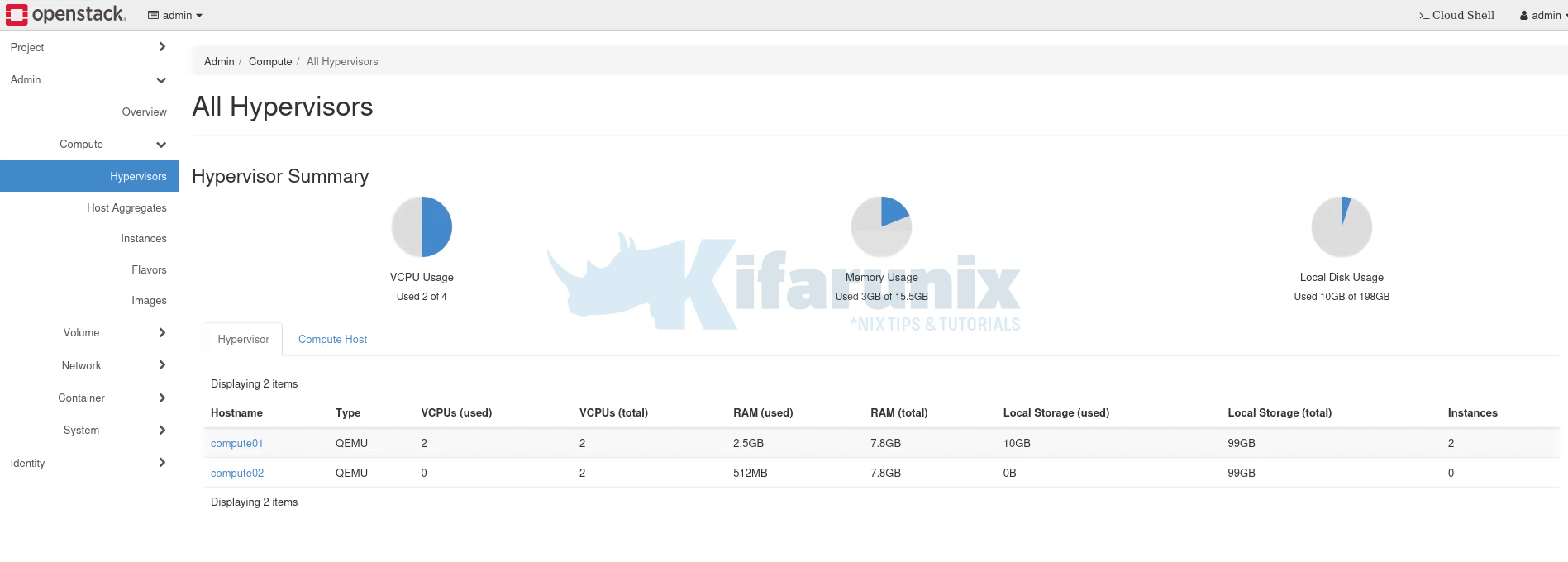

Let’s confirm the number of compute nodes we currently have in our deployment;

source $HOME/kolla-ansible/bin/activate
source /etc/kolla/admin-openrc.sh
openstack hypervisor list

Sample output;

+--------------------------------------+---------------------+-----------------+-----------------+-------+
| ID                                   | Hypervisor Hostname | Hypervisor Type | Host IP         | State |
+--------------------------------------+---------------------+-----------------+-----------------+-------+
| 6aa76044-d456-4c3b-8f28-fcfc7e79b658 | compute01           | QEMU            | 192.168.200.202 | up    |
+--------------------------------------+---------------------+-----------------+-----------------+-------+

As you can see, we currently have only one compute node.
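You may want to script this check, for example to compare the count before and after adding a node. A small filter over the table output is enough for that. The sketch below uses a hypothetical helper (`count_up_hypervisors` is our own name and is not part of the OpenStack client). It assumes the default column order shown above, with State in the last column.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count the hypervisors whose State is "up" in the
# table printed by `openstack hypervisor list` (read from stdin).
# Assumes the column order shown above: ID | Hostname | Type | Host IP | State.
count_up_hypervisors() {
  awk -F'|' '
    # Table rows start with "|"; border lines start with "+".
    /^\|/ {
      id = $2;    gsub(/ /, "", id)
      state = $6; gsub(/ /, "", state)
      # Skip the header row and count rows whose State is "up".
      if (id != "ID" && state == "up") n++
    }
    END { print n + 0 }
  '
}
```

You would use it as `openstack hypervisor list | count_up_hypervisors`. If you only need the hostnames, the client's own output options are simpler, for example `openstack hypervisor list -f value -c "Hypervisor Hostname"`.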

Prepare the Nodes for Addition into OpenStack

Since we are using Kolla-Ansible for the deployment, it takes care of most of the prerequisites.

However, you need to handle the OS installation, initial IP address assignment, hostname configuration and creation of the first user account yourself (you can automate these steps if you want).

I also recommend using the same OS version on all nodes, for uniformity across the cluster and easier management. We are running Ubuntu 22.04 LTS.
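One way to confirm that a new node matches the rest of the cluster is to compare the `ID` and `VERSION_ID` fields of `/etc/os-release` on each node. The sketch below uses a hypothetical helper, `os_release_id`, which parses the file with awk instead of sourcing it into your shell.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "ID VERSION_ID" (for example "ubuntu 22.04")
# from os-release content read on stdin.
os_release_id() {
  awk -F= '
    # Strip the optional double quotes around each value.
    $1 == "ID"         { v = $2; gsub(/"/, "", v); id = v }
    $1 == "VERSION_ID" { v = $2; gsub(/"/, "", v); ver = v }
    END { print id, ver }
  '
}
```

On each node, `os_release_id < /etc/os-release` should print `ubuntu 22.04` on Ubuntu 22.04. You can compare that output across the existing nodes and the new one.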

Based on our basic deployment architecture;

------------------+---------------------------------------------+--------------------------------+
                  |                                             |                                |
+-----------------+-------------------------+     +-------------+-------------+     +------------+--------------+
|  [ Controller Node ]                      |     |  [ Compute01 Node ]       |     |  [ Storage01 Node ]       |
|                                           |     |                           |     |                           |
|  br0: VIP and Mgt IP                      |     |  enp1s0: 192.168.200.202  |     |  enp1s0: 192.168.200.201  |
|  VIP: 192.168.200.254                     |     |  enp2s0: 10.100.0.110/24  |     |                           |
|  Mgt IP: 192.168.200.200                  |     +---------------------------+     +---------------------------+
|  br-ex: Provider Network                  |
|  10.100.0.100/24                          |
+-------------------------------------------+

We have set up the new node, assigned its IP addresses and created the required user account with sudo rights. Our basic architecture now looks like;

------------------+---------------------------------------------+--------------------------------+----------------------------------+
                  |                                             |                                |                                  |
+-----------------+-------------------------+     +-------------+-------------+     +------------+--------------+     +-------------+-------------+
|  [ Controller Node ]                      |     |  [ Compute01 Node ]       |     |  [ Storage01 Node ]       |     |  [ Compute02 Node ]       |
|                                           |     |                           |     |                           |     |                           |
|  br0: VIP and Mgt IP                      |     |  enp1s0: 192.168.200.202  |     |  enp1s0: 192.168.200.201  |     |  enp1s0: 192.168.200.203  |
|  VIP: 192.168.200.254                     |     |  enp2s0: 10.100.0.110/24  |     |                           |     |  enp2s0: 10.100.0.111/24  |
|  Mgt IP: 192.168.200.200                  |     +---------------------------+     +---------------------------+     +---------------------------+
|  br-ex: Provider Network                  |
|  10.100.0.100/24                          |
+-------------------------------------------+
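If you script the user-account step, the sudo part can be a drop-in file under `/etc/sudoers.d`. The sketch below assumes you want passwordless sudo for the deployment user, so that Ansible can escalate privileges without prompting. The bootstrap playbook further below also includes a task that grants its own `kolla` user passwordless sudo. `write_sudoers_dropin` is a hypothetical helper. The target directory is a parameter, so you can try it on a scratch directory first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a sudoers drop-in granting passwordless sudo
# to a user. On a real node, run as root with the default /etc/sudoers.d.
write_sudoers_dropin() {
  local user=$1 dir=${2:-/etc/sudoers.d}
  local file="$dir/$user"
  printf '%s ALL=(ALL) NOPASSWD:ALL\n' "$user" > "$file"
  # sudoers files should be read-only; 0440 is the conventional mode.
  chmod 0440 "$file"
  echo "$file"
}
```

Run it as root on the new node, for example `write_sudoers_dropin kifarunix` from a root shell. Then check the syntax with `visudo -cf /etc/sudoers.d/kifarunix`.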

Copy Deployment User SSH Keys from Control Node to New Compute Node

Your control node is the node from which you run Kolla-Ansible. In our setup, we run Kolla-Ansible on the controller node.

As for the deployment user, if you are not using SSH keys, you need to define the username and password for the new compute node in the multinode inventory file. Kolla-Ansible uses these credentials to log in and configure that node.

In this guide, we use SSH keys, which we already generated when creating the Kolla-Ansible deployment user account.

Hence, let’s just copy the SSH keys to the new compute node.

First of all, let’s make sure the new compute node can be reached by its hostname from the control node, by adding it to /etc/hosts;

sudo tee -a /etc/hosts << EOL
192.168.200.203 compute02
EOL

Let’s confirm reachability;

ping compute02 -c 4

PING compute02 (192.168.200.203) 56(84) bytes of data.
64 bytes from compute02 (192.168.200.203): icmp_seq=1 ttl=64 time=0.182 ms
64 bytes from compute02 (192.168.200.203): icmp_seq=2 ttl=64 time=0.421 ms
64 bytes from compute02 (192.168.200.203): icmp_seq=3 ttl=64 time=0.176 ms
64 bytes from compute02 (192.168.200.203): icmp_seq=4 ttl=64 time=0.446 ms

--- compute02 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3082ms
rtt min/avg/max/mdev = 0.176/0.306/0.446/0.127 ms
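Note that `tee -a` appends the entry every time it runs, so repeating this step leaves duplicate lines in `/etc/hosts`. If you add several nodes from a script, a guarded append avoids that. The sketch below is a hypothetical helper, `add_host_entry`, that only appends when the hostname is not already mapped. The hosts file is a parameter, so you can try it on a copy first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOSTNAME" to a hosts file only if the
# hostname is not already mapped there.
add_host_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  # Look for the hostname as a whole field on non-comment lines.
  if awk -v n="$name" '
       $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }
     ' "$file"; then
    echo "$name is already present in $file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
    echo "added $ip $name to $file"
  fi
}
```

Writing to `/etc/hosts` requires root, so run it from a root shell, for example `add_host_entry 192.168.200.203 compute02`.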

Next, copy the keys;

ssh-copy-id kifarunix@compute02

Update Kolla-Ansible Inventory

Since we are running a multinode deployment, open the multinode inventory and add your new compute node.

This is a snippet of our multinode inventory before adding the new compute node;

cat multinode

# These initial groups are the only groups required to be modified. The

# additional groups are for more control of the environment.

[control]

controller01 ansible_connection=local neutron_external_interface=vethext

# The above can also be specified as follows:

#control[01:03] ansible_user=kolla

# The network nodes are where your l3-agent and loadbalancers will run

# This can be the same as a host in the control group

[network]

controller01 ansible_connection=local neutron_external_interface=vethext network_interface=br0

[compute]

compute01 neutron_external_interface=enp2s0 network_interface=enp1s0

[monitoring]

controller01 ansible_connection=local neutron_external_interface=vethext

# When compute nodes and control nodes use different interfaces,

# you need to comment out "api_interface" and other interfaces from the globals.yml

# and specify like below:

#compute01 neutron_external_interface=eth0 api_interface=em1 tunnel_interface=em1

[storage]

storage01 neutron_external_interface=enp10s0 network_interface=enp1s0

[deployment]

localhost ansible_connection=local

[baremetal:children]

control

network

compute

storage

monitoring

[tls-backend:children]

control

# You can explicitly specify which hosts run each project by updating the

# groups in the sections below. Common services are grouped together.

[common:children]

control

network

compute

storage

monitoring

...

So, we will update the [compute] group to add our new node such that the configuration looks like;

vim multinode

# These initial groups are the only groups required to be modified. The

# additional groups are for more control of the environment.

[control]

controller01 ansible_connection=local neutron_external_interface=vethext

# The above can also be specified as follows:

#control[01:03] ansible_user=kolla

# The network nodes are where your l3-agent and loadbalancers will run

# This can be the same as a host in the control group

[network]

controller01 ansible_connection=local neutron_external_interface=vethext network_interface=br0

[compute]

compute01 neutron_external_interface=enp2s0 network_interface=enp1s0

compute02 neutron_external_interface=enp2s0 network_interface=enp1s0

[monitoring]

controller01 ansible_connection=local neutron_external_interface=vethext

# When compute nodes and control nodes use different interfaces,

# you need to comment out "api_interface" and other interfaces from the globals.yml

# and specify like below:

#compute01 neutron_external_interface=eth0 api_interface=em1 tunnel_interface=em1

[storage]

storage01 neutron_external_interface=enp10s0 network_interface=enp1s0

[deployment]

localhost ansible_connection=local

[baremetal:children]

control

network

compute

storage

monitoring

[tls-backend:children]

control

# You can explicitly specify which hosts run each project by updating the

# groups in the sections below. Common services are grouped together.

[common:children]

control

network

compute

storage

monitoring

...
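If you add nodes often, you can script the inventory edit too. The sketch below is a hypothetical helper, `add_to_group`, that inserts a host line directly below the `[compute]` header and skips hosts already listed in that group. This means `compute02` would land above `compute01`, which does not change group membership. The helper assumes the header appears exactly once and is written exactly as `[compute]`. Keep a backup of `multinode` before trying it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add a host line directly below the "[group]" header
# of an INI-style Ansible inventory, unless the host is already in that group.
add_to_group() {
  local inventory=$1 group=$2 line=$3
  local host=${line%% *}   # the first word of the line is the hostname
  local tmp

  grep -qxF "[$group]" "$inventory" || { echo "no [$group] section" >&2; return 1; }

  # Pass 1: is the host already listed inside [group]?
  if awk -v grp="[$group]" -v host="$host" '
       /^\[/ { in_grp = ($0 == grp); next }
       in_grp && $1 == host { found = 1 }
       END { exit !found }
     ' "$inventory"; then
    echo "$host is already in [$group]"
    return 0
  fi

  # Pass 2: copy the file, printing the new line right after the header,
  # then overwrite the original in place so its permissions are kept.
  tmp=$(mktemp) || return 1
  awk -v grp="[$group]" -v line="$line" '
    { print }
    $0 == grp { print line }
  ' "$inventory" > "$tmp" && cat "$tmp" > "$inventory"
  rm -f "$tmp"
}
```

For example, `add_to_group multinode compute "compute02 neutron_external_interface=enp2s0 network_interface=enp1s0"`. You can then confirm the group membership with `ansible-inventory -i multinode --graph compute`.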

Activate Kolla-Ansible Virtual Environment

Activate your Kolla-Ansible virtual environment;

source ~/kolla-ansible/bin/activate

Test Connectivity to the Node

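Run the Ansible command below to check that the new node in your inventory is reachable, using the Ansible ping module. Note that this module does not send ICMP pings. It verifies that Ansible can log in to the node over SSH and run Python there.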

ansible -i multinode -m ping compute02

Sample output;

compute02 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python3"
    },
    "changed": false,
    "ping": "pong"
}
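When adding several nodes at once, reading one JSON block per host gets tedious. Ansible's `-o` (`--one-line`) option prints one result line per host, such as `compute02 | SUCCESS => {...}`. The hypothetical helper below prints the hosts whose result is anything other than `SUCCESS`. It is a sketch based on that text format, not a replacement for reading Ansible's own error messages.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ansible ... -m ping -o` output on stdin and
# print the hosts that did not return SUCCESS. Exits 1 if any host failed.
failed_ping_hosts() {
  # Result lines look like "HOST | STATUS => {...}"; other lines are skipped.
  awk -F' [|] ' '
    NF >= 2 && $2 !~ /^SUCCESS/ { print $1; bad = 1 }
    END { exit bad }
  '
}
```

For example: `ansible -i multinode -m ping compute02,compute03 -o | failed_ping_hosts && echo "all nodes reachable"`.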

Bootstrap the new compute node

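Next, bootstrap the new server with the Kolla deployment dependencies by running the command below. As the sample output further below shows, this configures /etc/hosts on the node and installs Docker along with the Docker SDK for Python.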

kolla-ansible -i <inventory> bootstrap-servers [ --limit <limit> ]

Replace <inventory> with your inventory file and <limit> with the hostname of the compute node as defined in the inventory.

Be cautious about re-bootstrapping a cloud that has already been bootstrapped. Limiting the run to the new node avoids re-bootstrapping the existing ones. See some considerations for re-bootstrapping.

Thus, our command will look like;

kolla-ansible -i multinode bootstrap-servers --limit compute02

If you are adding multiple compute nodes;

kolla-ansible -i multinode bootstrap-servers --limit compute02,compute03,compute0N

Sample output;

Bootstrapping servers : ansible-playbook -e @/etc/kolla/globals.yml -e @/etc/kolla/passwords.yml -e CONFIG_DIR=/etc/kolla --limit compute02 -e kolla_action=bootstrap-servers /home/kifarunix/kolla-ansible/share/kolla-ansible/ansible/kolla-host.yml --inventory multinode

[WARNING]: Invalid characters were found in group names but not replaced, use -vvvv to see details

PLAY [Gather facts for all hosts] *******************************************************************************************************************************************

TASK [Gather facts] *********************************************************************************************************************************************************

ok: [compute02]

TASK [Gather package facts] *************************************************************************************************************************************************

skipping: [compute02]

TASK [Group hosts to determine when using --limit] **************************************************************************************************************************

ok: [compute02]

PLAY [Gather facts for all hosts (if using --limit)] ************************************************************************************************************************

TASK [Gather facts] *********************************************************************************************************************************************************

skipping: [compute02] => (item=compute02)

ok: [compute02 -> controller01] => (item=controller01)

ok: [compute02 -> compute01] => (item=compute01)

ok: [compute02 -> storage01] => (item=storage01)

ok: [compute02 -> localhost] => (item=localhost)

TASK [Gather package facts] *************************************************************************************************************************************************

skipping: [compute02] => (item=compute02)

skipping: [compute02] => (item=controller01)

skipping: [compute02] => (item=compute01)

skipping: [compute02] => (item=storage01)

skipping: [compute02] => (item=localhost)

skipping: [compute02]

PLAY [Apply role baremetal] *************************************************************************************************************************************************

TASK [openstack.kolla.etc_hosts : Include etc-hosts.yml] ********************************************************************************************************************

included: /home/kifarunix/.ansible/collections/ansible_collections/openstack/kolla/roles/etc_hosts/tasks/etc-hosts.yml for compute02

TASK [openstack.kolla.etc_hosts : Ensure localhost in /etc/hosts] ***********************************************************************************************************

ok: [compute02]

TASK [openstack.kolla.etc_hosts : Ensure hostname does not point to 127.0.1.1 in /etc/hosts] ********************************************************************************

ok: [compute02]

TASK [openstack.kolla.etc_hosts : Generate /etc/hosts for all of the nodes] *************************************************************************************************

[WARNING]: Module remote_tmp /root/.ansible/tmp did not exist and was created with a mode of 0700, this may cause issues when running as another user. To avoid this, create

the remote_tmp dir with the correct permissions manually

changed: [compute02]

TASK [openstack.kolla.etc_hosts : Check whether /etc/cloud/cloud.cfg exists] ************************************************************************************************

ok: [compute02]

TASK [openstack.kolla.etc_hosts : Disable cloud-init manage_etc_hosts] ******************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.baremetal : Ensure unprivileged users can use ping] ***************************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.baremetal : Set firewall default policy] **************************************************************************************************************

ok: [compute02]

TASK [openstack.kolla.baremetal : Check if firewalld is installed] **********************************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.baremetal : Disable firewalld] ************************************************************************************************************************

skipping: [compute02] => (item=firewalld)

skipping: [compute02]

TASK [openstack.kolla.packages : Install packages] **************************************************************************************************************************

ok: [compute02]

TASK [openstack.kolla.packages : Remove packages] ***************************************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : include_tasks] *******************************************************************************************************************************

included: /home/kifarunix/.ansible/collections/ansible_collections/openstack/kolla/roles/docker/tasks/repo-Debian.yml for compute02

TASK [openstack.kolla.docker : Install CA certificates and gnupg packages] **************************************************************************************************

ok: [compute02]

TASK [openstack.kolla.docker : Ensure apt sources list directory exists] ****************************************************************************************************

ok: [compute02]

TASK [openstack.kolla.docker : Ensure apt keyrings directory exists] ********************************************************************************************************

ok: [compute02]

TASK [openstack.kolla.docker : Install docker apt gpg key] ******************************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : Install docker apt pin] **********************************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : Enable docker apt repository] ****************************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : Check which containers are running] **********************************************************************************************************

ok: [compute02]

TASK [openstack.kolla.docker : Check if docker systemd unit exists] *********************************************************************************************************

ok: [compute02]

TASK [openstack.kolla.docker : Mask the docker systemd unit on Debian/Ubuntu] ***********************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : Install packages] ****************************************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : Start docker] ********************************************************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.docker : Wait for Docker to start] ********************************************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.docker : Ensure containers are running after Docker upgrade] ******************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.docker : Ensure docker config directory exists] *******************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : Write docker config] *************************************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : Remove old docker options file] **************************************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.docker : Ensure docker service directory exists] ******************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : Configure docker service] ********************************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : Ensure the path for CA file for private registry exists] *************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.docker : Ensure the CA file for private registry exists] **********************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.docker : Flush handlers] ******************************************************************************************************************************

RUNNING HANDLER [openstack.kolla.docker : Reload docker service file] *******************************************************************************************************

ok: [compute02]

RUNNING HANDLER [openstack.kolla.docker : Restart docker] *******************************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : Start and enable docker] *********************************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : include_tasks] *******************************************************************************************************************************

included: /home/kifarunix/.ansible/collections/ansible_collections/openstack/kolla/roles/docker/tasks/configure-containerd-for-zun.yml for compute02

TASK [openstack.kolla.docker : Ensuring CNI config directory exist] *********************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : Copying CNI config file] *********************************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : Ensuring CNI bin directory exist] ************************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : Copy zun-cni script] *************************************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker : Copying over containerd config] **************************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.kolla_user : Ensure groups are present] ***************************************************************************************************************

skipping: [compute02] => (item=docker)

skipping: [compute02] => (item=sudo)

skipping: [compute02] => (item=kolla)

skipping: [compute02]

TASK [openstack.kolla.kolla_user : Create kolla user] ***********************************************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.kolla_user : Add public key to kolla user authorized keys] ********************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.kolla_user : Grant kolla user passwordless sudo] ******************************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.docker_sdk : Install packages] ************************************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.docker_sdk : Install latest pip in the virtualenv] ****************************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.docker_sdk : Install docker SDK for python] ***********************************************************************************************************

changed: [compute02]

TASK [openstack.kolla.baremetal : Ensure node_config_directory directory exists] ********************************************************************************************

changed: [compute02]

TASK [openstack.kolla.apparmor_libvirt : include_tasks] *********************************************************************************************************************

included: /home/kifarunix/.ansible/collections/ansible_collections/openstack/kolla/roles/apparmor_libvirt/tasks/remove-profile.yml for compute02

TASK [openstack.kolla.apparmor_libvirt : Get stat of libvirtd apparmor profile] *********************************************************************************************

ok: [compute02]

TASK [openstack.kolla.apparmor_libvirt : Get stat of libvirtd apparmor disable profile] *************************************************************************************

ok: [compute02]

TASK [openstack.kolla.apparmor_libvirt : Remove apparmor profile for libvirt] ***********************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.baremetal : Change state of selinux] ******************************************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.baremetal : Set https proxy for git] ******************************************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.baremetal : Set http proxy for git] *******************************************************************************************************************

skipping: [compute02]

TASK [openstack.kolla.baremetal : Configure ceph for zun] *******************************************************************************************************************

skipping: [compute02]

RUNNING HANDLER [openstack.kolla.docker : Restart containerd] ***************************************************************************************************************

changed: [compute02]

PLAY RECAP ******************************************************************************************************************************************************************

compute02 : ok=43 changed=23 unreachable=0 failed=0 skipped=21 rescued=0 ignored=0
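The PLAY RECAP is the part to check: `unreachable=0` and `failed=0` mean the bootstrap completed on the node. When bootstrapping several nodes, the hypothetical helper below scans the recap lines of the saved output and prints any host with a non-zero `unreachable` or `failed` count. It assumes the `host : key=value ...` recap format shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read ansible-playbook output on stdin and print the
# hosts whose PLAY RECAP line reports unreachable or failed tasks.
# Exits 1 if any such host is found.
recap_problems() {
  awk '
    /^PLAY RECAP/ { in_recap = 1; next }
    # Recap lines split into: host ":" ok=N changed=N unreachable=N failed=N ...
    in_recap && $2 == ":" {
      for (i = 3; i <= NF; i++) {
        split($i, kv, "=")
        if ((kv[1] == "unreachable" || kv[1] == "failed") && kv[2] + 0 > 0) {
          print $1; bad = 1; break
        }
      }
    }
    END { exit bad }
  '
}
```

For example, `kolla-ansible -i multinode bootstrap-servers --limit compute02,compute03 | tee bootstrap.log | recap_problems`. The same check should work on the output of the other kolla-ansible commands, since they also run ansible-playbook and end with a PLAY RECAP.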

Run Pre-Deployment Checks on the Host

Next, run the pre-deployment checks against the new node;

kolla-ansible -i multinode prechecks --limit compute02

Sample output;

Pre-deployment checking : ansible-playbook -e @/etc/kolla/globals.yml -e @/etc/kolla/passwords.yml -e CONFIG_DIR=/etc/kolla --limit compute02 -e kolla_action=precheck /home/kifarunix/kolla-ansible/share/kolla-ansible/ansible/site.yml --inventory multinode

[WARNING]: Invalid characters were found in group names but not replaced, use -vvvv to see details

PLAY [Gather facts for all hosts] *******************************************************************************************************************************************

TASK [Gather facts] *********************************************************************************************************************************************************

ok: [compute02]

TASK [Gather package facts] *************************************************************************************************************************************************

ok: [compute02]

TASK [Group hosts to determine when using --limit] **************************************************************************************************************************

ok: [compute02]

PLAY [Gather facts for all hosts (if using --limit)] ************************************************************************************************************************

TASK [Gather facts] *********************************************************************************************************************************************************

skipping: [compute02] => (item=compute02)

ok: [compute02 -> controller01] => (item=controller01)

ok: [compute02 -> compute01] => (item=compute01)

ok: [compute02 -> storage01] => (item=storage01)

ok: [compute02 -> localhost] => (item=localhost)

TASK [Gather package facts] *************************************************************************************************************************************************

skipping: [compute02] => (item=compute02)

ok: [compute02 -> controller01] => (item=controller01)

ok: [compute02 -> compute01] => (item=compute01)

ok: [compute02 -> storage01] => (item=storage01)

ok: [compute02 -> localhost] => (item=localhost)

PLAY [Group hosts based on configuration] ***********************************************************************************************************************************

TASK [Group hosts based on Kolla action] ************************************************************************************************************************************

ok: [compute02]

TASK [Group hosts based on enabled services] ********************************************************************************************************************************

ok: [compute02] => (item=enable_aodh_True)

ok: [compute02] => (item=enable_barbican_False)

ok: [compute02] => (item=enable_blazar_False)

ok: [compute02] => (item=enable_ceilometer_True)

ok: [compute02] => (item=enable_ceph_rgw_False)

ok: [compute02] => (item=enable_cinder_True)

ok: [compute02] => (item=enable_cloudkitty_False)

ok: [compute02] => (item=enable_collectd_False)

ok: [compute02] => (item=enable_cyborg_False)

ok: [compute02] => (item=enable_designate_False)

ok: [compute02] => (item=enable_etcd_True)

ok: [compute02] => (item=enable_freezer_False)

ok: [compute02] => (item=enable_glance_True)

ok: [compute02] => (item=enable_gnocchi_True)

ok: [compute02] => (item=enable_grafana_True)

ok: [compute02] => (item=enable_hacluster_False)

ok: [compute02] => (item=enable_heat_True)

ok: [compute02] => (item=enable_horizon_True)

ok: [compute02] => (item=enable_influxdb_False)

ok: [compute02] => (item=enable_ironic_False)

ok: [compute02] => (item=enable_iscsid_True)

ok: [compute02] => (item=enable_keystone_True)

ok: [compute02] => (item=enable_kuryr_True)

ok: [compute02] => (item=enable_loadbalancer_True)

ok: [compute02] => (item=enable_magnum_False)

ok: [compute02] => (item=enable_manila_False)

ok: [compute02] => (item=enable_mariadb_True)

ok: [compute02] => (item=enable_masakari_False)

ok: [compute02] => (item=enable_memcached_True)

ok: [compute02] => (item=enable_mistral_False)

ok: [compute02] => (item=enable_multipathd_False)

ok: [compute02] => (item=enable_murano_False)

ok: [compute02] => (item=enable_neutron_True)

ok: [compute02] => (item=enable_nova_True)

ok: [compute02] => (item=enable_octavia_False)

ok: [compute02] => (item=enable_opensearch_False)

ok: [compute02] => (item=enable_opensearch_dashboards_False)

ok: [compute02] => (item=enable_openvswitch_True_enable_ovs_dpdk_False)

ok: [compute02] => (item=enable_outward_rabbitmq_False)

ok: [compute02] => (item=enable_ovn_False)

ok: [compute02] => (item=enable_placement_True)

ok: [compute02] => (item=enable_prometheus_True)

ok: [compute02] => (item=enable_rabbitmq_True)

ok: [compute02] => (item=enable_redis_False)

ok: [compute02] => (item=enable_sahara_False)

ok: [compute02] => (item=enable_senlin_False)

ok: [compute02] => (item=enable_skyline_False)

ok: [compute02] => (item=enable_solum_False)

ok: [compute02] => (item=enable_swift_False)

ok: [compute02] => (item=enable_tacker_False)

ok: [compute02] => (item=enable_telegraf_False)

ok: [compute02] => (item=enable_trove_False)

ok: [compute02] => (item=enable_venus_False)

ok: [compute02] => (item=enable_vitrage_False)

ok: [compute02] => (item=enable_watcher_False)

ok: [compute02] => (item=enable_zun_True)

PLAY [Apply role prechecks] *************************************************************************************************************************************************

TASK [prechecks : Checking loadbalancer group] ******************************************************************************************************************************

ok: [compute02] => {

"changed": false,

"msg": "All assertions passed"

}

TASK [prechecks : include_tasks] ********************************************************************************************************************************************

included: /home/kifarunix/kolla-ansible/share/kolla-ansible/ansible/roles/prechecks/tasks/host_os_checks.yml for compute02

TASK [prechecks : Checking host OS distribution] ****************************************************************************************************************************

ok: [compute02] => {

"changed": false,

"msg": "All assertions passed"

}

TASK [prechecks : Checking host OS release or version] **********************************************************************************************************************

ok: [compute02] => {

"changed": false,

"msg": "All assertions passed"

}

TASK [prechecks : Checking if CentOS is Stream] *****************************************************************************************************************************

skipping: [compute02]

TASK [prechecks : Fail if not running on CentOS Stream] *********************************************************************************************************************

skipping: [compute02]

TASK [prechecks : include_tasks] ********************************************************************************************************************************************

included: /home/kifarunix/kolla-ansible/share/kolla-ansible/ansible/roles/prechecks/tasks/timesync_checks.yml for compute02

TASK [prechecks : Check for a running host NTP daemon] **********************************************************************************************************************

ok: [compute02]

TASK [prechecks : Fail if a host NTP daemon is not running] *****************************************************************************************************************

skipping: [compute02]

TASK [prechecks : Checking timedatectl status] ******************************************************************************************************************************

ok: [compute02]

TASK [prechecks : Fail if the clock is not synchronized] ********************************************************************************************************************

skipping: [compute02]

TASK [prechecks : Ensure /etc/localtime exist] ******************************************************************************************************************************

ok: [compute02]

TASK [prechecks : Fail if /etc/localtime is absent] *************************************************************************************************************************

skipping: [compute02]

TASK [prechecks : Ensure /etc/timezone exist] *******************************************************************************************************************************

ok: [compute02]

TASK [prechecks : Fail if /etc/timezone is absent] **************************************************************************************************************************

skipping: [compute02]

TASK [prechecks : include_tasks] ********************************************************************************************************************************************

included: /home/kifarunix/kolla-ansible/share/kolla-ansible/ansible/roles/prechecks/tasks/port_checks.yml for compute02

TASK [prechecks : Checking the api_interface is present] ********************************************************************************************************************

skipping: [compute02]

TASK [prechecks : Checking the api_interface is active] *********************************************************************************************************************

skipping: [compute02]

TASK [prechecks : Checking the api_interface ip address configuration] ******************************************************************************************************

skipping: [compute02]

TASK [prechecks : Checking if system uses systemd] **************************************************************************************************************************

ok: [compute02] => {

"changed": false,

"msg": "All assertions passed"

}

TASK [prechecks : Checking Docker version] **********************************************************************************************************************************

ok: [compute02]

TASK [prechecks : Checking empty passwords in passwords.yml. Run kolla-genpwd if this task fails] ***************************************************************************

ok: [compute02 -> localhost]

TASK [prechecks : Check if nscd is running] *********************************************************************************************************************************

ok: [compute02]

TASK [prechecks : Fail if nscd is running] **********************************************************************************************************************************

skipping: [compute02]

TASK [prechecks : Validate that internal and external vip address are different when TLS is enabled only on either the internal and external network] ***********************

skipping: [compute02]

TASK [prechecks : Validate that enable_ceph is disabled] ********************************************************************************************************************

skipping: [compute02]

TASK [prechecks : Checking docker SDK version] ******************************************************************************************************************************

ok: [compute02]

TASK [prechecks : Checking dbus-python package] *****************************************************************************************************************************

ok: [compute02]

TASK [prechecks : Checking Ansible version] *********************************************************************************************************************************

ok: [compute02] => {

"changed": false,

"msg": "All assertions passed"

}

TASK [prechecks : Check if config_owner_user existed] ***********************************************************************************************************************

ok: [compute02]

TASK [prechecks : Check if config_owner_group existed] **********************************************************************************************************************

ok: [compute02]

TASK [prechecks : Check if ansible user can do passwordless sudo] ***********************************************************************************************************

ok: [compute02]

TASK [prechecks : Check if external mariadb hosts are reachable from the load balancer] *************************************************************************************

skipping: [compute02] => (item=controller01)

skipping: [compute02]

TASK [prechecks : Check if external database address is reachable from all hosts] *******************************************************************************************

skipping: [compute02]

PLAY [Apply role common] ****************************************************************************************************************************************************

TASK [common : include_tasks] ***********************************************************************************************************************************************

included: /home/kifarunix/kolla-ansible/share/kolla-ansible/ansible/roles/common/tasks/precheck.yml for compute02

TASK [service-precheck : common | Validate inventory groups] ****************************************************************************************************************

skipping: [compute02] => (item=fluentd)

skipping: [compute02] => (item=kolla-toolbox)

skipping: [compute02] => (item=cron)

skipping: [compute02]

PLAY [Apply role loadbalancer] **********************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_collectd_True

PLAY [Apply role collectd] **************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_influxdb_True

PLAY [Apply role influxdb] **************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_telegraf_True

PLAY [Apply role telegraf] **************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_redis_True

PLAY [Apply role redis] *****************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role mariadb] ***************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: mariadb_restart

PLAY [Restart mariadb services] *********************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: mariadb_start

PLAY [Start mariadb services] ***********************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: mariadb_bootstrap_restart

PLAY [Restart bootstrap mariadb service] ************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply mariadb post-configuration] *************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role memcached] *************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role prometheus] ************************************************************************************************************************************************

TASK [prometheus : include_tasks] *******************************************************************************************************************************************

included: /home/kifarunix/kolla-ansible/share/kolla-ansible/ansible/roles/prometheus/tasks/precheck.yml for compute02

TASK [service-precheck : prometheus | Validate inventory groups] ************************************************************************************************************

skipping: [compute02] => (item=prometheus-server)

skipping: [compute02] => (item=prometheus-node-exporter)

skipping: [compute02] => (item=prometheus-mysqld-exporter)

skipping: [compute02] => (item=prometheus-haproxy-exporter)

skipping: [compute02] => (item=prometheus-memcached-exporter)

skipping: [compute02] => (item=prometheus-cadvisor)

skipping: [compute02] => (item=prometheus-alertmanager)

skipping: [compute02] => (item=prometheus-openstack-exporter)

skipping: [compute02] => (item=prometheus-elasticsearch-exporter)

skipping: [compute02] => (item=prometheus-blackbox-exporter)

skipping: [compute02] => (item=prometheus-libvirt-exporter)

skipping: [compute02] => (item=prometheus-msteams)

skipping: [compute02]

TASK [prometheus : Get container facts] *************************************************************************************************************************************

ok: [compute02]

TASK [prometheus : Checking free port for Prometheus server] ****************************************************************************************************************

skipping: [compute02]

TASK [prometheus : Checking free port for Prometheus node_exporter] *********************************************************************************************************

ok: [compute02]

TASK [prometheus : Checking free port for Prometheus mysqld_exporter] *******************************************************************************************************

skipping: [compute02]

TASK [prometheus : Checking free port for Prometheus haproxy_exporter] ******************************************************************************************************

skipping: [compute02]

TASK [prometheus : Checking free port for Prometheus memcached_exporter] ****************************************************************************************************

skipping: [compute02]

TASK [prometheus : Checking free port for Prometheus cAdvisor] **************************************************************************************************************

ok: [compute02]

TASK [prometheus : Checking free ports for Prometheus Alertmanager] *********************************************************************************************************

skipping: [compute02] => (item=9093)

skipping: [compute02] => (item=9094)

skipping: [compute02]

TASK [prometheus : Checking free ports for Prometheus openstack-exporter] ***************************************************************************************************

skipping: [compute02] => (item=9198)

skipping: [compute02]

TASK [prometheus : Checking free ports for Prometheus elasticsearch-exporter] ***********************************************************************************************

skipping: [compute02] => (item=9108)

skipping: [compute02]

TASK [prometheus : Checking free ports for Prometheus blackbox-exporter] ****************************************************************************************************

skipping: [compute02] => (item=9115)

skipping: [compute02]

TASK [prometheus : Checking free ports for Prometheus libvirt-exporter] *****************************************************************************************************

ok: [compute02] => (item=9177)

TASK [prometheus : Checking free ports for Prometheus msteams] **************************************************************************************************************

skipping: [compute02] => (item=9095)

skipping: [compute02]

PLAY [Apply role iscsi] *****************************************************************************************************************************************************

TASK [iscsi : include_tasks] ************************************************************************************************************************************************

included: /home/kifarunix/kolla-ansible/share/kolla-ansible/ansible/roles/iscsi/tasks/precheck.yml for compute02

TASK [service-precheck : iscsi | Validate inventory groups] *****************************************************************************************************************

skipping: [compute02] => (item=iscsid)

skipping: [compute02] => (item=tgtd)

skipping: [compute02]

TASK [iscsi : Get container facts] ******************************************************************************************************************************************

ok: [compute02]

TASK [iscsi : Checking free port for iscsi] *********************************************************************************************************************************

ok: [compute02]

TASK [iscsi : Check supported platforms for tgtd] ***************************************************************************************************************************

ok: [compute02] => {

"changed": false,

"msg": "All assertions passed"

}

[WARNING]: Could not match supplied host pattern, ignoring: enable_multipathd_True

PLAY [Apply role multipathd] ************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role rabbitmq] **************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: rabbitmq_restart

PLAY [Restart rabbitmq services] ********************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply rabbitmq post-configuration] ************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_outward_rabbitmq_True

PLAY [Apply role rabbitmq (outward)] ****************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: outward_rabbitmq_restart

PLAY [Restart rabbitmq (outward) services] **********************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply rabbitmq (outward) post-configuration] **************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role etcd] ******************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role keystone] **************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_opensearch_True

PLAY [Apply role opensearch] ************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_swift_True

PLAY [Apply role swift] *****************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_ceph_rgw_True

PLAY [Apply role ceph-rgw] **************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role glance] ****************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_ironic_True

PLAY [Apply role ironic] ****************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role cinder] ****************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role placement] *************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Bootstrap nova API databases] *****************************************************************************************************************************************

skipping: no hosts matched

PLAY [Bootstrap nova cell databases] ****************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role nova] ******************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role nova-cell] *************************************************************************************************************************************************

TASK [nova-cell : include_tasks] ********************************************************************************************************************************************

included: /home/kifarunix/kolla-ansible/share/kolla-ansible/ansible/roles/nova-cell/tasks/precheck.yml for compute02

TASK [service-precheck : nova | Validate inventory groups] ******************************************************************************************************************

skipping: [compute02] => (item=nova-libvirt)

skipping: [compute02] => (item=nova-ssh)

skipping: [compute02] => (item=nova-novncproxy)

skipping: [compute02] => (item=nova-spicehtml5proxy)

skipping: [compute02] => (item=nova-serialproxy)

skipping: [compute02] => (item=nova-conductor)

skipping: [compute02] => (item=nova-compute)

skipping: [compute02] => (item=nova-compute-ironic)

skipping: [compute02]

TASK [nova-cell : Get container facts] **************************************************************************************************************************************

ok: [compute02]

TASK [nova-cell : Checking available compute nodes in inventory] ************************************************************************************************************

skipping: [compute02]

TASK [nova-cell : Checking free port for Nova NoVNC Proxy] ******************************************************************************************************************

skipping: [compute02]

TASK [nova-cell : Checking free port for Nova Serial Proxy] *****************************************************************************************************************

skipping: [compute02]

TASK [nova-cell : Checking free port for Nova Spice HTML5 Proxy] ************************************************************************************************************

skipping: [compute02]

TASK [nova-cell : Checking free port for Nova SSH (API interface)] **********************************************************************************************************

ok: [compute02]

TASK [nova-cell : Checking free port for Nova SSH (migration interface)] ****************************************************************************************************

skipping: [compute02]

TASK [nova-cell : Checking free port for Nova Libvirt] **********************************************************************************************************************

ok: [compute02]

TASK [nova-cell : Checking that host libvirt is not running] ****************************************************************************************************************

ok: [compute02]

TASK [nova-cell : Checking that nova_libvirt container is not running] ******************************************************************************************************

skipping: [compute02]

PLAY [Refresh nova scheduler cell cache] ************************************************************************************************************************************

skipping: no hosts matched

PLAY [Reload global Nova super conductor services] **************************************************************************************************************************

skipping: no hosts matched

PLAY [Reload Nova cell services] ********************************************************************************************************************************************

TASK [nova-cell : Reload nova cell services to remove RPC version cap] ******************************************************************************************************

skipping: [compute02] => (item=nova-conductor)

skipping: [compute02] => (item=nova-compute)

skipping: [compute02] => (item=nova-compute-ironic)

skipping: [compute02] => (item=nova-novncproxy)

skipping: [compute02] => (item=nova-serialproxy)

skipping: [compute02] => (item=nova-spicehtml5proxy)

skipping: [compute02]

PLAY [Reload global Nova API services] **************************************************************************************************************************************

skipping: no hosts matched

PLAY [Run Nova API online data migrations] **********************************************************************************************************************************

skipping: no hosts matched

PLAY [Run Nova cell online data migrations] *********************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role openvswitch] ***********************************************************************************************************************************************

TASK [openvswitch : include_tasks] ******************************************************************************************************************************************

included: /home/kifarunix/kolla-ansible/share/kolla-ansible/ansible/roles/openvswitch/tasks/precheck.yml for compute02

TASK [service-precheck : openvswitch | Validate inventory groups] ***********************************************************************************************************

skipping: [compute02] => (item=openvswitch-db-server)

skipping: [compute02] => (item=openvswitch-vswitchd)

skipping: [compute02]

TASK [openvswitch : Get container facts] ************************************************************************************************************************************

ok: [compute02]

TASK [openvswitch : Checking free port for OVSDB] ***************************************************************************************************************************

ok: [compute02]

[WARNING]: Could not match supplied host pattern, ignoring: enable_openvswitch_True_enable_ovs_dpdk_True

PLAY [Apply role ovs-dpdk] **************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_ovn_True

PLAY [Apply role ovn-controller] ********************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role ovn-db] ****************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role neutron] ***************************************************************************************************************************************************

TASK [neutron : include_tasks] **********************************************************************************************************************************************

included: /home/kifarunix/kolla-ansible/share/kolla-ansible/ansible/roles/neutron/tasks/precheck.yml for compute02

TASK [service-precheck : neutron | Validate inventory groups] ***************************************************************************************************************

skipping: [compute02] => (item=neutron-server)

skipping: [compute02] => (item=neutron-openvswitch-agent)

skipping: [compute02] => (item=neutron-linuxbridge-agent)

skipping: [compute02] => (item=neutron-dhcp-agent)

skipping: [compute02] => (item=neutron-l3-agent)

skipping: [compute02] => (item=neutron-sriov-agent)

skipping: [compute02] => (item=neutron-mlnx-agent)

skipping: [compute02] => (item=neutron-eswitchd)

skipping: [compute02] => (item=neutron-metadata-agent)

skipping: [compute02] => (item=neutron-ovn-metadata-agent)

skipping: [compute02] => (item=neutron-bgp-dragent)

skipping: [compute02] => (item=neutron-infoblox-ipam-agent)

skipping: [compute02] => (item=neutron-metering-agent)

skipping: [compute02] => (item=ironic-neutron-agent)

skipping: [compute02] => (item=neutron-tls-proxy)

skipping: [compute02] => (item=neutron-ovn-agent)

skipping: [compute02]

TASK [neutron : Get container facts] ****************************************************************************************************************************************

ok: [compute02]

TASK [neutron : Checking free port for Neutron Server] **********************************************************************************************************************

skipping: [compute02]

TASK [neutron : Checking number of network agents] **************************************************************************************************************************

skipping: [compute02]

TASK [neutron : Checking tenant network types] ******************************************************************************************************************************

ok: [compute02] => (item=vxlan) => {

"ansible_loop_var": "item",

"changed": false,

"item": "vxlan",

"msg": "All assertions passed"

}

TASK [neutron : Checking whether Ironic enabled] ****************************************************************************************************************************

skipping: [compute02]

TASK [neutron : Get container facts] ****************************************************************************************************************************************

ok: [compute02]

TASK [neutron : Get container volume facts] *********************************************************************************************************************************

ok: [compute02]

TASK [neutron : Check for ML2/OVN presence] *********************************************************************************************************************************

skipping: [compute02]

TASK [neutron : Check for ML2/OVS presence] *********************************************************************************************************************************

skipping: [compute02]

PLAY [Apply role kuryr] *****************************************************************************************************************************************************

TASK [kuryr : include_tasks] ************************************************************************************************************************************************

included: /home/kifarunix/kolla-ansible/share/kolla-ansible/ansible/roles/kuryr/tasks/precheck.yml for compute02

TASK [service-precheck : kuryr | Validate inventory groups] *****************************************************************************************************************

skipping: [compute02] => (item=kuryr)

skipping: [compute02]

TASK [kuryr : Get container facts] ******************************************************************************************************************************************

ok: [compute02]

TASK [kuryr : Checking free port for Kuryr] *********************************************************************************************************************************

ok: [compute02]

[WARNING]: Could not match supplied host pattern, ignoring: enable_hacluster_True

PLAY [Apply role hacluster] *************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role heat] ******************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role horizon] ***************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_murano_True

PLAY [Apply role murano] ****************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_solum_True

PLAY [Apply role solum] *****************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_magnum_True

PLAY [Apply role magnum] ****************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_mistral_True

PLAY [Apply role mistral] ***************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_sahara_True

PLAY [Apply role sahara] ****************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_manila_True

PLAY [Apply role manila] ****************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role gnocchi] ***************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role ceilometer] ************************************************************************************************************************************************

TASK [ceilometer : include_tasks] *******************************************************************************************************************************************

included: /home/kifarunix/kolla-ansible/share/kolla-ansible/ansible/roles/ceilometer/tasks/precheck.yml for compute02

TASK [service-precheck : ceilometer | Validate inventory groups] ************************************************************************************************************

skipping: [compute02] => (item=ceilometer-notification)

skipping: [compute02] => (item=ceilometer-central)

skipping: [compute02] => (item=ceilometer-compute)

skipping: [compute02] => (item=ceilometer-ipmi)

skipping: [compute02]

TASK [ceilometer : Checking gnocchi backend for ceilometer] *****************************************************************************************************************

skipping: [compute02]

PLAY [Apply role aodh] ******************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_barbican_True

PLAY [Apply role barbican] **************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_cyborg_True

PLAY [Apply role cyborg] ****************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_designate_True

PLAY [Apply role designate] *************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_trove_True

PLAY [Apply role trove] *****************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_watcher_True

PLAY [Apply role watcher] ***************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role grafana] ***************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_cloudkitty_True

PLAY [Apply role cloudkitty] ************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_freezer_True

PLAY [Apply role freezer] ***************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_senlin_True

PLAY [Apply role senlin] ****************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_tacker_True

PLAY [Apply role tacker] ****************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_octavia_True

PLAY [Apply role octavia] ***************************************************************************************************************************************************

skipping: no hosts matched

PLAY [Apply role zun] *******************************************************************************************************************************************************

TASK [zun : include_tasks] **************************************************************************************************************************************************

included: /home/kifarunix/kolla-ansible/share/kolla-ansible/ansible/roles/zun/tasks/precheck.yml for compute02

TASK [service-precheck : zun | Validate inventory groups] *******************************************************************************************************************

skipping: [compute02] => (item=zun-api)

skipping: [compute02] => (item=zun-wsproxy)

skipping: [compute02] => (item=zun-compute)

skipping: [compute02] => (item=zun-cni-daemon)

skipping: [compute02]

TASK [zun : Get container facts] ********************************************************************************************************************************************

ok: [compute02]

TASK [zun : Checking free port for Zun API] *********************************************************************************************************************************

skipping: [compute02]

TASK [zun : Checking free port for Zun WSproxy] *****************************************************************************************************************************

skipping: [compute02]

TASK [zun : Checking free port for zun-cni-daemon] **************************************************************************************************************************

ok: [compute02]

TASK [zun : Ensure kuryr enabled for zun] ***********************************************************************************************************************************

ok: [compute02] => {

"changed": false,

"msg": "All assertions passed"

}

[WARNING]: Could not match supplied host pattern, ignoring: enable_vitrage_True

PLAY [Apply role vitrage] ***************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_blazar_True

PLAY [Apply role blazar] ****************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_masakari_True

PLAY [Apply role masakari] **************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_venus_True

PLAY [Apply role venus] *****************************************************************************************************************************************************

skipping: no hosts matched

[WARNING]: Could not match supplied host pattern, ignoring: enable_skyline_True

PLAY [Apply role skyline] ***************************************************************************************************************************************************

skipping: no hosts matched

PLAY RECAP ******************************************************************************************************************************************************************

compute02 : ok=58 changed=0 unreachable=0 failed=0 skipped=47 rescued=0 ignored=0
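Before you pull any images, confirm that the prechecks reported no failed or unreachable tasks for the new node. The sketch below defines a hypothetical helper, `recap_ok`, which is not part of Kolla-Ansible. It parses a single `PLAY RECAP` host line, such as the one above, and succeeds only when both `unreachable` and `failed` are zero.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Kolla-Ansible): succeed only when an
# Ansible PLAY RECAP host line reports zero unreachable and zero failed tasks.
recap_ok() {
  local line="$1" unreachable failed
  # Extract the number after each key; a missing key leaves the variable empty.
  unreachable=$(printf '%s\n' "$line" | grep -o 'unreachable=[0-9]*' | cut -d= -f2)
  failed=$(printf '%s\n' "$line" | grep -o 'failed=[0-9]*' | cut -d= -f2)
  # Treat a missing counter as a failure rather than a pass.
  [ "${unreachable:-1}" -eq 0 ] && [ "${failed:-1}" -eq 0 ]
}

# Recap line copied from the prechecks run above.
recap='compute02 : ok=58 changed=0 unreachable=0 failed=0 skipped=47 rescued=0 ignored=0'

# ${recap%% *} strips everything from the first space, leaving the host name.
if recap_ok "$recap"; then
  echo "prechecks passed for ${recap%% *}"
else
  echo "prechecks reported problems for ${recap%% *}" >&2
fi
```

For example, you could save the prechecks output to a file with `tee`, then pass the `compute02 :` line from its recap to `recap_ok`.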

Deploy Docker Containers for Required Services on the New Node

Next, deploy Docker containers for the required services on the new hosts.
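The pull command in this step uses `--limit compute02`, so Kolla-Ansible acts only on the new node. If you add several nodes at once, Ansible's `--limit` also accepts a comma-separated list of hosts. The sketch below uses a hypothetical helper, `limit_pattern`, to build that list. The host `compute03` is an assumed example. The script only prints the command and does not run it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: join host names into a comma-separated --limit pattern.
limit_pattern() {
  local IFS=,          # "$*" joins arguments with the first character of IFS
  printf '%s\n' "$*"
}

# Hypothetical list of new nodes; compute03 is only an illustration.
new_nodes=(compute02 compute03)
limit="$(limit_pattern "${new_nodes[@]}")"

# Print (do not execute) the pull command for all new nodes at once.
echo "kolla-ansible -i multinode pull --limit $limit"
```

With a single node, the pattern reduces to `compute02`, which matches the command used below.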

To begin with, download the container images onto the new node:

kolla-ansible -i multinode pull --limit compute02

When the command completes, you can list the container images on the node.

You can log in to the node and list the images:

docker images

Or list them with Ansible from the control node:

ansible -i multinode -m raw -a "docker images" compute02

Sample output:

compute02 | CHANGED | rc=0 >>

REPOSITORY TAG IMAGE ID CREATED SIZE

quay.io/openstack.kolla/nova-compute 2023.1-ubuntu-jammy 2db6f9a37454 12 hours ago 1.47GB

quay.io/openstack.kolla/zun-compute 2023.1-ubuntu-jammy 430fc0a3c19a 12 hours ago 1.18GB

quay.io/openstack.kolla/zun-cni-daemon 2023.1-ubuntu-jammy ca8af14052bb 12 hours ago 1.03GB

quay.io/openstack.kolla/neutron-openvswitch-agent 2023.1-ubuntu-jammy af133962e664 12 hours ago 1.04GB

quay.io/openstack.kolla/nova-ssh 2023.1-ubuntu-jammy 82fc2363a0de 12 hours ago 1.11GB

quay.io/openstack.kolla/ceilometer-compute 2023.1-ubuntu-jammy 3abd229b37d0 12 hours ago 902MB

quay.io/openstack.kolla/kuryr-libnetwork 2023.1-ubuntu-jammy 338e95d01488 12 hours ago 933MB

quay.io/openstack.kolla/prometheus-libvirt-exporter 2023.1-ubuntu-jammy 36f33bbe9afb 12 hours ago 999MB

quay.io/openstack.kolla/kolla-toolbox 2023.1-ubuntu-jammy 6ea36670c23a 12 hours ago 817MB

quay.io/openstack.kolla/openvswitch-vswitchd 2023.1-ubuntu-jammy 182b7e1ae59c 12 hours ago 273MB

quay.io/openstack.kolla/prometheus-cadvisor 2023.1-ubuntu-jammy 7d405d5952e0 12 hours ago 293MB

quay.io/openstack.kolla/openvswitch-db-server 2023.1-ubuntu-jammy 95806e1d30c6 12 hours ago 273MB

quay.io/openstack.kolla/prometheus-node-exporter 2023.1-ubuntu-jammy ce3c047a3119 12 hours ago 275MB