In this guide, we’ll walk you through provisioning Kubernetes Persistent Volumes with the CephFS CSI driver. CephFS is a shared file system that allows multiple Pods to mount the same volume simultaneously with read/write access. This is particularly important for applications that rely on shared storage, such as distributed databases and clustered content management systems. By leveraging the CephFS CSI driver, you can dynamically provision and manage Persistent Volumes (PVs) in Kubernetes, ensuring your applications have scalable, consistent, and high-performance storage.


Provisioning Kubernetes Persistent Volumes with CephFS CSI Driver

The Ceph CSI plugin is an implementation of the Container Storage Interface (CSI) standard that allows container orchestration platforms (like Kubernetes) to use Ceph storage (RBD for block storage and CephFS for file storage) as persistent storage for containerized workloads. It acts as a bridge between Kubernetes (or other CSI-enabled platforms) and Ceph, enabling dynamic provisioning, management, and consumption of Ceph storage resources.

The CephFS plugin is able to provision new CephFS volumes and/or attach and mount existing ones to workloads.

Prerequisites

To follow along with this guide, you need a running Ceph cluster and a running Kubernetes cluster.

Deploy Kubernetes Cluster

See the guide below for how to deploy a Kubernetes cluster.

Install and Setup Kubernetes Cluster

For this guide, we are running Kubernetes v1.32.1:

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-rhel-node-ms-01 Ready control-plane 11d v1.32.1

k8s-rhel-node-wk-01 Ready <none> 11d v1.32.1

k8s-rhel-node-wk-02 Ready <none> 11d v1.32.1

k8s-rhel-node-wk-03 Ready <none> 11d v1.32.1

Ceph CSI also requires the Kubernetes API server to allow privileged containers (--allow-privileged=true), because the CephFS node plugin containers run privileged.

Some Kubernetes clusters, such as those deployed with kubeadm, do this by default: the API server already runs with the --allow-privileged flag set to true.

On a control plane node, run the command below to check:

sudo ps aux | grep apiserver | grep privileged

Alternatively, inspect the API server container to find the value of its --allow-privileged flag.
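On kubeadm clusters, the API server runs as a static Pod, so you can also check its manifest directly. The function below is a minimal sketch that assumes the kubeadm default manifest path, /etc/kubernetes/manifests/kube-apiserver.yaml. Pass a different path for other distributions. The function name is our own.

```shell
# Sketch: succeed only if a kube-apiserver manifest sets --allow-privileged=true.
# Assumption: kubeadm-style static Pod manifest at the default path below.
allow_privileged_enabled() {
  local manifest="${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}"
  # Matches lines such as "    - --allow-privileged=true". The "--" stops grep
  # from treating the pattern (which starts with dashes) as an option.
  grep -Eq -- '--allow-privileged=true([[:space:]]|$)' "$manifest"
}
```

Run it as root, since kubeadm manifests are typically readable only by root: `allow_privileged_enabled && echo enabled || echo "not enabled"`.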

Deploy Ceph Cluster

In this tutorial, we are using a standalone Ceph cluster. The link below provides various setup guides.

Install and Setup Ceph Cluster

For this guide, we are using Ceph Reef (version 18):

ceph -v

ceph version 18.2.4 (e7ad5345525c7aa95470c26863873b581076945d) reef (stable)

Ceph status:

ceph -s

cluster:

id: 854884fa-dcd5-11ef-b070-5254000e6c0d

health: HEALTH_OK

services:

mon: 4 daemons, quorum ceph-01,ceph-02,ceph-03,ceph-04 (age 31m)

mgr: ceph-01.ovodyv(active, since 30m), standbys: ceph-02.wbzqmx

osd: 3 osds: 3 up (since 30m), 3 in (since 30m)

data:

pools: 1 pools, 1 pgs

objects: 2 objects, 449 KiB

usage: 83 MiB used, 60 GiB / 60 GiB avail

pgs: 1 active+clean

Create Ceph File System on Ceph Cluster

Before you can provision CephFS volumes for Kubernetes workloads, you need to create a file system on the Ceph cluster. Run the commands in this section on the Ceph admin or monitor node.

Create CephFS Data and Metadata Pools

A Ceph file system requires at least two RADOS pools, one for data and one for metadata:

CephFS needs a metadata pool to store the filesystem’s metadata (structure and information that describes files and directories within the filesystem). This information includes things like:

- File names

- Directory structures

- Permissions

- Timestamps (e.g., creation, modification)

- File attributes (e.g., size, owner)

- File layout (how each file's data is striped across RADOS objects in the data pool)

CephFS uses a Ceph Metadata Server (MDS) to manage these metadata operations.

CephFS also needs a data pool to store the actual file data. This is where the content of the files themselves (i.e., the file blocks) is stored.

To create the CephFS data and metadata pools, refer to our guide, Create Ceph Storage Pools.
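If you only need the two pools for this guide, you can create them as shown in the sketch below. It assumes that the PG autoscaler chooses the placement group count, which is the default on recent releases such as Reef, so no pg_num is passed. The run and create_cephfs_pools helpers are hypothetical names. DRY_RUN=1 prints the commands instead of running them.

```shell
# Sketch: create the data and metadata pools used in this guide.
# Assumes the PG autoscaler sizes the pools, so no pg_num is given.
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

create_cephfs_pools() {
  local data_pool="${1:-cephfs_data}" meta_pool="${2:-cephfs_metadata}"
  run ceph osd pool create "$data_pool"
  run ceph osd pool create "$meta_pool"
}
```

Preview with `DRY_RUN=1 create_cephfs_pools`, then run `create_cephfs_pools` on the Ceph admin node.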

We have created the two pools, cephfs_data and cephfs_metadata.

ceph osd pool ls

...

cephfs_data

cephfs_metadata

To get more details about the pools, run:

ceph osd pool ls detail

Create Filesystem on CephFS Data and Metadata Pools

Once the CephFS pools are created, create a file system on them with the command:

ceph fs new FS_NAME METADATA_POOL DATA_POOL

For example:

ceph fs new kubernetesfs cephfs_metadata cephfs_data

List the file systems:

ceph fs ls

name: kubernetesfs, metadata pool: cephfs_metadata, data pools: [cephfs_data ]

Check the status:

ceph fs status kubernetesfs

Verify that at least one MDS is in the active state for your file system:

kubernetesfs - 0 clients

============

RANK STATE MDS ACTIVITY DNS INOS DIRS CAPS

0 active kubernetesfs.ceph-01.hfvthv Reqs: 0 /s 10 13 12 0

POOL TYPE USED AVAIL

cephfs_metadata metadata 96.0k 18.9G

cephfs_data data 0 18.9G

STANDBY MDS

kubernetesfs.ceph-03.bwcezh

kubernetesfs.ceph-04.hwerww

MDS version: ceph version 18.2.4 (e7ad5345525c7aa95470c26863873b581076945d) reef (stable)

If no MDS is in the active state, as in the status output below,

kubernetesfs - 0 clients

============

POOL TYPE USED AVAIL

cephfs_metadata metadata 0 18.9G

cephfs_data data 0 18.9G

then run the command below to set the maximum number of active MDS instances for the file system:

ceph fs set kubernetesfs max_mds 1

Then, deploy the MDS daemons. The command below places three MDS daemons for the file system. With max_mds set to 1, one becomes active and the other two remain on standby:

ceph orch apply mds kubernetesfs --placement="3"

Confirm the Ceph status:

ceph -s

cluster:

id: 854884fa-dcd5-11ef-b070-5254000e6c0d

health: HEALTH_OK

services:

mon: 4 daemons, quorum ceph-01,ceph-02,ceph-03,ceph-04 (age 3h)

mgr: ceph-01.ovodyv(active, since 3h), standbys: ceph-02.wbzqmx

mds: 1/1 daemons up, 2 standby

osd: 3 osds: 3 up (since 3h), 3 in (since 3h)

data:

volumes: 1/1 healthy

pools: 3 pools, 49 pgs

objects: 24 objects, 451 KiB

usage: 96 MiB used, 60 GiB / 60 GiB avail

pgs: 49 active+clean
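Rather than re-running the status command by hand, you can poll until an MDS reports active. The parser below is a sketch. It assumes the plain-text `ceph fs status` table layout shown earlier (a numeric RANK column followed by STATE), which may change between Ceph releases. The function names are hypothetical.

```shell
# Sketch: wait until "ceph fs status" reports an active MDS rank.
# Assumes the plain-text table layout shown above; it may differ between releases.
has_active_mds() {
  # Reads "ceph fs status" output on stdin. A rank row starts with a numeric
  # RANK followed by STATE; succeed if any such row is "active".
  awk '$1 ~ /^[0-9]+$/ && $2 == "active" { found = 1 } END { exit !found }'
}

wait_for_active_mds() {
  local fs="${1:-kubernetesfs}" tries="${2:-30}" i=0
  while [ "$i" -lt "$tries" ]; do
    if ceph fs status "$fs" | has_active_mds; then
      echo "MDS active for $fs"
      return 0
    fi
    i=$((i + 1))
    sleep 10
  done
  echo "no active MDS for $fs after $tries checks" >&2
  return 1
}
```

For example, `wait_for_active_mds kubernetesfs 30` checks every 10 seconds for up to about five minutes.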

Create Namespace for CephFS

We will deploy the namespaced (non-cluster-wide) CephFS CSI components in a dedicated namespace:

kubectl create ns cephfs-csi

Install CephFS CSI Driver on Kubernetes

The Ceph CSI (Container Storage Interface) driver is a plugin that enables Kubernetes and other container orchestrators to manage and use Ceph storage clusters for dynamic provisioning of block and file storage.

Note that we are using the CephFS Kubernetes manifests from the Ceph CSI GitHub repository.
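You can download the upstream manifests with a small loop like the sketch below. It assumes that the files live under deploy/cephfs/kubernetes/ in the github.com/ceph/ceph-csi repository. The CEPH_CSI_REF variable (our own name) selects the branch or tag. It defaults to devel, the development branch; a release tag is the safer choice for a stable setup. The helper names are hypothetical.

```shell
# Sketch: download the CephFS deployment manifests used in this guide.
# Assumptions: the files live under deploy/cephfs/kubernetes/ in the
# github.com/ceph/ceph-csi repository, and CEPH_CSI_REF selects the branch
# or tag (default "devel"; a release tag is the safer choice).
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

fetch_cephfs_manifests() {
  local ref="${CEPH_CSI_REF:-devel}" base f
  base="https://raw.githubusercontent.com/ceph/ceph-csi/${ref}/deploy/cephfs/kubernetes"
  for f in csidriver.yaml csi-provisioner-rbac.yaml csi-nodeplugin-rbac.yaml \
           csi-cephfsplugin-provisioner.yaml csi-cephfsplugin.yaml csi-config-map.yaml; do
    # -f: fail on HTTP errors, -sS: quiet but show errors, -L: follow redirects,
    # -O: save under the remote file name in the current directory.
    run curl -fsSLO "$base/$f"
  done
}
```

Run `fetch_cephfs_manifests` in an empty working directory, then edit the files as described in the following steps.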

Below is the CSIDriver manifest:

cat csidriver.yaml

---
apiVersion: storage.k8s.io/v1
kind: CSIDriver
metadata:
  name: "cephfs.csi.ceph.com"
spec:
  attachRequired: false
  podInfoOnMount: false
  fsGroupPolicy: File
  seLinuxMount: true

To create the CephFS CSIDriver object, apply the manifest:

kubectl apply -f csidriver.yaml

The CSIDriver is a cluster-scoped object, so you can check it without specifying a namespace:

kubectl get csidriver

NAME ATTACHREQUIRED PODINFOONMOUNT STORAGECAPACITY TOKENREQUESTS REQUIRESREPUBLISH MODES AGE

cephfs.csi.ceph.com false false false false Persistent 8s

csi.tigera.io true true false false Ephemeral 12d

Deploy RBACs for Provisioner and Node Plugins

The CSI provisioner runs as a Kubernetes Deployment. Its external-provisioner sidecar container watches PersistentVolumeClaims and calls the Ceph CSI driver container in the same Pod, which creates, deletes, and manages the volumes on the Ceph cluster.

The node plugin, on the other hand, runs on the nodes and mounts and unmounts volumes from the storage system, making them available to the Pods that consume them.

To handle these interactions, Role-Based Access Control (RBAC) policies are required. The following manifests therefore contain service accounts, roles/cluster roles, and role bindings/cluster role bindings for both the provisioner and the node plugin.

Note that we have changed the namespace from default to our own, cephfs-csi.
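The upstream RBAC manifests use namespace: default. One way to switch them to cephfs-csi is a targeted sed, sketched below. It assumes GNU or BSD sed, both of which accept -E and -i.bak. It rewrites only lines whose value is exactly default. The function name is hypothetical.

```shell
# Sketch: rewrite "namespace: default" to another namespace in a manifest, in place.
# Only lines whose value is exactly "default" are changed; indentation is kept.
# A backup of the original file is left next to it with a .bak suffix.
set_manifest_namespace() {
  local file="$1" ns="${2:-cephfs-csi}"
  sed -i.bak -E "s/^([[:space:]]*namespace:)[[:space:]]*default[[:space:]]*$/\1 ${ns}/" "$file"
}
```

For example: `for f in csi-provisioner-rbac.yaml csi-nodeplugin-rbac.yaml; do set_manifest_namespace "$f" cephfs-csi; done`.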

cat csi-provisioner-rbac.yaml

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cephfs-csi-provisioner
  namespace: cephfs-csi

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cephfs-external-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "update", "delete", "patch"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims/status"]
    verbs: ["update", "patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshots"]
    verbs: ["get", "list", "watch", "update", "patch", "create"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshots/status"]
    verbs: ["get", "list", "patch"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshotcontents"]
    verbs: ["get", "list", "watch", "update", "patch", "create"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshotclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["csinodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshotcontents/status"]
    verbs: ["update", "patch"]
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["serviceaccounts"]
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["serviceaccounts/token"]
    verbs: ["create"]
  - apiGroups: ["groupsnapshot.storage.k8s.io"]
    resources: ["volumegroupsnapshotclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["groupsnapshot.storage.k8s.io"]
    resources: ["volumegroupsnapshotcontents"]
    verbs: ["get", "list", "watch", "update", "patch"]
  - apiGroups: ["groupsnapshot.storage.k8s.io"]
    resources: ["volumegroupsnapshotcontents/status"]
    verbs: ["update", "patch"]

---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cephfs-csi-provisioner-role
subjects:
  - kind: ServiceAccount
    name: cephfs-csi-provisioner
    namespace: cephfs-csi
roleRef:
  kind: ClusterRole
  name: cephfs-external-provisioner-runner
  apiGroup: rbac.authorization.k8s.io

---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  # replace with non-default namespace name
  namespace: cephfs-csi
  name: cephfs-external-provisioner-cfg
rules:
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["coordination.k8s.io"]
    resources: ["leases"]
    verbs: ["get", "watch", "list", "delete", "update", "create"]

---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cephfs-csi-provisioner-role-cfg
  # replace with non-default namespace name
  namespace: cephfs-csi
subjects:
  - kind: ServiceAccount
    name: cephfs-csi-provisioner
    # replace with non-default namespace name
    namespace: cephfs-csi
roleRef:
  kind: Role
  name: cephfs-external-provisioner-cfg
  apiGroup: rbac.authorization.k8s.io

cat csi-nodeplugin-rbac.yaml

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cephfs-csi-nodeplugin
  namespace: cephfs-csi

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cephfs-csi-nodeplugin
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["serviceaccounts"]
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["serviceaccounts/token"]
    verbs: ["create"]

---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cephfs-csi-nodeplugin
subjects:
  - kind: ServiceAccount
    name: cephfs-csi-nodeplugin
    # replace with non-default namespace name
    namespace: cephfs-csi
roleRef:
  kind: ClusterRole
  name: cephfs-csi-nodeplugin
  apiGroup: rbac.authorization.k8s.io
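After you apply both RBAC manifests, as shown next, you can go beyond listing the objects. Using kubectl auth can-i with impersonation, you can ask the API server whether the provisioner service account actually holds key permissions. This is a sketch. It assumes your own kubeconfig user is allowed to impersonate service accounts, as cluster admins are. The helper names are hypothetical.

```shell
# Sketch: check a few permissions of the provisioner service account by impersonation.
# Assumes your kubeconfig user may impersonate service accounts.
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

check_provisioner_rbac() {
  local ns="${1:-cephfs-csi}" sa="${2:-cephfs-csi-provisioner}" who
  who="system:serviceaccount:${ns}:${sa}"
  # Rules granted by the ClusterRole; each command prints "yes" or "no".
  run kubectl auth can-i create persistentvolumes --as="$who"
  run kubectl auth can-i get secrets --as="$who"
  # Namespaced rule granted by the Role (leader election uses Leases).
  run kubectl auth can-i create leases.coordination.k8s.io -n "$ns" --as="$who"
}
```

Each line of output from `check_provisioner_rbac` should read yes.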

Apply the manifests to create the service accounts and roles/bindings:

kubectl apply -f csi-provisioner-rbac.yaml

kubectl apply -f csi-nodeplugin-rbac.yaml

You can then verify them using the command:

kubectl get serviceaccount,role,clusterrole,rolebinding,clusterrolebinding -A | grep ceph

Deploy CSI Provisioner sidecar containers

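Deploy the CephFS provisioner Deployment. Besides the Ceph CSI driver container (csi-cephfsplugin), each provisioner Pod runs four more containers:

- the external-provisioner sidecar (csi-provisioner), which creates and deletes volumes through the CSI endpoint
- the external-resizer (csi-resizer), which handles volume expansion
- the external-snapshotter (csi-snapshotter), which handles volume snapshots
- a liveness-prometheus container that exposes liveness metrics

No external-attacher is needed, because the CSIDriver object sets attachRequired: false.

The two upstream manifests used in this step and the next are csi-cephfsplugin-provisioner.yaml (the provisioner) and csi-cephfsplugin.yaml (the node plugin).

Provisioner manifest (note that we have added the namespace parameter):

cat csi-cephfsplugin-provisioner.yaml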

---
kind: Service
apiVersion: v1
metadata:
  name: csi-cephfsplugin-provisioner
  namespace: cephfs-csi
  labels:
    app: csi-metrics
spec:
  selector:
    app: csi-cephfsplugin-provisioner
  ports:
    - name: http-metrics
      port: 8080
      protocol: TCP
      targetPort: 8681

---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: csi-cephfsplugin-provisioner
  namespace: cephfs-csi
spec:
  selector:
    matchLabels:
      app: csi-cephfsplugin-provisioner
  replicas: 3
  template:
    metadata:
      labels:
        app: csi-cephfsplugin-provisioner
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: app
                    operator: In
                    values:
                      - csi-cephfsplugin-provisioner
              topologyKey: "kubernetes.io/hostname"
      serviceAccountName: cephfs-csi-provisioner
      priorityClassName: system-cluster-critical
      containers:
        - name: csi-cephfsplugin
          # for stable functionality replace canary with latest release version
          image: quay.io/cephcsi/cephcsi:canary
          args:
            - "--nodeid=$(NODE_ID)"
            - "--type=cephfs"
            - "--controllerserver=true"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--v=5"
            - "--drivername=cephfs.csi.ceph.com"
            - "--pidlimit=-1"
            - "--enableprofiling=false"
            - "--setmetadata=true"
          env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: NODE_ID
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: CSI_ENDPOINT
              value: unix:///csi/csi-provisioner.sock
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            # - name: KMS_CONFIGMAP_NAME
            #   value: encryptionConfig
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
            - name: host-sys
              mountPath: /sys
            - name: lib-modules
              mountPath: /lib/modules
              readOnly: true
            - name: host-dev
              mountPath: /dev
            - name: ceph-config
              mountPath: /etc/ceph/
            - name: ceph-csi-config
              mountPath: /etc/ceph-csi-config/
            - name: keys-tmp-dir
              mountPath: /tmp/csi/keys
        - name: csi-provisioner
          image: registry.k8s.io/sig-storage/csi-provisioner:v5.1.0
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=1"
            - "--timeout=150s"
            - "--leader-election=true"
            - "--retry-interval-start=500ms"
            - "--feature-gates=HonorPVReclaimPolicy=true"
            - "--prevent-volume-mode-conversion=true"
            - "--extra-create-metadata=true"
            - "--http-endpoint=$(POD_IP):8090"
          env:
            - name: ADDRESS
              value: unix:///csi/csi-provisioner.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          imagePullPolicy: "IfNotPresent"
          ports:
            - containerPort: 8090
              name: http-endpoint
              protocol: TCP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-resizer
          image: registry.k8s.io/sig-storage/csi-resizer:v1.13.1
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=1"
            - "--timeout=150s"
            - "--leader-election"
            - "--retry-interval-start=500ms"
            - "--handle-volume-inuse-error=false"
            - "--feature-gates=RecoverVolumeExpansionFailure=true"
            - "--http-endpoint=$(POD_IP):8091"
          env:
            - name: ADDRESS
              value: unix:///csi/csi-provisioner.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          imagePullPolicy: "IfNotPresent"
          ports:
            - containerPort: 8091
              name: http-endpoint
              protocol: TCP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-snapshotter
          image: registry.k8s.io/sig-storage/csi-snapshotter:v8.2.0
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=1"
            - "--timeout=150s"
            - "--leader-election=true"
            - "--extra-create-metadata=true"
            - "--feature-gates=CSIVolumeGroupSnapshot=true"
            - "--http-endpoint=$(POD_IP):8092"
          env:
            - name: ADDRESS
              value: unix:///csi/csi-provisioner.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          imagePullPolicy: "IfNotPresent"
          ports:
            - containerPort: 8092
              name: http-endpoint
              protocol: TCP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: liveness-prometheus
          image: quay.io/cephcsi/cephcsi:canary
          args:
            - "--type=liveness"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--metricsport=8681"
            - "--metricspath=/metrics"
            - "--polltime=60s"
            - "--timeout=3s"
          env:
            - name: CSI_ENDPOINT
              value: unix:///csi/csi-provisioner.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          ports:
            - containerPort: 8681
              name: http-metrics
              protocol: TCP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
          imagePullPolicy: "IfNotPresent"
      volumes:
        - name: socket-dir
          emptyDir: {
            medium: "Memory"
          }
        - name: host-sys
          hostPath:
            path: /sys
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: host-dev
          hostPath:
            path: /dev
        - name: ceph-config
          configMap:
            name: ceph-config
        - name: ceph-csi-config
          configMap:
            name: ceph-csi-config
        - name: keys-tmp-dir
          emptyDir: {
            medium: "Memory"
          }

Deploy it:

kubectl apply -f csi-cephfsplugin-provisioner.yaml

Check the service and deployment:

kubectl get svc,deployment -n cephfs-csi

Deploy CSI node-driver-registrar and CephFS driver

The CSI node-driver-registrar is a sidecar container that fetches driver information (using NodeGetInfo) from a CSI endpoint and registers it with the kubelet on a node. The node-driver-registrar and the CephFS node plugin run in the same Pod, which is deployed as a DaemonSet so that a copy runs on every eligible node.

cat csi-cephfsplugin.yaml

---
kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: csi-cephfsplugin
  namespace: cephfs-csi
spec:
  selector:
    matchLabels:
      app: csi-cephfsplugin
  template:
    metadata:
      labels:
        app: csi-cephfsplugin
    spec:
      serviceAccountName: cephfs-csi-nodeplugin
      priorityClassName: system-node-critical
      hostNetwork: true
      hostPID: true
      # to use e.g. Rook orchestrated cluster, and mons' FQDN is
      # resolved through k8s service, set dns policy to cluster first
      dnsPolicy: ClusterFirstWithHostNet
      containers:
        - name: csi-cephfsplugin
          securityContext:
            privileged: true
            capabilities:
              add: ["SYS_ADMIN"]
            allowPrivilegeEscalation: true
          # for stable functionality replace canary with latest release version
          image: quay.io/cephcsi/cephcsi:canary
          args:
            - "--nodeid=$(NODE_ID)"
            - "--type=cephfs"
            - "--nodeserver=true"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--v=5"
            - "--drivername=cephfs.csi.ceph.com"
            - "--enableprofiling=false"
            # If topology based provisioning is desired, configure required
            # node labels representing the nodes topology domain
            # and pass the label names below, for CSI to consume and advertise
            # its equivalent topology domain
            # - "--domainlabels=failure-domain/region,failure-domain/zone"
            #
            # Options to enable read affinity.
            # If enabled Ceph CSI will fetch labels from kubernetes node and
            # pass `read_from_replica=localize,crush_location=type:value` during
            # CephFS mount command. refer:
            # https://docs.ceph.com/en/latest/man/8/rbd/#kernel-rbd-krbd-options
            # for more details.
            # - "--enable-read-affinity=true"
            # - "--crush-location-labels=topology.io/zone,topology.io/rack"
          env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: NODE_ID
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: CSI_ENDPOINT
              value: unix:///csi/csi.sock
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            # - name: KMS_CONFIGMAP_NAME
            #   value: encryptionConfig
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
            - name: mountpoint-dir
              mountPath: /var/lib/kubelet/pods
              mountPropagation: Bidirectional
            - name: plugin-dir
              mountPath: /var/lib/kubelet/plugins
              mountPropagation: "Bidirectional"
            - name: host-sys
              mountPath: /sys
            - name: etc-selinux
              mountPath: /etc/selinux
              readOnly: true
            - name: lib-modules
              mountPath: /lib/modules
              readOnly: true
            - name: host-dev
              mountPath: /dev
            - name: host-mount
              mountPath: /run/mount
            - name: ceph-config
              mountPath: /etc/ceph/
            - name: ceph-csi-config
              mountPath: /etc/ceph-csi-config/
            - name: keys-tmp-dir
              mountPath: /tmp/csi/keys
            - name: ceph-csi-mountinfo
              mountPath: /csi/mountinfo
        - name: driver-registrar
          # This is necessary only for systems with SELinux, where
          # non-privileged sidecar containers cannot access unix domain socket
          # created by privileged CSI driver container.
          securityContext:
            privileged: true
            allowPrivilegeEscalation: true
          image: registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.13.0
          args:
            - "--v=1"
            - "--csi-address=/csi/csi.sock"
            - "--kubelet-registration-path=/var/lib/kubelet/plugins/cephfs.csi.ceph.com/csi.sock"
          env:
            - name: KUBE_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
            - name: registration-dir
              mountPath: /registration
        - name: liveness-prometheus
          securityContext:
            privileged: true
            allowPrivilegeEscalation: true
          image: quay.io/cephcsi/cephcsi:canary
          args:
            - "--type=liveness"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--metricsport=8681"
            - "--metricspath=/metrics"
            - "--polltime=60s"
            - "--timeout=3s"
          env:
            - name: CSI_ENDPOINT
              value: unix:///csi/csi.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
          imagePullPolicy: "IfNotPresent"
      volumes:
        - name: socket-dir
          hostPath:
            path: /var/lib/kubelet/plugins/cephfs.csi.ceph.com/
            type: DirectoryOrCreate
        - name: registration-dir
          hostPath:
            path: /var/lib/kubelet/plugins_registry/
            type: Directory
        - name: mountpoint-dir
          hostPath:
            path: /var/lib/kubelet/pods
            type: DirectoryOrCreate
        - name: plugin-dir
          hostPath:
            path: /var/lib/kubelet/plugins
            type: Directory
        - name: host-sys
          hostPath:
            path: /sys
        - name: etc-selinux
          hostPath:
            path: /etc/selinux
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: host-dev
          hostPath:
            path: /dev
        - name: host-mount
          hostPath:
            path: /run/mount
        - name: ceph-config
          configMap:
            name: ceph-config
        - name: ceph-csi-config
          configMap:
            name: ceph-csi-config
        - name: keys-tmp-dir
          emptyDir: {
            medium: "Memory"
          }
        - name: ceph-csi-mountinfo
          hostPath:
            path: /var/lib/kubelet/plugins/cephfs.csi.ceph.com/mountinfo
            type: DirectoryOrCreate

---
# This is a service to expose the liveness metrics
apiVersion: v1
kind: Service
metadata:
  name: csi-metrics-cephfsplugin
  namespace: cephfs-csi
  labels:
    app: csi-metrics
spec:
  ports:
    - name: http-metrics
      port: 8080
      protocol: TCP
      targetPort: 8681
  selector:
    app: csi-cephfsplugin

Deploy it:

kubectl apply -f csi-cephfsplugin.yaml

Confirm:

kubectl get daemonset,svc -n cephfs-csi

Create Ceph CSI Configuration ConfigMap

Create a ConfigMap to store the Ceph cluster details. The Ceph CSI ConfigMap manifest has the following format:

apiVersion: v1
kind: ConfigMap
metadata:
  name: ceph-csi-config
  namespace: <namespace>
data:
  config.json: |-
    [
      {
        "clusterID": "<ceph-cluster-id>",
        "monitors": [
          "<ceph-monitor-1>:6789",
          "<ceph-monitor-2>:6789",
          "<ceph-monitor-3>:6789"
        ]
      }
    ]

Replace:

- <namespace> with the namespace of the CSI components (cephfs-csi in this guide).
- <ceph-cluster-id> with your Ceph cluster ID (run ceph fsid to get it).
- <ceph-monitor-1>, <ceph-monitor-2>, etc., with the IP addresses or hostnames of your Ceph monitors (run ceph mon dump to get them).
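You can also generate the monitors list from the cluster itself. The sketch below assumes that ceph mon dump prints messenger v1 addresses in the form v1:IP:6789/0, as recent releases do. It handles IPv4 addresses only. The function names are hypothetical.

```shell
# Sketch: build the config.json array from "ceph mon dump" and "ceph fsid".
# Assumes msgr v1 addresses appear as "v1:IP:PORT/0" (IPv4 only).
mon_v1_addresses() {
  # Reads "ceph mon dump" output on stdin; prints one IP:PORT per monitor.
  grep -Eo 'v1:[0-9.]+:[0-9]+' | sed 's/^v1://'
}

render_csi_config_json() {
  # $1 = cluster ID (fsid); monitor addresses are read from stdin, one per line.
  local fsid="$1" first=1 addr
  printf '[\n  {\n    "clusterID": "%s",\n    "monitors": [\n' "$fsid"
  while IFS= read -r addr; do
    [ -n "$addr" ] || continue
    # Separate entries with a comma; no trailing comma after the last one.
    if [ "$first" -eq 1 ]; then first=0; else printf ',\n'; fi
    printf '      "%s"' "$addr"
  done
  printf '\n    ]\n  }\n]\n'
}
```

On the Ceph admin node, run `ceph mon dump | mon_v1_addresses | render_csi_config_json "$(ceph fsid)"`. Paste the result under config.json, and add the cephFS subvolumeGroup key if you use one.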

Here is our updated manifest. Besides the cluster ID and monitors, it sets a custom CephFS subvolume group, k8s:

cat csi-config-map.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: ceph-csi-config
  namespace: cephfs-csi
data:
  config.json: |-
    [
      {
        "clusterID": "854884fa-dcd5-11ef-b070-5254000e6c0d",
        "monitors": [
          "192.168.122.152:6789",
          "192.168.122.230:6789",
          "192.168.122.166:6789",
          "192.168.122.175:6789"
        ],
        "cephFS": {
          "subvolumeGroup": "k8s"
        }
      }
    ]

Apply it:

kubectl apply -f csi-config-map.yaml

Check:

kubectl get cm -n cephfs-csi

Deploy Ceph Configuration ConfigMap for CSI pods

The Ceph configuration ConfigMap provides a ceph.conf file, including the cephx authentication settings, to the CSI pods. Here is the manifest:

cat ceph-conf.yaml

---
apiVersion: v1
kind: ConfigMap
data:
  ceph.conf: |
    [global]
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
  # keyring is a required key and its value should be empty
  keyring: |
metadata:
  name: ceph-config
  namespace: cephfs-csi

Apply the manifest to create the Ceph configuration ConfigMap:

kubectl apply -f ceph-conf.yaml

Create User Credentials for Provisioning Volumes on Ceph

On the Ceph admin node, create the CephFS client credentials that Ceph CSI will use to provision volumes and mount the file system for Kubernetes pods. For example, you can create a user with the following capabilities:

ceph auth get-or-create client.cephfs \
  mgr "allow rw" \
  mds "allow rw fsname=kubernetesfs path=/volumes, allow rw fsname=kubernetesfs path=/volumes/csi" \
  mon "allow r fsname=kubernetesfs" \
  osd "allow rw tag cephfs data=kubernetesfs, allow rw tag cephfs metadata=kubernetesfs"

Read more on capabilities of Ceph-CSI.

Next, store these credentials in a Kubernetes Secret. Our secret file looks like this:

cat secret.yaml

---
apiVersion: v1
kind: Secret
metadata:
  name: csi-cephfs-secret
  namespace: cephfs-csi
stringData:
  # Required for statically and dynamically provisioned volumes
  # The userID must not include the "client." prefix!
  userID: cephfs
  userKey: AQA10Zxn32oBCxAAQLqLmNfYVVzMEg55FYXO7Q==
  adminID: cephfs
  adminKey: AQA10Zxn32oBCxAAQLqLmNfYVVzMEg55FYXO7Q==
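Instead of pasting the key by hand, you can have kubectl generate the Secret manifest. This sketch assumes, as in the manifest above, that the single client.cephfs user fills both the user and admin entries. kubectl writes the values base64-encoded under data rather than stringData. The helper names are hypothetical. DRY_RUN=1 prints the command instead of running it.

```shell
# Sketch: generate secret.yaml from a Ceph key with kubectl (client-side only).
# Assumes one Ceph user (default "cephfs", without the "client." prefix)
# serves as both user and admin, as in the manifest above.
# Set DRY_RUN=1 to print the command instead of running it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

write_cephfs_secret() {
  local key="$1" ns="${2:-cephfs-csi}" user="${3:-cephfs}"
  run kubectl create secret generic csi-cephfs-secret -n "$ns" \
    --from-literal=userID="$user" --from-literal=userKey="$key" \
    --from-literal=adminID="$user" --from-literal=adminKey="$key" \
    --dry-run=client -o yaml
}
```

Where both the ceph and kubectl CLIs are available, run `write_cephfs_secret "$(ceph auth get-key client.cephfs)" > secret.yaml`.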

You can get the user’s key with:

ceph auth get-key client.cephfs; echo

Update the file accordingly and apply it:

kubectl apply -f secret.yaml

Create StorageClass for CephFS

Create a StorageClass to dynamically provision CephFS volumes.

Here is the sample StorageClass template from the Ceph CSI Git repository:

cat storageclass.yaml

---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-cephfs-sc
provisioner: cephfs.csi.ceph.com
parameters:
  # (required) String representing a Ceph cluster to provision storage from.
  # Should be unique across all Ceph clusters in use for provisioning,
  # cannot be greater than 36 bytes in length, and should remain immutable for
  # the lifetime of the StorageClass in use.
  # Ensure to create an entry in the configmap named ceph-csi-config, based on
  # csi-config-map-sample.yaml, to accompany the string chosen to
  # represent the Ceph cluster in clusterID below
  clusterID: <cluster-id>

  # (required) CephFS filesystem name into which the volume shall be created
  # eg: fsName: myfs
  fsName: <cephfs-name>

  # (optional) Ceph pool into which volume data shall be stored
  # pool: <cephfs-data-pool>

  # (optional) Comma separated string of Ceph-fuse mount options.
  # For eg:
  # fuseMountOptions: debug

  # (optional) Comma separated string of Cephfs kernel mount options.
  # Check man mount.ceph for mount options. For eg:
  # kernelMountOptions: readdir_max_bytes=1048576,norbytes

  # The secrets have to contain user and/or Ceph admin credentials.
  csi.storage.k8s.io/provisioner-secret-name: csi-cephfs-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/controller-expand-secret-name: csi-cephfs-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-cephfs-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default

  # (optional) The driver can use either ceph-fuse (fuse) or
  # ceph kernelclient (kernel).
  # If omitted, default volume mounter will be used - this is
  # determined by probing for ceph-fuse and mount.ceph
  # mounter: kernel

  # (optional) Prefix to use for naming subvolumes.
  # If omitted, defaults to "csi-vol-".
  # volumeNamePrefix: "foo-bar-"

  # (optional) Boolean value. The PVC shall be backed by the CephFS snapshot
  # specified in its data source. `pool` parameter must not be specified.
  # (defaults to `true`)
  # backingSnapshot: "false"

  # (optional) Instruct the plugin it has to encrypt the volume
  # By default it is disabled. Valid values are "true" or "false".
  # A string is expected here, i.e. "true", not true.
  # encrypted: "true"

  # (optional) Use external key management system for encryption passphrases by
  # specifying a unique ID matching KMS ConfigMap. The ID is only used for
  # correlation to configmap entry.
  # encryptionKMSID: <kms-config-id>

reclaimPolicy: Delete
allowVolumeExpansion: true
# mountOptions:
#   - context="system_u:object_r:container_file_t:s0:c0,c1"

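So, you need to replace:

- <cluster-id> with your Ceph cluster ID (ceph fsid).
- <cephfs-name> with the name of your CephFS file system (ceph fs ls).
- <cephfs-data-pool> with the name of the data pool, if you set the optional pool parameter.
- default in the *-secret-namespace parameters with the namespace that holds the secret (cephfs-csi in this guide).

Adjust the remaining optional parameters as needed.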

This is our updated StorageClass manifest:

cat storageclass.yaml

---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-cephfs-sc
provisioner: cephfs.csi.ceph.com
parameters:
  clusterID: 854884fa-dcd5-11ef-b070-5254000e6c0d
  fsName: kubernetesfs
  pool: cephfs_data
  csi.storage.k8s.io/provisioner-secret-name: csi-cephfs-secret
  csi.storage.k8s.io/provisioner-secret-namespace: cephfs-csi
  csi.storage.k8s.io/controller-expand-secret-name: csi-cephfs-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: cephfs-csi
  csi.storage.k8s.io/node-stage-secret-name: csi-cephfs-secret
  csi.storage.k8s.io/node-stage-secret-namespace: cephfs-csi
reclaimPolicy: Delete
allowVolumeExpansion: true

Create the StorageClass on Kubernetes:

kubectl apply -f storageclass.yaml

Create PersistentVolumeClaim (PVC)

Create a PVC to request storage from the CephFS StorageClass.

Note that one of the breaking changes in the Ceph CSI v3.10.0 release is that Ceph CSI no longer creates the subvolume group automatically before creating a subvolume. You therefore need to create the specified (or default, csi) subvolume group before provisioning a CephFS PVC on a new Ceph cluster. Otherwise, the PVC stays Pending, Pods that use it cannot be scheduled, and you may see errors such as: failed to provision volume with StorageClass "csi-cephfs-sc": rpc error: code = Internal desc = rados: ret=-2, No such file or directory: "subvolume group 'csi' does not exist"

If you check our csi-config-map.yaml file above, you will see that we defined our custom subvolumegroup as k8s.

Therefore, before proceeding, create the subvolume group on the Ceph admin node.
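If you script this step, you may want to create the group only when it is missing, as in the sketch below. The check assumes that ceph fs subvolumegroup ls prints a JSON list of objects with a name field, and that the group name contains no regular-expression metacharacters. The function names are hypothetical.

```shell
# Sketch: create a CephFS subvolume group only if it is not already listed.
# Assumes "ceph fs subvolumegroup ls" prints JSON such as [{"name": "k8s"}].
subvolumegroup_listed() {
  # $1 = group name; reads the JSON listing on stdin. The closing quote in the
  # pattern prevents "k8s" from matching a longer name like "k8s-old".
  grep -Eq "\"name\":[[:space:]]*\"$1\""
}

ensure_subvolumegroup() {
  local fs="${1:-kubernetesfs}" group="${2:-k8s}"
  if ceph fs subvolumegroup ls "$fs" | subvolumegroup_listed "$group"; then
    echo "subvolume group $group already exists in $fs"
  else
    ceph fs subvolumegroup create "$fs" "$group"
  fi
}
```

For this guide, that would be `ensure_subvolumegroup kubernetesfs k8s`.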

The syntax is:

ceph fs subvolumegroup create FS_NAME SUBVOLUME_GROUP_NAME

For example:

ceph fs subvolumegroup create kubernetesfs k8s

You can list the subvolume groups to confirm:

ceph fs subvolumegroup ls kubernetesfs

Once you have created the subvolume group, create a PVC:

cat cephfs-pvc.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: csi-cephfs-pvc
  namespace: cephfs-csi
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
  storageClassName: csi-cephfs-sc

Apply the PVC:

kubectl apply -f cephfs-pvc.yaml

Verify CephFS CSI Resources

We deployed the CephFS CSI resources in the cephfs-csi namespace, so you can list all resources in it to check:

kubectl get all -n cephfs-csi

NAME READY STATUS RESTARTS AGE

pod/csi-cephfsplugin-lvghf 3/3 Running 0 1h18m

pod/csi-cephfsplugin-nqw7g 3/3 Running 0 1h18m

pod/csi-cephfsplugin-provisioner-7d4dbddd67-8c9z2 5/5 Running 0 1h18m

pod/csi-cephfsplugin-provisioner-7d4dbddd67-9xcz4 5/5 Running 4 (1h1m ago) 1h18m

pod/csi-cephfsplugin-provisioner-7d4dbddd67-cvqnv 5/5 Running 0 1h18m

pod/csi-cephfsplugin-zgsst 3/3 Running 0 1h18m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/csi-cephfsplugin-provisioner ClusterIP 10.101.222.71 <none> 8080/TCP 1h18m

service/csi-metrics-cephfsplugin ClusterIP 10.105.238.255 <none> 8080/TCP 1h18m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/csi-cephfsplugin 3 3 3 3 3 <none> 1h18m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/csi-cephfsplugin-provisioner 3/3 3 3 1h18m

NAME DESIRED CURRENT READY AGE

replicaset.apps/csi-cephfsplugin-provisioner-7d4dbddd67 3 3 3 1h18m

```bash
kubectl get sc,pvc,secret,csidriver -n cephfs-csi
```

```
NAME                                        PROVISIONER                    RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
storageclass.storage.k8s.io/csi-cephfs-sc   cephfs.csi.ceph.com            Delete          Immediate              true                   16m
storageclass.storage.k8s.io/local-storage   kubernetes.io/no-provisioner   Delete          WaitForFirstConsumer   false                  8d

NAME                                   STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS    VOLUMEATTRIBUTESCLASS   AGE
persistentvolumeclaim/csi-cephfs-pvc   Pending                                      csi-cephfs-sc   <unset>                 4m20s

NAME                       TYPE     DATA   AGE
secret/csi-cephfs-secret   Opaque   4      1h22m

NAME                                           ATTACHREQUIRED   PODINFOONMOUNT   STORAGECAPACITY   TOKENREQUESTS   REQUIRESREPUBLISH   MODES        AGE
csidriver.storage.k8s.io/cephfs.csi.ceph.com   false            false            false             <unset>         false               Persistent   1h22m
csidriver.storage.k8s.io/csi.tigera.io         true             true             false             <unset>         false               Ephemeral    12d
```
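The claim above is still Pending. Rather than re-running `kubectl get` by hand, you can poll its phase. The hypothetical `wait_for_pvc_bound` helper below is a minimal sketch. It reads `.status.phase` with a JSONPath query every 5 seconds. On timeout, it prints the `kubectl describe pvc` output, whose Events section usually names the provisioning error, such as the missing subvolumegroup error described earlier.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a PVC until it reports phase "Bound".
# Usage: wait_for_pvc_bound NAMESPACE PVC [TIMEOUT_SECONDS]
wait_for_pvc_bound() {
  local ns="$1" pvc="$2" timeout="${3:-120}" interval=5 waited=0 phase=""
  while (( waited < timeout )); do
    # For a PVC, .status.phase is Pending, Bound or Lost.
    phase=$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.status.phase}')
    if [[ "$phase" == "Bound" ]]; then
      echo "PVC $ns/$pvc is Bound"
      return 0
    fi
    sleep "$interval"
    (( waited += interval ))
  done
  echo "PVC $ns/$pvc is still '${phase:-unknown}' after ${timeout}s" >&2
  # The Events section of describe usually names the provisioning error.
  kubectl describe pvc "$pvc" -n "$ns" >&2
  return 1
}
```

For example, run `wait_for_pvc_bound cephfs-csi csi-cephfs-pvc 180`. On kubectl v1.23 and later, `kubectl wait --for=jsonpath='{.status.phase}'=Bound pvc/csi-cephfs-pvc -n cephfs-csi --timeout=180s` achieves the same thing without a custom loop.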

Mount CephFS in a Pod

Create a Pod that uses the dynamically provisioned CephFS volume. Here, we use a basic web server Pod running an Nginx container.

Sample manifest for our Pod:

```bash
cat cepfs-csi-pod.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: csi-cephfs-demo-pod
  namespace: cephfs-csi
spec:
  containers:
    - name: web-server
      image: docker.io/library/nginx:latest
      volumeMounts:
        - name: cephfspvc
          mountPath: /usr/share/nginx/html
      ports:
        - containerPort: 80
  volumes:
    - name: cephfspvc
      persistentVolumeClaim:
        claimName: csi-cephfs-pvc
        readOnly: false
```

```bash
kubectl apply -f cepfs-csi-pod.yaml
```

Watch the Pods as they are created:

```bash
kubectl get pods -n cephfs-csi -w
```

Or watch the events:

```bash
kubectl get events -n cephfs-csi -w
```

Sample events:

```
...
2s   Normal   Scheduled   pod/csi-cephfs-demo-pod   Successfully assigned cephfs-csi/csi-cephfs-demo-pod to k8s-rhel-node-wk-03
2s   Normal   Pulling     pod/csi-cephfs-demo-pod   Pulling image "docker.io/library/nginx:latest"
1s   Normal   Pulled      pod/csi-cephfs-demo-pod   Successfully pulled image "docker.io/library/nginx:latest" in 852ms (852ms including waiting). Image size: 72080558 bytes.
1s   Normal   Created     pod/csi-cephfs-demo-pod   Created container: web-server
1s   Normal   Started     pod/csi-cephfs-demo-pod   Started container web-server
...
```

Check the Pods:

```bash
kubectl get pods -n cephfs-csi
```

```
NAME                                            READY   STATUS    RESTARTS        AGE
csi-cephfs-demo-pod                             1/1     Running   0               1m
csi-cephfsplugin-lvghf                          3/3     Running   0               2h15m
csi-cephfsplugin-nqw7g                          3/3     Running   0               2h15m
csi-cephfsplugin-provisioner-7d4dbddd67-8c9z2   5/5     Running   0               2h15m
csi-cephfsplugin-provisioner-7d4dbddd67-9xcz4   5/5     Running   4 (6h58m ago)   2h15m
csi-cephfsplugin-provisioner-7d4dbddd67-cvqnv   5/5     Running   0               2h15m
csi-cephfsplugin-zgsst                          3/3     Running   0               2h15m
```

The pod is running fine.

The PVC should also be Bound!

```bash
kubectl get pvc -n cephfs-csi
```

```
NAME             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS    VOLUMEATTRIBUTESCLASS   AGE
csi-cephfs-pvc   Bound    pvc-a1c041ec-b657-419f-a4c6-0d0733e23b53   10Gi       RWX            csi-cephfs-sc                           28m
```

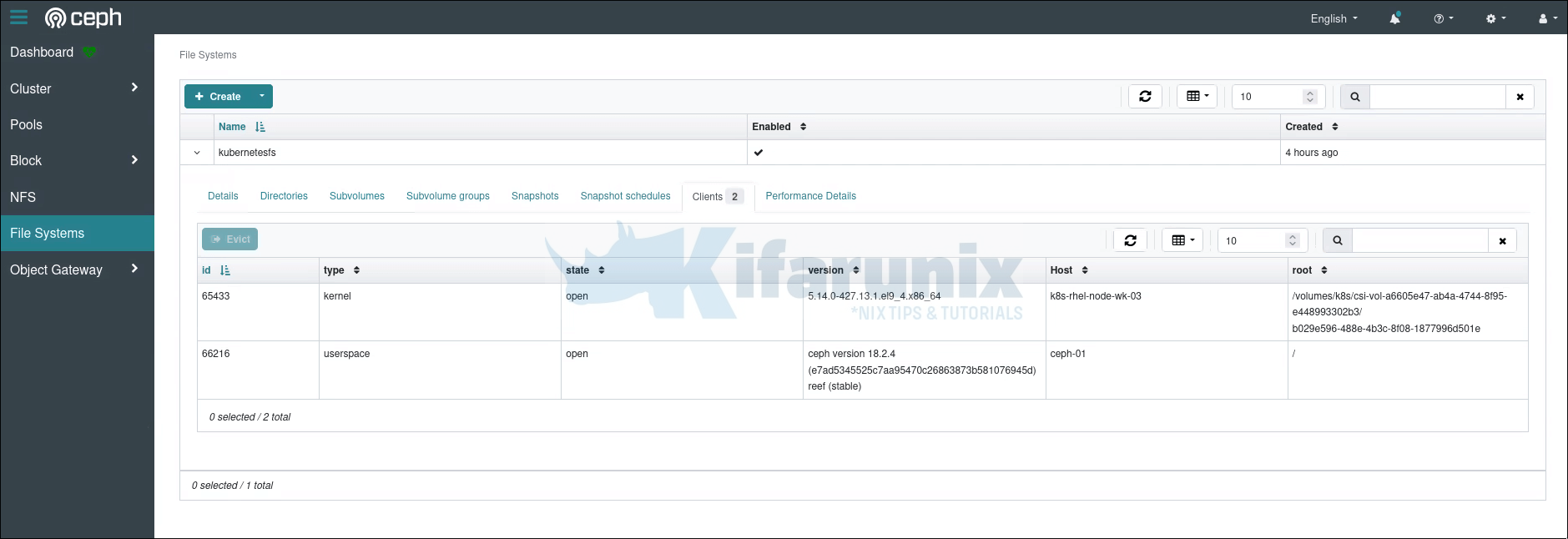

Confirm volume creation on Ceph;
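One way to confirm this from the command line is to map the PVC to its CephFS subvolume. The hypothetical `pvc_subvolume_path` helper below assumes two things. First, ceph-csi records the subvolume name in the PV's `spec.csi.volumeAttributes.subvolumeName` field. Second, you run it from a host with both kubectl access and a Ceph admin keyring. `ceph fs subvolume getpath` then prints where the subvolume lives inside the filesystem.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the CephFS path of the subvolume behind a PVC.
# Assumes ceph-csi stored the subvolume name in spec.csi.volumeAttributes.subvolumeName.
# Usage: pvc_subvolume_path NAMESPACE PVC FS_NAME SUBVOLUMEGROUP
pvc_subvolume_path() {
  local ns="$1" pvc="$2" fs="$3" group="$4" pv subvol
  pv=$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.spec.volumeName}') || return 1
  if [[ -z "$pv" ]]; then
    echo "PVC $ns/$pvc is not bound to a PV yet" >&2
    return 1
  fi
  subvol=$(kubectl get pv "$pv" -o jsonpath='{.spec.csi.volumeAttributes.subvolumeName}') || return 1
  if [[ -z "$subvol" ]]; then
    echo "PV $pv has no subvolumeName attribute" >&2
    return 1
  fi
  # The mgr volumes module resolves the subvolume to its path in the filesystem.
  ceph fs subvolume getpath "$fs" "$subvol" --group_name "$group"
}
```

For this guide, you would run `pvc_subvolume_path cephfs-csi csi-cephfs-pvc kubernetesfs k8s`. If you only want to see that the subvolume exists, `ceph fs subvolume ls kubernetesfs --group_name k8s` lists every subvolume in the group.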

Testing the Use of CephFS Shared Filesystem

The volume is now provisioned, and you can configure stateful workloads to access the data stored on it.

Our test Pod mounts the volume at the Nginx web root directory. To verify that the volume contents can be shared with other Pods, first put some content on it.

Let's log in to the Pod and create a simple web page:

```bash
kubectl exec -it pod/csi-cephfs-demo-pod -n cephfs-csi -- bash
```

Create a basic HTML page within the Pod:

```bash
root@csi-cephfs-demo-pod:/# echo '<!DOCTYPE html>
<html lang="en">
<head>
<meta charset="UTF-8">
<meta name="viewport" content="width=device-width, initial-scale=1.0">
<title>Ceph FS Sharing is Working!</title>
</head>
<body>
<h1 style="text-align: center; color: green;">Ceph FS Sharing Works!</h1>
<p style="text-align: center; font-size: 1.2em;">The Ceph File System (Ceph FS) is successfully sharing data.</p>
</body>
</html>
' > /usr/share/nginx/html/index.html
```
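Typing the page interactively works, but you can also push a file that you prepared locally. `push_file_to_pod` below is a hypothetical helper that streams a local file through `kubectl exec -i` into `sh -c 'cat > ...'` inside the container. This means it does not depend on `tar` being present in the image, which `kubectl cp` requires.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy a local file into a running container via stdin.
# Usage: push_file_to_pod NAMESPACE POD LOCAL_FILE REMOTE_PATH
push_file_to_pod() {
  local ns="$1" pod="$2" src="$3" dest="$4"
  [[ -f "$src" ]] || { echo "no such file: $src" >&2; return 1; }
  # The remote shell receives the destination as $1, so paths with spaces stay intact.
  kubectl exec -i "$pod" -n "$ns" -- sh -c 'cat > "$1"' sh "$dest" < "$src"
}
```

For example, run `push_file_to_pod cephfs-csi csi-cephfs-demo-pod index.html /usr/share/nginx/html/index.html`.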

Or Mount the CephFS Volume on Your Host and Create Content

Alternatively, you can mount the CephFS volume on your local host and create the content from there.

Ensure that the ceph-common package is installed on the client and if not, install it:

On Red Hat Enterprise Linux:

```bash
sudo yum install ceph-common
```

On Ubuntu/Debian:

```bash
sudo apt install ceph-common
```

Copy the Ceph configuration file from the monitor host to the /etc/ceph/ directory on the client host. You can run the command below on the monitor host to generate a minimal config:

```bash
ceph config generate-minimal-conf
```

Copy the output and save it as /etc/ceph/ceph.conf on the client.

```bash
cat /etc/ceph/ceph.conf
```

```
[global]
fsid = 854884fa-dcd5-11ef-b070-5254000e6c0d
mon_host = [v2:192.168.122.152:3300/0,v1:192.168.122.152:6789/0] [v2:192.168.122.230:3300/0,v1:192.168.122.230:6789/0] [v2:192.168.122.166:3300/0,v1:192.168.122.166:6789/0] [v2:192.168.122.175:3300/0,v1:192.168.122.175:6789/0]
```
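The mount command used in this guide lists each monitor's v1 (port 6789) address. Instead of copying these addresses by hand from the mon_host line, you can extract them. `mon_v1_addrs` is a hypothetical helper that handles IPv4 addresses only. It assumes the bracketed `[v2:...,v1:...]` format shown above, as produced by `ceph config generate-minimal-conf`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the msgr v1 monitor addresses (IPv4 only) from a
# ceph.conf mon_host line as a comma-separated list suitable for `mount -t ceph`.
# Usage: mon_v1_addrs CEPH_CONF
mon_v1_addrs() {
  local conf="$1"
  # Keep only the mon_host / "mon host" line, pull out every v1:IP:PORT token,
  # strip the "v1:" prefix and join the results with commas.
  grep -E '^[[:space:]]*mon[_ ]host[[:space:]]*=' "$conf" \
    | grep -oE 'v1:[0-9.]+:[0-9]+' \
    | sed 's/^v1://' \
    | paste -sd, -
}
```

With the file above, `mount -t ceph "$(mon_v1_addrs /etc/ceph/ceph.conf):/volumes" /mnt/cephfs/k8s -o name=cephfs` expands to the same four-monitor mount command.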

Next, copy the client keyring from the monitor host to the /etc/ceph/ directory on the client host as ceph.client.NAME.keyring. You can print the client keyring with the command ceph auth get client.NAME.

```bash
cat /etc/ceph/ceph.client.cephfs.keyring
```

```
[client.cephfs]
key = AQA10Zxn32oBCxAAQLqLmNfYVVzMEg55FYXO7Q==
caps mds = "allow rw fsname=kubernetesfs path=/volumes, allow rw fsname=kubernetesfs path=/volumes/csi"
caps mgr = "allow rw"
caps mon = "allow r fsname=kubernetesfs"
caps osd = "allow rw tag cephfs data=kubernetesfs, allow rw tag cephfs metadata=kubernetesfs"
```

Mount the Ceph File System. To specify multiple monitor addresses, either separate them with commas in the mount command, or configure a DNS server so that a single host name resolves to multiple IP addresses and pass that host name to the mount command.

For example, since we have four monitors, this is how to mount the /volumes directory of our CephFS as the client.cephfs user on /mnt/cephfs/k8s:

```bash
mkdir -p /mnt/cephfs/k8s
mount -t ceph 192.168.122.152:6789,192.168.122.230:6789,192.168.122.166:6789,192.168.122.175:6789:/volumes /mnt/cephfs/k8s -o name=cephfs
```

Verify the content:

```bash
ls /mnt/cephfs/k8s/*
```

```
/mnt/cephfs/k8s/_k8s:csi-vol-a6605e47-ab4a-4744-8f95-e448993302b3.meta

/mnt/cephfs/k8s/_deleting:

/mnt/cephfs/k8s/k8s:
csi-vol-a6605e47-ab4a-4744-8f95-e448993302b3
```

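So, the shared volume backing our Pods is /mnt/cephfs/k8s/k8s/csi-vol-a6605e47-ab4a-4744-8f95-e448993302b3. With the v2 subvolume layout used by recent Ceph releases, the files themselves live in a UUID-named subdirectory inside it, which is the path reported by ceph fs subvolume getpath.

Any content you place there is visible to every Pod that mounts the volume.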
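To find that data directory on the mounted filesystem without copying the UUID by hand, you can use the hypothetical `subvolume_data_dir` helper below. It lists the directories directly under `<mount>/<group>/<subvolume>`. It assumes the v2 subvolume layout, where that path holds a single UUID-named data directory next to a `.meta` file, and it fails if it finds anything else.

```bash
#!/usr/bin/env bash
# Hypothetical helper: locate a subvolume's data directory under a CephFS mount.
# Assumes the v2 layout <mount>/<group>/<subvolume>/<uuid>/ with a .meta file beside it.
# Usage: subvolume_data_dir MOUNT_POINT GROUP SUBVOLUME
subvolume_data_dir() {
  local base="$1/$2/$3" dirs count
  # Only directories one level down; the .meta file is skipped by -type d.
  dirs=$(find "$base" -mindepth 1 -maxdepth 1 -type d 2>/dev/null)
  count=$(printf '%s' "$dirs" | grep -c .)
  if [[ "$count" -ne 1 ]]; then
    echo "expected exactly one data directory under $base, found $count" >&2
    return 1
  fi
  printf '%s\n' "$dirs"
}
```

For this guide, you would run `subvolume_data_dir /mnt/cephfs/k8s k8s csi-vol-a6605e47-ab4a-4744-8f95-e448993302b3`.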

Let’s deploy a second Pod and configure it to use shared CephFS to see if it can access the web content.

```bash
cat nginx-pod-2.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: csi-cephfs-demo-pod-v2
  namespace: cephfs-csi
spec:
  containers:
    - name: web-server
      image: docker.io/library/nginx:latest
      volumeMounts:
        - name: cephfs-pod2
          mountPath: /usr/share/nginx/html
      ports:
        - containerPort: 80
  volumes:
    - name: cephfs-pod2
      persistentVolumeClaim:
        claimName: csi-cephfs-pvc
        readOnly: false
```

Deploy!

```bash
kubectl apply -f nginx-pod-2.yaml
```

Check the Pods:

```bash
kubectl get pods -n cephfs-csi
```

```
NAME                     READY   STATUS    RESTARTS   AGE
csi-cephfs-demo-pod      1/1     Running   0          173m
csi-cephfs-demo-pod-v2   1/1     Running   0          11s
```

Test Access to Shared Content

Read the web page from the new Pod to check whether it can access the content:

```bash
kubectl exec -it csi-cephfs-demo-pod-v2 -n cephfs-csi -- cat /usr/share/nginx/html/index.html
```

And there you go!

```
<!DOCTYPE html>
<html lang="en">
<head>
<meta charset="UTF-8">
<meta name="viewport" content="width=device-width, initial-scale=1.0">
<title>Ceph FS Sharing is Working!</title>
</head>
<body>
<h1 style="text-align: center; color: green;">Ceph FS Sharing Works!</h1>
<p style="text-align: center; font-size: 1.2em;">The Ceph File System (Ceph FS) is successfully sharing data.</p>
</body>
</html>
```
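To make this check repeatable, for example after redeploying one of the Pods, you can use the hypothetical `same_file_in_pods` helper below. It reads the same path from two Pods with `kubectl exec ... cat` and compares the contents. It is a minimal sketch that treats a failed `kubectl exec` (for example, a missing file or Pod) as an error rather than a match.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that a file has identical contents in two Pods.
# Usage: same_file_in_pods NAMESPACE PATH POD_A POD_B
same_file_in_pods() {
  local ns="$1" path="$2" pod_a="$3" pod_b="$4" a b
  # Abort if either read fails, so two errors are never reported as a match.
  a=$(kubectl exec "$pod_a" -n "$ns" -- cat "$path") || return 1
  b=$(kubectl exec "$pod_b" -n "$ns" -- cat "$path") || return 1
  if [[ "$a" == "$b" ]]; then
    echo "$path is identical in $pod_a and $pod_b"
  else
    echo "$path differs between $pod_a and $pod_b" >&2
    return 1
  fi
}
```

For this guide, you would run `same_file_in_pods cephfs-csi /usr/share/nginx/html/index.html csi-cephfs-demo-pod csi-cephfs-demo-pod-v2`.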

You have successfully configured CephFS and integrated it with Kubernetes for persistent volume data access.

Conclusion

Integrating CephFS with Kubernetes using the CSI driver enables shared storage for multiple pods, making it ideal for stateful applications that require concurrent access to the same data. By creating PersistentVolumeClaims (PVCs) backed by CephFS, you can dynamically provision shared volumes and mount them in pods. Populating the CephFS volume can be done manually, via init containers, Jobs, or sidecar containers, depending on your use case.

Refer to https://github.com/ceph/ceph-csi/blob/devel/docs/cephfs/deploy.md#deployment-with-kubernetes