In this tutorial, you will learn how to integrate MinIO S3 storage with Kubernetes/OpenShift. MinIO is a high-performance, S3-compatible object storage solution well suited to cloud-native applications. Integrating an external MinIO instance with Kubernetes or OpenShift provides scalable, secure storage for backups, logs, or application data. We will cover how to connect a MinIO server to a Kubernetes/OpenShift cluster, configure authentication, and use MinIO as a storage backend for workloads running in the cluster.


How to Integrate MinIO S3 Storage with Kubernetes/OpenShift

In cloud-native environments, efficient data storage is critical for application performance and scalability. Object storage solutions like AWS S3 have become the standard for cloud-native applications, and MinIO stands out as a leading open-source alternative that provides S3-compatible object storage.

If you run MinIO separately from your Kubernetes/OpenShift cluster, you need to integrate the two so that your workloads can use the storage. This guide explores how to connect your existing MinIO S3 storage with Kubernetes and/or OpenShift.

Why Use External MinIO with Kubernetes/OpenShift?

Connecting Kubernetes/OpenShift to an existing MinIO infrastructure offers several advantages:

- Separation of Concerns: Storage infrastructure can be managed independently from application platforms.

- Reduced Cluster Overhead: No need to run storage services within the container platform.

- Centralized Storage Management: Single storage platform serving multiple Kubernetes/OpenShift clusters.

- Simplified Upgrades: Update MinIO servers without affecting your container platforms.

- Optimized Resource Utilization: Dedicated hardware for storage-specific workloads.

Prerequisites for Integration

Before connecting your applications to external MinIO, ensure you have:

- A running Kubernetes or OpenShift cluster. This guide uses an OpenShift 4.17 cluster.

- The kubectl or oc CLI configured to access your cluster.

- External MinIO server information:
  - Endpoint URL
  - Access key and secret key
  - TLS certificates (if using HTTPS). See how to deploy MinIO server with Let’s Encrypt and Nginx Proxy.

- Network connectivity between your cluster and the MinIO server.

- Sufficient privileges to create the required resources (Secrets, ServiceAccounts, Roles, RoleBindings, and Jobs) in the target namespace.

Configuring Access to MinIO S3 Storage

Create or Verify a MinIO Bucket

Before configuring users and policies, ensure a MinIO bucket exists to store your data (e.g., backups). You can use an existing bucket or create a new one. You can check whether a bucket exists from the web console or with the MinIO Client (mc).

- To use the web console, log in (e.g., at https://minio.kifarunix.com:9001, or without the port if the console sits behind a reverse proxy) with admin credentials.

- In the console, go to User > Object Browser or Administrator > Buckets to view available buckets.

CLI Alternative:

Assuming you have already created an mc alias for your MinIO deployment, list the buckets with:

```bash
mc ls minio_alias
```

Create a New Bucket (if needed):

In the console, click Create Bucket +, give the bucket a suitable name (this guide uses k8s-backup), and save.
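Whichever way you create it, the bucket name has to be a valid S3 bucket name. The sketch below is a hypothetical Python helper (is_valid_bucket_name is not part of any MinIO tool) that checks a subset of the AWS S3 naming rules, which MinIO largely follows: 3 to 63 characters; only lowercase letters, digits, dots, and hyphens; beginning and ending with a letter or digit; no adjacent dots; and not formatted like an IPv4 address. Treat it as a quick pre-check, not a complete validator; MinIO has the final say when you create the bucket.

```python
import ipaddress
import re

# Subset of the AWS S3 bucket naming rules (assumed to match MinIO's for these cases):
# 3-63 chars, lowercase letters/digits/dots/hyphens, letter or digit at both ends.
_BUCKET_RE = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if `name` passes the subset of S3 naming rules checked here."""
    if not _BUCKET_RE.fullmatch(name):
        return False
    if ".." in name:  # adjacent periods are not allowed
        return False
    try:
        ipaddress.IPv4Address(name)  # names like "192.168.5.4" are rejected
        return False
    except ipaddress.AddressValueError:
        return True
```

For example, is_valid_bucket_name("k8s-backup") passes, while "K8s-Backup" fails because of the uppercase letters.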

CLI Alternative:

```bash
mc mb ALIAS/BUCKET_NAME
```

For example:

```bash
mc mb minios3/k8s-backup
```

Create MinIO S3 Access Credentials

In a production environment that prioritizes security, you should not use the MinIO admin credentials (minioadmin:minioadmin or equivalent) to integrate external MinIO with Kubernetes/OpenShift. Instead, create separate, least-privilege credentials for the integration. This ensures that Kubernetes/OpenShift workloads (e.g., the backup Job) can perform only the actions they need (e.g., read/write to a specific bucket), which reduces the attack surface and follows security best practices.

To create these credentials, log in to the console with admin credentials.

Create a Policy

To create a policy, go to Administrator > Policies and click Create Policy +.

Set the Policy Name.

Define a policy for the k8s-backup bucket. Here is a sample policy definition:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:GetObject",
        "s3:ListBucket"
      ],
      "Resource": [
        "arn:aws:s3:::k8s-backup",
        "arn:aws:s3:::k8s-backup/*"
      ]
    }
  ]
}
```

Click Save to create the policy.

In brief, the policy above grants a user permission to:

- Upload objects to the k8s-backup bucket (s3:PutObject)

- Download objects from the bucket (s3:GetObject)

- List the contents of the bucket (s3:ListBucket)

The permissions apply to:

- All objects within the bucket (arn:aws:s3:::k8s-backup/*)

- The bucket itself (arn:aws:s3:::k8s-backup)
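If you manage several buckets, you may prefer to generate the policy file rather than edit JSON by hand. Below is a minimal Python sketch, assuming one bucket per policy; bucket_rw_policy and write_policy are hypothetical helper names. It produces the same statement as the sample policy above.

```python
import json


def bucket_rw_policy(bucket: str) -> dict:
    """Least-privilege read/write/list policy scoped to a single bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject", "s3:ListBucket"],
                "Resource": [
                    f"arn:aws:s3:::{bucket}",    # the bucket itself (ListBucket)
                    f"arn:aws:s3:::{bucket}/*",  # every object in it (Put/Get)
                ],
            }
        ],
    }


def write_policy(bucket: str, path: str) -> None:
    """Write the policy as pretty-printed JSON, ready for `mc admin policy create`."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(bucket_rw_policy(bucket), fh, indent=2)
```

Calling write_policy("k8s-backup", "k8s-backup.json") writes the file used with the CLI below.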

To use the CLI instead, save the policy definition above in a JSON file, say k8s-backup.json, and create the policy with the mc admin policy create command:

```bash
mc admin policy create MINIO_ALIAS POLICY_NAME POLICY_PATH
```

For example:

```bash
mc admin policy create minios3 k8s-backup ./k8s-backup.json
```

Create a User

- Go to Administrator > Identity > Users and click Create User.

- Set a username (e.g., k8s-backup) and a strong password (e.g., one generated with openssl rand -base64 24).

- Assign the k8s-backup policy to the user.

- Click Save. The username serves as the access key and the password as the secret key.
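If openssl is not at hand, Python's secrets module can produce an equivalent value. This sketch (generate_secret_key is a hypothetical helper name) draws 24 bytes from the operating system's cryptographically secure random source and base64-encodes them, which is what openssl rand -base64 24 prints.

```python
import base64
import secrets


def generate_secret_key(num_bytes: int = 24) -> str:
    """Return num_bytes of cryptographically secure random data, base64-encoded."""
    raw = secrets.token_bytes(num_bytes)  # OS-backed CSPRNG
    return base64.b64encode(raw).decode("ascii")
```

Because 24 is a multiple of 3, the result is always exactly 32 base64 characters with no padding, the same length openssl produces.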

CLI Alternative:

Add the user:

```bash
mc admin user add ALIAS USERNAME
```

Assign the policy to the user:

```bash
mc admin policy attach ALIAS POLICY --user USERNAME
```

Verify MinIO Server Connectivity

Verify Connectivity from local MinIO Server

Before testing from the Kubernetes/OpenShift cluster, test access directly from the MinIO server CLI.

Create a named shortcut (alias) to your MinIO server. In the command below, replace <alias-name> with a suitable alias name, <minio-api-url> with the full URL of your MinIO API endpoint, and <access-key> with the username created above:

```bash
mc alias set <alias-name> <minio-api-url> <access-key>
```

For example:

```bash
mc alias set minio-k8s-backup https://minio-api.kifarunix.com k8s-backup
```

When prompted for the secret key, enter the user's password.

Next, copy a test file (here, hello.txt) into the bucket to verify write access:

```bash
mc cp hello.txt minio-k8s-backup/k8s-backup/hello.txt
```

List the file:

```bash
mc ls minio-k8s-backup/k8s-backup
```

Output:

```text
[2025-05-04 22:33:37 CEST] 15B STANDARD hello.txt
```

That confirms local access.

Verify Connectivity to MinIO Server from Kubernetes/OpenShift Cluster

To test connectivity to your MinIO endpoint from within the OpenShift cluster, run the following command:

```bash
oc run -i --tty --rm debug --image=quay.io/openshift/origin-cli:latest --restart=Never -- bash
```

Once inside the temporary pod, run the following, replacing the MinIO server address accordingly:

```bash
curl -sI https://minio-api.kifarunix.com/minio/health/live
```

An HTTP 200 status in the response headers indicates that the MinIO server is online and reachable. Any other status code, or no response at all, indicates a problem reaching the server.
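If you want to script this check, for example from a host or Pod where Python 3 is available (the origin-cli image may not include it), the sketch below sends the same HEAD request using only the Python standard library. health_url and minio_is_live are hypothetical helper names; like the curl check, the sketch treats only HTTP 200 as healthy.

```python
import urllib.error
import urllib.request


def health_url(endpoint: str) -> str:
    """Append MinIO's liveness path to an API endpoint URL."""
    return endpoint.rstrip("/") + "/minio/health/live"


def minio_is_live(endpoint: str, timeout: float = 5.0) -> bool:
    """HEAD the liveness endpoint; return True only for an HTTP 200 response."""
    req = urllib.request.Request(health_url(endpoint), method="HEAD")
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # 4xx/5xx responses raise HTTPError (a URLError subclass);
        # DNS, TLS, connection, and timeout failures also end up here.
        return False
```

For example, minio_is_live("https://minio-api.kifarunix.com") returns True when the server answers 200. Certificates are verified against the system trust store, so an endpoint signed by a private CA returns False unless you extend the sketch to pass an ssl.SSLContext through urlopen's context argument.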

Exit the container when done testing the MinIO server connectivity.

```bash
exit
```

Configure OpenShift to Use External MinIO S3

Create Kubernetes Secret to Store MinIO Credentials

Kubernetes Secrets provide a way to store and manage sensitive information separately from your application manifests. We’ll create a Secret to store the MinIO credentials: the access key (username) and the secret key (password). We will also store the MinIO API endpoint and the backup bucket name in the Secret.

Create the Secret manifest on a host that can reach the OpenShift/Kubernetes API with the oc or kubectl command:

```bash
vim s3-secrets.yaml
```

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: <your-minio-secret-name>
  namespace: <your-application-namespace>
type: Opaque
stringData:
  AWS_ACCESS_KEY_ID: "<your-minio-username>"
  AWS_SECRET_ACCESS_KEY: "<your-minio-password>"
  AWS_ENDPOINT: "<your-minio-endpoint>"
  BUCKET_NAME: "<your-bucket-name>"
```

Paste the YAML above into the file and replace all placeholder values marked with <…> with your own values. The Job later in this guide reads a Secret named s3-k8s-backup-creds in the minio-s3-demo namespace, with an endpoint such as https://minio-api.kifarunix.com.
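A leftover placeholder is easy to miss: in a name field the API server rejects it, but in a value such as the password it is stored as-is and only fails later, when the Job tries to authenticate. This hypothetical helper, find_placeholders, scans manifest text for anything still in <…> form. It assumes your real values never contain angle brackets.

```python
import re

# Matches <...> placeholders such as <your-minio-username>.
# Assumption: real credentials and names never contain "<" or ">".
PLACEHOLDER = re.compile(r"<[^<>\s][^<>]*>")


def find_placeholders(text: str) -> list[str]:
    """Return every <...> placeholder still present in the manifest text."""
    return PLACEHOLDER.findall(text)


def check_file(path: str) -> list[str]:
    """Read a manifest file and return its unreplaced placeholders."""
    with open(path, encoding="utf-8") as fh:
        return find_placeholders(fh.read())
```

An empty result from check_file("s3-secrets.yaml") means every placeholder has been filled in.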

Before applying the file, make sure that the project/namespace set in metadata.namespace already exists (for example, create it with oc new-project <namespace>).

Once you have everything set, apply the YAML file.

```bash
oc apply -f s3-secrets.yaml
```

In production environments:

- Consider using a vault service like HashiCorp Vault to keep the secrets.

- Restrict access to the namespace containing this Secret.

- Regularly rotate your MinIO credentials and update the Secrets accordingly.
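These measures matter because the API server stores each stringData value base64-encoded under the Secret's data field, and base64 is an encoding, not encryption: anyone allowed to read the Secret can decode it. The sketch below mimics that round trip in Python. The helper names are hypothetical, and whether Secrets are additionally encrypted at rest in etcd depends on your cluster's configuration.

```python
import base64


def encode_string_data(string_data: dict[str, str]) -> dict[str, str]:
    """Mimic how stringData values end up under .data: base64 of their UTF-8 bytes."""
    return {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in string_data.items()
    }


def decode_data(data: dict[str, str]) -> dict[str, str]:
    """Reverse the encoding, as any client allowed to `get` the Secret can."""
    return {key: base64.b64decode(value).decode("utf-8") for key, value in data.items()}
```

For instance, encode_string_data({"BUCKET_NAME": "k8s-backup"}) yields {"BUCKET_NAME": "azhzLWJhY2t1cA=="}, which decodes straight back to the bucket name.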

As mentioned above, you can further restrict access to the Secret with Role-Based Access Control (RBAC) granted to a dedicated ServiceAccount.

For example, create a ServiceAccount for this purpose (it is created in the current project; add -n <NAMESPACE> to target another one):

```bash
oc create sa minios3-k8s-backup-sa
```

The Secret stores your MinIO credentials, and the ServiceAccount, combined with a narrowly scoped Role, limits which workloads can read the Secret through the Kubernetes API.

Next, create a Role that allows read-only access to the Secret created above:

```bash
oc create role <ROLE_NAME> \
  --namespace=<NAMESPACE> \
  --verb=get \
  --resource=secrets \
  --resource-name=<SECRET_NAME>
```

Where:

- <ROLE_NAME>: The name you want for the role (e.g., s3-credentials-reader)

- <NAMESPACE>: The namespace where the Secret and ServiceAccount exist

- <SECRET_NAME>: The exact name of the Secret the role should allow access to

Next, create a RoleBinding that grants the read permission defined in the Role to the ServiceAccount created above:

```bash
oc create rolebinding <ROLEBINDING_NAME> \
  --namespace=<NAMESPACE> \
  --role=<ROLE_NAME> \
  --serviceaccount=<NAMESPACE>:<SERVICEACCOUNT_NAME>
```

Where:

- <ROLEBINDING_NAME>: The name for the role binding (e.g., s3-credentials-binding)

- <NAMESPACE>: The namespace where the Role and ServiceAccount exist

- <ROLE_NAME>: The name of the Role being bound (e.g., s3-credentials-reader)

- <SERVICEACCOUNT_NAME>: The name of the ServiceAccount to bind to the role (e.g., minios3-k8s-backup-sa)
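If you keep RBAC objects in version control, you may prefer declarative manifests over oc create. The Python sketch below builds, as plain dicts, a Role and RoleBinding roughly equivalent to the two commands above. The function names are hypothetical, and the output is JSON, which oc apply accepts.

```python
import json

RBAC_API = "rbac.authorization.k8s.io"


def secret_reader_role(role: str, namespace: str, secret: str) -> dict:
    """Role allowing `get` on one named Secret (like `oc create role ... --resource-name`)."""
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "Role",
        "metadata": {"name": role, "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group, where Secrets live
                "resources": ["secrets"],
                "resourceNames": [secret],  # restrict the rule to this one Secret
                "verbs": ["get"],
            }
        ],
    }


def service_account_binding(binding: str, namespace: str, role: str, sa: str) -> dict:
    """RoleBinding granting `role` to a ServiceAccount in the same namespace."""
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": binding, "namespace": namespace},
        "roleRef": {"apiGroup": RBAC_API, "kind": "Role", "name": role},
        "subjects": [{"kind": "ServiceAccount", "name": sa, "namespace": namespace}],
    }


def as_list(*items: dict) -> str:
    """Wrap objects in a v1 List so a single `oc apply -f -` creates all of them."""
    return json.dumps({"apiVersion": "v1", "kind": "List", "items": list(items)}, indent=2)
```

Printing as_list(secret_reader_role("s3-credentials-reader", "minio-s3-demo", "s3-k8s-backup-creds"), service_account_binding("s3-credentials-binding", "minio-s3-demo", "s3-credentials-reader", "minios3-k8s-backup-sa")) and piping it to oc apply -f - creates both objects in one step.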

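Now, when you create an application that needs these MinIO credentials, run it under this ServiceAccount by setting serviceAccountName in its Pod spec. Keep in mind that the Role governs reads of the Secret through the Kubernetes API; Secrets referenced through env or volumes in a Pod spec are resolved by the kubelet, so anyone who can create Pods in the namespace can consume them regardless of this Role.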

Test MinIO S3 Integration with a Job

Now, let’s test whether the Kubernetes/OpenShift cluster can store files in MinIO S3 buckets. We’ll create a Kubernetes Job that creates a small text file and uploads it to the k8s-backup bucket on MinIO.

We will use the minio/mc container image in the Job.

When you run the minio/mc container image in a Pod on OpenShift, you may encounter the following error:

```text
mkdir: cannot create directory '/.mc': Permission denied
mc: <ERROR> Unable to save new mc config. mkdir /.mc: permission denied.
```

This happens because:

- The container defaults to writing the mc configuration to /.mc.

- The Pod runs under the restricted-v2 SCC by default, which runs the container as a non-root user that is not allowed to write to / (the root directory).

To make minio/mc work without needing elevated privileges:

- Set HOME to a writable path, like /tmp, so that mc writes its configuration there.

- Mount a writable volume (emptyDir) at /tmp.

- Set the container’s working directory to /tmp to allow file operations such as creating a sample file.

Here is a sample Job manifest:

```bash
cat minio-s3-backup-job.yaml
```

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: test-k8s-minio-upload
  namespace: minio-s3-demo
spec:
  template:
    spec:
      serviceAccountName: minios3-k8s-backup-sa
      containers:
        - name: test
          image: minio/mc
          workingDir: /tmp
          command: ["/bin/sh", "-c"]
          args:
            - |
              set -e  # stop at the first failing command so a failed upload fails the Job
              echo "Hello Kubernetes + MinIO S3!" > hello-k8s-minio.txt
              mc alias set minios3 "$AWS_ENDPOINT" "$AWS_ACCESS_KEY_ID" "$AWS_SECRET_ACCESS_KEY"
              mc cp hello-k8s-minio.txt "minios3/$BUCKET_NAME/hello-k8s-minio.txt"
              echo "Upload completed"
          env:
            # mc writes its config under $HOME, so point it at the writable /tmp
            - name: HOME
              value: "/tmp"
            - name: AWS_ENDPOINT
              valueFrom:
                secretKeyRef:
                  name: s3-k8s-backup-creds
                  key: AWS_ENDPOINT
            - name: AWS_ACCESS_KEY_ID
              valueFrom:
                secretKeyRef:
                  name: s3-k8s-backup-creds
                  key: AWS_ACCESS_KEY_ID
            - name: AWS_SECRET_ACCESS_KEY
              valueFrom:
                secretKeyRef:
                  name: s3-k8s-backup-creds
                  key: AWS_SECRET_ACCESS_KEY
            - name: BUCKET_NAME
              valueFrom:
                secretKeyRef:
                  name: s3-k8s-backup-creds
                  key: BUCKET_NAME
          volumeMounts:
            - name: tmp-volume
              mountPath: /tmp
      volumes:
        - name: tmp-volume
          emptyDir: {}
      restartPolicy: Never
  backoffLimit: 3
```

Update the namespace, ServiceAccount, and Secret names to match your environment.
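The four env entries that read from the Secret all follow one pattern. If you generate manifests in code, a small helper avoids copy-paste mistakes in the Secret name or key. Below is a minimal sketch (secret_env is a hypothetical name). Alternatively, Kubernetes' envFrom with a secretRef injects every key of a Secret as an environment variable without listing them individually.

```python
def secret_env(secret_name: str, keys: list[str]) -> list[dict]:
    """Build container `env` entries that each read one key from the same Secret."""
    return [
        {"name": key, "valueFrom": {"secretKeyRef": {"name": secret_name, "key": key}}}
        for key in keys
    ]


# The env list from the Job above: HOME first, then the four Secret-backed variables.
JOB_ENV = [{"name": "HOME", "value": "/tmp"}] + secret_env(
    "s3-k8s-backup-creds",
    ["AWS_ENDPOINT", "AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "BUCKET_NAME"],
)
```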

Then apply the manifest:

```bash
oc apply -f minio-s3-backup-job.yaml
```

Check the Job’s result:

```bash
oc get jobs
```

Sample output:

```text
NAME                    STATUS     COMPLETIONS   DURATION   AGE
test-k8s-minio-upload   Complete   1/1           5s         8s
```

Check the pods:

```bash
oc get pods
```

```text
NAME                          READY   STATUS      RESTARTS   AGE
test-k8s-minio-upload-rdbhn   0/1     Completed   0          10s
```

It looks like the Job is done! Let’s verify by viewing the Job’s pod output:

```bash
oc logs test-k8s-minio-upload-rdbhn
```

Sample output:

```text
Added `minios3` successfully.
`/tmp/hello-k8s-minio.txt` -> `minios3/k8s-backup/hello-k8s-minio.txt`
┌───────┬─────────────┬──────────┬─────────┐
│ Total │ Transferred │ Duration │ Speed   │
│ 29 B  │ 29 B        │ 00m00s   │ 885 B/s │
└───────┴─────────────┴──────────┴─────────┘
Upload completed
```

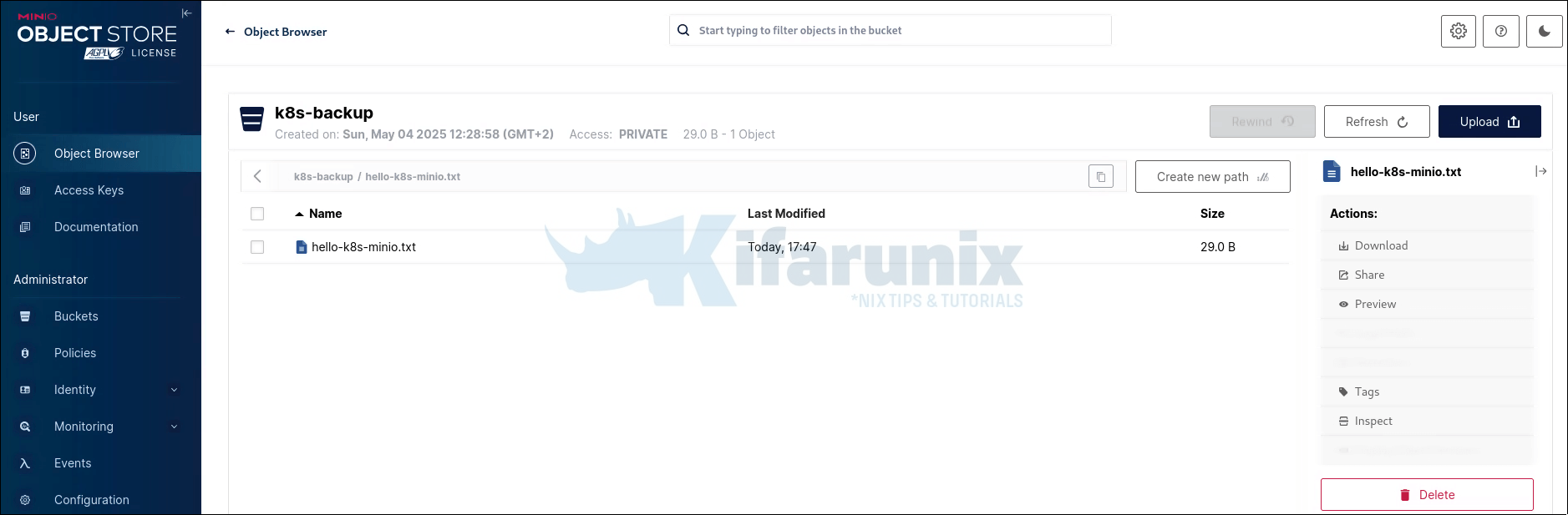

Great! Confirm that the file is in the MinIO S3 bucket.

This test confirms that workloads in the cluster can authenticate to MinIO and store files.

You may be wondering why the Job shows 1/1 completions while its Pod shows 0/1 ready. The two columns count different things: a Job’s COMPLETIONS column counts Pods that finished successfully, whereas a Pod’s READY column counts containers that are currently running and ready. Once the upload task succeeds, the container exits and the Pod enters the Completed state, so oc get pods reports 0/1. Nothing is running anymore because the Pod has finished its work.
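When scripting this check, rely on the Job’s status conditions rather than the Pod’s READY column. The sketch below (job_outcome is a hypothetical helper) takes the parsed JSON returned by oc get job test-k8s-minio-upload -o json and reports whether the Job’s Complete or Failed condition is set.

```python
def job_outcome(job: dict) -> str:
    """Return "Complete", "Failed", or "InProgress" based on a Job's status.conditions."""
    conditions = job.get("status", {}).get("conditions") or []
    for cond in conditions:
        # Condition statuses are the strings "True"/"False"/"Unknown", not booleans.
        if cond.get("type") in ("Complete", "Failed") and cond.get("status") == "True":
            return cond["type"]
    return "InProgress"
```

If you only need to block until the Job finishes in a shell script, oc wait --for=condition=complete job/test-k8s-minio-upload does the same check natively.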

Conclusion

So far so good! You’ve learned how to connect an external MinIO storage system to an OpenShift cluster, from setting up a bucket on the MinIO server to running a backup Job on the OpenShift cluster. By using a least-privilege MinIO user, a dedicated ServiceAccount, and testing each step, you can store files safely for your apps.

Whether you use external S3 object storage on OpenShift for backups or another use case, I recommend implementing the following practices:

- Least Privilege: Use IAM policies to grant minimal permissions for specific tasks.

- Object Locking/Versioning: Enable WORM (write-once-read-many) with versioning and retention to protect data.

- Encryption: Use HTTPS (TLS 1.2/1.3) and server-side encryption with KMS.

- Centralized Identity: Integrate with LDAP/Okta for user management.

- Policy-Based Access: Define granular permissions with PBAC and conditions.

- Service Accounts: Use restricted service accounts for apps, not user credentials.

- Certificate Auth: Use X.509/TLS certificates with STS for secure app access.

- Audit/Monitoring: Enable audit logging and monitor CVEs for security.

Read more on S3 security and access control.

That completes our guide on how to integrate MinIO S3 storage with Kubernetes/OpenShift.