Welcome to our tutorial on how to install the Arkime (Moloch) full packet capture tool on Ubuntu 22.04, Ubuntu 20.04, and Ubuntu 18.04. Arkime, formerly Moloch, "is a large scale, open source, indexed packet capture and search system".

Using a Debian system? Then check the guide below;

Install Arkime (Moloch) Full Packet Capture tool on Debian 11

According to its GitHub repository page, some of the features of Arkime include;

- It stores and indexes network traffic in standard PCAP format, providing fast, indexed access.

- Provides an intuitive web interface for PCAP browsing, searching, and exporting.

- Exposes APIs which allow for PCAP data and JSON formatted session data to be downloaded and consumed directly.

- Stores and exports all packets in standard PCAP format, allowing you to also use your favorite PCAP ingesting tools, such as Wireshark, during your analysis workflow.

Installing Arkime on Ubuntu

You can either install Arkime on Ubuntu using prebuilt binary packages or simply build it from the source yourself.

Installing Arkime using Prebuilt Binary on Ubuntu

Download Arkime Binary Installer

In order to install Arkime using the prebuilt binary on Ubuntu, navigate to the downloads page and grab the binary installer for your Ubuntu flavour, which in my setup is Ubuntu 22.04.

You can also copy the link to the binary installer and download it using the curl or wget command. For example, the command below downloads Arkime 4.1.0, the stable release at the time of writing, for Ubuntu 22.04;

wget https://s3.amazonaws.com/files.molo.ch/builds/ubuntu-22.04/arkime_4.1.0-1_amd64.deb

Run System Update

Update your system package cache;

apt update

Install Arkime on Ubuntu

Next, install Arkime on Ubuntu using the downloaded binary installer.

apt install ./arkime_4.1.0-1_amd64.deb

If you prefer, you can build Arkime from source instead. Check the installation page for instructions.
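If you install on more than one Ubuntu release, it can help to build the download link instead of copying it by hand. The sketch below is only an illustration: the function name `arkime_deb_url` is made up for this example, and it assumes that every Ubuntu release follows the same path layout as the Ubuntu 22.04 link used above. Confirm the real link on the downloads page before relying on it.

```shell
# Build the download URL for an Arkime .deb package.
# Assumption: other Ubuntu releases use the same path layout as the
# Ubuntu 22.04 link shown in this guide.
arkime_deb_url() {
    ubuntu_release="$1"   # e.g. 22.04
    arkime_version="$2"   # e.g. 4.1.0-1
    printf 'https://s3.amazonaws.com/files.molo.ch/builds/ubuntu-%s/arkime_%s_amd64.deb\n' \
        "$ubuntu_release" "$arkime_version"
}

# Print the URL for the release used in this guide.
arkime_deb_url 22.04 4.1.0-1
```

On a live system you could pass the output of `lsb_release -rs` as the first argument and hand the resulting URL to wget.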

Install Elasticsearch on Ubuntu

Arkime uses Elasticsearch as its search and indexing engine, so install Elasticsearch using the steps below.

Import the Elastic Stack PGP repository signing key;

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch \
| sudo gpg --dearmor -o /etc/apt/trusted.gpg.d/elastic.gpg

Install the Elasticsearch 7.x APT repository;

echo "deb https://artifacts.elastic.co/packages/7.x/apt stable main" \
| sudo tee -a /etc/apt/sources.list.d/elastic-7.x.list

Update package cache and install Elasticsearch;

apt update

apt install elasticsearch

Configure Elasticsearch JVM options depending on the size of your memory;

vim /etc/elasticsearch/jvm.options.d/heap.options

# Xms represents the initial size of total heap space

# Xmx represents the maximum size of total heap space

-Xms512m

-Xmx512m

Save and exit the configuration file.
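The 512m value above suits a small test machine. As a rough guide for other hosts, the sketch below derives the two option lines from the amount of RAM, following the common Elasticsearch guidance of giving the heap at most half of the memory. The function names and the 31744 MB ceiling are assumptions made for this illustration, not values from the Arkime or Elasticsearch packages.

```shell
# Suggest a JVM heap size in MB from total RAM in MB.
# Rule of thumb: at most half of RAM. The 31744 MB (31 GB) ceiling is an
# assumption for this sketch; adjust it for your environment.
suggest_heap_mb() {
    total_mb="$1"
    heap_mb=$((total_mb / 2))
    if [ "$heap_mb" -gt 31744 ]; then
        heap_mb=31744
    fi
    echo "$heap_mb"
}

# Print the two option lines for a given amount of RAM in MB.
heap_options() {
    heap_mb="$(suggest_heap_mb "$1")"
    printf '%s\n' "-Xms${heap_mb}m" "-Xmx${heap_mb}m"
}

# Example: a host with 1024 MB of RAM.
heap_options 1024
```

To use the real memory size, you could read it with `awk '/MemTotal/ {print int($2/1024)}' /proc/meminfo` and pass the result to `heap_options`.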

Configure Arkime on Ubuntu

Configuring Arkime

Once the installation is done, check your interfaces;

ip a

Then run the script below to configure Arkime, answering the prompts accordingly;

/opt/arkime/bin/Configure

Select an interface to monitor;

Found interfaces: lo;enp0s3;enp0s8

Semicolon ';' seperated list of interfaces to monitor [eth1] enp0s8

Choose whether the script should install Elasticsearch automatically or whether you will install it manually yourself. We have already installed Elasticsearch, hence choose no.

Install Elasticsearch server locally for demo, must have at least 3G of memory, NOT recommended for production use (yes or no) [no] no

Set the Elasticsearch server URL, localhost:9200 in this setup. Just press Enter to accept the default.

Elasticsearch server URL [http://localhost:9200] ENTER

Set the encryption password. Be sure to replace the password with your own.

Password to encrypt S2S and other things [no-default] changeme

The configuration of Arkime then runs.

Arkime - Creating configuration files

Installing sample /opt/arkime/etc/config.ini

Download GEO files? You'll need a MaxMind account https://arkime.com/faq#maxmind (yes or no) [yes] yes

Arkime - Downloading GEO files

2023-02-08 15:20:47 URL:https://www.iana.org/assignments/ipv4-address-space/ipv4-address-space.csv [23331/23331] -> "/tmp/tmp.NyJWPlYxE0" [1]

2023-02-08 15:20:47 URL:https://raw.githubusercontent.com/wireshark/wireshark/master/manuf [2093338/2093338] -> "/tmp/tmp.gykkD9TZqP" [1]

Arkime - Configured - Now continue with step 4 in /opt/arkime/README.txt

4) The Configure script can install OpenSearch/Elasticsearch for you or you can install yourself

5) Initialize/Upgrade OpenSearch/Elasticsearch Arkime configuration

a) If this is the first install, or want to delete all data

/opt/arkime/db/db.pl http://ESHOST:9200 init

b) If this is an update to an Arkime package

/opt/arkime/db/db.pl http://ESHOST:9200 upgrade

6) Add an admin user if a new install or after an init

/opt/arkime/bin/arkime_add_user.sh admin "Admin User" THEPASSWORD --admin

7) Start everything

systemctl start arkimecapture.service

systemctl start arkimeviewer.service

8) Look at log files for errors

/opt/arkime/logs/viewer.log

/opt/arkime/logs/capture.log

9) Visit http://arkimeHOST:8005 with your favorite browser.

user: admin

password: THEPASSWORD from step #6

If you want IP -> Geo/ASN to work, you need to setup a maxmind account and the geoipupdate program.

See https://arkime.com/faq#maxmind

Any configuration changes can be made to /opt/arkime/etc/config.ini

See https://arkime.com/faq#arkime-is-not-working for issues

Additional information can be found at:

* https://arkime.com/faq

* https://arkime.com/settings
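The Configure script asks for a password to encrypt S2S traffic, and this guide uses changeme only as a placeholder. One possible way to produce a random value is sketched below. The function name `gen_secret` is made up for this example, and it assumes that /dev/urandom, head, od, and tr are available, which is the case on a stock Ubuntu system.

```shell
# Generate a random hex string, for example for the S2S encryption password.
# Reads N bytes from /dev/urandom (default 32) and prints 2*N hex characters.
gen_secret() {
    nbytes="${1:-32}"
    head -c "$nbytes" /dev/urandom | od -An -v -tx1 | tr -d ' \n'
    echo
}

secret="$(gen_secret 32)"
echo "generated a ${#secret}-character secret"
```

You never need to type this value in, so a long random string costs nothing in usability.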

Sample Arkime config file;

cat /opt/arkime/etc/config.ini

# Latest settings documentation: https://arkime.com/settings

#

# Arkime capture/viewer uses a tiered system for configuration variables. This allows Arkime

# to share one config file for many machines. The ordering of sections in this

# file doesn't matter.

#

# Order of config variables use:

# 1st) [optional] The section titled with the node name is used first.

# 2nd) [optional] If a node has a nodeClass variable, the section titled with

# the nodeClass name is used next. Sessions will be tagged with

# class: which may be useful if watching different networks.

# 3rd) The section titled "default" is used last.

[default]

# Comma seperated list of OpenSearch/Elasticsearch host:port combinations. If not using a

# Elasticsearch load balancer, a different OpenSearch/Elasticsearch node in the cluster can be

# specified for each Arkime node to help spread load on high volume clusters. For user/password

# use https://user:pass@host:port OR elasticsearchBasicAuth

elasticsearch=http://localhost:9200

# elasticsearchBasicAuth=

# Where the user database is, the above is used if not set

# For user/password use https://user:pass@host:port OR usersElasticsearchBasicAuth

# usersElasticsearch=http://localhost:9200

# usersElasticsearchBasicAuth=

# How often to create a new OpenSearch/Elasticsearch index. hourly,hourly[23468],hourly12,daily,weekly,monthly

# See https://arkime.com/settings#rotateindex

rotateIndex=daily

# Uncomment if this node should process the cron queries and packet search jobs, only ONE node should

# process cron queries and packet search jobs

# cronQueries=true

# Cert file to use, comment out to use http instead

# certFile=/opt/arkime/etc/arkime.cert

# File with trusted roots/certs. WARNING! this replaces default roots

# Useful with self signed certs and can be set per node.

# caTrustFile=/opt/arkime/etc/roots.cert

# Private key file to use, comment out to use http instead

# keyFile=/opt/arkime/etc/arkime.key

# Password Hash Secret - Must be in default section. Since OpenSearch/Elasticsearch

# is wide open by default, we encrypt the stored password hashes with this

# so a malicous person can't insert a working new account.

# Comment out for no user authentication.

# Changing the value will make all previously stored passwords no longer work.

# Make this RANDOM, you never need to type in

passwordSecret=password

# Use a different password for S2S communication then passwordSecret.

# Must be in default section. Make this RANDOM, you never need to type in

#serverSecret=

# HTTP Digest Realm - Must be in default section. Changing the value

# will make all previously stored passwords no longer work

httpRealm=Moloch

# Semicolon ';' seperated list of interfaces to listen on for traffic

interface=ens3

# Host to connect to for wiseService

#wiseHost=127.0.0.1

# Log viewer access requests to a different log file

#accessLogFile=/opt/arkime/logs/access.log

# Control the log format for access requests. This uses URI % encoding.

#accessLogFormat=:date :username %1b[1m:method%1b[0m %1b[33m:url%1b[0m :status :res[content-length] bytes :response-time ms

# The directory to save raw pcap files to

pcapDir=/opt/arkime/raw

# The max raw pcap file size in gigabytes, with a max value of 36G.

# The disk should have room for at least 10*maxFileSizeG

maxFileSizeG=12

# The max time in minutes between rotating pcap files. Default is 0, which means

# only rotate based on current file size and the maxFileSizeG variable

#maxFileTimeM=60

# TCP timeout value. Arkime writes a session record after this many seconds

# of inactivity.

tcpTimeout=600

# Arkime writes a session record after this many seconds, no matter if

# active or inactive

tcpSaveTimeout=720

# UDP timeout value. Arkime assumes the UDP session is ended after this

# many seconds of inactivity.

udpTimeout=30

# ICMP timeout value. Arkime assumes the ICMP session is ended after this

# many seconds of inactivity.

icmpTimeout=10

# An aproximiate maximum number of active sessions Arkime will try and monitor

maxStreams=1000000

# Arkime writes a session record after this many packets

maxPackets=10000

# Delete pcap files when free space is lower then this in gigabytes OR it can be

# expressed as a percentage (ex: 5%). This does NOT delete the session records in

# the database. It is recommended this value is between 5% and 10% of the disk.

# Database deletes are done by the db.pl expire script

freeSpaceG=5%

# The port to listen on, by default 8005

#viewPort=8005

# The host/ip to listen on, by default 0.0.0.0 which is ALL

#viewHost=localhost

# A MaxMind account is now required, Arkime checks several install locations, or

# will work without Geo files installed. See https://arkime.com/faq#maxmind

#geoLite2Country=/var/lib/GeoIP/GeoLite2-Country.mmdb;/usr/share/GeoIP/GeoLite2-Country.mmdb;/opt/arkime/etc/GeoLite2-Country.mmdb

#geoLite2ASN=/var/lib/GeoIP/GeoLite2-ASN.mmdb;/usr/share/GeoIP/GeoLite2-ASN.mmdb;/opt/arkime/etc/GeoLite2-ASN.mmdb

# Path of the rir assignments file

# https://www.iana.org/assignments/ipv4-address-space/ipv4-address-space.csv

rirFile=/opt/arkime/etc/ipv4-address-space.csv

# Path of the OUI file from whareshark

# https://raw.githubusercontent.com/wireshark/wireshark/master/manuf

ouiFile=/opt/arkime/etc/oui.txt

# Arkime rules to allow you specify actions to perform when criteria are met with certain fields or state.

# See https://arkime.com/rulesformat

#rulesFiles=/opt/arkime/etc/arkime.rules

# User to drop privileges to. The pcapDir must be writable by this user or group below

dropUser=nobody

# Group to drop privileges to. The pcapDir must be writable by this group or user above

dropGroup=daemon

# Header to use for determining the username to check in the database for instead of

# using http digest. Use this if apache or something else is doing the auth.

# Set viewHost to localhost or use iptables

# Might need something like this in the httpd.conf

# RewriteRule .* - [E=ENV_RU:%{REMOTE_USER}]

# RequestHeader set ARKIME_USER %{ENV_RU}e

#userNameHeader=arkime_user

#

# Headers to use to determine if user from `userNameHeader` is

# authorized to use the system, and if so create a new user

# in the Arkime user database. This implementation expects that

# the users LDAP/AD groups (or similar) are populated into an

# HTTP header by the Apache (or similar) referenced above.

# The JSON in userAutoCreateTmpl is used to insert the new

# user into the arkime database (if not already present)

# and additional HTTP headers can be sourced from the request

# to populate various fields.

#

# The example below pulls verifies that an HTTP header called `UserGroup`

# is present, and contains the value "ARKIME_ACCESS". If this authorization

# check passes, the user database is inspected for the user in `userNameHeader`

# and if it is not present it is created. The system uses the

# `arkime_user` and `http_auth_mail` headers from the

# request and uses them to populate `userId` and `userName`

# fields for the new user record.

#

# Once the user record is created, this functionaity

# neither updates nor deletes the data, though if the user is no longer

# reported to be in the group, access is denied regardless of the status

# in the arkime database.

#

#requiredAuthHeader="UserGroup"

#requiredAuthHeaderVal="ARKIME_ACCESS"

#userAutoCreateTmpl={"userId": "${this.arkime_user}", "userName": "${this.http_auth_mail}", "enabled": true, "webEnabled": true, "headerAuthEnabled": true, "emailSearch": true, "createEnabled": false, "removeEnabled": false, "packetSearch": true }

# Should we parse extra smtp traffic info

parseSMTP=true

# Should we parse extra smb traffic info

parseSMB=true

# Should we parse HTTP QS Values

parseQSValue=false

# Should we calculate sha256 for bodies

supportSha256=false

# Only index HTTP request bodies less than this number of bytes */

maxReqBody=64

# Only store request bodies that Utf-8?

config.reqBodyOnlyUtf8=true

# Semicolon ';' seperated list of SMTP Headers that have ips, need to have the terminating colon ':'

smtpIpHeaders=X-Originating-IP:;X-Barracuda-Apparent-Source-IP:

# Semicolon ';' seperated list of directories to load parsers from

parsersDir=/opt/arkime/parsers

# Semicolon ';' seperated list of directories to load plugins from

pluginsDir=/opt/arkime/plugins

# Semicolon ';' seperated list of plugins to load and the order to load in

# plugins=tagger.so; netflow.so

# Plugins to load as root, usually just readers

#rootPlugins=reader-pfring; reader-daq.so

# Semicolon ';' seperated list of viewer plugins to load and the order to load in

# viewerPlugins=wise.js

# NetFlowPlugin

# Input device id, 0 by default

#netflowSNMPInput=1

# Outout device id, 0 by default

#netflowSNMPOutput=2

# Netflow version 1,5,7 supported, 7 by default

#netflowVersion=1

# Semicolon ';' seperated list of netflow destinations

#netflowDestinations=localhost:9993

# Specify the max number of indices we calculate spidata for.

# ES will blow up if we allow the spiData to search too many indices.

spiDataMaxIndices=4

# Uncomment the following to allow direct uploads. This is experimental

#uploadCommand=/opt/arkime/bin/capture --copy -n {NODE} -r {TMPFILE} -c {CONFIG} {TAGS}

# Title Template

# _cluster_ = ES cluster name

# _userId_ = logged in User Id

# _userName_ = logged in User Name

# _page_ = internal page name

# _expression_ = current search expression if set, otherwise blank

# _-expression_ = " - " + current search expression if set, otherwise blank, prior spaces removed

# _view_ = current view if set, otherwise blank

# _-view_ = " - " + current view if set, otherwise blank, prior spaces removed

#titleTemplate=_cluster_ - _page_ _-view_ _-expression_

# Number of threads processing packets

packetThreads=2

# ADVANCED - Semicolon ';' seperated list of files to load for config. Files are loaded

# in order and can replace values set in this file or previous files.

#includes=

# ADVANCED - How is pcap written to disk

# simple = use O_DIRECT if available, writes in pcapWriteSize chunks,

# a file per packet thread.

# simple-nodirect = don't use O_DIRECT. Required for zfs and others

pcapWriteMethod=simple

# ADVANCED - Buffer size when writing pcap files. Should be a multiple of the raid 5 or xfs

# stripe size. Defaults to 256k

pcapWriteSize=262143

# ADVANCED - Number of bytes to bulk index at a time

dbBulkSize=300000

# ADVANCED - Max number of connections to OpenSearch/Elasticsearch

maxESConns=30

# ADVANCED - Max number of es requests outstanding in q

maxESRequests=500

# ADVANCED - Number of packets to ask libpcap to read per poll/spin

# Increasing may hurt stats and ES performance

# Decreasing may cause more dropped packets

packetsPerPoll=50000

# ADVANCED - The base path for Arkime web access. Must end with a / or bad things will happen

# Only set when using a reverse proxy

# webBasePath=/arkime/

# DEBUG - Write to stdout info every X packets.

# Set to -1 to never log status

logEveryXPackets=100000

# DEBUG - Write to stdout unknown protocols

logUnknownProtocols=false

# DEBUG - Write to stdout OpenSearch/Elasticsearch requests

logESRequests=true

# DEBUG - Write to stdout file creation information

logFileCreation=true

### High Performance settings

# https://arkime.com/settings#high-performance-settings

# magicMode=basic

# pcapReadMethod=tpacketv3

# tpacketv3NumThreads=2

# pcapWriteMethod=simple

# pcapWriteSize=2560000

# packetThreads=5

# maxPacketsInQueue=200000

### Low Bandwidth settings

# packetThreads=1

# pcapWriteSize=65536

##############################################################################

# override-ips is a special section that overrides the MaxMind databases for

# the fields set, but fields not set will still use MaxMind (example if you set

# tags but not country it will use MaxMind for the country)

# Spaces and capitalization is very important.

# IP Can be a single IP or a CIDR

# Up to 10 tags can be added

#

# ip=tag:TAGNAME1;tag:TAGNAME2;country:3LetterUpperCaseCountry;asn:ASN STRING

#[override-ips]

#10.1.0.0/16=tag:ny-office;country:USA;asn:AS0000 This is an ASN

##############################################################################

# It is possible to define in the config file extra http/email headers

# to index. They are accessed using the expression http. and

# email. with optional .cnt expressions

#

# Possible config atributes for all headers

# type: (string|integer|ip) = data type (default string)

# count: = index count of items (default false)

# unique: = only record unique items (default true)

# headers-http-request is used to configure request headers to index

[headers-http-request]

referer=type:string;count:true;unique:true

authorization=type:string;count:true

content-type=type:string;count:true

origin=type:string

# headers-http-response is used to configure http response headers to index

[headers-http-response]

location=type:string

server=type:string

content-type=type:string;count:true

# headers-email is used to configure email headers to index

[headers-email]

x-priority=type:integer

authorization=type:string

##############################################################################

# If you have multiple clusters and you want the ability to send sessions

# from one cluster to another either manually or with the cron feature fill out

# this section

#[remote-clusters]

#forensics=url:https://viewer1.host.domain:8005;serverSecret:password4arkime;name:Forensics Cluster

#shortname2=url:http://viewer2.host.domain:8123;serverSecret:password4arkime;name:Testing Cluster

# WARNING: This is an ini file with sections, most likely you don't want to put a setting here.

# New settings usually go near the top in the [default] section, or in [nodename] sections.
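Because the file is long, it can be handy to pull out a single setting, for example to confirm that the interface you chose in the Configure script was actually written to the [default] section. The helper below is a minimal sketch: `ini_get` is a name invented for this example, it is not part of Arkime, and it assumes the plain key=value layout without spaces around the equals sign that the sample file uses.

```shell
# Print the value of KEY inside [SECTION] of an INI file.
# Usage: ini_get FILE SECTION KEY
# Lines starting with '#' are treated as comments and ignored.
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^[ \t]*#/ { next }
        /^\[/      { in_section = ($0 == ("[" section "]")); next }
        in_section {
            eq = index($0, "=")
            if (eq > 0 && substr($0, 1, eq - 1) == key) {
                print substr($0, eq + 1)
                exit
            }
        }
    ' "$1"
}

# Demo on a few lines taken from the sample config above.
demo_ini="$(mktemp)"
cat > "$demo_ini" <<'EOF'
[default]
elasticsearch=http://localhost:9200
# viewPort=8005
interface=ens3
[headers-email]
x-priority=type:integer
EOF
ini_get "$demo_ini" default interface
ini_get "$demo_ini" default elasticsearch
rm -f "$demo_ini"
```

Against the real file this would be `ini_get /opt/arkime/etc/config.ini default interface`.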

Running Elasticsearch

Start Elasticsearch and enable it to run on system boot;

systemctl enable --now elasticsearch

Verify that Elasticsearch is running;

curl http://localhost:9200

{

"name" : "ubuntu22",

"cluster_name" : "elasticsearch",

"cluster_uuid" : "bc-twCeeSsSmgjYT6HlkXA",

"version" : {

"number" : "7.11.1",

"build_flavor" : "default",

"build_type" : "deb",

"build_hash" : "ff17057114c2199c9c1bbecc727003a907c0db7a",

"build_date" : "2021-02-15T13:44:09.394032Z",

"build_snapshot" : false,

"lucene_version" : "8.7.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}
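If you want to check the response from a script rather than by eye, the version number can be extracted from the JSON shown above. The sketch below uses a plain sed match, not a real JSON parser, and the function name `es_version` is made up for this example.

```shell
# Extract the "number" field (the Elasticsearch version) from the JSON
# returned by the root endpoint. Reads the JSON on standard input.
es_version() {
    sed -n 's/.*"number"[ ]*:[ ]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Demo on a trimmed copy of the response shown above.
es_version <<'EOF'
{
  "name" : "ubuntu22",
  "version" : {
    "number" : "7.11.1",
    "build_type" : "deb"
  },
  "tagline" : "You Know, for Search"
}
EOF
```

On the server itself you would feed it the live response, for example `curl -s http://localhost:9200 | es_version`.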

Initialize Elasticsearch Arkime configuration

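Run the command below to initialize the Arkime configuration in Elasticsearch. As the README output above notes, init is meant for a first install or for when you want to delete all data.

/opt/arkime/db/db.pl http://localhost:9200 init

Create Arkime Admin User Account

You can use the /opt/arkime/bin/arkime_add_user.sh script to create an Arkime user account;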

/opt/arkime/bin/arkime_add_user.sh --help

addUser.js [] []

Options:

--admin Has admin privileges

--apionly Can only use api, not web pages

--email Can do email searches

--expression Forced user expression

--remove Can remove data (scrub, delete tags)

--webauth Can auth using the web auth header or password

--webauthonly Can auth using the web auth header only, password ignored

--packetSearch Can create a packet search job (hunt)

Config Options:

-c Config file to use

-n Node name section to use in config file

--insecure Allow insecure HTTPS

Run the command below to create an Arkime admin user account. Replace the username and password accordingly.

/opt/arkime/bin/arkime_add_user.sh admin "SuperAdmin" changeme --admin

Running Arkime Services

Arkime is made up of 3 components:

- capture – A threaded C application that monitors network traffic, writes PCAP formatted files to disk, parses the captured packets, and sends metadata (SPI data) to elasticsearch.

- viewer – A node.js application that runs per capture machine. It handles the web interface and transfer of PCAP files.

- elasticsearch – The search database technology powering Arkime.

We already started Elasticsearch.

Now start the Arkime capture and viewer services and enable them to run on system boot;

systemctl enable --now arkimecapture

systemctl enable --now arkimeviewer

Check the status;

systemctl status arkimecapture

● arkimecapture.service - Arkime Capture

Loaded: loaded (/etc/systemd/system/arkimecapture.service; enabled; vendor preset: enabled)

Active: active (running) since Fri 2021-11-12 21:02:08 EAT; 27s ago

Main PID: 4125 (sh)

Tasks: 2 (limit: 1133)

Memory: 30.2M

CPU: 389ms

CGroup: /system.slice/arkimecapture.service

├─4125 /bin/sh -c /opt/arkime/bin/capture -c /opt/arkime/etc/config.ini >> /opt/arkime/logs/capture.log 2>&1

└─4126 /opt/arkime/bin/capture -c /opt/arkime/etc/config.ini

Nov 12 21:02:07 debian11 systemd[1]: Starting Arkime Capture...

Nov 12 21:02:08 debian11 systemd[1]: Started Arkime Capture.

systemctl status arkimeviewer

● arkimeviewer.service - Arkime Viewer

Loaded: loaded (/etc/systemd/system/arkimeviewer.service; enabled; vendor preset: enabled)

Active: active (running) since Fri 2021-11-12 21:02:33 EAT; 48s ago

Main PID: 4147 (sh)

Tasks: 8 (limit: 1133)

Memory: 42.1M

CPU: 2.457s

CGroup: /system.slice/arkimeviewer.service

├─4147 /bin/sh -c /opt/arkime/bin/node viewer.js -c /opt/arkime/etc/config.ini >> /opt/arkime/logs/viewer.log 2>&1

└─4148 /opt/arkime/bin/node viewer.js -c /opt/arkime/etc/config.ini

Nov 12 21:02:33 debian11 systemd[1]: Started Arkime Viewer.

Sometimes, when you reboot your system, Elasticsearch may take a while to start. This can affect the startup of the Arkime capture and viewer services.

To work around this, you can update both the Arkime capture and viewer services so that they only start after Elasticsearch has started. Please note that with this change, traffic is not captured while Elasticsearch is starting.

Add these lines to the Arkime capture and viewer service files;

After=network.target elasticsearch.service

Requires=network.target elasticsearch.service

You can use sed to update these services;

sed -i 's/network.target/network.target elasticsearch.service/' /etc/systemd/system/arkimecapture.service /etc/systemd/system/arkimeviewer.service

sed -i '/After=/a Requires=network.target elasticsearch.service' /etc/systemd/system/arkimecapture.service /etc/systemd/system/arkimeviewer.service

systemctl daemon-reload

This will ensure that Arkime capture and viewer start only after Elasticsearch.
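Because sed -i edits the unit files in place, running the commands a second time would repeat elasticsearch.service on the After= line and append another Requires= line. A cautious approach is to preview the result first. The sketch below applies the same two expressions without -i, so nothing is modified. The function name `preview_unit_patch` and the demo unit file are made up for this example, and GNU sed (the default on Ubuntu) is assumed.

```shell
# Print what a unit file would look like after the two sed edits,
# without changing the file. Assumes GNU sed (one-line 'a' form).
preview_unit_patch() {
    sed -e 's/network.target/network.target elasticsearch.service/' \
        -e '/After=/a Requires=network.target elasticsearch.service' \
        "$1"
}

# Demo on a hypothetical, minimal unit file (not the real Arkime unit).
demo_unit="$(mktemp)"
cat > "$demo_unit" <<'EOF'
[Unit]
Description=Arkime Capture
After=network.target

[Service]
Type=simple
EOF
preview_unit_patch "$demo_unit"
rm -f "$demo_unit"
```

To preview the real change, you could run `preview_unit_patch /etc/systemd/system/arkimecapture.service` and compare the output with the current file.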

Log Files

You can find the Arkime/Moloch and Elasticsearch logs in the following log files;

/opt/arkime/logs/viewer.log

/opt/arkime/logs/capture.log

/var/log/elasticsearch/*

Adjusting Arkime/Moloch configurations;

If you ever want to update the Arkime/Moloch configuration, edit the configuration file /opt/arkime/etc/config.ini.

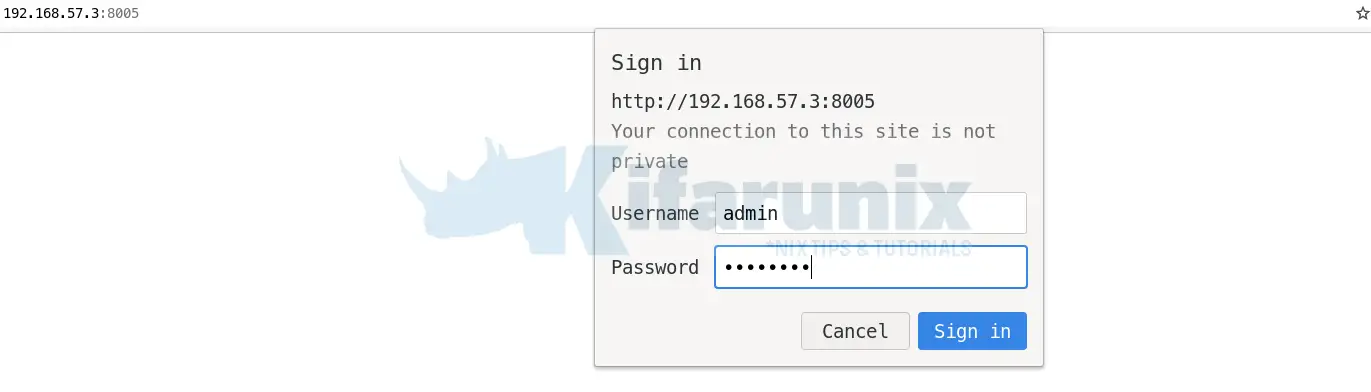

Accessing Arkime/Moloch Web Interface

Arkime listens on port 8005/tcp by default.

If UFW is running, open this port on it to allow external access.

ufw allow 8005/tcp

You can then access Arkime/Moloch using the URL http://MOLOCHHOST:8005 with your favorite browser.

Want to enable HTTPS? See this guide.

You will be prompted to enter the user authentication credentials you created above.

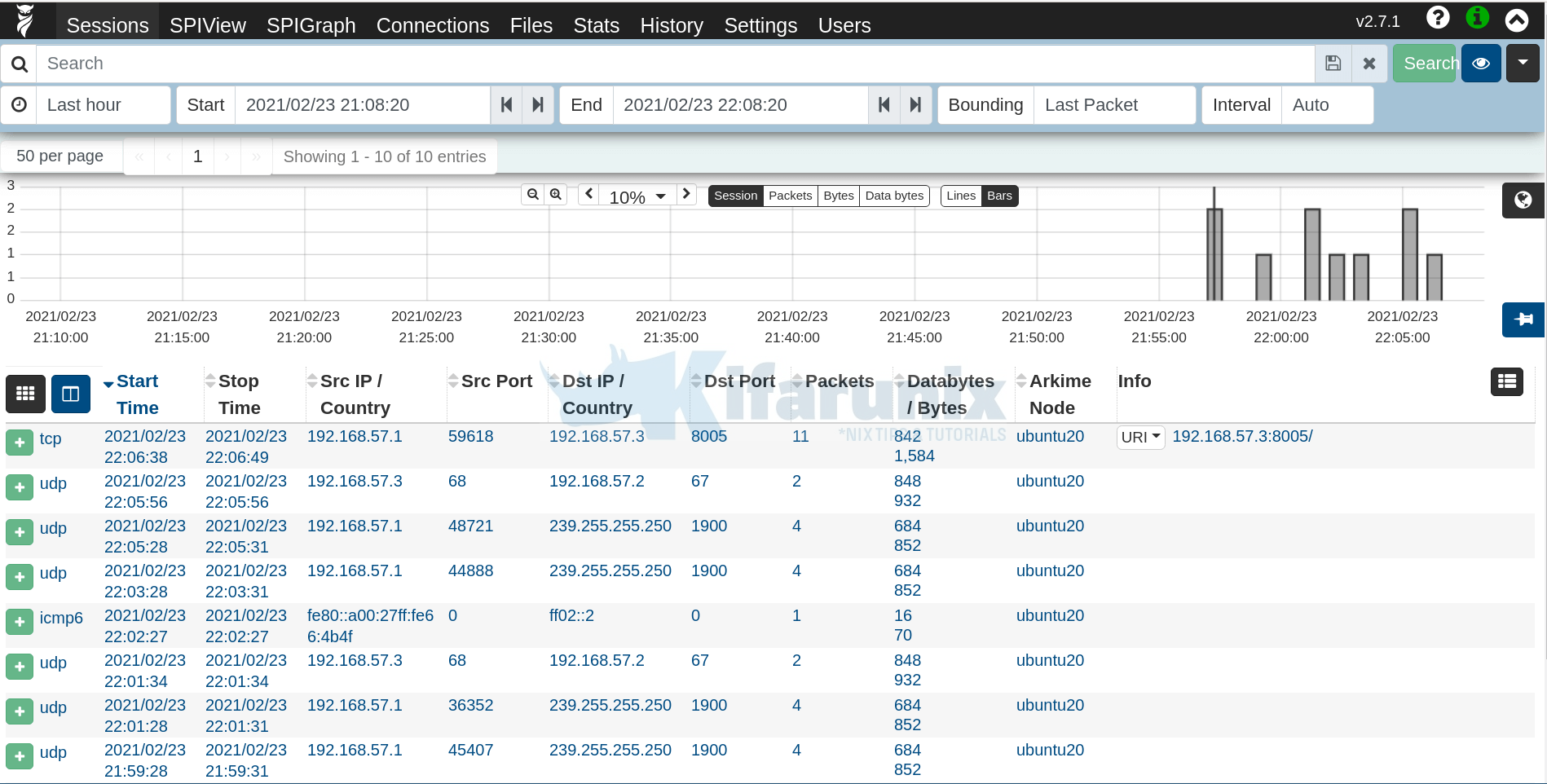

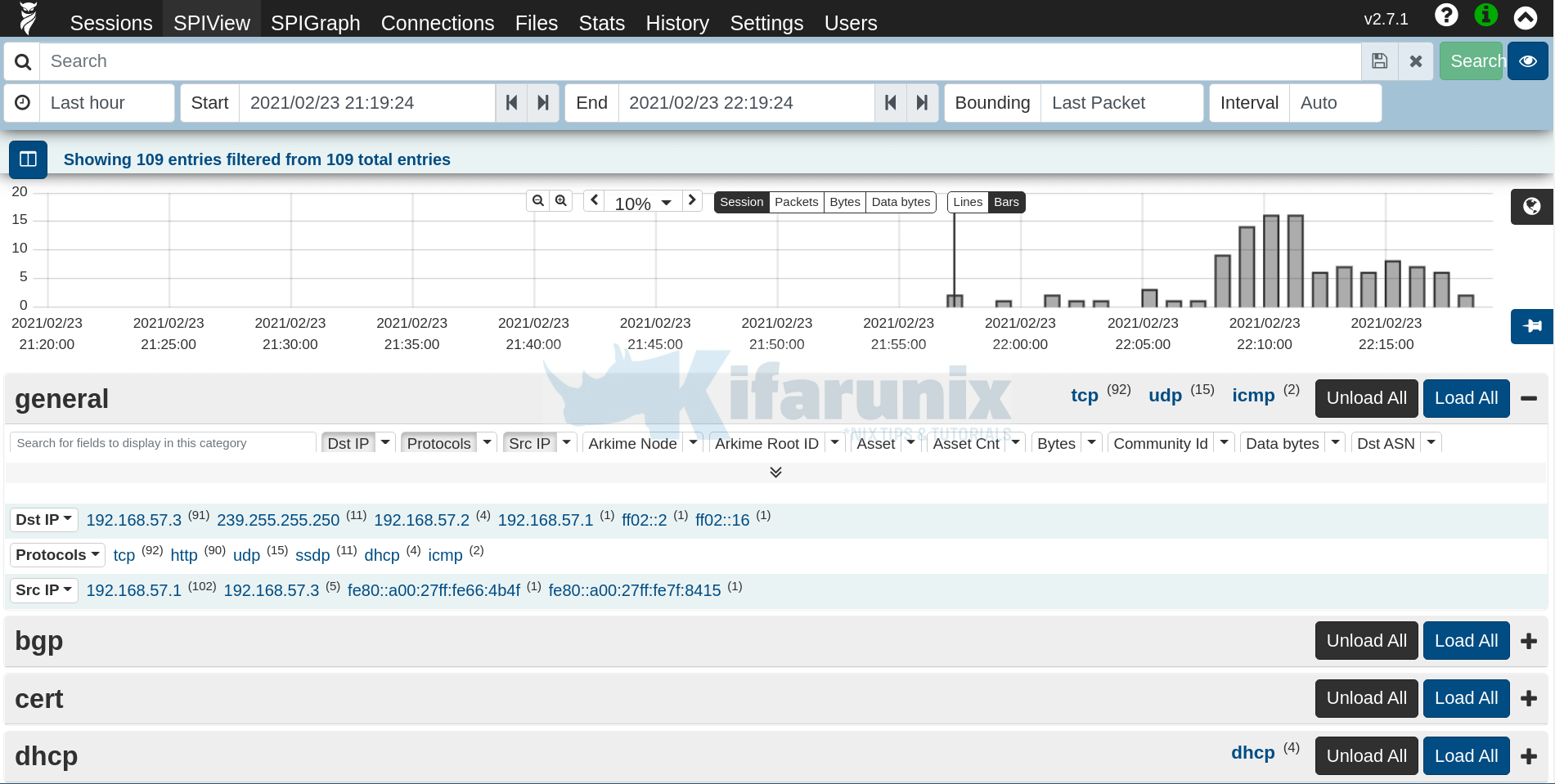

Upon successful authentication, you land on the Arkime web interface.

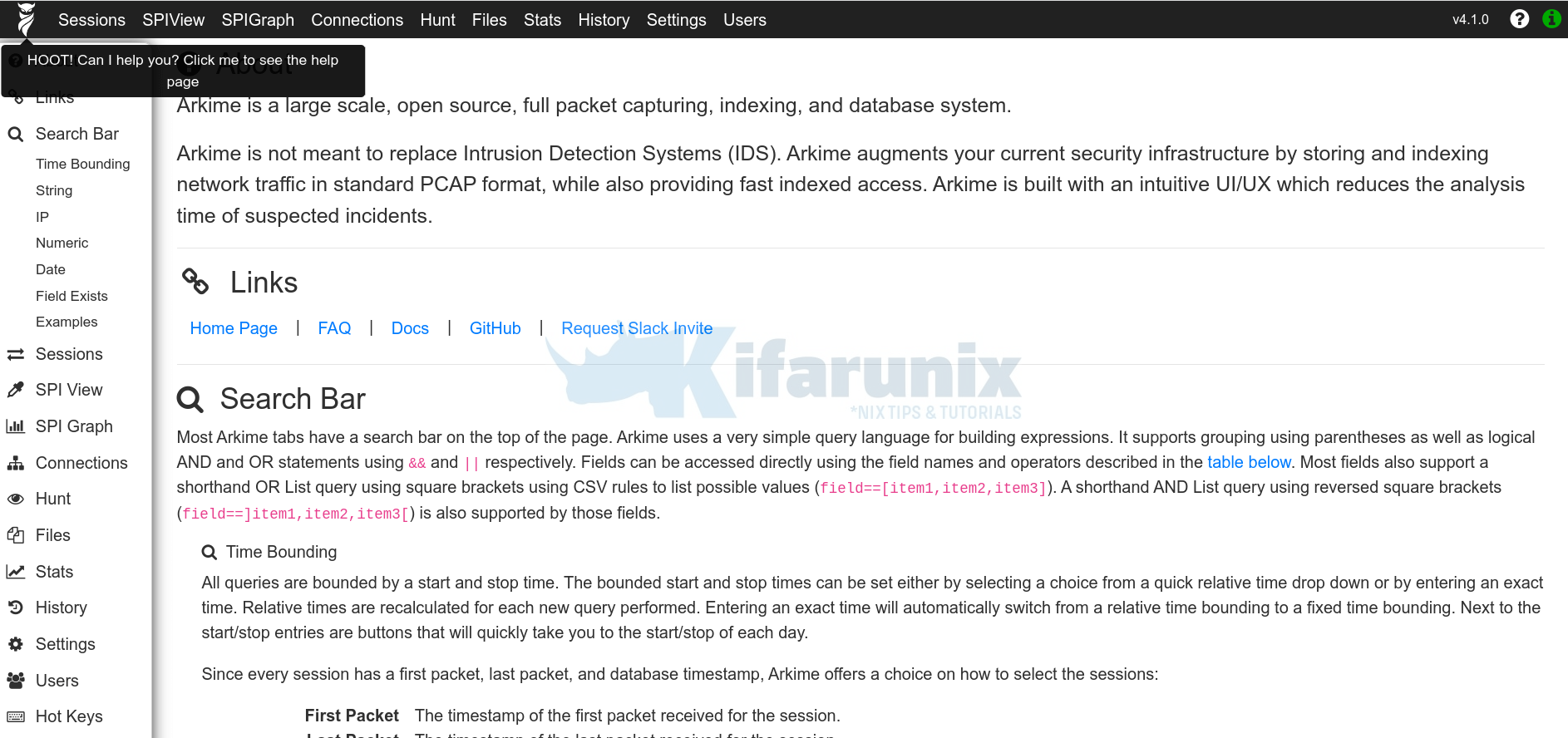

Check the Arkime help page by clicking on the owl at the top right!

And that is how simple it is to install Arkime on Ubuntu.

Reference

Arkime Installation README.txt

Arkime Demo (Credentials: arkime:arkime)

Do you have instructions on how to setup a cert so its using SSL to hit https://MOLOCHHOST:8005 vs http?

Hi Kevin,

Please refer here.

Hi,

While installing it, I got an error when running this command:

systemctl enable --now arkimeviewer

Error: Failed to enable unit: Unit file /etc/systemd/system/arkimeviewer.service is masked

You can try to unmask the service and then start it again.
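For the masked unit error reported above, it may help to confirm the state before changing anything. systemd marks a unit as masked by making its unit file a symlink to /dev/null, so a small check like the sketch below can tell you whether that is the case. The function name `is_masked_unit` and the demo unit name are made up for this example.

```shell
# Return success if the given unit file path is masked, that is, a symlink
# that resolves to /dev/null.
is_masked_unit() {
    [ -L "$1" ] && [ "$(readlink -f "$1")" = "/dev/null" ]
}

# Demo in a temporary directory with a hypothetical unit name.
demo_dir="$(mktemp -d)"
ln -s /dev/null "$demo_dir/example.service"
if is_masked_unit "$demo_dir/example.service"; then
    echo "example.service is masked"
fi
rm -rf "$demo_dir"
```

On the affected host you would point it at /etc/systemd/system/arkimeviewer.service. If it reports the unit as masked, `systemctl unmask arkimeviewer` removes the mask, as suggested in the reply above.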