In this guide, you will learn how to deploy AWX on a Kubernetes cluster using the AWX Operator. AWX is an open source Ansible project that provides a web-based user interface, a REST API, and a task engine for Ansible. It is the upstream project from which the automation controller (formerly Ansible Tower) component in Red Hat Ansible Automation Platform is derived. The AWX Operator, on the other hand, is a Kubernetes operator that provides an efficient way to install, configure, and manage the lifecycle of AWX on a Kubernetes cluster or OpenShift environment.


Deploy AWX on Kubernetes Cluster using AWX Operator

The AWX Operator is currently the recommended way to deploy AWX on a Kubernetes or OpenShift cluster.

To deploy AWX on a Kubernetes cluster using the AWX Operator, proceed as follows.

Setup Kubernetes Cluster

Before you can proceed, you need to have a Kubernetes cluster (or OpenShift, if you are using it) up and running.

In our previous guides, we have extensively covered how to deploy a single-master or multi-master Kubernetes cluster.

Check the links below for more details:

Install and Setup Kubernetes Cluster on RHEL 9

Setup Kubernetes cluster on Ubuntu

In our current cluster, we are running a kubeadm Kubernetes cluster with a single master and three worker nodes.

kubectl get nodes

NAME                  STATUS   ROLES           AGE     VERSION
k8s-rhel-node-ms-01   Ready    control-plane   3h15m   v1.32.1
k8s-rhel-node-wk-01   Ready    <none>          157m    v1.32.1
k8s-rhel-node-wk-02   Ready    <none>          155m    v1.32.1
k8s-rhel-node-wk-03   Ready    <none>          155m    v1.32.1

You can use Minikube if you want.

Deploy AWX Operator on Kubernetes cluster

Once the Kubernetes cluster is up and running, proceed to deploy the AWX Operator.

Create AWX Operator Namespace

The default namespace for the AWX Operator is typically awx. Since we are taking a different installation approach of setting up the StorageClass (SC), Persistent Volumes (PV) and Persistent Volume Claims (PVC) before deploying the AWX operator, let’s begin by creating the namespace.

kubectl create namespace awx

Ensure Database Data Persistence via Persistent Volumes

As you might already know, AWX uses PostgreSQL as its database backend to store its data, including information about job runs, inventories, users, credentials, and other AWX-related configurations. AWX deployments include an embedded PostgreSQL database, which is deployed alongside AWX.

Therefore, if you want to make sure that the database data persists beyond pod restarts, you can use Persistent Volumes (PVs) to store the database data.

For the purposes of this demo, we will use local filesystem storage to provision our AWX database PV. Essentially, to create a PV:

- Define your storage backend using StorageClass

- Create a PV that defines the actual storage space that Kubernetes can use to persist data for the app.

- Create a PVC to request a specific amount of storage from a PV (if a PV matching the request is available) or directly from the StorageClass if there is no matching PV.

1. Create StorageClass

There are different storage provisioners that you can configure for use by the AWX operator. However, since we are running a local on-premises Kubernetes cluster, we will use the local StorageClass provisioner. Note that the local StorageClass provisioner is not usually recommended for production environments!

Thus, create a manifest to define the local StorageClass provisioner.

vim ~/local-storageclass.yaml

Paste the content below. You can change the metadata name as you wish.

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer

Create the StorageClass;

kubectl apply -f ~/local-storageclass.yaml

You can check the status of the StorageClass;

kubectl get sc -n awx

NAME            PROVISIONER                    RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
local-storage   kubernetes.io/no-provisioner   Delete          WaitForFirstConsumer   false                  7s

2. Create AWX Operator Storage Persistent Volume

Now that you have defined the type of StorageClass to be used, proceed to define the actual storage resource for AWX on Kubernetes (Persistent Volume, PV).

We have three worker nodes in our cluster:

kubectl get nodes

NAME                  STATUS   ROLES           AGE   VERSION
k8s-rhel-node-ms-01   Ready    control-plane   14h   v1.32.1
k8s-rhel-node-wk-01   Ready    <none>          13h   v1.32.1
k8s-rhel-node-wk-02   Ready    <none>          13h   v1.32.1
k8s-rhel-node-wk-03   Ready    <none>          13h   v1.32.1

As such, we will create one PV per worker node, each pinned to its node, so that a pod scheduled on any of the nodes uses a PV defined on that same node. This ensures there is no data corruption resulting from multiple pods on different nodes writing to the same storage.

vim ~/awx-pv.yaml

The contents of the file;

apiVersion: v1
kind: PersistentVolume
metadata:
  name: awx-pg-wk-01-pv
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /mnt/k8s/awx/pg/
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - k8s-rhel-node-wk-01
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: awx-pg-wk-02-pv
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /mnt/k8s/awx/pg/
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - k8s-rhel-node-wk-02
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: awx-pg-wk-03-pv
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /mnt/k8s/awx/pg/
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - k8s-rhel-node-wk-03

As a summary, this YAML file defines three PersistentVolumes (PVs) for AWX PostgreSQL data storage in a Kubernetes environment. Each volume is configured with the following key points:

- Storage Class: Uses the local-storage class created before for local disk provisioning.

- Capacity: 10Gi per volume.

- Reclaim Policy: Retain (the PV will not be deleted when the PVC is removed).

- Node Affinity: Each PV is restricted to a specific node (k8s-rhel-node-wk-01, k8s-rhel-node-wk-02, and k8s-rhel-node-wk-03).

The local storage path, /mnt/k8s/awx/pg/, must already exist on the node before this PV is used.
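Since the three PV definitions differ only in the PV name and the node hostname, they could also be generated from a single template. The following is a minimal sketch under that assumption; the output path /tmp/awx-pv-generated.yaml is hypothetical, while the node names, capacity and storage path are taken from the manifest in this guide.

```shell
#!/bin/sh
# Sketch: generate one local PV per worker node from a single template.
# OUT is a hypothetical output path; adjust it to your liking.
OUT="${OUT:-/tmp/awx-pv-generated.yaml}"
: > "$OUT"   # start with an empty file

# The suffixes 01..03 match the worker node names used in this guide.
for n in 01 02 03; do
  cat >> "$OUT" <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: awx-pg-wk-${n}-pv
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /mnt/k8s/awx/pg/
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - k8s-rhel-node-wk-${n}
EOF
done

echo "Wrote $(grep -c '^kind: PersistentVolume$' "$OUT") PersistentVolume definitions to $OUT"
```

The generated file should be equivalent to the hand-written manifest and could be applied with kubectl apply -f in its place.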

So, let’s create the storage path on each worker node.

sudo mkdir -p /mnt/k8s/awx/pg

For reference, SELinux is running in permissive mode on our nodes;

getenforce

Permissive

Apply the YAML file to create the PV.

kubectl apply -f ~/awx-pv.yaml

Confirm the PV creation.

kubectl get pv -n awx

NAME              CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS    VOLUMEATTRIBUTESCLASS   REASON   AGE
awx-pg-wk-01-pv   10Gi       RWO            Retain           Available           local-storage   <unset>                          10s
awx-pg-wk-02-pv   10Gi       RWO            Retain           Available           local-storage   <unset>                          10s
awx-pg-wk-03-pv   10Gi       RWO            Retain           Available           local-storage   <unset>                          10s

3. Create Persistent Volume Claim for AWX PostgreSQL DB

Next, create the PVC for the AWX PostgreSQL database. By default, AWX operator uses a PVC configured as follows;

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  creationTimestamp: "2025-01-19T11:19:17Z"
  finalizers:
    - kubernetes.io/pvc-protection
  labels:
    app.kubernetes.io/component: database
    app.kubernetes.io/instance: postgres-15-awx-demo
    app.kubernetes.io/managed-by: awx-operator
    app.kubernetes.io/name: postgres-15
  name: postgres-15-awx-demo-postgres-15-0
  namespace: awx
  resourceVersion: "37082"
  uid: c07fa7f4-ed6b-4d83-bb6d-b820fd1143d4
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 8Gi
  volumeMode: Filesystem
status:
  phase: Pending

We will just modify this PVC to use our StorageClass and PV. Here is a modified version.

vim ~/awx-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  finalizers:
    - kubernetes.io/pvc-protection
  labels:
    app.kubernetes.io/component: database
    app.kubernetes.io/instance: postgres-15-awx-demo
    app.kubernetes.io/managed-by: awx-operator
    app.kubernetes.io/name: postgres-15
  name: postgres-15-awx-demo-postgres-15-0
  namespace: awx
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: local-storage
  volumeMode: Filesystem

Create the AWX PostgreSQL PVC;

kubectl apply -f ~/awx-pvc.yaml

Confirm;

kubectl get pvc -n awx

NAME                                 STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS    VOLUMEATTRIBUTESCLASS   AGE
postgres-15-awx-demo-postgres-15-0   Pending                                      local-storage   <unset>                 61s

As you can see, it is not bound yet because no pod has requested the storage yet. With the volume binding mode set to WaitForFirstConsumer, binding is delayed until a pod that uses the PVC is scheduled.

Accessing AWX Projects from the Host Level

To be able to access the projects directory (where Ansible playbooks and related files are stored), /var/lib/awx/projects/, from the host level, you need to ensure that this directory is also backed by a Persistent Volume (PV) which can be accessed from the host file system. We will use an NFS share for this.

Configure Persistent Volume for Projects Directory via NFS share:

We already have an NFS share, /mnt/awx/projects, to use for our AWX projects PV.

Let’s create a PV and PVC manifest.

vim ~/awx-projects-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: awx-projects-pv
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 2Gi
  storageClassName: ""
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /mnt/awx/projects
    server: 192.168.233.181
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: awx-projects-pvc
  namespace: awx
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi

Apply the manifest to create the PV and PVC for AWX projects.

kubectl apply -f ~/awx-projects-pv.yaml

So, we now have PVs for the database and projects;

kubectl get pv,pvc -n awx

NAME                               CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM                  STORAGECLASS    VOLUMEATTRIBUTESCLASS   REASON   AGE
persistentvolume/awx-pg-wk-01-pv   10Gi       RWO            Retain           Available                          local-storage   <unset>                          7m22s
persistentvolume/awx-pg-wk-02-pv   10Gi       RWO            Retain           Available                          local-storage   <unset>                          7m22s
persistentvolume/awx-pg-wk-03-pv   10Gi       RWO            Retain           Available                          local-storage   <unset>                          7m22s
persistentvolume/awx-projects-pv   2Gi        RWX            Retain           Bound       awx/awx-projects-pvc                   <unset>                          4m19s

NAME                                                       STATUS    VOLUME            CAPACITY   ACCESS MODES   STORAGECLASS    VOLUMEATTRIBUTESCLASS   AGE
persistentvolumeclaim/awx-projects-pvc                     Bound     awx-projects-pv   2Gi        RWX                            <unset>                 2m49s
persistentvolumeclaim/postgres-15-awx-demo-postgres-15-0   Pending                                               local-storage   <unset>                 5m9s

Deploy AWX Operator

While you can clone the AWX operator repository and deploy it from the repository directory on your system, you can also use a Kustomization file instead. Since we need to customize our deployment with the custom StorageClass as well as the PV/PVC created above, we will use the Kustomization route.

Here is a sample Kustomization file contents;

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  # Find the latest tag here: https://github.com/ansible/awx-operator/releases
  - github.com/ansible/awx-operator/config/default?ref=<tag>

# Set the image tags to match the git version from above
images:
  - name: quay.io/ansible/awx-operator
    newTag: <tag>

# Specify a custom namespace in which to install AWX
namespace: awx

As such, let’s create a directory in which we will create our AWX operator kustomization file.

mkdir awx

Navigate into the directory and create a Kustomization file.

cd awx

vim kustomization.yaml

Paste the contents below;

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: awx
resources:
  # Find the latest tag here: https://github.com/ansible/awx-operator/releases
  - github.com/ansible/awx-operator/config/default?ref=2.19.1

# Set the image tags to match the git version from above
images:
  - name: quay.io/ansible/awx-operator
    newTag: 2.19.1

Deploy the AWX operator first so that the necessary CRDs and other configs are created before you customize the AWX deployment.

kubectl apply -k .

At a high level, this is what happens:

- Configuring the AWX namespace for organizing resources.

- Defining Custom Resource Definitions (CRDs) for AWX-related components like backups, restores, and mesh ingress.

- Setting up service accounts and RBAC roles to manage permissions for the AWX operator.

- Configuring services and deployments for the AWX operator to run and expose necessary metrics.

Next, we can customize the AWX deployment to define the persistent storage for the database and projects. Below is our manifest to make these updates.

cat awx-configs.yaml

apiVersion: awx.ansible.com/v1beta1
kind: AWX
metadata:
  name: awx-demo
  namespace: awx
spec:
  service_type: nodeport
  nodeport_port: 31500 # You can remove this to assign a random port or change it to your preference
  ingress_type: none
  projects_persistence: true
  projects_existing_claim: awx-projects-pvc
  postgres_init_container_resource_requirements: {}
  postgres_data_volume_init: true
  postgres_storage_class: local-storage
  postgres_init_container_commands: |
    chown -R 26:26 /var/lib/pgsql/data

Update the file accordingly.

Refer to the documentation for more explanation on the configuration parameters used.

To apply these changes, edit the kustomization file and include this manifest under the resources section.

cat kustomization.yaml

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: awx
resources:
  # Find the latest tag here: https://github.com/ansible/awx-operator/releases
  - github.com/ansible/awx-operator/config/default?ref=2.19.1
  - awx-configs.yaml

# Set the image tags to match the git version from above
images:
  - name: quay.io/ansible/awx-operator
    newTag: 2.19.1
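Before applying a kustomization, it can help to render it and review what will be created. The sketch below wraps kubectl kustomize, the renderer built into kubectl, in a small helper. The helper name render_kustomization and the output path are hypothetical, and rendering a remote base such as the one above is assumed to need git and network access on the machine where it runs.

```shell
#!/bin/sh
# Sketch: render the kustomization to a file for review before applying it.
# render_kustomization is a hypothetical helper, not part of kubectl.
render_kustomization() {
  dir="${1:-.}"                        # directory containing kustomization.yaml
  out="${2:-/tmp/awx-rendered.yaml}"   # hypothetical output path
  kubectl kustomize "$dir" > "$out" || return 1
  # Count the rendered objects per kind so the AWX resource is easy to spot.
  grep '^kind:' "$out" | sort | uniq -c
}

# Usage, from the directory that holds kustomization.yaml:
#   render_kustomization . /tmp/awx-rendered.yaml
```

If the summary lists a kind: AWX entry alongside the operator objects, the awx-configs.yaml manifest has been picked up.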

Apply the Kustomization to deploy AWX on the Kubernetes cluster.

kubectl apply -k .

It will take a while before AWX and the other services are up and running.

You can list all resources created in the awx namespace as follows;

kubectl get all -n awx

You can also watch the pods;

kubectl get pods -n awx -w

NAME                                               READY   STATUS     RESTARTS   AGE
awx-demo-postgres-15-0                             1/1     Running    0          117s
awx-demo-task-5d5b6b856b-lhdlx                     0/4     Init:0/3   0          91s
awx-demo-web-7cb57bb787-h846q                      3/3     Running    0          92s
awx-operator-controller-manager-58b7c97f4b-9h8fm   2/2     Running    0          3m22s

Or watch the events;

kubectl get events -n awx -w

Sample events:

0s Normal Scheduled pod/awx-demo-migration-24.6.1-kdtmp Successfully assigned awx/awx-demo-migration-24.6.1-kdtmp to k8s-rhel-node-wk-02

0s Normal Pulled pod/awx-demo-migration-24.6.1-kdtmp Container image "quay.io/ansible/awx:24.6.1" already present on machine

0s Normal Created pod/awx-demo-migration-24.6.1-kdtmp Created container: migration-job

0s Normal Started pod/awx-demo-migration-24.6.1-kdtmp Started container migration-job

0s Normal Completed job/awx-demo-migration-24.6.1 Job completed

0s Normal Pulling pod/awx-demo-task-77ddf68cf8-snzkh Pulling image "quay.io/ansible/awx-ee:24.6.1"

0s Normal Pulled pod/awx-demo-task-77ddf68cf8-snzkh Successfully pulled image "quay.io/ansible/awx-ee:24.6.1" in 26.016s (26.016s including waiting). Image size: 468650745 bytes.

0s Normal Created pod/awx-demo-task-77ddf68cf8-snzkh Created container: init-receptor

0s Normal Started pod/awx-demo-task-77ddf68cf8-snzkh Started container init-receptor

0s Normal Pulling pod/awx-demo-task-77ddf68cf8-snzkh Pulling image "quay.io/centos/centos:stream9"

0s Normal Pulled pod/awx-demo-task-77ddf68cf8-snzkh Successfully pulled image "quay.io/centos/centos:stream9" in 1.897s (1.897s including waiting). Image size: 60726367 bytes.

0s Normal Created pod/awx-demo-task-77ddf68cf8-snzkh Created container: init-projects

0s Normal Started pod/awx-demo-task-77ddf68cf8-snzkh Started container init-projects

0s Normal Pulling pod/awx-demo-task-77ddf68cf8-snzkh Pulling image "docker.io/redis:7"

0s Normal Pulled pod/awx-demo-task-77ddf68cf8-snzkh Successfully pulled image "docker.io/redis:7" in 4.069s (4.069s including waiting). Image size: 45006722 bytes.

0s Normal Created pod/awx-demo-task-77ddf68cf8-snzkh Created container: redis

0s Normal Started pod/awx-demo-task-77ddf68cf8-snzkh Started container redis

0s Normal Pulled pod/awx-demo-task-77ddf68cf8-snzkh Container image "quay.io/ansible/awx:24.6.1" already present on machine

0s Normal Created pod/awx-demo-task-77ddf68cf8-snzkh Created container: awx-demo-task

0s Normal Started pod/awx-demo-task-77ddf68cf8-snzkh Started container awx-demo-task

0s Normal Pulled pod/awx-demo-task-77ddf68cf8-snzkh Container image "quay.io/ansible/awx-ee:24.6.1" already present on machine

0s Normal Created pod/awx-demo-task-77ddf68cf8-snzkh Created container: awx-demo-ee

0s Normal Started pod/awx-demo-task-77ddf68cf8-snzkh Started container awx-demo-ee

0s Normal Pulled pod/awx-demo-task-77ddf68cf8-snzkh Container image "quay.io/ansible/awx:24.6.1" already present on machine

0s Normal Created pod/awx-demo-task-77ddf68cf8-snzkh Created container: awx-demo-rsyslog

0s Normal Started pod/awx-demo-task-77ddf68cf8-snzkh Started container awx-demo-rsyslog

Pods at the end of setup;

kubectl get pod -n awx

NAME                                               READY   STATUS      RESTARTS        AGE
awx-demo-migration-24.6.1-kdtmp                    0/1     Completed   0               7m25s
awx-demo-postgres-15-0                             1/1     Running     0               27m
awx-demo-task-77ddf68cf8-snzkh                     4/4     Running     0               24m
awx-demo-web-6f644fc5-vkg7v                        3/3     Running     5 (9m18s ago)   24m
awx-operator-controller-manager-58b7c97f4b-ggfjq   2/2     Running     2 (25m ago)     30m
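Instead of watching the pods by hand, a script could block until the AWX workloads finish rolling out. The sketch below uses kubectl rollout status for that. It assumes the Deployments are named awx-demo-web and awx-demo-task, which is inferred from the pod names in the listing and not confirmed elsewhere in this guide; the helper name wait_for_awx and the 900s timeout are likewise arbitrary choices.

```shell
#!/bin/sh
# Sketch: wait for the AWX web and task Deployments to finish rolling out.
# Assumption: Deployments are named <name>-web and <name>-task.
wait_for_awx() {
  ns="${1:-awx}"           # namespace AWX was deployed into
  name="${2:-awx-demo}"    # metadata.name of the AWX resource
  timeout="${3:-900s}"     # arbitrary upper bound per Deployment
  for component in web task; do
    kubectl -n "$ns" rollout status "deployment/${name}-${component}" \
      --timeout="$timeout" || return 1
  done
}

# Usage (requires kubectl access to the cluster):
#   wait_for_awx awx awx-demo 900s
```

Note that the operator creates these Deployments some time after the AWX resource is applied, so the helper returns an error if it is called before they exist.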

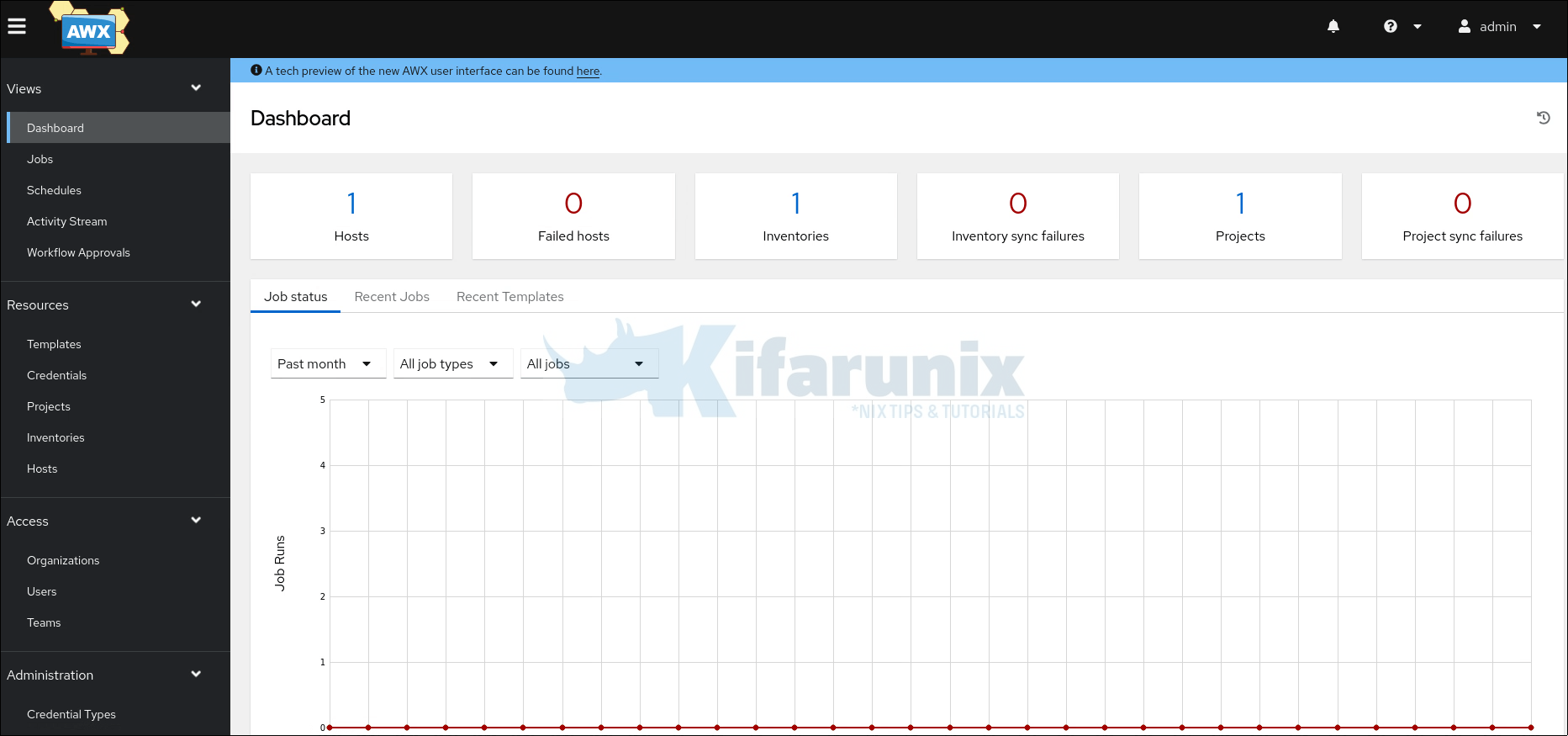

Accessing AWX Web Interface

By now, AWX should be up and running on the Kubernetes cluster.

If you check the available services;

kubectl get svc -n awx

NAME                                              TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
awx-demo-postgres-15                              ClusterIP   None             <none>        5432/TCP       47m
awx-demo-service                                  NodePort    10.111.226.182   <none>        80:31500/TCP   44m
awx-operator-controller-manager-metrics-service   ClusterIP   10.110.9.218     <none>        8443/TCP       51m

As you can see, the web service is exposed via NodePort on port 31500/tcp. This is the port that was defined in the awx-configs.yaml configuration file above. You should be able to access the AWX service via the IP of any node in the cluster.

Remember, we are running our Kubernetes cluster on RHEL 9 nodes, and since we are using Calico CNI, we have disabled firewalld. Hence, the service should be accessible without any further changes.

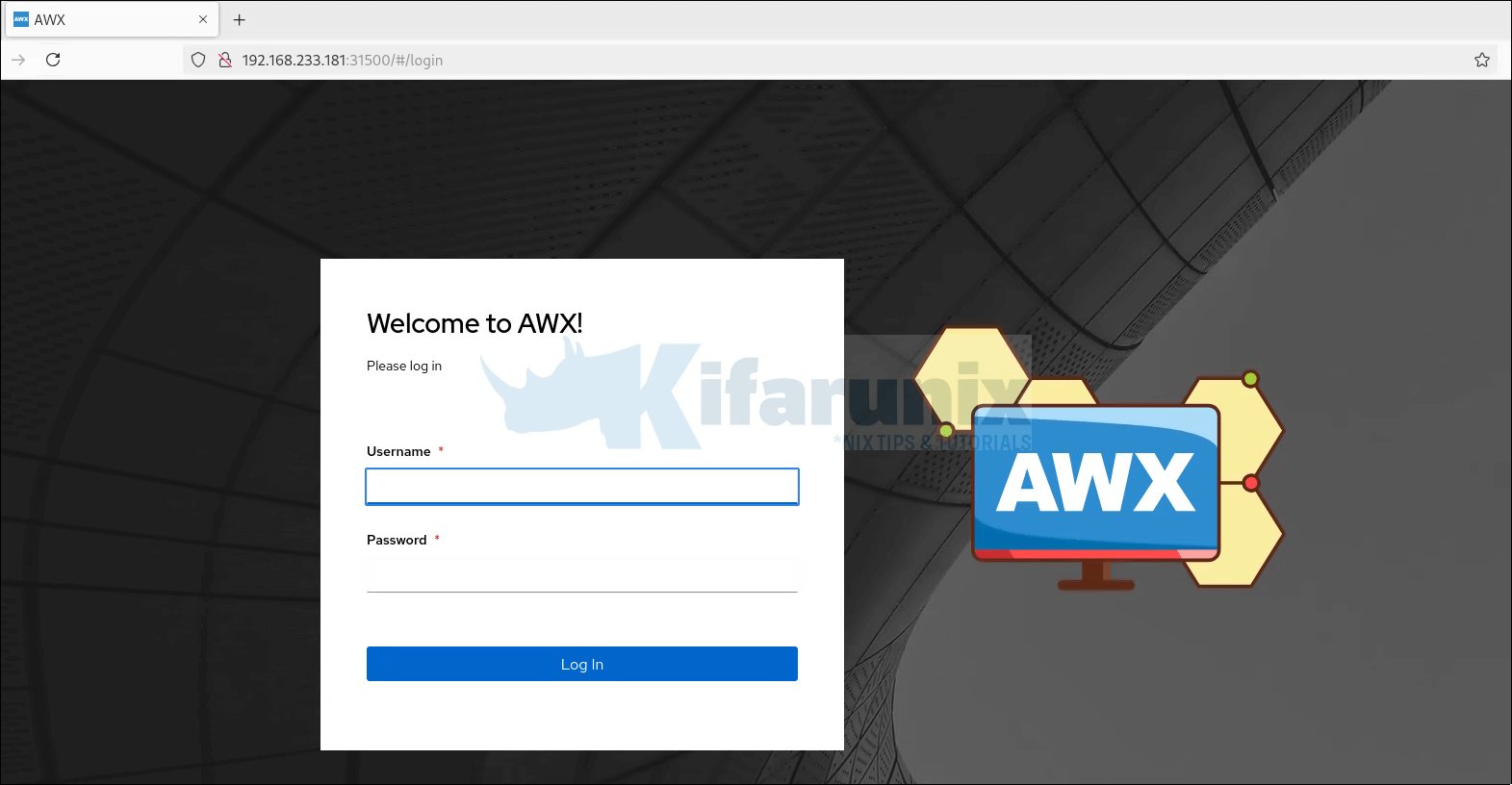

Access the AWX web interface via http://<any-cluster-node-IP>:NodePort. For example, http://192.168.233.181:31500.
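Before opening the browser, the service can be probed from the command line. The following is a minimal sketch, assuming curl is installed and that the /api/v2/ping/ endpoint answers without authentication, as it does in upstream AWX. The helper names awx_url and awx_ping are hypothetical.

```shell
#!/bin/sh
# Sketch: build the AWX base URL from a node IP and NodePort, then probe the API.
awx_url() {
  # $1 = node IP or hostname, $2 = NodePort
  printf 'http://%s:%s' "$1" "$2"
}

awx_ping() {
  # Returns non-zero if the endpoint is unreachable or answers with an HTTP error.
  curl --silent --show-error --fail --max-time 10 \
    "$(awx_url "$1" "$2")/api/v2/ping/"
}

# Usage, with the example address from this guide:
#   awx_ping 192.168.233.181 31500
```

A JSON reply suggests that the web pod is serving requests. A connection error usually points at the NodePort, a firewall, or pods that are not ready yet.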

The default username for AWX web is admin. You can extract the login password from the AWX admin password secret.

kubectl get secrets -n awx

NAME                              TYPE                DATA   AGE
awx-demo-admin-password           Opaque              1      17h
awx-demo-app-credentials          Opaque              3      17h
awx-demo-broadcast-websocket      Opaque              1      17h
awx-demo-postgres-configuration   Opaque              6      17h
awx-demo-receptor-ca              kubernetes.io/tls   2      17h
awx-demo-receptor-work-signing    Opaque              2      17h
awx-demo-secret-key               Opaque              1      17h
redhat-operators-pull-secret      Opaque              1      17h

Check the password fields;

kubectl get secret awx-demo-admin-password -n awx -o json

{
  "apiVersion": "v1",
  "data": {
    "password": "U29lV1h5cndNNEtuTWcyWHZuS1RjWm5hSXZtZHhpZUk="
  },
  "kind": "Secret",
  "metadata": {
    "annotations": {
...

The secret values are Base64 encoded. Extract and decode the password.

kubectl get secret awx-demo-admin-password -n awx -o jsonpath='{.data.password}' | base64 --decode; echo

The resulting string should be your password. Use it to log in to the AWX web interface as user admin.
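To see what the decoding step does without touching the cluster, the sample value from the JSON output in this guide can be decoded on its own. This is only an illustration of the Base64 step; your own secret will hold a different value.

```shell
#!/bin/sh
# Sketch: decode the sample Base64 string shown in the secret output in this guide.
encoded='U29lV1h5cndNNEtuTWcyWHZuS1RjWm5hSXZtZHhpZUk='

# printf avoids feeding a trailing newline into base64.
decoded="$(printf '%s' "$encoded" | base64 --decode)"

echo "Decoded password is ${#decoded} characters long"
```

Printing only the length keeps the password out of the terminal scrollback; echo the decoded variable instead if you need the value itself.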

And that is it!

Confirm that the DB data is available on worker nodes Filesystem. You can do this by checking where the Pod is currently running and checking the presence of data on the respective node filesystem path

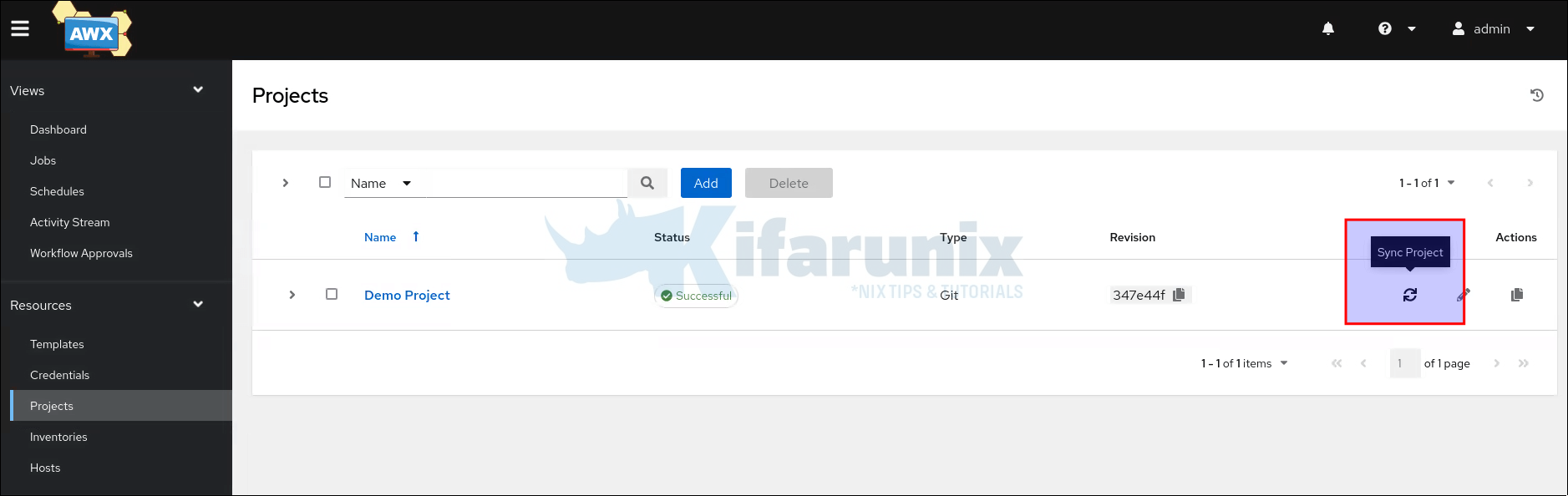

kubectl get pod awx-demo-postgres-15-0 -o wide -n awxFor the AWX projects, you can validate by navigating to the AWX web dashboard, head over to projects. You will see Demo project. Click the Sync button to sync the project data to the underlying storage. In this case, our storage path is an NFS share.

You should then be able to see project files;

ll /mnt/awx/projects/

total 0
drwxr-xr-x. 3 kifarunix root 58 Jan 23 08:57 _6__demo_project
-rwxr-xr-x. 1 kifarunix root  0 Jan 23 08:57 _6__demo_project.lock

You can now start creating your projects here and sync them to AWX.

That marks the end of this tutorial on how to deploy AWX on a Kubernetes cluster using the AWX Operator.