In this blog post, you will learn about StatefulSets in Kubernetes: what they are and what they are for, why they matter for stateful applications, and how they differ from other Kubernetes objects like Deployments. By the end, you’ll have a thorough understanding of how to create, manage, and scale StatefulSets.

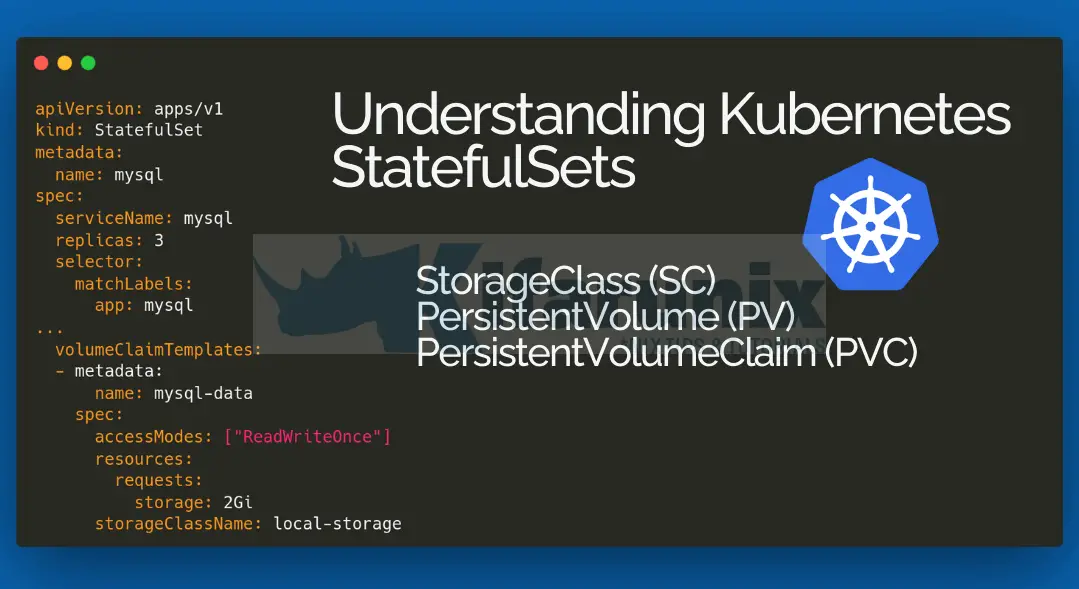

Understanding StatefulSets in Kubernetes

What are StatefulSets?

A StatefulSet in Kubernetes is a workload API object specifically designed for managing stateful applications that require persistent storage and stable network identities. It guarantees the ordering, identity, and persistence of the Pods in your stateful application. Imagine a database in a Kubernetes cluster. Each database Pod represents an instance, but the data itself needs to persist across restarts or Pod scaling. This is where StatefulSets come into play.

Core Concepts in Kubernetes StatefulSets

There are a number of Kubernetes concepts that are used in StatefulSets that you should be familiar with. These include:

- Pod: As you already know, a Pod is the smallest deployable unit that represents a set of one or more containers running together on a Kubernetes cluster. Pods are the fundamental building blocks of Kubernetes applications and serve as the basic unit of execution.

- Cluster DNS: The name of a StatefulSet object must be a valid DNS label. Cluster DNS facilitates service discovery by assigning DNS names to Services and Pods.

- Headless Service: In Kubernetes, regular Services use clusterIP to provide a unified virtual IP for load balancing requests across all Pods, suited for stateless applications. In contrast, headless services, used by StatefulSets, do not use clusterIP (clusterIP: None) and provide direct access to each Pod’s IP address or DNS name via internal DNS records served by the cluster’s DNS service. This setup allows stateful applications to maintain stable and direct connections to specific Pods, crucial for tasks like data replication and configuration where individual Pod identity is essential for maintaining consistency and stability across the cluster.

- PersistentVolumes (PVs): Kubernetes objects that represent persistent storage resources available in the cluster (e.g., local storage, cloud storage). They exist independently of Pods and persist data even when Pods are deleted or rescheduled.

- PersistentVolume Claims (PVCs): PVCs act as requests for storage by Pods, specifying the access mode and storage requirements. StatefulSets manage PVCs to ensure Pods have persistent storage across restarts and scaling operations.
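
To make the stable network identity concrete, the sketch below prints the DNS names that the Pods of a StatefulSet would receive behind a headless Service. It assumes the documented pattern `<pod>.<service>.<namespace>.svc.<cluster-domain>`. The helper name `pod_dns_names`, the example values (`mysql`, `default`) and the default domain `cluster.local` are illustrative assumptions; a cluster may be configured with a different domain.

```shell
# Print the per-Pod DNS names for a StatefulSet governed by a headless Service.
# Usage: pod_dns_names <statefulset> <service> <namespace> <replicas> [cluster-domain]
pod_dns_names() {
  local sts="$1" svc="$2" ns="$3" replicas="$4" domain="${5:-cluster.local}"
  local i=0
  while [ "$i" -lt "$replicas" ]; do
    # The Pod name is <statefulset>-<ordinal>; the headless Service supplies the subdomain.
    echo "${sts}-${i}.${svc}.${ns}.svc.${domain}"
    i=$((i + 1))
  done
}

pod_dns_names mysql mysql default 3
```

Because these names do not change when a Pod is recreated, other components can address one specific replica instead of a load-balanced virtual IP.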

Stateful vs Stateless Applications

So, what exactly is a stateful application, and how does it differ from a stateless application? Before diving further into StatefulSets, it’s essential to grasp the fundamental differences between the two:

Stateful Applications

These are applications that maintain data and state between sessions. They require persistent storage and unique identities to preserve data integrity. Examples include databases (MySQL, PostgreSQL), message queues (Kafka), and caching solutions (Redis).

Managing stateful applications introduces complexities related to data persistence, reliable network communication, and lifecycle management, which StatefulSets address effectively in Kubernetes.

Stateless Applications

In contrast, stateless applications do not store session data locally. They can be easily scaled horizontally by adding or removing instances without concerns about data persistence or state management. A web server serving static content is a typical example of a stateless application.

In summary;

| Feature | Stateful Applications | Stateless Applications |
| --- | --- | --- |
| State Management | Maintains state and data between sessions | Does not maintain state locally |
| Data Persistence | Requires persistent storage for data integrity | Data is ephemeral and does not persist |
| Scalability | Scaling requires careful management to maintain data consistency | Easily scale horizontally by adding or removing instances |
| Network Identity | Typically have stable network identities (hostnames) | Network identity is not crucial and may change |
| Restart Behavior | Pods may retain state across restarts and rescheduling | Each instance is independent; no state retained |
| Use Cases | Databases (MySQL, PostgreSQL), distributed systems (Kafka, Cassandra) | Web servers, load balancers, API gateways |
| StatefulSet Management | Managed by StatefulSets for ordered deployment, scaling, and identity | Managed by Deployments for simple scaling and restarts |

StatefulSets vs. Deployments

How do StatefulSets compare to Kubernetes Deployments? While both are Kubernetes controllers used to manage Pods, they serve different purposes:

| Feature | StatefulSet | Deployment |
| --- | --- | --- |
| Target Applications | Used with applications that require stable identities and persistent storage for data, such as databases, message queues, and caching solutions | Ideal for stateless applications that are easily scalable and don’t rely on persistent data, such as web servers, API gateways, and load balancers |
| Pod Identity | StatefulSets ensure each Pod has a unique and persistent identifier (ordinal index 0, 1, 2, ...) that remains constant across restarts or rescheduling. This is crucial for stateful applications that depend on specific Pod order or communication | Deployments treat Pods as interchangeable units. That is, they do not maintain local state or session data that needs to be preserved between restarts or rescheduling. Pods also receive random, non-ordinal identifiers (Pod-abcdef1234-xyz), and a replacement Pod gets a new name, which makes them simpler to manage |
| Data Persistence | Requires persistent storage (integrates with PVs). This guarantees data survives Pod restarts or scaling operations | Data is ephemeral (not persisted) |
| Deployment and Scaling | When you scale a StatefulSet (adding or removing Pods), it follows a predictable order. This is essential for applications with dependencies between Pods or specific initialization sequences | Pods are independent of each other. Deployments do not impose any specific order on these operations; Pods can start or stop in any sequence based on cluster conditions and scheduling |
| Updates | Facilitates rolling updates, but due to the ordered nature of StatefulSets, updates might require more planning to ensure data consistency across Pods | Rolling updates: new Pods with the updated configuration are launched gradually, while old Pods are terminated |
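
The ordering guarantee in the table can be sketched with a few lines of shell. This assumes the default `OrderedReady` pod management policy, under which Pods are created from ordinal 0 upward and removed from the highest ordinal downward. `scale_order` is a hypothetical helper that only prints the sequence; it does not talk to a cluster.

```shell
# Print the order in which Pods would be created or deleted when a StatefulSet
# moves from <current> to <desired> replicas (default OrderedReady policy).
# Usage: scale_order <statefulset> <current> <desired>
scale_order() {
  local name="$1" current="$2" desired="$3"
  local i
  if [ "$desired" -gt "$current" ]; then
    # Scale up: lowest missing ordinal first.
    i="$current"
    while [ "$i" -lt "$desired" ]; do
      echo "create ${name}-${i}"
      i=$((i + 1))
    done
  else
    # Scale down: highest ordinal first.
    i=$((current - 1))
    while [ "$i" -ge "$desired" ]; do
      echo "delete ${name}-${i}"
      i=$((i - 1))
    done
  fi
}

scale_order mysql 0 3
scale_order mysql 3 1
```

A Deployment offers no equivalent sequence, which is why it suits workloads where no replica depends on another having started first.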

Creating and Managing StatefulSets

Creating a StatefulSet: Declarative or Imperative?

You can manage a Kubernetes StatefulSet using either a declarative or an imperative approach.

- Declarative method: you define the desired state of your StatefulSet in a YAML manifest file, which specifies configuration details such as the container image, replicas, storage needs, and labels. You then use the kubectl apply command to apply the configuration defined in the YAML file and create the StatefulSet.

- Imperative method: you issue kubectl commands directly against the cluster. Note that kubectl has no dedicated kubectl create statefulset subcommand, so in practice the imperative route is kubectl create -f with a manifest file, followed by commands such as kubectl scale or kubectl edit to change the live object.

While both methods work, the imperative method has several disadvantages:

- Error Prone: Manual configuration through commands increases the risk of typos or syntax errors.

- Difficult to Reproduce: It’s challenging to recreate the exact configuration later or deploy it consistently across environments without a manifest file.

- Limited Functionality: Imperative commands might not offer the same level of flexibility and detail as a well-defined YAML manifest.
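
A declarative workflow is also easy to script. The sketch below validates a manifest, shows what would change in the cluster, and only then applies it. The function name `review_and_apply` and the file name in the usage comment are illustrative assumptions; `kubectl apply --dry-run=client`, `kubectl diff`, and `kubectl apply` are standard kubectl subcommands.

```shell
# Validate, preview, and apply a manifest. Usage: review_and_apply <file.yaml>
review_and_apply() {
  local manifest="$1"
  local rc
  # Client-side dry run: checks the manifest without persisting anything.
  kubectl apply --dry-run=client -f "$manifest" || return 1
  # kubectl diff exits with 1 when it finds differences and >1 on errors.
  kubectl diff -f "$manifest"
  rc="$?"
  if [ "$rc" -gt 1 ]; then
    return 1
  fi
  kubectl apply -f "$manifest"
}

# Example (requires access to a cluster):
#   review_and_apply my-statefulset.yaml
```

Keeping the manifest in version control then gives you the reproducibility that ad hoc commands lack.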

Configure Persistent Storage Provisioner

Stateful applications often require persistent storage to store data that survives Pod restarts or rescheduling. For that reason, you need to configure a PersistentVolume StorageClass with a specific provisioner, which defines how storage volumes will be provisioned in your Kubernetes cluster. This StorageClass provisioner takes care of dynamically allocating storage based on your Persistent Volume Claim (PVC) requests within the StatefulSet. Some popular provisioners include:

- Local: a local storage path on a node (for testing purposes only)

- Ceph RBD: block storage backed by Ceph

- AWS Elastic Block Store (EBS): for AWS deployments

- Azure Managed Disks: for Azure deployments

- Google Persistent Disk: for deployments on Google Cloud Platform (GCP)

- OpenEBS: block storage using iSCSI

- NFS Client Provisioner: NFS volumes provided by an NFS server

In this guide, we are running a Kubernetes cluster on an on-premises server, and for that reason we will use local storage.

Check to see if there are any existing StorageClasses in your Kubernetes cluster:

kubectl get storageclass

If no suitable StorageClass exists, create one based on your storage solution or requirements. StorageClasses are cluster-scoped, so they are not tied to a particular namespace.

Example StorageClass for local volumes:

cat local-storageclass.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer

Where:

- metadata.name: Specifies the name of the StorageClass (`local-storage` in this example).

- provisioner: Set to `kubernetes.io/no-provisioner` since local volumes are not dynamically provisioned; you create the PVs yourself.

- volumeBindingMode: Determines when a PersistentVolume (PV) is bound. `WaitForFirstConsumer` delays binding until a Pod that uses the PVC is created, so that the scheduler can pick a PV on a node where the Pod can actually run.

Apply the YAML definition to create the StorageClass in your cluster:

kubectl apply -f <filename>.yaml

For example:

kubectl apply -f local-storageclass.yaml

Confirm:

kubectl get storageclass

NAME            PROVISIONER                    RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
local-storage   kubernetes.io/no-provisioner   Delete          WaitForFirstConsumer   false                  26s

When a StorageClass is created without an explicit reclaimPolicy, Kubernetes defaults it to “Delete”. For dynamically provisioned volumes, this means that deleting a Persistent Volume Claim (PVC) also deletes the PV that was provisioned for it. However, since we are using kubernetes.io/no-provisioner, which implies no dynamic storage provisioning, no PVs are created automatically for this StorageClass, so its reclaimPolicy setting has no practical effect here. Instead, when you create a PV manually, you set the reclaim policy on the PV itself through persistentVolumeReclaimPolicy.
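
To see which reclaim policy each PV actually carries, and to change it on an existing volume, something like the following can be used. The helper names `list_reclaim_policies` and `set_reclaim_policy` are illustrative; the `kubectl patch` form follows the approach described in the Kubernetes documentation for changing the reclaim policy of a PersistentVolume.

```shell
# List every PV together with its reclaim policy.
list_reclaim_policies() {
  kubectl get pv -o custom-columns=NAME:.metadata.name,RECLAIM:.spec.persistentVolumeReclaimPolicy
}

# Set the reclaim policy of one PV. Usage: set_reclaim_policy <pv-name> <Retain|Delete>
set_reclaim_policy() {
  local pv="$1" policy="$2"
  case "$policy" in
    Retain|Delete) ;;
    *) echo "unsupported policy: $policy" >&2; return 1 ;;
  esac
  kubectl patch pv "$pv" -p "{\"spec\":{\"persistentVolumeReclaimPolicy\":\"${policy}\"}}"
}

# Example (requires access to a cluster):
#   list_reclaim_policies
#   set_reclaim_policy <pv-name> Retain
```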

Prepare Persistent Volume (PV) for each Node

Next, you need to manually create a PersistentVolume (PV) that matches the StorageClass used above for the local volume.

If you have a single worker node, it is enough to create a PV for that node only. However, if you have multiple worker nodes and want to schedule Pods on each of them, you need to create a PV for each node.

By creating a PV for each node, you tie each PV to a specific worker node in the cluster. A Pod that uses such a PV can only be scheduled on the node where the PV lives. This keeps the Pod next to its data across restarts, but it also means that if that node fails or is taken down for maintenance, the Pod cannot be rescheduled to another node and remains unavailable until the node returns.

Note that you must define nodeAffinity when using local volumes, for the following reasons:

- Local volumes are tied to specific directories on worker nodes. Without nodeAffinity, a pod requesting a local volume could potentially be scheduled to any node in the cluster, even if the directory containing the data doesn’t exist there. This can lead to pod scheduling failures or unexpected behavior.

- Enforcing nodeAffinity for local volumes ensures explicit scheduling of pods to the intended nodes. This promotes clarity and consistency in your deployments, especially when dealing with multiple worker nodes.

We have three worker nodes:

kubectl get nodes

NAME        STATUS   ROLES           AGE   VERSION
master-01   Ready    control-plane   12d   v1.30.2
master-02   Ready    control-plane   12d   v1.30.2
master-03   Ready    control-plane   12d   v1.30.2
worker-01   Ready    <none>          33h   v1.30.2
worker-02   Ready    <none>          33h   v1.30.2
worker-03   Ready    <none>          33h   v1.30.2

Here is our sample PV manifest file for each of our three worker nodes.

cat mysql-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-pv-worker-01
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /mnt/k8s/mysql/data/
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - worker-01
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-pv-worker-02
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /mnt/k8s/mysql/data/
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - worker-02
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-pv-worker-03
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /mnt/k8s/mysql/data/
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - worker-03

Where:

- apiVersion: Specifies the Kubernetes API version (`v1`).
- kind: Specifies the resource type (`PersistentVolume`).
- metadata: Provides metadata for the PV, including its name (`mysql-pv-NODE-NAME`).
- spec: Specifies the specifications for the PV:
  - capacity: Defines the storage capacity of the PV (`10Gi`).
  - volumeMode: Indicates the volume mode (`Filesystem`). Can also be set to `Block` if using raw block devices.
  - accessModes: Specifies the access mode (`ReadWriteOnce`), which allows the volume to be mounted read-write by a single node at a time.
  - persistentVolumeReclaimPolicy: Sets the reclaim policy for the PV to `Retain`, meaning that when the associated PersistentVolumeClaim (PVC) is deleted, the PV is not automatically deleted.
  - storageClassName: Associates the PV with the `local-storage` StorageClass created before.
  - local: Specifies that this PV uses local storage on the Kubernetes nodes.
    - path: Defines the local path on the node (`/mnt/k8s/mysql/data`) that backs the PV. It must already exist on the node when you deploy the application that uses it.
  - nodeAffinity: Defines node affinity rules, ensuring the PV is bound only to nodes that match specific criteria:
    - required: Specifies that the PV should be available on nodes that match the following conditions.
      - nodeSelectorTerms: Specifies a list of node selectors.
        - matchExpressions: Defines how nodes are selected based on label keys and values.
          - key: Specifies the label key (`kubernetes.io/hostname`).
          - operator: Defines the operator for matching (`In`, meaning the label value must match one of the specified values).
          - values: Lists the specific worker node hostname (`worker-01`, `worker-02`, or `worker-03`) where the PV should be available.

Now, create the PVs:

kubectl apply -f mysql-pv.yaml

Confirm:

kubectl get pv

NAME                 CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS    VOLUMEATTRIBUTESCLASS   REASON   AGE
mysql-pv-worker-01   10Gi       RWO            Retain           Available           local-storage   <unset>                          5s
mysql-pv-worker-02   10Gi       RWO            Retain           Available           local-storage   <unset>                          5s
mysql-pv-worker-03   10Gi       RWO            Retain           Available           local-storage   <unset>                          5s
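
Because the three PV definitions differ only in the node name, the manifest can also be generated rather than written by hand. The following sketch assumes the same node names, path, size, and StorageClass as in this guide. The helper `generate_local_pvs` and the output file name `mysql-pv.generated.yaml` are hypothetical, chosen so that the hand-written file is not overwritten.

```shell
# Emit one local PersistentVolume document per worker node name given as argument.
generate_local_pvs() {
  local first=1
  local node
  for node in "$@"; do
    # Separate YAML documents with '---' (not needed before the first one).
    if [ "$first" -eq 0 ]; then echo "---"; fi
    first=0
    cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-pv-${node}
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /mnt/k8s/mysql/data/
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - ${node}
EOF
  done
}

generate_local_pvs worker-01 worker-02 worker-03 > mysql-pv.generated.yaml
# Review the file, then: kubectl apply -f mysql-pv.generated.yaml
```

Generating the documents from one template reduces the risk of a copy-paste mismatch between a PV name and the hostname in its nodeAffinity.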

Create a PersistentVolumeClaim (PVC)

Normally, the next step would be to create a PersistentVolumeClaim that the application Pods in your StatefulSet use to request storage.

However, to automate PVC creation and ensure consistent storage configuration across all Pods managed by the StatefulSet, we will use volumeClaimTemplates. volumeClaimTemplates allow you to define PVC specifications directly within the StatefulSet configuration. Kubernetes uses these templates to automatically create one PVC for each Pod when that Pod is first created, both at initial deployment and when the StatefulSet is scaled up.
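
PVCs created from a template are named `<template-name>-<statefulset-name>-<ordinal>`, which is how each Pod finds its own claim again after a restart. The small sketch below prints the names to expect; the helper `pvc_names` is hypothetical, and the values `mysql-data` (template) and `mysql` (StatefulSet) are the ones that appear in the command output later in this guide.

```shell
# Print the PVC names a StatefulSet derives from one volumeClaimTemplates entry.
# Usage: pvc_names <template-name> <statefulset-name> <replicas>
pvc_names() {
  local template="$1" sts="$2" replicas="$3"
  local i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "${template}-${sts}-${i}"
    i=$((i + 1))
  done
}

pvc_names mysql-data mysql 3
```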

Creating a StatefulSet in Kubernetes Cluster

Now that we have the StorageClass and the PVs, and the PVCs will be created from volumeClaimTemplates, you can create a StatefulSet that provides persistent storage for each Pod.

We will combine two resources in one manifest file: the Service that exposes the MySQL app, and the MySQL StatefulSet itself.

This is our sample manifest file;

cat mysql-app.yaml

apiVersion: v1
kind: Service
metadata:
  name: mysql
  labels:
    app: mysql
spec:
  ports:
    - port: 3306
      name: db
  # Headless Service: no cluster IP, each Pod gets its own DNS record.
  clusterIP: None
  selector:
    app: mysql
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
spec:
  serviceName: mysql
  replicas: 3
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql
          image: mysql:8.0
          env:
            # Demo value only; use a Secret for real deployments.
            - name: MYSQL_ROOT_PASSWORD
              value: password
          ports:
            - containerPort: 3306
              name: db
          volumeMounts:
            - name: mysql-data
              mountPath: /var/lib/mysql
  # One PVC per Pod is created from this template (mysql-data-mysql-0, ...).
  volumeClaimTemplates:
    - metadata:
        name: mysql-data
      spec:
        accessModes:
          - ReadWriteOnce
        storageClassName: local-storage
        resources:
          requests:
            storage: 10Gi

This sample YAML configuration consists of a headless Kubernetes Service (mysql) and a StatefulSet (mysql) that deploys MySQL Pods with persistent storage.

In summary:

- This StatefulSet, named `mysql`, orchestrates a MySQL deployment with three replicas, each labeled `app: mysql`. Pods use the `mysql:8.0` Docker image and expose MySQL on port 3306. Persistent storage of 10Gi is requested per Pod through the `mysql-data` volumeClaimTemplates entry with `ReadWriteOnce` access mode, so each Pod keeps its own data across restarts and rescheduling.

Ensure the local storage path already exists on ALL worker nodes:

sudo mkdir -p /mnt/k8s/mysql/data

Then apply the StatefulSet configuration:

kubectl apply -f mysql-app.yaml

Listing StatefulSets

You can list StatefulSets:

kubectl get statefulset

This shows the StatefulSets in the default namespace. To check a specific namespace, add the option --namespace <namespace-name> or -n <namespace-name>.

Sample output:

NAME    READY   AGE
mysql   3/3     30s
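
Instead of polling the list until READY shows 3/3, a script can block until the rollout finishes. The sketch below wraps `kubectl rollout status`, which supports StatefulSets; the function name `wait_for_statefulset` and the default timeout of 300 seconds are illustrative choices.

```shell
# Block until every replica of a StatefulSet is ready, or fail after the timeout.
# Usage: wait_for_statefulset <name> [timeout]
wait_for_statefulset() {
  local name="$1" timeout="${2:-300s}"
  kubectl rollout status "statefulset/${name}" --timeout="$timeout"
}

# Example (requires access to a cluster):
#   wait_for_statefulset mysql 120s
```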

To print a detailed description:

kubectl describe statefulset mysql

Name:               mysql
Namespace:          default
CreationTimestamp:  Sat, 29 Jun 2024 07:33:20 +0000
Selector:           app=mysql
Labels:
Annotations:
Replicas:           3 desired | 3 total
Update Strategy:    RollingUpdate
  Partition:        0
Pods Status:        3 Running / 0 Waiting / 0 Succeeded / 0 Failed
Pod Template:
  Labels:  app=mysql
  Containers:
   mysql:
    Image:      mysql:8.0
    Port:       3306/TCP
    Host Port:  0/TCP
    Environment:
      MYSQL_ROOT_PASSWORD:  password
    Mounts:
      /var/lib/mysql from mysql-data (rw)
  Volumes:
  Node-Selectors:
  Tolerations:
Volume Claims:
  Name:          mysql-data
  StorageClass:  local-storage
  Labels:
  Annotations:
  Capacity:      10Gi
  Access Modes:  [ReadWriteOnce]
Events:
  Type    Reason            Age    From                    Message
  ----    ------            ----   ----                    -------
  Normal  SuccessfulCreate  3m27s  statefulset-controller  create Claim mysql-data-mysql-0 Pod mysql-0 in StatefulSet mysql success
  Normal  SuccessfulCreate  3m27s  statefulset-controller  create Pod mysql-0 in StatefulSet mysql successful
  Normal  SuccessfulCreate  3m25s  statefulset-controller  create Claim mysql-data-mysql-1 Pod mysql-1 in StatefulSet mysql success
  Normal  SuccessfulCreate  3m25s  statefulset-controller  create Pod mysql-1 in StatefulSet mysql successful
  Normal  SuccessfulCreate  3m22s  statefulset-controller  create Claim mysql-data-mysql-2 Pod mysql-2 in StatefulSet mysql success
  Normal  SuccessfulCreate  3m22s  statefulset-controller  create Pod mysql-2 in StatefulSet mysql successful

You can also list the PVCs and PVs:

kubectl get pvc

NAME                 STATUS   VOLUME               CAPACITY   ACCESS MODES   STORAGECLASS    VOLUMEATTRIBUTESCLASS   AGE
mysql-data-mysql-0   Bound    mysql-pv-worker-03   10Gi       RWO            local-storage   <unset>                 5m1s
mysql-data-mysql-1   Bound    mysql-pv-worker-02   10Gi       RWO            local-storage   <unset>                 4m59s
mysql-data-mysql-2   Bound    mysql-pv-worker-01   10Gi       RWO            local-storage   <unset>                 4m56s

kubectl get pv

NAME                 CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                        STORAGECLASS    VOLUMEATTRIBUTESCLASS   REASON   AGE
mysql-pv-worker-01   10Gi       RWO            Retain           Bound    default/mysql-data-mysql-2   local-storage   <unset>                          7m43s
mysql-pv-worker-02   10Gi       RWO            Retain           Bound    default/mysql-data-mysql-1   local-storage   <unset>                          7m43s
mysql-pv-worker-03   10Gi       RWO            Retain           Bound    default/mysql-data-mysql-0   local-storage   <unset>                          7m43s

And the Pods associated with the StatefulSet:

kubectl get pod

NAME      READY   STATUS    RESTARTS   AGE
mysql-0   1/1     Running   0          11m
mysql-1   1/1     Running   0          11m
mysql-2   1/1     Running   0          11m
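
The stable identity of these Pods can be inspected directly. A StatefulSet sets each Pod's hostname to the Pod name and its subdomain to the governing Service, and the sketch below prints both next to the node each Pod landed on. The helper name `show_pod_identity` is illustrative; the label selector `app=mysql` matches the labels used in this guide.

```shell
# Show name, hostname, subdomain, and node for every Pod matching a label selector.
# Usage: show_pod_identity <label-selector>
show_pod_identity() {
  local selector="$1"
  kubectl get pods -l "$selector" \
    -o custom-columns=NAME:.metadata.name,HOSTNAME:.spec.hostname,SUBDOMAIN:.spec.subdomain,NODE:.spec.nodeName
}

# Example (requires access to a cluster):
#   show_pod_identity app=mysql
```

Running it again after deleting one Pod should show the replacement under the same name and hostname, and, with the local PVs used here, on the same node.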

Scaling StatefulSets

It is also possible to scale StatefulSets. As you can see in the output, we have one StatefulSet with three replicas, all of them in the READY state:

kubectl get statefulset

NAME    READY   AGE
mysql   3/3     15m

For example, to scale up to six replicas:

kubectl scale statefulset mysql --replicas=6

However, note that with the setup used here, where there is one manually created PV per worker node, you must also create additional PVs to back the new replicas. Otherwise, the new Pods cannot start because their PVCs have no PV to bind to. Where dynamic provisioning is supported, the scale command alone is enough.
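
With manually created PVs, it can help to check that enough unbound volumes exist before scaling up. The sketch below counts PVs of a given StorageClass in the `Available` phase and refuses to scale when there are too few. The helper names `available_pvs` and `safe_scale_up` are hypothetical. Note also that extra local PVs on the same node would need their own directories, since two PVs pointing at the same path would share data.

```shell
# Count PVs of a given StorageClass that are still unbound (phase "Available").
# Usage: available_pvs <storageclass>
available_pvs() {
  local sc="$1"
  kubectl get pv -o jsonpath='{range .items[*]}{.spec.storageClassName}{" "}{.status.phase}{"\n"}{end}' |
    awk -v sc="$sc" '$1 == sc && $2 == "Available" { n++ } END { print n + 0 }'
}

# Scale up only when there is a free PV for every new replica.
# Usage: safe_scale_up <statefulset> <current> <desired> <storageclass>
safe_scale_up() {
  local sts="$1" current="$2" desired="$3" sc="$4"
  local needed=$((desired - current))
  local free
  free="$(available_pvs "$sc")"
  if [ "$free" -lt "$needed" ]; then
    echo "need ${needed} Available PVs in ${sc}, found ${free}" >&2
    return 1
  fi
  kubectl scale statefulset "$sts" --replicas="$desired"
}

# Example (requires access to a cluster):
#   safe_scale_up mysql 3 6 local-storage
```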

You can also scale down:

kubectl scale statefulset mysql --replicas=2

Deleting StatefulSets

When deleting a StatefulSet, ensure that PVCs and the data they hold are managed correctly to avoid data loss.

You can delete using the manifest file:

kubectl delete -f mysql-app.yaml

This assumes that the StatefulSet was defined in a manifest file called mysql-app.yaml, and it removes every resource defined in that file.

Alternatively, use the name of the StatefulSet. For example, to delete just the StatefulSet object and leave its Pods running:

kubectl delete statefulset mysql --cascade=orphan

If you omit --cascade=orphan, the Pods are deleted together with the StatefulSet. In both cases, the PVCs created from volumeClaimTemplates and the PVs bound to them are kept by default, so the data remains until you delete them explicitly.
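
A full teardown of the example can be scripted in stages so that data is only removed deliberately. This is a sketch rather than a definitive procedure: the function name `teardown_mysql` is hypothetical, the resource and PVC names are the ones shown in the outputs of this guide, and the polling loop has no timeout, so it waits for as long as Pods with the label `app=mysql` exist.

```shell
# Remove the example workload step by step.
teardown_mysql() {
  # 1. Scale to zero so that Pods are terminated in reverse ordinal order.
  kubectl scale statefulset mysql --replicas=0
  # Wait until no Pods with the label remain.
  while [ -n "$(kubectl get pods -l app=mysql -o name)" ]; do
    sleep 2
  done
  # 2. Remove the StatefulSet and its headless Service.
  kubectl delete statefulset mysql
  kubectl delete service mysql
  # 3. PVCs created from volumeClaimTemplates are left behind; delete them explicitly.
  kubectl delete pvc mysql-data-mysql-0 mysql-data-mysql-1 mysql-data-mysql-2
  # 4. With the Retain policy the PVs are kept; list them for manual cleanup.
  kubectl get pv
}

# Example (requires access to a cluster, and deletes the claims on the data):
#   teardown_mysql
```

Because the PVs use the Retain policy, the directories on the worker nodes are untouched after step 3 and have to be cleaned up by hand before the PVs can be reused.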

And that brings us to the end of our tutorial on understanding StatefulSets in Kubernetes.

Conclusion

StatefulSets provide stable identities, persistent storage, and controlled scaling for stateful applications, making them essential for running databases, distributed systems, and other stateful workloads in Kubernetes environments.
