In this tutorial, we are going to learn how to set up a GlusterFS distributed replicated volume on CentOS 8. Gluster is a free and open source scalable network filesystem that lets you build large, distributed storage solutions for media streaming, data analysis, and other data- and bandwidth-intensive tasks.

Setting up GlusterFS Distributed Replicated Volume

Prerequisites

Before you proceed, ensure that the following requirements are met;

- Have 6 nodes for your GlusterFS cluster. The number of bricks in this type of volume must be a multiple of the replica count (here, 6 bricks with a replica count of 3).

- Attach an extra disk (separate from the / partition) to each node to provide the Gluster storage unit (brick).

- Partition the disks using LVM and format the disk/brick with an XFS filesystem.

- Ensure time is synchronized among your cluster nodes.

- Open the required Gluster Ports/Services on Firewall on all your cluster nodes.

In our previous tutorial, we covered how to install and setup GlusterFS storage cluster on CentOS 8 and all the above requirements. Follow the link below to check it.

Install and Setup GlusterFS Storage Cluster on CentOS 8

Types of Gluster Volumes

GlusterFS supports different types of volumes that offer various features. These include;

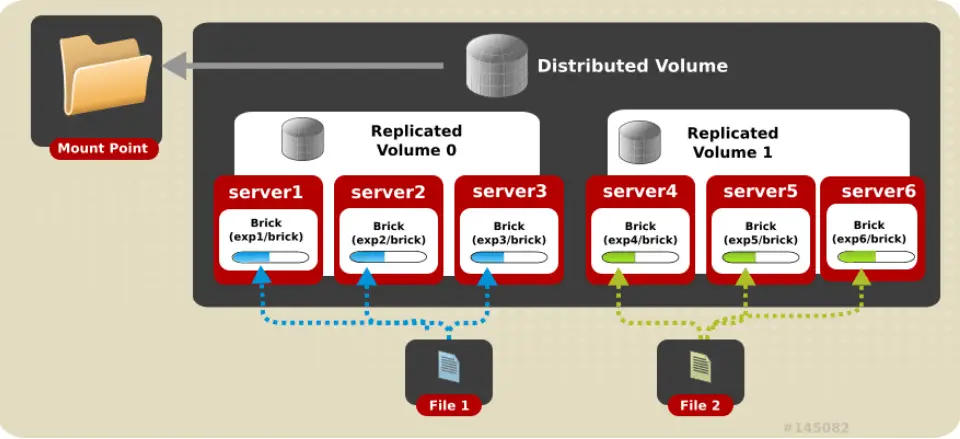

- Distributed: Files are distributed across the bricks in the volume.

- Replicated: Files are replicated across the bricks in the volume. It ensures high storage availability and reliability.

- Distributed Replicated: Files are distributed across the replicated bricks in the volume. Ensures high reliability, scalability and improved read performance.

- Arbitrated Replicated: Files are replicated across two bricks in a replica set and only the metadata is replicated to the third brick. Ensures data consistency.

- Dispersed: Files are dispersed across the bricks in the volume.

- Distributed Dispersed: Data is distributed across the dispersed sub-volumes.

In our previous tutorial, we covered how to set up a replicated GlusterFS storage volume.

How to Setup Replicated Gluster Volume on CentOS 8

Setup GlusterFS Distributed Replicated Volume

In a glusterfs distributed replicated setup, the number of bricks must be a multiple of the replica count. Also, the order in which bricks are specified is crucial in the sense that, adjacent bricks become replicas of each other.

Cluster Nodes

Below are the details of our distributed replicated Gluster volume nodes

| # | Hostname | IP Address |
|---|----------|------------|
| 1 | gfs01.kifarunix-demo.com | 192.168.56.111 |
| 2 | gfs02.kifarunix-demo.com | 192.168.56.112 |
| 3 | gfs03.kifarunix-demo.com | 192.168.57.114 |
| 4 | gfs04.kifarunix-demo.com | 192.168.57.113 |
| 5 | gfs05.kifarunix-demo.com | 192.168.57.117 |
| 6 | gfs06.kifarunix-demo.com | 192.168.57.118 |

Install GlusterFS Server on CentOS 8

Follow the link below to install GlusterFS server package on CentOS 8 nodes;

How to Install GlusterFS Server Package on CentOS 8

Checking the status of the GlusterFS server;

systemctl status glusterd

● glusterd.service - GlusterFS, a clustered file-system server

Loaded: loaded (/usr/lib/systemd/system/glusterd.service; enabled; vendor preset: enabled)

Active: active (running) since Mon 2020-06-15 21:56:23 EAT; 11s ago

Docs: man:glusterd(8)

Main PID: 2368 (glusterd)

Tasks: 9 (limit: 5027)

Memory: 3.9M

CGroup: /system.slice/glusterd.service

└─2368 /usr/sbin/glusterd -p /var/run/glusterd.pid --log-level INFO

Jun 15 21:56:22 gfs01.kifarunix-demo.com systemd[1]: Starting GlusterFS, a clustered file-system server...

Jun 15 21:56:23 gfs01.kifarunix-demo.com systemd[1]: Started GlusterFS, a clustered file-system server.

You can do the same on other nodes.

Open/Allow GlusterFS Service/Ports on Firewall

Open the GlusterFS ports or services on the firewall so that the nodes can communicate.

- Ports 24007-24008/TCP are used for communication between nodes. Older GlusterFS releases used 24009-24108/TCP for client communication; current releases assign brick ports from 49152/TCP upward, as the volume status output later in this tutorial shows.

You can simply use the firewalld service, glusterfs, instead of individual ports;

firewall-cmd --add-service=glusterfs --permanent; firewall-cmd --reload

Verifying the GlusterFS Storage cluster disks

We are using a 4 GB LVM logical volume on each node.

lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

root cl -wi-ao---- <6.20g

swap cl -wi-ao---- 820.00m

gfs drgfs -wi-ao---- <4.00g

The logical volumes are formatted with XFS and mounted under /data/glusterfs/.

df -hT

Filesystem Type Size Used Avail Use% Mounted on

...

/dev/mapper/cl-root xfs 6.2G 1.7G 4.6G 27% /

/dev/sda1 ext4 976M 260M 650M 29% /boot

tmpfs tmpfs 82M 0 82M 0% /run/user/0

/dev/mapper/drgfs-gfs xfs 4.0G 61M 4.0G 2% /data/glusterfs/

To mount the volume automatically at boot, append the following entry to the /etc/fstab configuration file;

echo "/dev/mapper/drgfs-gfs /data/glusterfs xfs defaults 1 2" >> /etc/fstab

Create Gluster TSP

Create a Gluster trusted storage pool (TSP) using the gluster peer probe command. It is enough to probe all the other cluster nodes from one of the nodes;

for i in gfs{02..06}; do gluster peer probe $i; done

You should get a success message for each node, in the order in which they are probed;

peer probe: success.

peer probe: success.

peer probe: success.

peer probe: success.

peer probe: success.

Check the status of Gluster peers

From any node, you can run gluster peer status command to display the status of peers;

gluster peer status

Number of Peers: 5

Hostname: gfs02

Uuid: 148dcf14-76c9-412c-9911-aac17cc5801f

State: Peer in Cluster (Connected)

Hostname: gfs03

Uuid: 22d2a6ea-e3a4-49fc-8df6-bd70a9545b30

State: Peer in Cluster (Connected)

Hostname: gfs04

Uuid: 89ddf393-8144-4529-81f6-98128a5f1b71

State: Peer in Cluster (Connected)

Hostname: gfs05

Uuid: 53b7b05a-28ac-4dfc-8598-651dee9d2431

State: Peer in Cluster (Connected)

Hostname: gfs06

Uuid: 29d2d128-5e59-4123-b265-d27ef08f024b

State: Peer in Cluster (Connected)
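When the setup is scripted, it can be useful to confirm that every peer is connected before a volume is created. The sketch below is one way to do that, assuming the text layout of the gluster peer status output shown above; the function name count_connected_peers is made up for illustration and is not part of the gluster CLI.

```shell
# Hypothetical helper (not part of the gluster CLI): count the peers that
# report "Peer in Cluster (Connected)". Expects the text printed by
# `gluster peer status` on standard input.
count_connected_peers() {
    grep -cF 'State: Peer in Cluster (Connected)'
}

# Intended use on a cluster node (assumes the five peers of this demo):
#   [ "$(gluster peer status | count_connected_peers)" -eq 5 ] && echo "all peers connected"
```

The match is on the exact state string, so a peer in any other state (for example, disconnected) is simply not counted.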

You can verify the peering status from other nodes.

Configure Distributed Replicated Storage Volume

The gluster volume create command is used to create a Gluster distributed replicated volume. The complete syntax of the command is;

gluster volume create NEW-VOLNAME [replica COUNT] [transport [tcp | rdma | tcp,rdma]] NEW-BRICK...

NOTE:

- The number of bricks must be a multiple of the replica count. We have 6 bricks and use a replica count of 3.

- The order in which bricks are specified determines how they are replicated with each other. For example, if you specify a replica count of two, the first two adjacent bricks become replicas (mirrors) of each other, and the next two bricks in the sequence replicate each other.

- Two-way distributed replicated volumes are NOT RECOMMENDED because they are prone to split-brain (inconsistency in either data or metadata such as permissions, uid/gid and extended attributes). Hence the use of a three-way distributed replicated volume.

- If you have multiple bricks on your cluster nodes, list the first brick on every server, then the second brick on every server in the same order, and so on, so that replica-set members are not placed on the same node.
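The ordering rules in the notes above can be checked before the volume is created. The following is a minimal sketch in POSIX shell that only prints how a brick list would be grouped into replica sets; it never calls gluster, and the function name replica_sets is hypothetical.

```shell
# Hypothetical helper: print which bricks would form each replica set.
# Usage: replica_sets REPLICA_COUNT BRICK...
replica_sets() {
    replica=$1
    shift
    # The brick count must be a multiple of the replica count.
    if [ $(( $# % replica )) -ne 0 ]; then
        echo "error: $# bricks is not a multiple of replica count $replica" >&2
        return 1
    fi
    set_no=1
    while [ $# -gt 0 ]; do
        line="set $set_no:"
        i=0
        # Adjacent bricks on the command line end up in the same replica set.
        while [ "$i" -lt "$replica" ]; do
            line="$line $1"
            shift
            i=$((i + 1))
        done
        echo "$line"
        set_no=$((set_no + 1))
    done
}

replica_sets 3 gfs01:/b gfs02:/b gfs03:/b gfs04:/b gfs05:/b gfs06:/b
```

With the six placeholder bricks above, the first three land in set 1 and the last three in set 2. If two bricks of one set show the same hostname, reorder the list before creating the volume.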

Creating a Three-way Distributed Replicated Volume

To create a three-way distributed replicated volume, we use the six nodes in our demo with a replica count of 3. This means that the first three adjacent bricks form one replica set and the next three form another.

In our setup, we named the brick data directories brick01 through brick06. A brick directory is created if it doesn’t exist. Replace the names accordingly in the following command.

gluster volume create dist-repl-gfs replica 3 transport tcp \
gfs01:/data/glusterfs/brick01 gfs02:/data/glusterfs/brick02 \
gfs03:/data/glusterfs/brick03 gfs04:/data/glusterfs/brick04 \
gfs05:/data/glusterfs/brick05 gfs06:/data/glusterfs/brick06

If all is well, you should see a message that the volume was created successfully.

volume create: dist-repl-gfs: success: please start the volume to access data

Starting Distributed Replicated GlusterFS volume

You can start your volume with the gluster volume start command. Replace dist-repl-gfs with the name of your volume.

gluster volume start dist-repl-gfs

volume start: dist-repl-gfs: success

And there you go. Your volume is up.

Verify GlusterFS Volumes

You can verify GlusterFS volumes with gluster volume info command.

gluster volume info all

Volume Name: dist-repl-gfs

Type: Distributed-Replicate

Volume ID: 45f4416d-9842-4352-8802-b280a243036b

Status: Started

Snapshot Count: 0

Number of Bricks: 2 x 3 = 6

Transport-type: tcp

Bricks:

Brick1: gfs01:/data/glusterfs/brick01

Brick2: gfs02:/data/glusterfs/brick02

Brick3: gfs03:/data/glusterfs/brick03

Brick4: gfs04:/data/glusterfs/brick04

Brick5: gfs05:/data/glusterfs/brick05

Brick6: gfs06:/data/glusterfs/brick06

Options Reconfigured:

transport.address-family: inet

storage.fips-mode-rchecksum: on

nfs.disable: on

performance.client-io-threads: off
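The line Number of Bricks: 2 x 3 = 6 reads as two distribute subvolumes of three replicas each. Since every file is stored three times, the usable capacity is roughly the raw capacity divided by the replica count. Below is a small arithmetic sketch using the 4 GB bricks from this demo and ignoring filesystem overhead; the function name usable_capacity is hypothetical.

```shell
# Hypothetical helper: approximate usable capacity of a distributed
# replicated volume, in the same unit as the brick size.
# Usage: usable_capacity BRICK_COUNT REPLICA_COUNT BRICK_SIZE
usable_capacity() {
    echo $(( $1 / $2 * $3 ))
}

usable_capacity 6 3 4   # six 4 GB bricks, replica 3: prints 8
```

So the six 4 GB bricks give about 8 GB of usable space, not 24 GB.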

To get the status of the volume;

gluster volume status dist-repl-gfs

Status of volume: dist-repl-gfs

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick gfs01:/data/glusterfs/brick01 49152 0 Y 4510

Brick gfs02:/data/glusterfs/brick02 49152 0 Y 4194

Brick gfs03:/data/glusterfs/brick03 49152 0 Y 4078

Brick gfs04:/data/glusterfs/brick04 49152 0 Y 4080

Brick gfs05:/data/glusterfs/brick05 49152 0 Y 5330

Brick gfs06:/data/glusterfs/brick06 49152 0 Y 4077

Self-heal Daemon on localhost N/A N/A Y 4532

Self-heal Daemon on gfs03 N/A N/A Y 4099

Self-heal Daemon on gfs02 N/A N/A Y 4215

Self-heal Daemon on gfs04 N/A N/A Y 4101

Self-heal Daemon on gfs05 N/A N/A Y 5359

Self-heal Daemon on gfs06 N/A N/A Y 4098

Task Status of Volume dist-repl-gfs

------------------------------------------------------------------------------

There are no active volume tasks
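For monitoring, the same output can be filtered for bricks that are not online. The sketch below assumes each brick is printed on a single line with the column layout shown above (very long brick paths may wrap in real output, which this does not handle); offline_bricks is a hypothetical name.

```shell
# Hypothetical helper: list bricks whose Online column is not "Y".
# Expects `gluster volume status VOLNAME` text on standard input and assumes
# one line per brick: Brick <host:path> <tcp> <rdma> <online> <pid>.
offline_bricks() {
    awk '$1 == "Brick" && NF >= 6 && $5 != "Y" { print $2 }'
}

# Intended use on a cluster node:
#   gluster volume status dist-repl-gfs | offline_bricks
```

An empty result means every brick line reported Y in the Online column.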

To list additional information about the bricks;

gluster volume status dist-repl-gfs detail

Status of volume: dist-repl-gfs

------------------------------------------------------------------------------

Brick : Brick gfs01:/data/glusterfs/brick01

TCP Port : 49152

RDMA Port : 0

Online : Y

Pid : 4510

File System : xfs

Device : /dev/mapper/drgfs-gfs

Mount Options : rw,seclabel,relatime,attr2,inode64,noquota

Inode Size : 512

Disk Space Free : 3.9GB

Total Disk Space : 4.0GB

Inode Count : 2095104

Free Inodes : 2095086

------------------------------------------------------------------------------

Brick : Brick gfs02:/data/glusterfs/brick02

TCP Port : 49152

...

Mounting GlusterFS Storage Volumes on Clients

Once the distributed replicated volume is set up, you can mount it on clients and start writing data to it.

For the purposes of demoing how to mount glusterfs volumes, we will be using a CentOS 8 client.

There are different methods in which Gluster Storage volumes can be accessed. These include the use of;

- Native GlusterFS Client

- Network File System (NFS) v3

- Server Message Block (SMB)

We will be using Native GlusterFS client method in this case.

Install GlusterFS native client on CentOS 8

dnf install glusterfs glusterfs-fuse

Once the installation is done, create a mount point for the Gluster storage volume. We use /mnt/glusterfs as the mount point.

mkdir /mnt/glusterfs

Before you proceed to mount the Gluster volume, ensure that all nodes are reachable from the client.

The volume is mounted with the mount command, specifying glusterfs as the filesystem type along with a node and the name of the volume.

mount -t glusterfs gfs01:/dist-repl-gfs /mnt/glusterfs

For automatic mounting during boot, add the line below to /etc/fstab, replacing the Gluster storage volume and the mount point with your own.

gfs01:/dist-repl-gfs /mnt/glusterfs/ glusterfs defaults,_netdev 0 0

NOTE that you can configure backup volfile servers on clients by using the backup-volfile-servers mount option.

backup-volfile-servers=<volfile_server2>:<volfile_server3>:...:<volfile_serverN>

- If this option is specified when mounting the FUSE client and the first volfile server fails, the servers listed in the backup-volfile-servers option are used as volfile servers until the mount succeeds.

mount -t glusterfs -o backup-volfile-servers=server2:server3:...:serverN server1:/VOLUME-NAME MOUNT-POINT

This might look like;

mount -t glusterfs -o backup-volfile-servers=gfs02:gfs03 gfs01:/dist-repl-gfs /mnt/glusterfs

Confirm the mounting;

df -hT -P /mnt/glusterfs/

Testing Mounted Volumes

To test the distribution and replication of data on our distributed replicated Gluster storage volume that is mounted on the client, we will create some dummy files;

cd /mnt/glusterfs/

Create files;

for i in {1..10}; do echo hello > "File${i}.txt"; done

Verify the files that get stored on each node’s brick.

Same data (replication) on the first three bricks;

[root@gfs01 ~]# ls /data/glusterfs/brick01/

File1.txt File3.txt File5.txt File6.txt File8.txt

[root@gfs02 ~]# ls /data/glusterfs/brick02/

File1.txt File3.txt File5.txt File6.txt File8.txt

[root@gfs03 ~]# ls /data/glusterfs/brick03/

File1.txt File3.txt File5.txt File6.txt File8.txt

Same data (replication) on the next three bricks;

[root@gfs04 ~]# ls /data/glusterfs/brick04/

File10.txt File2.txt File4.txt File7.txt File9.txt

[root@gfs05 ~]# ls /data/glusterfs/brick05/

File10.txt File2.txt File4.txt File7.txt File9.txt

[root@gfs06 ~]# ls /data/glusterfs/brick06/

File10.txt File2.txt File4.txt File7.txt File9.txt

And that is how data is distributed and replicated on the GlusterFS distributed replicated storage volume.
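Comparing file names by eye confirms the layout but not the contents. As a rough sketch, a single digest per brick directory can be computed on each node and compared across the members of a replica set; dir_digest is a hypothetical helper, and it looks only at regular files directly inside the directory (hidden entries such as .glusterfs are skipped by the shell glob).

```shell
# Hypothetical helper: print one checksum summarising the names and
# contents of the regular, non-hidden files directly inside a directory.
# Usage: dir_digest DIRECTORY
dir_digest() {
    (
        cd "$1" || exit 1
        for f in *; do
            if [ -f "$f" ]; then
                cksum "$f"      # prints: CRC SIZE NAME
            fi
        done | cksum            # one CRC/size pair for the whole listing
    )
}

# Intended use, run on each node of a replica set and compared by hand:
#   dir_digest /data/glusterfs/brick01
```

Bricks in the same replica set should print the same digest once self-heal has nothing pending; bricks in different sets are expected to differ.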

Reference

Creating Distributed Replicated Volumes Red Hat Gluster Storage
