In this tutorial, you will learn how to automate virtual machine creation on KVM with Terraform. If you frequently create multiple virtual machines on KVM, you have probably realized that the process is time-consuming and hard to manage, especially where consistency is key. This is where Terraform, an Infrastructure as Code tool, comes in: it helps you automate the process and makes infrastructure provisioning consistent, efficient and less error-prone. Terraform can provision infrastructure in both cloud and on-premises environments. This guide is suitable for those who are new to Terraform and want to experiment with it by creating virtual machines on their KVM server. Terraform interacts with the KVM hypervisor through a community-maintained libvirt provider (dmacvicar/libvirt).

Automate Virtual Machine Creation on KVM with Terraform

Introduction to Terraform and Basic Architecture

To begin with, have a look at the guide below for an overview of the core functionality of Terraform.

Introduction to Terraform: Understanding Basic Architecture

Installing Terraform on Linux

Similarly, learn how to install Terraform on Linux.

Install Terraform on Ubuntu 24.04

Install KVM on Linux

And of course, you need to have KVM already installed on your machine. Check the guides below on how to install KVM.

Create Base KVM Virtual Machine with Kickstart

You can create a base image to use when provisioning other virtual machines with Terraform.

Creating KVM Virtual Machines with Kickstart: A Step-by-Step Guide

Provision Single Basic VM using Terraform on KVM

Create Terraform Configuration File

As you already know, Terraform uses a declarative configuration language to define the desired state of the infrastructure resources to be provisioned. To get started, create a directory for your project.

mkdir ~/terraform-kvm

The directory name can be anything that suits you.

Next, navigate into the directory and create the main Terraform configuration file.

cd ~/terraform-kvm

vim main.tf

In the configuration file, define your infrastructure provider/plugin and the resources you need to provision, plus outputs, variables and provisioners if necessary.

We are using the Terraform libvirt provider/plugin to provision KVM virtual machines. Refer to the provider documentation page for more information.

Below is our configuration file;

cat main.tf

terraform {

required_providers {

libvirt = {

source = "dmacvicar/libvirt"

}

}

}

provider "libvirt" {

uri = "qemu:///system"

}

resource "libvirt_volume" "volumes" {

name = "tf-jammy.${var.img_format}"

pool = "default"

source = var.img_source

format = var.img_format

}

resource "libvirt_domain" "guest" {

name = "tf-jammy"

memory = var.mem_size

vcpu = var.cpu_cores

disk {

volume_id = libvirt_volume.volumes.id

}

network_interface {

network_name = "default"

#wait_for_lease = true

}

console {

type = "pty"

target_type = "serial"

target_port = "0"

}

graphics {

type = "spice"

autoport = true

listen_type = "address"

}

}

Where:

- The terraform block specifies the required provider for this configuration. The required provider is libvirt, from dmacvicar/libvirt in the registry.

- The provider "libvirt" block defines the provider-specific configuration, including how to connect to the libvirt host.

- resource "libvirt_volume" defines the storage volume configuration. The image format and source are defined using variables stored in the variables.tf file.

- resource "libvirt_domain" defines the virtual machine parameters such as RAM size, CPU cores, disk configuration, network configuration, console configuration, and graphics configuration. RAM and CPU details are defined in the variables configuration file.
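The configuration above leaves the provider version open, so terraform init picks whichever release is newest at the time. Below is a minimal sketch of pinning it, assuming you want to stay on the 0.7.x series that the sample output in this guide was produced with. The required_version bound is also an assumption, so adjust both to your environment.

```hcl
terraform {
  # Assumption: any Terraform 1.x CLI; adjust to the version you installed.
  required_version = ">= 1.0"

  required_providers {
    libvirt = {
      source = "dmacvicar/libvirt"
      # Assumption: accept 0.7.6 and later patch releases, but not 0.8.0 or newer.
      version = "~> 0.7.6"
    }
  }
}
```

This would replace the terraform block in main.tf. Run terraform init again after changing the constraint, adding -upgrade if the lock file already records a different version.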

Here is our variables configuration file.

cat variables.tf

variable "img_source" {

description = "Ubuntu 22.04 LTS Cloud Image"

default = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img"

}

variable "img_format" {

description = "QCow2 UEFI/GPT Bootable disk image"

default = "qcow2"

type = string

}

variable "mem_size" {

description = "Amount of RAM (in MiB) for the virtual machine"

type = number

default = 2048

}

variable "cpu_cores" {

description = "Number of CPU cores for the virtual machine"

type = number

default = 2

}
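The defaults above can be overridden without editing variables.tf. As a sketch, Terraform automatically loads a file named terraform.tfvars from the working directory. The values below are illustrative assumptions, not recommendations.

```hcl
# terraform.tfvars (hypothetical values for illustration)
mem_size  = 4096 # MiB
cpu_cores = 4
```

The same values can also be passed on the command line with the -var option, for example terraform plan -var 'cpu_cores=4'.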

Initialize Terraform working directory

Once you have the configuration ready, you can run the initialization command to install the required Terraform plugins.

terraform init

Note that we are executing the command in the same directory that contains the main configuration file (main.tf).

ls -1

main.tf

variables.tf

Sample initialization output.

Initializing the backend...

Initializing provider plugins...

- Finding latest version of dmacvicar/libvirt...

- Installing dmacvicar/libvirt v0.7.6...

- Installed dmacvicar/libvirt v0.7.6 (self-signed, key ID 0833E38C51E74D26)

Partner and community providers are signed by their developers.

If you'd like to know more about provider signing, you can read about it here:

https://www.terraform.io/docs/cli/plugins/signing.html

Terraform has created a lock file .terraform.lock.hcl to record the provider

selections it made above. Include this file in your version control repository

so that Terraform can guarantee to make the same selections by default when

you run "terraform init" in the future.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

Validate your Configurations

Although Terraform validates the configuration when you run the plan or apply commands, you can also check that your Terraform files are syntactically valid and internally consistent before running them.

terraform validate

If the configuration files are valid, you should get output like this;

Success! The configuration is valid.

Plan Your Infrastructure

Run the terraform plan command to create an execution plan. This command analyzes your configuration and shows you what Terraform will do when you apply it.

terraform plan

Here is our sample plan. Review the plan to ensure that it matches your expectations.

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# libvirt_domain.guest will be created

+ resource "libvirt_domain" "guest" {

+ arch = (known after apply)

+ autostart = (known after apply)

+ emulator = (known after apply)

+ fw_cfg_name = "opt/com.coreos/config"

+ id = (known after apply)

+ machine = (known after apply)

+ memory = 2048

+ name = "tf-jammy"

+ qemu_agent = false

+ running = true

+ type = "kvm"

+ vcpu = 2

+ console {

+ source_host = "127.0.0.1"

+ source_service = "0"

+ target_port = "0"

+ target_type = "serial"

+ type = "pty"

}

+ disk {

+ scsi = false

+ volume_id = (known after apply)

}

+ graphics {

+ autoport = true

+ listen_address = "127.0.0.1"

+ listen_type = "address"

+ type = "spice"

}

+ network_interface {

+ addresses = (known after apply)

+ hostname = (known after apply)

+ mac = (known after apply)

+ network_id = (known after apply)

+ network_name = "default"

+ wait_for_lease = true

}

}

# libvirt_volume.volumes will be created

+ resource "libvirt_volume" "volumes" {

+ format = "qcow2"

+ id = (known after apply)

+ name = "tf-jammy.qcow2"

+ pool = "default"

+ size = (known after apply)

+ source = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img"

}

Plan: 2 to add, 0 to change, 0 to destroy.

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Note: You didn't use the -out option to save this plan, so Terraform can't guarantee to take exactly these actions if you run "terraform apply"

now.

To save the plan to a file, use the -out=<filename> option.

terraform plan -out=kvm-plan

You will see output like this;

...

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Saved the plan to: kvm-plan

To perform exactly these actions, run the following command to apply:

terraform apply "kvm-plan"

Apply the Configuration

If the plan meets your expectations, proceed to apply the changes and provision the infrastructure.

terraform apply

If you saved the plan to a file, you can specify the plan file. For example;

terraform apply "kvm-plan"

When you run terraform apply without a saved plan file, Terraform asks you to confirm that you really want to perform the actions outlined in the plan. Answer yes to proceed. Applying a saved plan file skips this prompt.

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# libvirt_domain.guest will be created

+ resource "libvirt_domain" "guest" {

+ arch = (known after apply)

+ autostart = (known after apply)

+ emulator = (known after apply)

+ fw_cfg_name = "opt/com.coreos/config"

+ id = (known after apply)

+ machine = (known after apply)

+ memory = 2048

+ name = "tf-jammy"

+ qemu_agent = false

+ running = true

+ type = "kvm"

+ vcpu = 2

+ console {

+ source_host = "127.0.0.1"

+ source_service = "0"

+ target_port = "0"

+ target_type = "serial"

+ type = "pty"

}

+ disk {

+ scsi = false

+ volume_id = (known after apply)

}

+ graphics {

+ autoport = true

+ listen_address = "127.0.0.1"

+ listen_type = "address"

+ type = "spice"

}

+ network_interface {

+ addresses = (known after apply)

+ hostname = (known after apply)

+ mac = (known after apply)

+ network_id = (known after apply)

+ network_name = "default"

}

}

# libvirt_volume.volumes will be created

+ resource "libvirt_volume" "volumes" {

+ format = "qcow2"

+ id = (known after apply)

+ name = "tf-jammy.qcow2"

+ pool = "default"

+ size = (known after apply)

+ source = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img"

}

Plan: 2 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

libvirt_volume.volumes: Creating...

libvirt_volume.volumes: Creation complete after 6s [id=/var/lib/libvirt/images/tf-jammy.qcow2]

libvirt_domain.guest: Creating...

libvirt_domain.guest: Creation complete after 1s [id=dbf39c27-c0bc-4052-a32c-b5c3ee2f3895]

Apply complete! Resources: 2 added, 0 changed, 0 destroyed.

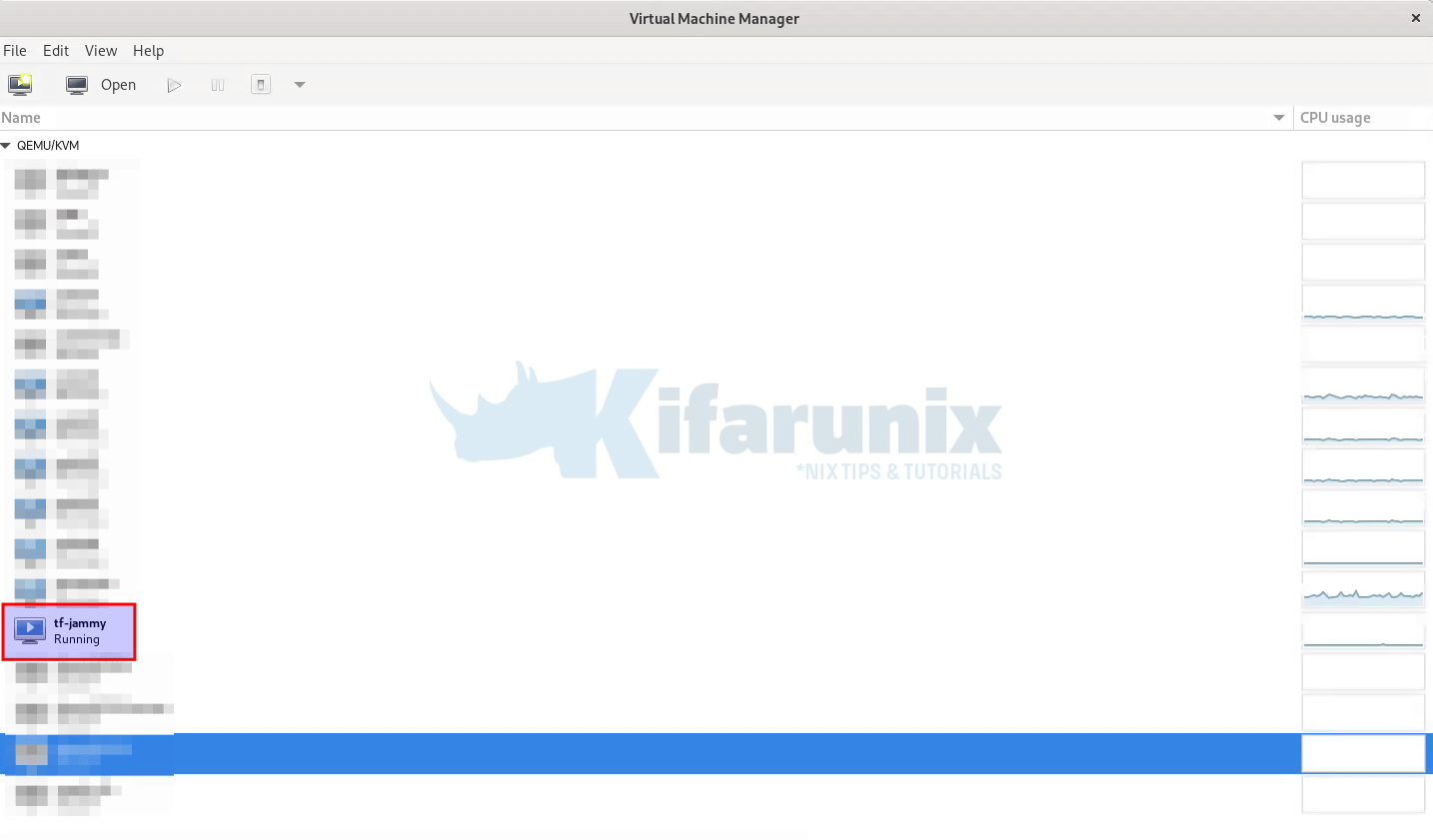

As you can see, our virtual machine is now created!

sudo virsh list

And there you go.

19 tf-jammy running

Confirm from Virt-manager;

So, you have just created a virtual machine that has no user account defined for login and that uses the default network.

If you want to delete the virtual machine, simply execute;

terraform destroy

libvirt_volume.volumes: Refreshing state... [id=/var/lib/libvirt/images/tf-jammy.qcow2]

libvirt_domain.guest: Refreshing state... [id=dbf39c27-c0bc-4052-a32c-b5c3ee2f3895]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

- destroy

Terraform will perform the following actions:

# libvirt_domain.guest will be destroyed

- resource "libvirt_domain" "guest" {

- arch = "x86_64" -> null

- autostart = false -> null

- cmdline = [] -> null

- emulator = "/usr/bin/qemu-system-x86_64" -> null

- fw_cfg_name = "opt/com.coreos/config" -> null

- id = "dbf39c27-c0bc-4052-a32c-b5c3ee2f3895" -> null

- machine = "pc" -> null

- memory = 2048 -> null

- name = "tf-jammy" -> null

- qemu_agent = false -> null

- running = true -> null

- type = "kvm" -> null

- vcpu = 2 -> null

# (3 unchanged attributes hidden)

- console {

- source_host = "127.0.0.1" -> null

- source_service = "0" -> null

- target_port = "0" -> null

- target_type = "serial" -> null

- type = "pty" -> null

# (1 unchanged attribute hidden)

}

- cpu {

- mode = "custom" -> null

}

- disk {

- scsi = false -> null

- volume_id = "/var/lib/libvirt/images/tf-jammy.qcow2" -> null

# (4 unchanged attributes hidden)

}

- graphics {

- autoport = true -> null

- listen_address = "127.0.0.1" -> null

- listen_type = "address" -> null

- type = "spice" -> null

- websocket = 0 -> null

}

- network_interface {

- addresses = [] -> null

- mac = "52:54:00:02:E0:E4" -> null

- network_id = "8a9db7a7-3495-49c3-8137-c43396524bfa" -> null

- network_name = "default" -> null

- wait_for_lease = false -> null

# (5 unchanged attributes hidden)

}

}

# libvirt_volume.volumes will be destroyed

- resource "libvirt_volume" "volumes" {

- format = "qcow2" -> null

- id = "/var/lib/libvirt/images/tf-jammy.qcow2" -> null

- name = "tf-jammy.qcow2" -> null

- pool = "default" -> null

- size = 2361393152 -> null

- source = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img" -> null

}

Plan: 0 to add, 0 to change, 2 to destroy.

Do you really want to destroy all resources?

Terraform will destroy all your managed infrastructure, as shown above.

There is no undo. Only 'yes' will be accepted to confirm.

Enter a value: yes

libvirt_domain.guest: Destroying... [id=dbf39c27-c0bc-4052-a32c-b5c3ee2f3895]

libvirt_domain.guest: Destruction complete after 1s

libvirt_volume.volumes: Destroying... [id=/var/lib/libvirt/images/tf-jammy.qcow2]

libvirt_volume.volumes: Destruction complete after 0s

Destroy complete! Resources: 2 destroyed.

More Advanced: Provision Multiple VMs using Terraform

You can customize your Terraform configuration further to create multiple VMs at once, run them on a specific network and create user accounts on them.

For example, I want to create three virtual machines at once (vm-01, vm-02 and vm-03), attach them to a network called tf-network (192.168.133.0/24), assign them IP addresses dynamically via DHCP and create a user on each of them that authenticates with my password.

This is how I can update my Terraform configuration files.

See the updated variables file.

cat variables.tf

variable "img_source" {

description = "Ubuntu 22.04 LTS Cloud Image"

default = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img"

}

variable "img_format" {

description = "QCow2 UEFI/GPT Bootable disk image"

default = "qcow2"

type = string

}

variable "mem_size" {

description = "Amount of RAM (in MiB) for the virtual machine"

type = number

default = 2048

}

variable "cpu_cores" {

description = "Number of CPU cores for the virtual machine"

type = number

default = 2

}

variable "vm_count" {

description = "Number of virtual machines to create"

type = number

default = 3

}

variable "vm_network" {

description = "Custom network (CIDR) for the virtual machines"

default = "192.168.133.0/24"

}

variable "user_account" {

type = string

default = "kifarunix"

}
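A typo in these variables, such as a malformed CIDR, would otherwise surface only when the provider tries to create the resources. Below is a hedged sketch of input validation using Terraform's built-in validation blocks. The specific rules and messages are assumptions chosen for illustration, and these declarations would replace the matching ones above rather than sit alongside them.

```hcl
variable "vm_count" {
  description = "Number of virtual machines to create"
  type        = number
  default     = 3

  validation {
    # Assumption: at least one VM, and a whole number so range() accepts it.
    condition     = var.vm_count >= 1 && floor(var.vm_count) == var.vm_count
    error_message = "The vm_count value must be a whole number of at least 1."
  }
}

variable "vm_network" {
  description = "Network CIDR for the virtual machines"
  type        = string
  default     = "192.168.133.0/24"

  validation {
    # cidrhost() fails on a malformed prefix; can() turns that failure into false.
    condition     = can(cidrhost(var.vm_network, 0))
    error_message = "The vm_network value must be a valid CIDR block such as 192.168.133.0/24."
  }
}
```

With rules like these, terraform plan rejects an invalid value before any libvirt resource is touched.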

Next, update the main Terraform configuration file to create a network resource as well as the cloud-init disk that creates the user account in the virtual machines. We have also updated the configuration to provision three virtual machines, as per the count defined in the variables file.

cat main.tf

terraform {

required_providers {

libvirt = {

source = "dmacvicar/libvirt"

}

}

}

provider "libvirt" {

uri = "qemu:///system"

}

resource "libvirt_volume" "volumes" {

for_each = toset([for i in range(var.vm_count) : format("vm-%02d", i + 1)])

name = "${each.key}.${var.img_format}"

pool = "default"

source = var.img_source

format = var.img_format

}

resource "libvirt_network" "tf_network" {

name = "tf-network"

mode = "nat"

autostart = true

addresses = [var.vm_network]

dhcp {

enabled = true

}

dns {

enabled = true

}

}

resource "libvirt_domain" "guest" {

for_each = toset([for i in range(var.vm_count) : format("vm-%02d", i + 1)])

name = each.key

memory = var.mem_size

vcpu = var.cpu_cores

disk {

volume_id = libvirt_volume.volumes[each.key].id

}

network_interface {

network_name = libvirt_network.tf_network.name

# wait_for_lease = true

}

cloudinit = libvirt_cloudinit_disk.commoninit.id

console {

type = "pty"

target_type = "serial"

target_port = "0"

}

graphics {

type = "spice"

autoport = true

listen_type = "address"

}

}

resource "libvirt_cloudinit_disk" "commoninit" {

name = "commoninit.iso"

user_data = data.template_file.user_data.rendered

pool = "default"

}

data "template_file" "user_data" {

template = file("${path.module}/cloud_init.cfg")

vars = {

user_account = var.user_account

}

}

output "node_info" {

value = {

for name, vm in libvirt_domain.guest : name => {

name = vm.name

ip_address = vm.network_interface[0].addresses[0]

}

}

}

As you can see, we introduced another provider, hashicorp/template, whose template_file data source renders the cloud-init user data.
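The hashicorp/template provider behind template_file has been deprecated in favour of Terraform's built-in templatefile() function. Below is a sketch of the equivalent cloud-init disk without the extra provider, assuming the same cloud_init.cfg file and user_account variable used in this guide.

```hcl
resource "libvirt_cloudinit_disk" "commoninit" {
  name = "commoninit.iso"
  pool = "default"

  # templatefile() renders the file and substitutes ${user_account} directly,
  # so the data "template_file" block is no longer needed.
  user_data = templatefile("${path.module}/cloud_init.cfg", {
    user_account = var.user_account
  })
}
```

With this variant, the data "template_file" "user_data" block can be removed from main.tf.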

We have also introduced an output that prints the VM names and IP addresses to standard output. The IP addresses are only available once the VMs are up and running.
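Because wait_for_lease is commented out in the configuration above, the addresses list may still be empty when the output is evaluated, and indexing an empty list can make the apply fail with an invalid index error. Below is a hedged variant of the output that falls back to null in that case, using the built-in try() function.

```hcl
output "node_info" {
  value = {
    for name, vm in libvirt_domain.guest : name => {
      name = vm.name
      # try() returns the first expression that evaluates without an error,
      # so an empty addresses list yields null instead of an invalid index error.
      ip_address = try(vm.network_interface[0].addresses[0], null)
    }
  }
}
```

Alternatively, uncommenting wait_for_lease = true in the network_interface block makes the provider wait for a DHCP lease before it reports the domain as created. Running terraform apply -refresh-only later can also pick up addresses that were assigned after the first apply.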

for_each = toset([for i in range(var.vm_count) : format("vm-%02d", i + 1)])

This generates the VM names vm-01, vm-02, vm-03 and so on, up to the number set in vm_count.
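To see what the expression evaluates to, it can be tried in isolation. Below is a minimal sketch for a scratch directory (not the project directory, since vm_count is already declared there). The local value vm_names and the output are hypothetical names introduced for illustration.

```hcl
variable "vm_count" {
  type    = number
  default = 3
}

locals {
  # range(3) yields [0, 1, 2]; format("vm-%02d", i + 1) zero-pads each
  # number to two digits, and toset() turns the list into a set for for_each.
  vm_names = toset([for i in range(var.vm_count) : format("vm-%02d", i + 1)])
}

output "vm_names" {
  value = local.vm_names
}
```

After terraform init, running terraform apply on this file alone creates no resources and prints the three names vm-01, vm-02 and vm-03. In the real configuration, the same local could be referenced as for_each = local.vm_names in both resources so that the expression is written only once.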

The cloud-init user data file is created in the same directory as the main Terraform configuration.

cat cloud_init.cfg

#cloud-config

users:

- name: ${user_account}

  groups: sudo

  shell: /bin/bash

  passwd: $6$FzMWq3kKBNexpm0T$U1BNz7eXKSPoYVR9Y/LClN6FquV/MpesQu5RPI.YGA5cFRKHdh5RgiNi5MA12hUtjFtRfQ6522ymK/wH1IZZM1

  lock_passwd: false

ssh_pwauth: true

The password hash is generated using the mkpasswd -m sha-512 command. On Debian and Ubuntu, mkpasswd is provided by the whois package, in case it is missing on your system.

So, this will create a user called kifarunix, as defined in the variables file, who can log in with a password. Read more in the cloud-config reference.

Next, re-initialize the directory to install the additional required plugins;

terraform init

Initializing the backend...

Initializing provider plugins...

- Finding latest version of hashicorp/template...

- Reusing previous version of dmacvicar/libvirt from the dependency lock file

- Installing hashicorp/template v2.2.0...

- Installed hashicorp/template v2.2.0 (signed by HashiCorp)

- Using previously-installed dmacvicar/libvirt v0.7.6

Terraform has made some changes to the provider dependency selections recorded

in the .terraform.lock.hcl file. Review those changes and commit them to your

version control system if they represent changes you intended to make.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

Validate the configuration;

terraform validate

Success! The configuration is valid.

Run the plan!

terraform plan

data.template_file.user_data: Reading...

data.template_file.user_data: Read complete after 0s [id=a8dd3d6c50dce1a1fe85bff1b7c3150b97c2b092dcdf33505c864fe4cb61a964]

libvirt_volume.volumes: Refreshing state... [id=/var/lib/libvirt/images/tf-jammy.qcow2]

libvirt_domain.guest: Refreshing state... [id=3c1446d1-cbf9-4ccc-98d4-c6f5527a6a15]

Note: Objects have changed outside of Terraform

Terraform detected the following changes made outside of Terraform since the last "terraform apply" which may have affected this plan:

# libvirt_domain.guest has changed

~ resource "libvirt_domain" "guest" {

+ cmdline = []

id = "3c1446d1-cbf9-4ccc-98d4-c6f5527a6a15"

name = "tf-jammy"

# (13 unchanged attributes hidden)

# (5 unchanged blocks hidden)

}

Unless you have made equivalent changes to your configuration, or ignored the relevant attributes using ignore_changes, the following plan may

include actions to undo or respond to these changes.

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

- destroy

Terraform will perform the following actions:

# libvirt_cloudinit_disk.commoninit will be created

+ resource "libvirt_cloudinit_disk" "commoninit" {

+ id = (known after apply)

+ name = "commoninit.iso"

+ pool = "default"

+ user_data = <<-EOT

#cloud-config

users:

- name: kifarunix

groups: sudo

shell: /bin/bash

passwd: $6$FzMWq3kKBNexpm0T$U1BNz7eXKSPoYVR9Y/LClN6FquV/MpesQu5RPI.YGA5cFRKHdh5RgiNi5MA12hUtjFtRfQ6522ymK/wH1IZZM1

lock_passwd: false

ssh_pwauth: true

EOT

}

# libvirt_domain.guest will be destroyed

# (because resource uses count or for_each)

- resource "libvirt_domain" "guest" {

- arch = "x86_64" -> null

- autostart = false -> null

- cmdline = [] -> null

- emulator = "/usr/bin/qemu-system-x86_64" -> null

- fw_cfg_name = "opt/com.coreos/config" -> null

- id = "3c1446d1-cbf9-4ccc-98d4-c6f5527a6a15" -> null

- machine = "pc" -> null

- memory = 2048 -> null

- name = "tf-jammy" -> null

- qemu_agent = false -> null

- running = true -> null

- type = "kvm" -> null

- vcpu = 2 -> null

# (3 unchanged attributes hidden)

- console {

- source_host = "127.0.0.1" -> null

- source_service = "0" -> null

- target_port = "0" -> null

- target_type = "serial" -> null

- type = "pty" -> null

# (1 unchanged attribute hidden)

}

- cpu {

- mode = "custom" -> null

}

- disk {

- scsi = false -> null

- volume_id = "/var/lib/libvirt/images/tf-jammy.qcow2" -> null

# (4 unchanged attributes hidden)

}

- graphics {

- autoport = true -> null

- listen_address = "127.0.0.1" -> null

- listen_type = "address" -> null

- type = "spice" -> null

- websocket = 0 -> null

}

- network_interface {

- addresses = [] -> null

- mac = "52:54:00:5A:A0:D2" -> null

- network_id = "8a9db7a7-3495-49c3-8137-c43396524bfa" -> null

- network_name = "default" -> null

- wait_for_lease = false -> null

# (5 unchanged attributes hidden)

}

}

# libvirt_domain.guest["vm-01"] will be created

+ resource "libvirt_domain" "guest" {

+ arch = (known after apply)

+ autostart = (known after apply)

+ cloudinit = (known after apply)

+ emulator = (known after apply)

+ fw_cfg_name = "opt/com.coreos/config"

+ id = (known after apply)

+ machine = (known after apply)

+ memory = 2048

+ name = "vm-01"

+ qemu_agent = false

+ running = true

+ type = "kvm"

+ vcpu = 2

+ console {

+ source_host = "127.0.0.1"

+ source_service = "0"

+ target_port = "0"

+ target_type = "serial"

+ type = "pty"

}

+ disk {

+ scsi = false

+ volume_id = (known after apply)

}

+ graphics {

+ autoport = true

+ listen_address = "127.0.0.1"

+ listen_type = "address"

+ type = "spice"

}

+ network_interface {

+ addresses = (known after apply)

+ hostname = (known after apply)

+ mac = (known after apply)

+ network_id = (known after apply)

+ network_name = "tf-network"

}

}

# libvirt_domain.guest["vm-02"] will be created

+ resource "libvirt_domain" "guest" {

+ arch = (known after apply)

+ autostart = (known after apply)

+ cloudinit = (known after apply)

+ emulator = (known after apply)

+ fw_cfg_name = "opt/com.coreos/config"

+ id = (known after apply)

+ machine = (known after apply)

+ memory = 2048

+ name = "vm-02"

+ qemu_agent = false

+ running = true

+ type = "kvm"

+ vcpu = 2

+ console {

+ source_host = "127.0.0.1"

+ source_service = "0"

+ target_port = "0"

+ target_type = "serial"

+ type = "pty"

}

+ disk {

+ scsi = false

+ volume_id = (known after apply)

}

+ graphics {

+ autoport = true

+ listen_address = "127.0.0.1"

+ listen_type = "address"

+ type = "spice"

}

+ network_interface {

+ addresses = (known after apply)

+ hostname = (known after apply)

+ mac = (known after apply)

+ network_id = (known after apply)

+ network_name = "tf-network"

}

}

# libvirt_domain.guest["vm-03"] will be created

+ resource "libvirt_domain" "guest" {

+ arch = (known after apply)

+ autostart = (known after apply)

+ cloudinit = (known after apply)

+ emulator = (known after apply)

+ fw_cfg_name = "opt/com.coreos/config"

+ id = (known after apply)

+ machine = (known after apply)

+ memory = 2048

+ name = "vm-03"

+ qemu_agent = false

+ running = true

+ type = "kvm"

+ vcpu = 2

+ console {

+ source_host = "127.0.0.1"

+ source_service = "0"

+ target_port = "0"

+ target_type = "serial"

+ type = "pty"

}

+ disk {

+ scsi = false

+ volume_id = (known after apply)

}

+ graphics {

+ autoport = true

+ listen_address = "127.0.0.1"

+ listen_type = "address"

+ type = "spice"

}

+ network_interface {

+ addresses = (known after apply)

+ hostname = (known after apply)

+ mac = (known after apply)

+ network_id = (known after apply)

+ network_name = "tf-network"

}

}

# libvirt_network.tf_network will be created

+ resource "libvirt_network" "tf_network" {

+ addresses = [

+ "192.168.133.0/24",

]

+ autostart = true

+ bridge = (known after apply)

+ id = (known after apply)

+ mode = "nat"

+ name = "tf-network"

+ dhcp {

+ enabled = true

}

+ dns {

+ enabled = true

}

}

# libvirt_volume.volumes will be destroyed

# (because resource uses count or for_each)

- resource "libvirt_volume" "volumes" {

- format = "qcow2" -> null

- id = "/var/lib/libvirt/images/tf-jammy.qcow2" -> null

- name = "tf-jammy.qcow2" -> null

- pool = "default" -> null

- size = 2361393152 -> null

- source = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img" -> null

}

# libvirt_volume.volumes["vm-01"] will be created

+ resource "libvirt_volume" "volumes" {

+ format = "qcow2"

+ id = (known after apply)

+ name = "vm-01.qcow2"

+ pool = "default"

+ size = (known after apply)

+ source = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img"

}

# libvirt_volume.volumes["vm-02"] will be created

+ resource "libvirt_volume" "volumes" {

+ format = "qcow2"

+ id = (known after apply)

+ name = "vm-02.qcow2"

+ pool = "default"

+ size = (known after apply)

+ source = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img"

}

# libvirt_volume.volumes["vm-03"] will be created

+ resource "libvirt_volume" "volumes" {

+ format = "qcow2"

+ id = (known after apply)

+ name = "vm-03.qcow2"

+ pool = "default"

+ size = (known after apply)

+ source = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img"

}

Plan: 8 to add, 0 to change, 2 to destroy.

Changes to Outputs:

+ node_info = {

+ vm-01 = {

+ ip_address = (known after apply)

+ name = "vm-01"

}

+ vm-02 = {

+ ip_address = (known after apply)

+ name = "vm-02"

}

+ vm-03 = {

+ ip_address = (known after apply)

+ name = "vm-03"

}

}

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Note: You didn't use the -out option to save this plan, so Terraform can't guarantee to take exactly these actions if you run "terraform apply"

now.

Provision the resources;

terraform apply

data.template_file.user_data: Reading...

data.template_file.user_data: Read complete after 0s [id=a8dd3d6c50dce1a1fe85bff1b7c3150b97c2b092dcdf33505c864fe4cb61a964]

libvirt_volume.volumes: Refreshing state... [id=/var/lib/libvirt/images/tf-jammy.qcow2]

libvirt_domain.guest: Refreshing state... [id=3c1446d1-cbf9-4ccc-98d4-c6f5527a6a15]

Note: Objects have changed outside of Terraform

Terraform detected the following changes made outside of Terraform since the last "terraform apply" which may have affected this plan:

# libvirt_domain.guest has changed

~ resource "libvirt_domain" "guest" {

+ cmdline = []

id = "3c1446d1-cbf9-4ccc-98d4-c6f5527a6a15"

name = "tf-jammy"

# (13 unchanged attributes hidden)

# (5 unchanged blocks hidden)

}

Unless you have made equivalent changes to your configuration, or ignored the relevant attributes using ignore_changes, the following plan may

include actions to undo or respond to these changes.

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

- destroy

Terraform will perform the following actions:

# libvirt_cloudinit_disk.commoninit will be created

+ resource "libvirt_cloudinit_disk" "commoninit" {

+ id = (known after apply)

+ name = "commoninit.iso"

+ pool = "default"

+ user_data = <<-EOT

#cloud-config

users:

- name: kifarunix

groups: sudo

shell: /bin/bash

passwd: $6$FzMWq3kKBNexpm0T$U1BNz7eXKSPoYVR9Y/LClN6FquV/MpesQu5RPI.YGA5cFRKHdh5RgiNi5MA12hUtjFtRfQ6522ymK/wH1IZZM1

lock_passwd: false

ssh_pwauth: true

EOT

}

# libvirt_domain.guest will be destroyed

# (because resource uses count or for_each)

- resource "libvirt_domain" "guest" {

- arch = "x86_64" -> null

- autostart = false -> null

- cmdline = [] -> null

- emulator = "/usr/bin/qemu-system-x86_64" -> null

- fw_cfg_name = "opt/com.coreos/config" -> null

- id = "3c1446d1-cbf9-4ccc-98d4-c6f5527a6a15" -> null

- machine = "pc" -> null

- memory = 2048 -> null

- name = "tf-jammy" -> null

- qemu_agent = false -> null

- running = true -> null

- type = "kvm" -> null

- vcpu = 2 -> null

# (3 unchanged attributes hidden)

- console {

- source_host = "127.0.0.1" -> null

- source_service = "0" -> null

- target_port = "0" -> null

- target_type = "serial" -> null

- type = "pty" -> null

# (1 unchanged attribute hidden)

}

- cpu {

- mode = "custom" -> null

}

- disk {

- scsi = false -> null

- volume_id = "/var/lib/libvirt/images/tf-jammy.qcow2" -> null

# (4 unchanged attributes hidden)

}

- graphics {

- autoport = true -> null

- listen_address = "127.0.0.1" -> null

- listen_type = "address" -> null

- type = "spice" -> null

- websocket = 0 -> null

}

- network_interface {

- addresses = [] -> null

- mac = "52:54:00:5A:A0:D2" -> null

- network_id = "8a9db7a7-3495-49c3-8137-c43396524bfa" -> null

- network_name = "default" -> null

- wait_for_lease = false -> null

# (5 unchanged attributes hidden)

}

}

# libvirt_domain.guest["vm-01"] will be created

+ resource "libvirt_domain" "guest" {

+ arch = (known after apply)

+ autostart = (known after apply)

+ cloudinit = (known after apply)

+ emulator = (known after apply)

+ fw_cfg_name = "opt/com.coreos/config"

+ id = (known after apply)

+ machine = (known after apply)

+ memory = 2048

+ name = "vm-01"

+ qemu_agent = false

+ running = true

+ type = "kvm"

+ vcpu = 2

+ console {

+ source_host = "127.0.0.1"

+ source_service = "0"

+ target_port = "0"

+ target_type = "serial"

+ type = "pty"

}

+ disk {

+ scsi = false

+ volume_id = (known after apply)

}

+ graphics {

+ autoport = true

+ listen_address = "127.0.0.1"

+ listen_type = "address"

+ type = "spice"

}

+ network_interface {

+ addresses = (known after apply)

+ hostname = (known after apply)

+ mac = (known after apply)

+ network_id = (known after apply)

+ network_name = "tf-network"

}

}

# libvirt_domain.guest["vm-02"] will be created

+ resource "libvirt_domain" "guest" {

+ arch = (known after apply)

+ autostart = (known after apply)

+ cloudinit = (known after apply)

+ emulator = (known after apply)

+ fw_cfg_name = "opt/com.coreos/config"

+ id = (known after apply)

+ machine = (known after apply)

+ memory = 2048

+ name = "vm-02"

+ qemu_agent = false

+ running = true

+ type = "kvm"

+ vcpu = 2

+ console {

+ source_host = "127.0.0.1"

+ source_service = "0"

+ target_port = "0"

+ target_type = "serial"

+ type = "pty"

}

+ disk {

+ scsi = false

+ volume_id = (known after apply)

}

+ graphics {

+ autoport = true

+ listen_address = "127.0.0.1"

+ listen_type = "address"

+ type = "spice"

}

+ network_interface {

+ addresses = (known after apply)

+ hostname = (known after apply)

+ mac = (known after apply)

+ network_id = (known after apply)

+ network_name = "tf-network"

}

}

# libvirt_domain.guest["vm-03"] will be created

+ resource "libvirt_domain" "guest" {

+ arch = (known after apply)

+ autostart = (known after apply)

+ cloudinit = (known after apply)

+ emulator = (known after apply)

+ fw_cfg_name = "opt/com.coreos/config"

+ id = (known after apply)

+ machine = (known after apply)

+ memory = 2048

+ name = "vm-03"

+ qemu_agent = false

+ running = true

+ type = "kvm"

+ vcpu = 2

+ console {

+ source_host = "127.0.0.1"

+ source_service = "0"

+ target_port = "0"

+ target_type = "serial"

+ type = "pty"

}

+ disk {

+ scsi = false

+ volume_id = (known after apply)

}

+ graphics {

+ autoport = true

+ listen_address = "127.0.0.1"

+ listen_type = "address"

+ type = "spice"

}

+ network_interface {

+ addresses = (known after apply)

+ hostname = (known after apply)

+ mac = (known after apply)

+ network_id = (known after apply)

+ network_name = "tf-network"

}

}

# libvirt_network.tf_network will be created

+ resource "libvirt_network" "tf_network" {

+ addresses = [

+ "192.168.133.0/24",

]

+ autostart = true

+ bridge = (known after apply)

+ id = (known after apply)

+ mode = "nat"

+ name = "tf-network"

+ dhcp {

+ enabled = true

}

+ dns {

+ enabled = true

}

}

# libvirt_volume.volumes will be destroyed

# (because resource uses count or for_each)

- resource "libvirt_volume" "volumes" {

- format = "qcow2" -> null

- id = "/var/lib/libvirt/images/tf-jammy.qcow2" -> null

- name = "tf-jammy.qcow2" -> null

- pool = "default" -> null

- size = 2361393152 -> null

- source = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img" -> null

}

# libvirt_volume.volumes["vm-01"] will be created

+ resource "libvirt_volume" "volumes" {

+ format = "qcow2"

+ id = (known after apply)

+ name = "vm-01.qcow2"

+ pool = "default"

+ size = (known after apply)

+ source = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img"

}

# libvirt_volume.volumes["vm-02"] will be created

+ resource "libvirt_volume" "volumes" {

+ format = "qcow2"

+ id = (known after apply)

+ name = "vm-02.qcow2"

+ pool = "default"

+ size = (known after apply)

+ source = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img"

}

# libvirt_volume.volumes["vm-03"] will be created

+ resource "libvirt_volume" "volumes" {

+ format = "qcow2"

+ id = (known after apply)

+ name = "vm-03.qcow2"

+ pool = "default"

+ size = (known after apply)

+ source = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img"

}

Plan: 8 to add, 0 to change, 2 to destroy.

Changes to Outputs:

+ node_info = {

+ vm-01 = {

+ ip_address = (known after apply)

+ name = "vm-01"

}

+ vm-02 = {

+ ip_address = (known after apply)

+ name = "vm-02"

}

+ vm-03 = {

+ ip_address = (known after apply)

+ name = "vm-03"

}

}

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

libvirt_cloudinit_disk.commoninit: Creating...

libvirt_domain.guest: Destroying... [id=3c1446d1-cbf9-4ccc-98d4-c6f5527a6a15]

libvirt_network.tf_network: Creating...

libvirt_cloudinit_disk.commoninit: Creation complete after 0s [id=/var/lib/libvirt/images/commoninit.iso;7af70c2b-b9e6-44c5-b269-2e0d187f43ba]

libvirt_domain.guest: Destruction complete after 0s

libvirt_volume.volumes: Destroying... [id=/var/lib/libvirt/images/tf-jammy.qcow2]

libvirt_volume.volumes["vm-03"]: Creating...

libvirt_volume.volumes["vm-01"]: Creating...

libvirt_volume.volumes["vm-02"]: Creating...

libvirt_volume.volumes: Destruction complete after 0s

libvirt_network.tf_network: Creation complete after 5s [id=1709e5e9-3e68-4170-af0a-b89330e5921c]

libvirt_volume.volumes["vm-03"]: Creation complete after 7s [id=/var/lib/libvirt/images/vm-03.qcow2]

libvirt_volume.volumes["vm-01"]: Still creating... [10s elapsed]

libvirt_volume.volumes["vm-02"]: Still creating... [10s elapsed]

libvirt_volume.volumes["vm-01"]: Creation complete after 13s [id=/var/lib/libvirt/images/vm-01.qcow2]

libvirt_volume.volumes["vm-02"]: Creation complete after 19s [id=/var/lib/libvirt/images/vm-02.qcow2]

libvirt_domain.guest["vm-02"]: Creating...

libvirt_domain.guest["vm-03"]: Creating...

libvirt_domain.guest["vm-01"]: Creating...

libvirt_domain.guest["vm-02"]: Creation complete after 1s [id=b72a5cc8-ec24-4be1-9423-c023de1c3796]

libvirt_domain.guest["vm-01"]: Creation complete after 1s [id=b911ad4d-3e6b-4dfe-bdbe-34f65d3eb0d6]

libvirt_domain.guest["vm-03"]: Creation complete after 1s [id=6abf0652-c405-4d16-bf0b-c9098b888320]

╷

│ Error: Invalid index

│

│ on main.tf line 81, in output "node_info":

│ 81: ip_address = vm.network_interface[0].addresses[0]

│ ├────────────────

│ │ vm.network_interface[0].addresses is empty list of string

│

│ The given key does not identify an element in this collection value: the collection has no elements.

╵

╷

│ Error: Invalid index

│

│ on main.tf line 81, in output "node_info":

│ 81: ip_address = vm.network_interface[0].addresses[0]

│ ├────────────────

│ │ vm.network_interface[0].addresses is empty list of string

│

│ The given key does not identify an element in this collection value: the collection has no elements.

╵

╷

│ Error: Invalid index

│

│ on main.tf line 81, in output "node_info":

│ 81: ip_address = vm.network_interface[0].addresses[0]

│ ├────────────────

│ │ vm.network_interface[0].addresses is empty list of string

│

│ The given key does not identify an element in this collection value: the collection has no elements.

╵

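The error occurs because the outputs are evaluated as soon as the domains are created, before the VMs have booted and obtained DHCP leases, so the addresses list is still empty. The IP addresses become available only once all the VMs are up and running.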
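One way to keep the first apply from failing is to make the output tolerate an empty address list. The following is a minimal sketch, assuming the node_info output in main.tf iterates over libvirt_domain.guest with a for expression (the error message only shows the ip_address line, so the surrounding structure here is an assumption). It uses Terraform's built-in try() function to fall back to a placeholder string, "pending", which is a hypothetical value chosen for illustration.

```hcl
# Sketch only: the for-expression layout is assumed, not copied from the guide's main.tf.
output "node_info" {
  value = {
    for name, vm in libvirt_domain.guest : name => {
      name = vm.name
      # try() returns the first argument that evaluates without an error.
      # While the VM has no DHCP lease yet, addresses is empty and indexing
      # it fails, so the placeholder "pending" is returned instead.
      ip_address = try(vm.network_interface[0].addresses[0], "pending")
    }
  }
}
```

With this change the first apply should complete and report the placeholder, and a later terraform apply, run once the leases exist, picks up the real addresses. The plan output earlier also lists a wait_for_lease attribute on network_interface, set to false. Setting it to true in the libvirt_domain resource asks the provider to wait for a DHCP lease before it considers the domain created, which may remove the need for a second apply.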

Once the VMs are up, re-run the apply command:

terraform apply

data.template_file.user_data: Reading...

data.template_file.user_data: Read complete after 0s [id=a8dd3d6c50dce1a1fe85bff1b7c3150b97c2b092dcdf33505c864fe4cb61a964]

libvirt_cloudinit_disk.commoninit: Refreshing state... [id=/var/lib/libvirt/images/commoninit.iso;7af70c2b-b9e6-44c5-b269-2e0d187f43ba]

libvirt_volume.volumes["vm-02"]: Refreshing state... [id=/var/lib/libvirt/images/vm-02.qcow2]

libvirt_volume.volumes["vm-01"]: Refreshing state... [id=/var/lib/libvirt/images/vm-01.qcow2]

libvirt_volume.volumes["vm-03"]: Refreshing state... [id=/var/lib/libvirt/images/vm-03.qcow2]

libvirt_network.tf_network: Refreshing state... [id=1709e5e9-3e68-4170-af0a-b89330e5921c]

libvirt_domain.guest["vm-03"]: Refreshing state... [id=6abf0652-c405-4d16-bf0b-c9098b888320]

libvirt_domain.guest["vm-01"]: Refreshing state... [id=b911ad4d-3e6b-4dfe-bdbe-34f65d3eb0d6]

libvirt_domain.guest["vm-02"]: Refreshing state... [id=b72a5cc8-ec24-4be1-9423-c023de1c3796]

Changes to Outputs:

+ node_info = {

+ vm-01 = {

+ ip_address = "192.168.133.176"

+ name = "vm-01"

}

+ vm-02 = {

+ ip_address = "192.168.133.239"

+ name = "vm-02"

}

+ vm-03 = {

+ ip_address = "192.168.133.157"

+ name = "vm-03"

}

}

You can apply this plan to save these new output values to the Terraform state, without changing any real infrastructure.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

Apply complete! Resources: 0 added, 0 changed, 0 destroyed.

Outputs:

node_info = {

"vm-01" = {

"ip_address" = "192.168.133.176"

"name" = "vm-01"

}

"vm-02" = {

"ip_address" = "192.168.133.239"

"name" = "vm-02"

}

"vm-03" = {

"ip_address" = "192.168.133.157"

"name" = "vm-03"

}

}

Alternatively, check the DHCP leases for the network:

sudo virsh net-dhcp-leases tf-network

 Expiry Time           MAC address         Protocol   IP address           Hostname   Client ID or DUID
------------------------------------------------------------------------------------------------------------------------------------------------
 2024-04-15 23:55:55   52:54:00:12:36:af   ipv4       192.168.133.176/24   ubuntu     ff:b5:5e:67:ff:00:02:00:00:ab:11:3f:01:ff:e3:44:bc:34:d8
 2024-04-15 23:55:55   52:54:00:46:4f:6b   ipv4       192.168.133.239/24   ubuntu     ff:b5:5e:67:ff:00:02:00:00:ab:11:39:d7:61:ea:3d:3a:c1:73
 2024-04-15 23:55:55   52:54:00:ee:d5:b1   ipv4       192.168.133.157/24   -          ff:b5:5e:67:ff:00:02:00:00:ab:11:c4:d3:11:18:f1:77:95:02

Check the VMs:

sudo virsh list --all

 Id   Name    State
-----------------------
 55   vm-03   running
 56   vm-01   running
 57   vm-02   running

Log in to one of them to confirm that the user account was created:

sudo virsh console vm-01

Connected to domain 'vm-01'

Escape character is ^] (Ctrl + ])

ubuntu login: kifarunix

Password:

Welcome to Ubuntu 22.04.4 LTS (GNU/Linux 5.15.0-101-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/pro

System information as of Mon Apr 15 20:59:01 UTC 2024

System load: 0.013671875 Processes: 98

Usage of /: 71.3% of 1.96GB Users logged in: 0

Memory usage: 9% IPv4 address for ens3: 192.168.133.56

Swap usage: 0%

Expanded Security Maintenance for Applications is not enabled.

0 updates can be applied immediately.

Enable ESM Apps to receive additional future security updates.

See https://ubuntu.com/esm or run: sudo pro status

The list of available updates is more than a week old.

To check for new updates run: sudo apt update

The programs included with the Ubuntu system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by

applicable law.

To run a command as administrator (user "root"), use "sudo <command>".

See "man sudo_root" for details.

kifarunix@ubuntu:~$ groups

kifarunix sudo

kifarunix@ubuntu:~$

Conclusion

And that is it! You have just automated the creation of virtual machines on KVM using Terraform.

For more on what the libvirt provider can do, refer to the provider documentation.