Welcome to our tutorial on how to use Kubernetes Ingress to manage external access to your Kubernetes services. Kubernetes Ingress simplifies the management of external traffic to services running within a cluster. Together with an Ingress controller, which acts as a reverse proxy, it routes incoming requests to specific services based on rules you define. With Ingress, you can efficiently manage load balancing, SSL/TLS termination, and name-based virtual hosting.


Using Kubernetes Ingress to Manage External Access to Services

What is Kubernetes Ingress?

So, how do you access a Kubernetes Service from outside the cluster? One answer is Kubernetes Ingress, an API object that manages external access to Services within a cluster. It provides HTTP and HTTPS routing, letting you define rules for how incoming traffic is directed to different backend Services in the cluster based on the request’s hostname or path.

It can provide load balancing, SSL termination, and name-based virtual hosting. Essentially, it helps route traffic from outside the cluster to your services inside the cluster based on various rules.
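As an illustration of name-based virtual hosting, here is a minimal sketch of a single Ingress that sends requests for two hostnames to two different Services. The hostnames, the Service names and the nginx IngressClass are hypothetical placeholders, not part of the setup used later in this guide.

```yaml
# Sketch only: hostnames, Service names and the "nginx" IngressClass
# are hypothetical placeholders.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: virtual-hosting-example
spec:
  ingressClassName: nginx
  rules:
    # Requests carrying "Host: app1.example.com" go to app1-service
    - host: app1.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: app1-service
                port:
                  number: 80
    # Requests carrying "Host: app2.example.com" go to app2-service
    - host: app2.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: app2-service
                port:
                  number: 80
```

Both hostnames would resolve to the same Ingress controller IP; the controller tells them apart by the HTTP Host header.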

Key Components of Kubernetes Ingress

What are the core components in Kubernetes Ingress?

- Ingress Resource: A Kubernetes API object, usually written as a YAML manifest, that defines rules for routing external HTTP/HTTPS traffic to Services within the cluster. At a high level, an Ingress resource is made up of routing rules and related configuration, including:
  - Host: The domain name (e.g., kifarunix.com) that a rule applies to. If no host is specified, the rule applies to all inbound HTTP traffic reaching the controller.
  - HTTP: Contains the HTTP routing information for a rule and includes:
    - Path: The URL path (e.g., /about-us) used to match incoming requests and route them to the appropriate backend.
    - PathType: Specifies how the path is matched. The possible values are:
      - Prefix: Matches based on a URL path prefix, compared element by element as split by /. This is useful when you want to handle all paths under a common prefix the same way, such as a section of a site; for example, /about matches /about, /about/team and /about/history (but not /aboutus).
      - Exact: Matches only the exact URL path specified. For instance, if you set the path /about-us with Exact, it matches requests to /about-us and nothing else (not even /about-us/).
      - ImplementationSpecific: Matching is left to the IngressClass, i.e. the Ingress controller. Many controllers treat it like Prefix, but the behavior can vary between implementations.
    - Backend: Defines the destination for matched requests, typically a Service, and includes:
      - Service: The Kubernetes Service to which the request should be routed.
      - Port: The port (by number or by name) on the Service that should receive the traffic.
  - TLS (optional): Configures TLS/SSL termination for the Ingress. This section includes:
    - Hosts: A list of hostnames that should be served over HTTPS.
    - SecretName: The name of the Kubernetes Secret that contains the TLS certificate and private key.
  - Default Backend: A backend that receives all traffic that doesn’t match any of the rules defined in the Ingress resource. It is essentially a fallback, and can be used to display a custom error page or to send such traffic to a specific service.

- Ingress Controller: The component that actually implements the rules defined in Ingress resources, usually by running a reverse proxy. Several Ingress controllers are available (e.g., NGINX, Traefik, HAProxy).

- Service: Represents a set of Pods and a stable network endpoint (IP and port) for accessing them.

- Ingress Class: Determines which Ingress controller should implement a given Ingress (set via spec.ingressClassName). It is useful in environments where multiple controllers are used.
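To show where each of these components lives in a manifest, here is a sketch of a networking.k8s.io/v1 Ingress that uses all of them. The namespace, hostname, Service names and the TLS Secret are hypothetical; example-tls would need to be a Secret of type kubernetes.io/tls holding tls.crt and tls.key.

```yaml
# Sketch mapping the components above to Ingress fields.
# Namespace, host, Service names and Secret name are hypothetical.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: components-example
  namespace: demo
spec:
  ingressClassName: nginx            # Ingress Class: which controller handles this
  defaultBackend:                    # Default Backend: requests matching no rule
    service:
      name: fallback-service
      port:
        number: 80
  tls:                               # TLS: terminate HTTPS for these hosts
    - hosts:
        - www.example.com
      secretName: example-tls        # Secret with tls.crt and tls.key
  rules:
    - host: www.example.com          # Host
      http:                          # HTTP
        paths:
          - path: /about             # Prefix: /about, /about/team, ...
            pathType: Prefix
            backend:                 # Backend: Service name + port
              service:
                name: about-service
                port:
                  number: 80
          - path: /contact           # Exact: only /contact
            pathType: Exact
            backend:
              service:
                name: contact-service
                port:
                  name: http         # a named Service port also works
```

With these rules, www.example.com/about/team would match the Prefix rule, while www.example.com/contact/ would not match the Exact rule. Per the Ingress API, requests that match no rule are meant to go to fallback-service, although the exact fallback behavior can vary by controller.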

How to Set Up Kubernetes Ingress

To set up Kubernetes Ingress, proceed as follows:

- Install an Ingress controller.

- Create a Deployment and a Service to expose it within the cluster.

- Create an Ingress resource to expose the Service externally.

- Set up DNS for your service.

- Verify the setup.

Installing Kubernetes Ingress Controller

To get started, you’ll need an Ingress controller. There are multiple Ingress controllers you can choose from based on your use cases. In this example guide, we will use the NGINX Ingress Controller for Kubernetes.

The NGINX Ingress controller can be deployed in several ways. Since we are running a kubeadm Kubernetes cluster, we will install it from its YAML manifest with the kubectl apply command, as follows:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/main/deploy/static/provider/cloud/deploy.yaml

Sample installation output:

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

serviceaccount/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

configmap/ingress-nginx-controller created

service/ingress-nginx-controller created

service/ingress-nginx-controller-admission created

deployment.apps/ingress-nginx-controller created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

ingressclass.networking.k8s.io/nginx created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

This deploys the Ingress controller as a Deployment in the ingress-nginx namespace. You can list the resources created there with:

kubectl get all -n ingress-nginx

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-admission-create-kl4mr 0/1 Completed 0 85s

pod/ingress-nginx-admission-patch-4zqjb 0/1 Completed 1 85s

pod/ingress-nginx-controller-7d4db76476-5h4cq 1/1 Running 0 85s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx-controller LoadBalancer 10.108.54.181 <pending> 80:30580/TCP,443:30896/TCP 86s

service/ingress-nginx-controller-admission ClusterIP 10.103.61.173 <none> 443/TCP 85s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ingress-nginx-controller 1/1 1 1 85s

NAME DESIRED CURRENT READY AGE

replicaset.apps/ingress-nginx-controller-7d4db76476 1 1 1 85s

NAME STATUS COMPLETIONS DURATION AGE

job.batch/ingress-nginx-admission-create Complete 1/1 5s 85s

job.batch/ingress-nginx-admission-patch Complete 1/1 6s 85s

To view the controller details:

kubectl describe deployment ingress-nginx-controller -n ingress-nginx

Name: ingress-nginx-controller

Namespace: ingress-nginx

CreationTimestamp: Mon, 19 Aug 2024 19:35:45 +0000

Labels: app.kubernetes.io/component=controller

app.kubernetes.io/instance=ingress-nginx

app.kubernetes.io/name=ingress-nginx

app.kubernetes.io/part-of=ingress-nginx

app.kubernetes.io/version=1.11.2

Annotations: deployment.kubernetes.io/revision: 1

Selector: app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

Replicas: 1 desired | 1 updated | 1 total | 1 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 1 max unavailable, 25% max surge

Pod Template:

Labels: app.kubernetes.io/component=controller

app.kubernetes.io/instance=ingress-nginx

app.kubernetes.io/name=ingress-nginx

app.kubernetes.io/part-of=ingress-nginx

app.kubernetes.io/version=1.11.2

Service Account: ingress-nginx

Containers:

controller:

Image: registry.k8s.io/ingress-nginx/controller:v1.11.2@sha256:d5f8217feeac4887cb1ed21f27c2674e58be06bd8f5184cacea2a69abaf78dce

Ports: 80/TCP, 443/TCP, 8443/TCP

Host Ports: 0/TCP, 0/TCP, 0/TCP

SeccompProfile: RuntimeDefault

Args:

/nginx-ingress-controller

--publish-service=$(POD_NAMESPACE)/ingress-nginx-controller

--election-id=ingress-nginx-leader

--controller-class=k8s.io/ingress-nginx

--ingress-class=nginx

--configmap=$(POD_NAMESPACE)/ingress-nginx-controller

--validating-webhook=:8443

--validating-webhook-certificate=/usr/local/certificates/cert

--validating-webhook-key=/usr/local/certificates/key

--enable-metrics=false

Requests:

cpu: 100m

memory: 90Mi

Liveness: http-get http://:10254/healthz delay=10s timeout=1s period=10s #success=1 #failure=5

Readiness: http-get http://:10254/healthz delay=10s timeout=1s period=10s #success=1 #failure=3

Environment:

POD_NAME: (v1:metadata.name)

POD_NAMESPACE: (v1:metadata.namespace)

LD_PRELOAD: /usr/local/lib/libmimalloc.so

Mounts:

/usr/local/certificates/ from webhook-cert (ro)

Volumes:

webhook-cert:

Type: Secret (a volume populated by a Secret)

SecretName: ingress-nginx-admission

Optional: false

Node-Selectors: kubernetes.io/os=linux

Tolerations: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: <none>

NewReplicaSet: ingress-nginx-controller-7d4db76476 (1/1 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 3m49s deployment-controller Scaled up replica set ingress-nginx-controller-7d4db76476 to 1

Create a Deployment and Service

In our environment, we have a custom web app with a home page and an about-us page, running in the ingress namespace.

cat deployment.yaml

# Assumes the "ingress" namespace already exists (kubectl create namespace ingress)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: ingress
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          volumeMounts:
            # Custom server configuration replaces the image's conf.d
            - name: nginx-conf
              mountPath: /etc/nginx/conf.d
            # Home page content
            - name: index-html
              mountPath: /usr/share/nginx/html
            # About-us page content
            - name: about-us-html
              mountPath: /usr/share/nginx/html/about
      volumes:
        # Each volume is populated from a ConfigMap in the same namespace
        - name: nginx-conf
          configMap:
            name: nginx.conf
        - name: index-html
          configMap:
            name: index.html
        - name: about-us-html
          configMap:
            name: about-us.html
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  namespace: ingress
spec:
  selector:
    app: nginx
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  type: LoadBalancer

After applying the manifest with kubectl apply -f deployment.yaml, the Deployment is exposed through a Service of type LoadBalancer.
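Since external traffic in this setup is meant to arrive through the Ingress controller, the backend Service does not strictly need its own external IP: the controller reaches the Pods from inside the cluster. As a sketch, if you were creating the Service from scratch, a ClusterIP variant of nginx-service could look like this; everything except the type is taken from the manifest above.

```yaml
# Sketch: nginx-service as a cluster-internal Service only.
# The Ingress controller forwards to it from inside the cluster.
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  namespace: ingress
spec:
  type: ClusterIP        # no external IP is requested for this Service
  selector:
    app: nginx           # same Pod label as the Deployment
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
```

With this variant, the Service would not consume an address from a load-balancer pool, and the only external entry point would be the Ingress controller.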

kubectl get all -n ingress

NAME READY STATUS RESTARTS AGE

pod/nginx-deployment-78c6f7dd66-qxdsr 1/1 Running 0 10s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/nginx-service LoadBalancer 10.100.105.43 <pending> 80:31617/TCP 10s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-deployment 1/1 1 1 10s

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-deployment-78c6f7dd66 1 1 1 10s

As you can see, the external IP is still pending.

From within the cluster (for example, from one of the cluster nodes), we can access the web pages mentioned above through the Service’s cluster IP:

curl 10.100.105.43

<!DOCTYPE html>

<html>

<head>

<title>Home Page</title>

</head>

<body>

<h1>Welcome to the Home Page!</h1>

<p>This is the default custom page served at the root path.</p>

<a href="/about-us">About Us</a>

</body>

</html>

curl 10.100.105.43/about-us

<!DOCTYPE html>

<html>

<head>

<title>About Us</title>

</head>

<body>

<h1>About Us</h1>

<p>This is the custom About Us page.</p>

<a href="/">Home</a>

</body>

</html>
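The Deployment above mounts three ConfigMaps (nginx.conf, index.html and about-us.html) whose contents are not shown in this guide. As a hypothetical sketch, ConfigMaps like the following would reproduce the two pages returned by curl. The data keys (default.conf, index.html, about-us.html) and the nginx server block are assumptions, not the author’s actual files. The ConfigMaps would need to exist in the ingress namespace before the Pod can start.

```yaml
# Hypothetical ConfigMaps matching the volumes in deployment.yaml.
# Keys and the nginx server block are assumptions.
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx.conf
  namespace: ingress
data:
  default.conf: |
    server {
        listen 80;
        root /usr/share/nginx/html;
        index index.html;

        location / {
            try_files $uri $uri/ =404;
        }

        # /about-us is served from the file mounted under /about
        location = /about-us {
            default_type text/html;
            try_files /about/about-us.html =404;
        }
    }
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: index.html
  namespace: ingress
data:
  index.html: |
    <!DOCTYPE html>
    <html>
    <head>
    <title>Home Page</title>
    </head>
    <body>
    <h1>Welcome to the Home Page!</h1>
    <p>This is the default custom page served at the root path.</p>
    <a href="/about-us">About Us</a>
    </body>
    </html>
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: about-us.html
  namespace: ingress
data:
  about-us.html: |
    <!DOCTYPE html>
    <html>
    <head>
    <title>About Us</title>
    </head>
    <body>
    <h1>About Us</h1>
    <p>This is the custom About Us page.</p>
    <a href="/">Home</a>
    </body>
    </html>
```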

Create an Ingress Resource

Next, we’ll define an Ingress resource to expose the web server Service. The Ingress controller uses this resource to route external traffic, arriving at the controller’s load balancer IP, to the Nginx Service.

You can create an Ingress resource declaratively, using a YAML manifest, or imperatively, using the kubectl create ingress command. The imperative method, however, does not offer the flexibility of the declarative method.

To use the declarative method, create a manifest such as the following:

cat ingress-resource.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress-resource
  namespace: ingress
spec:
  rules:
    - host: kifarunix-demo.com
      http:
        paths:
          - path: /
            pathType: Exact
            backend:
              service:
                name: nginx-service
                port:
                  number: 80
          - path: /about-us
            pathType: Exact
            backend:
              service:
                name: nginx-service
                port:
                  number: 80

Apply it:

kubectl apply -f ingress-resource.yaml

If you want to create the Ingress from the command line instead, the syntax is:

kubectl create ingress NAME --rule=host/path=service:port[,tls[=secret]] [options]

For example, to create a similar Ingress from the command line:

kubectl create ingress nginx-ingress-resource -n ingress \
  --rule="kifarunix-demo.com/*=nginx-service:80" \
  --rule="kifarunix-demo.com/about-us=nginx-service:80"

Note that this is not an exact copy of the manifest above: kubectl create ingress creates a path ending in * (here /*) with pathType Prefix, and a path without it (here /about-us) with pathType Exact. For more options, run kubectl create ingress --help.

List the Ingress resources in your namespace:

kubectl get ingress -n ingress

-n ingress specifies our namespace.

NAME CLASS HOSTS ADDRESS PORTS AGE

nginx-ingress-resource <none> kifarunix-demo.com 80 15s
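The CLASS column shows <none> because the manifest does not set spec.ingressClassName. The ingress-nginx controller normally ignores Ingresses without a class unless its IngressClass is marked as the cluster default or the controller runs with --watch-ingress-without-class=true, so it is safer to name the class explicitly. The deploy.yaml applied earlier created an IngressClass called nginx (ingressclass.networking.k8s.io/nginx in the install output). A sketch of the same Ingress with the class set:

```yaml
# Same Ingress as above, with the IngressClass created by deploy.yaml
# named explicitly so the ingress-nginx controller claims it.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress-resource
  namespace: ingress
spec:
  ingressClassName: nginx
  rules:
    - host: kifarunix-demo.com
      http:
        paths:
          - path: /
            pathType: Exact
            backend:
              service:
                name: nginx-service
                port:
                  number: 80
          - path: /about-us
            pathType: Exact
            backend:
              service:
                name: nginx-service
                port:
                  number: 80
```

On the command line, kubectl create ingress accepts the equivalent --class=nginx flag. Once the controller has claimed the Ingress and has an address of its own, the ADDRESS column is typically filled in.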

Configure DNS for an Ingress Controller

For the Ingress resource to work, you need to point the domain of your application to the external IP address of your Ingress controller.

To find the external IP address assigned to your Ingress Controller service, run the following command to list the services in the ingress-nginx namespace.

kubectl get service -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.108.54.181 <pending> 80:30580/TCP,443:30896/TCP 36m

ingress-nginx-controller-admission ClusterIP 10.103.61.173 <none> 443/TCP 36m

From the output:

- NAME: The name of the Kubernetes Service.
  - ingress-nginx-controller: The primary Service for the Ingress controller. It receives incoming HTTP/HTTPS traffic, which the controller routes based on the Ingress rules.
  - ingress-nginx-controller-admission: The Service for the controller’s validating admission webhook, which checks Ingress resources before the API server accepts them.

- TYPE: The Service type, which determines how the Service is exposed.
  - LoadBalancer: In cloud environments (such as AWS, Azure or GCP), this type provisions a load balancer with an external IP address that routes traffic to the Service.
  - ClusterIP: This type is only reachable from within the Kubernetes cluster; it is not exposed to the outside world.

- CLUSTER-IP: The internal IP address assigned to the Service within the cluster.
  - 10.108.54.181: The internal IP address of the ingress-nginx-controller Service.
  - 10.103.61.173: The internal IP address of the ingress-nginx-controller-admission Service.

- EXTERNAL-IP: The IP address exposed outside the cluster (for LoadBalancer Services).
  - <pending>: An external IP address has not been assigned yet. This usually means the cloud provider has not yet provisioned the load balancer; the field is populated once it is set up. If you are running a local/on-premises Kubernetes cluster, you won’t get an external IP out of the box.
  - <none>: The Service has no external IP because it is a ClusterIP Service, reachable only within the cluster.

- PORT(S): The ports the Service listens on and, for NodePort and LoadBalancer Services, the node ports they are mapped to.
  - 80:30580/TCP: Service port 80 is also exposed on port 30580 on every node.
  - 443:30896/TCP: Service port 443 is also exposed on port 30896 on every node.

So, you would typically point the DNS record for the application’s domain at the EXTERNAL-IP of the ingress-nginx-controller Service.

External IP for Local/On-Premise Kubernetes Cluster: Install MetalLB

If you’re running a non-cloud (on-premises or bare-metal) Kubernetes cluster, you won’t have a cloud provider’s load balancer automatically provisioning an external IP address for your LoadBalancer Service. Instead, you can expose your Ingress controller with a NodePort Service, or use MetalLB, a load-balancer implementation for bare-metal Kubernetes clusters.
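For the NodePort option, note that a LoadBalancer Service already gets node ports (80:30580 and 443:30896 in the output above), so the controller is reachable on any node IP at those ports even while its external IP is pending. If you prefer fixed, explicit node ports, a separate NodePort Service could be sketched as follows. The Service name and the nodePort values are hypothetical (they must fall in the cluster’s NodePort range, 30000-32767 by default); the selector reuses the controller Pod labels shown in the kubectl describe output.

```yaml
# Sketch: hypothetical NodePort Service in front of the ingress-nginx
# controller Pods. Name and nodePort values are placeholders.
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller-nodeport
  namespace: ingress-nginx
spec:
  type: NodePort
  selector:
    # Labels from the controller's Pod template
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  ports:
    - name: http
      protocol: TCP
      port: 80
      targetPort: 80      # controller container port for HTTP
      nodePort: 30080     # reachable on <node-ip>:30080
    - name: https
      protocol: TCP
      port: 443
      targetPort: 443     # controller container port for HTTPS
      nodePort: 30443     # reachable on <node-ip>:30443
```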

In this demo, we will use MetalLB. MetalLB allows you to create Kubernetes services of type LoadBalancer in clusters that don’t run on a cloud provider.

Before you proceed, check which mode kube-proxy is running in; iptables is the default. For example, run this command on one of the cluster nodes, such as the control plane node:

curl localhost:10249/proxyMode

In my case, the output is iptables. If the output is ipvs, kube-proxy is using IPVS to manage and distribute network traffic.

If IPVS is in use, you have to edit the kube-proxy ConfigMap and set strictARP to true:

kubectl get configmap kube-proxy -n kube-system -o yaml | \

sed -e "s/strictARP: false/strictARP: true/" | \

kubectl apply -f - -n kube-system
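The sed command flips a single field inside the KubeProxyConfiguration stored under the config.conf key of the kube-proxy ConfigMap. After the change, the relevant fragment should look roughly like this (other fields omitted). Depending on your setup, the kube-proxy Pods may need to be restarted before the change takes effect.

```yaml
# Fragment of the KubeProxyConfiguration in the kube-proxy ConfigMap
# (key: config.conf); unrelated fields omitted.
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
ipvs:
  strictARP: true
```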

Next, install MetalLB from its manifest. Check the current release version and set VER accordingly:

VER=v0.14.8
kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/${VER}/config/manifests/metallb-native.yaml

Sample installation output:

namespace/metallb-system created

customresourcedefinition.apiextensions.k8s.io/bfdprofiles.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgpadvertisements.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgppeers.metallb.io created

customresourcedefinition.apiextensions.k8s.io/communities.metallb.io created

customresourcedefinition.apiextensions.k8s.io/ipaddresspools.metallb.io created

customresourcedefinition.apiextensions.k8s.io/l2advertisements.metallb.io created

customresourcedefinition.apiextensions.k8s.io/servicel2statuses.metallb.io created

serviceaccount/controller created

serviceaccount/speaker created

role.rbac.authorization.k8s.io/controller created

role.rbac.authorization.k8s.io/pod-lister created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller created

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created

rolebinding.rbac.authorization.k8s.io/controller created

rolebinding.rbac.authorization.k8s.io/pod-lister created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created

configmap/metallb-excludel2 created

secret/metallb-webhook-cert created

service/metallb-webhook-service created

deployment.apps/controller created

daemonset.apps/speaker created

validatingwebhookconfiguration.admissionregistration.k8s.io/metallb-webhook-configuration created

As you can see, MetalLB is installed in its own namespace, metallb-system.

The MetalLB components that are deployed include:

- The metallb-system/controller Deployment, the cluster-wide controller that handles IP address assignments.

- The metallb-system/speaker DaemonSet, the component that speaks the protocol(s) of your choice to make the services reachable.

- Service accounts for the controller and speaker, along with the RBAC permissions that the components need to function.

To confirm that the MetalLB Pods are running:

kubectl get pods -n metallb-system

NAME READY STATUS RESTARTS AGE

controller-6dd967fdc7-brn6z 1/1 Running 0 24s

speaker-cqms7 1/1 Running 0 24s

speaker-f4d2t 1/1 Running 0 24s

speaker-hjnhq 1/1 Running 0 24s

speaker-lxpts 1/1 Running 0 24s

speaker-wprwg 1/1 Running 0 24s

speaker-xk4rg 1/1 Running 0 24s

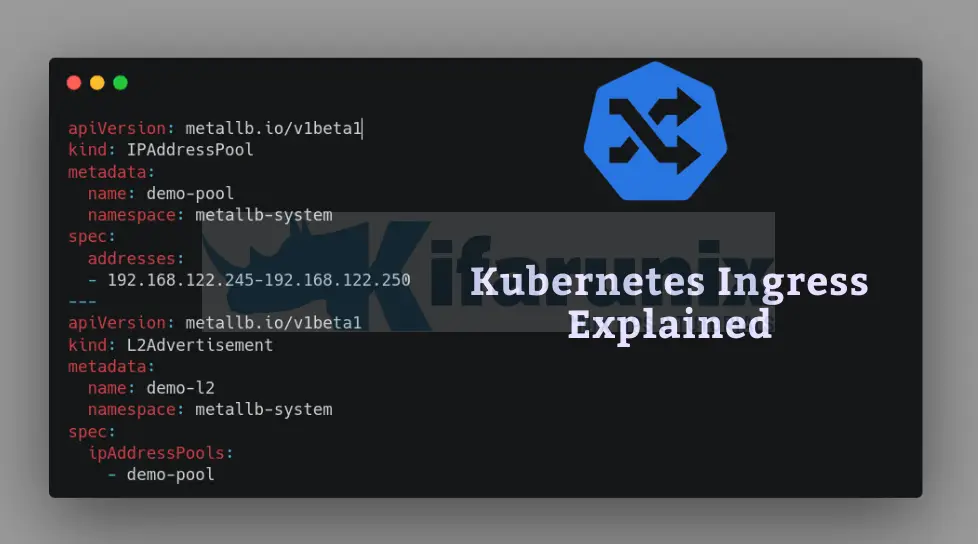

Configure MetalLB Address Allocation

MetalLB stays idle until you give it at least one address pool; it then allocates IPs from any configured pool with free addresses. You therefore need to define a pool of IP addresses for load balancing using an IPAddressPool resource.

You also need to define an L2Advertisement resource to allow MetalLB to broadcast the presence of services with assigned external IPs to devices on the same local network.

We will create these resources from a YAML file as follows:

cat metallb-resources.yaml

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: demo-pool
  namespace: metallb-system
spec:
  addresses:
    - 192.168.122.245-192.168.122.250
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: demo-l2
  namespace: metallb-system
spec:
  ipAddressPools:
    - demo-pool

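Adjust the pool name and address range to match your environment (in L2 mode, the addresses should be unused IPs on the same network as your nodes), as well as the L2Advertisement name.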

Let’s apply the resources:

kubectl apply -f metallb-resources.yaml

Now, if you check the Nginx Ingress controller’s LoadBalancer Service, it should have an external IP:

kubectl get service -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.108.54.181 192.168.122.246 80:30580/TCP,443:30896/TCP 21h

ingress-nginx-controller-admission ClusterIP 10.103.61.173 <none> 443/TCP 21h

Similarly, the application’s Service (nginx-service) should now also have an external IP:

kubectl get service -n ingress

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-service LoadBalancer 10.100.105.43 192.168.122.245 80:31617/TCP 21h

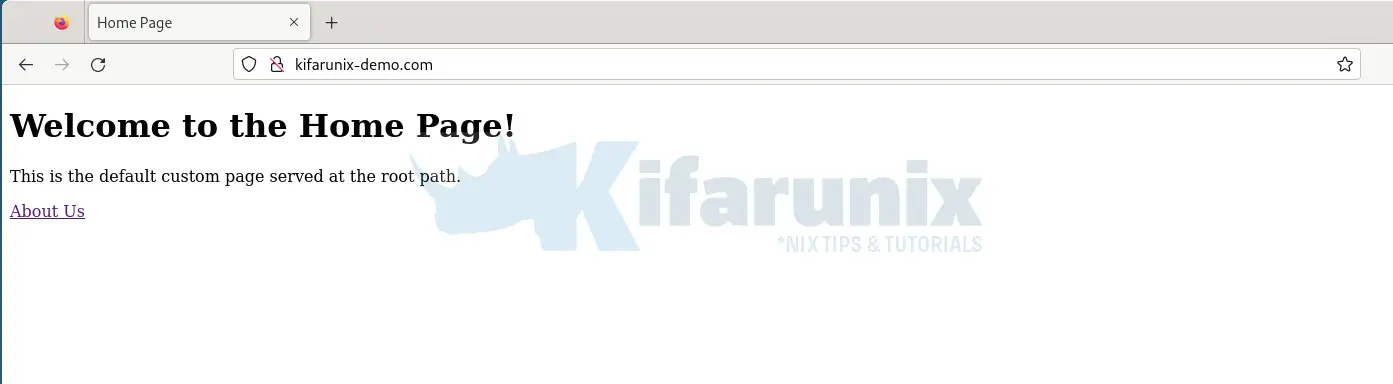

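To access the Nginx app through the Ingress controller, the domain must point at the Ingress controller’s external IP (192.168.122.246 in this example), not at the app Service’s own external IP (192.168.122.245). Requests sent to the latter go straight to the Service and bypass the Ingress rules entirely.

Without a public DNS record, you can map kifarunix-demo.com to the Ingress controller’s IP locally, for example in the client’s /etc/hosts file.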

Accessing home page;

Accessing about-us page;

And that is it!

Conclusion

Kubernetes Ingress is a powerful tool for managing external access to your services, offering flexible routing based on hostnames and URL paths. By following the steps outlined above, you can set up Ingress, expose your services with host- and path-based routing, and build on that with features such as TLS termination.
