What is the difference between the Kubernetes kubectl drain and kubectl cordon commands? Keeping your Kubernetes cluster healthy and running smoothly requires regular maintenance on individual nodes. That maintenance might involve software updates, hardware upgrades, or even a complete OS reinstall. But how do you prepare a node for maintenance without disrupting your running applications? That’s where drain and cordon come in. Let’s explore!

Kubernetes Nodes Maintenance: Drain vs. Cordon

kubectl drain and kubectl cordon are two essential commands in the Kubernetes toolbox that are used to manage the scheduling and eviction of pods on a node, typically during maintenance or upgrades.

Kubectl Drain and Cordon in Layman’s Terms

- Imagine you’re renovating a house. You want everyone to move out temporarily so you can work without disturbances. So, you politely ask the occupants (pods) to move to another house (node) for a while. You make sure they leave gracefully and settle into their new house without any hassle. Once the renovations are done, the house is open to occupants again and ready to shine! This is the act of kubectl drain.

- Now, let’s say you need to fix a few things in your apartment (node), but not everything. You put up a sign saying “closed for renovation,” indicating that no new tenants (pods) can move in for now. However, the ones already living there (pods running on the node) can stay put and continue with their daily routines. This way, you can manage the repairs without causing any disruptions to the existing occupants. This is the action of kubectl cordon.

Diving further, what do kubectl drain and kubectl cordon do exactly?

kubectl cordon

The kubectl cordon command marks a Kubernetes node as unschedulable. This means that no new pods can be scheduled on a node that has been cordoned. However, any existing pods hosted on that specific node are not affected and will continue to run as usual.

kubectl cordon is used when you want to prepare a node for maintenance or upgrades without affecting the currently running pods.

Ideally, if you are planning on evicting the pods running on a node, you can simply use the drain command instead, since it cordons the node first.

Example usage of kubectl cordon command

First of all, if you want to set a node aside for maintenance, you need to identify that specific node. You can use the command below to get a list of nodes;

kubectl get nodes

Sample output;

NAME STATUS ROLES AGE VERSION

master-01 Ready control-plane 3h29m v1.30.1

master-02 Ready control-plane 3h21m v1.30.1

master-03 Ready control-plane 3h16m v1.30.1

worker-01 Ready <none> 174m v1.30.1

worker-02 Ready <none> 172m v1.30.1

worker-03 Ready <none> 172m v1.30.1

Once you know the specific node, then you can go ahead and cordon it.

kubectl cordon <node-name>

For example;

kubectl cordon master-01

So the command;

- marks the master-01 node as unschedulable.

- ensures that no new pods will be scheduled on this node.

- leaves existing pods on this node running until they are manually evicted or terminated.
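To confirm that a cordon took effect, you can read the node's spec.unschedulable field, which is set to true on a cordoned node. The helper below is a minimal sketch; the function name node_schedule_state is made up for illustration and is not part of kubectl.

```shell
# Print "cordoned" or "schedulable" for the given node.
# Assumption: spec.unschedulable is "true" on a cordoned node and
# empty (or false) otherwise.
node_schedule_state() {
  node="$1"
  state="$(kubectl get node "$node" -o jsonpath='{.spec.unschedulable}')"
  if [ "$state" = "true" ]; then
    echo "cordoned"
  else
    echo "schedulable"
  fi
}
```

For example, node_schedule_state master-01. A cordoned node also shows SchedulingDisabled in the STATUS column of kubectl get nodes.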

kubectl drain

The kubectl drain command, on the other hand, performs the actions of kubectl cordon on a node and then evicts the pods from that node. That is to say, it first marks the node as unschedulable so that no new pods are scheduled on it, and then gracefully evicts the pods running there. It is typically used for maintenance tasks where the node needs to be emptied of running pods, for example, during a node upgrade or decommissioning.

Note: The drain command will wait for graceful eviction of all pods. Therefore, you should not operate on the machine until the command completes.

Example Usage of kubectl drain command

To drain a node, identify the node to drain:

kubectl get nodes

Execute the drain command against a node:

kubectl drain <node-name>

When draining a Kubernetes node, all pods except DaemonSet-managed pods and static/mirror pods are safely evicted.

Therefore, if there are DaemonSet-managed pods on a node, the drain command will not proceed without the --ignore-daemonsets option, which tells it to skip such pods.

For more command line options to use, refer to;

kubectl drain --help

Evicted pods that are managed by a controller (for example, a Deployment) are rescheduled on other available nodes.
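As a rough sketch of how these options are commonly combined, the wrapper below drains a node while skipping DaemonSet-managed pods, allowing pods that use emptyDir volumes to be evicted, and giving up after a timeout. The function name drain_node and the 300s default are assumptions for illustration; pick values that suit your workloads.

```shell
# Drain a node with a few commonly used kubectl drain options.
# Usage: drain_node <node-name> [timeout]
drain_node() {
  node="$1"
  timeout="${2:-300s}"   # assumed default, not a kubectl default
  kubectl drain "$node" \
    --ignore-daemonsets \
    --delete-emptydir-data \
    --timeout="$timeout"
}
```

For example, drain_node worker-01 60s. Keep in mind that --delete-emptydir-data means any data held in emptyDir volumes on that node is lost once the pods are evicted.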

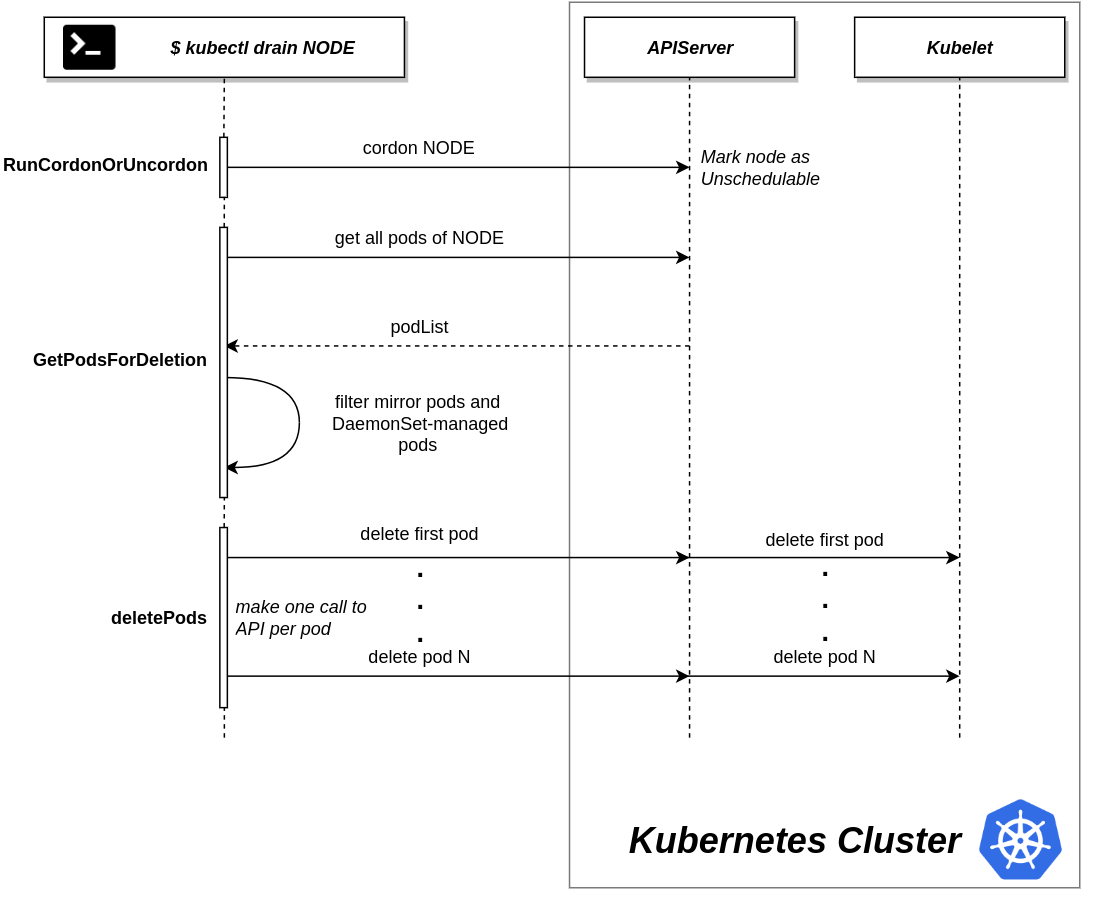

Kubectl Cordon/Drain Illustration

The process of cordoning and evicting pods from the nodes is illustrated in the diagram below.

kubectl drain node gets stuck forever [Apparmor Bug]

Have you tried to drain a node, only for it to take very long or even fail? Well, my worker nodes run on Ubuntu 24.04 and use containerd as the container runtime (CRI). As of this writing, when I tried to drain them, they got stuck forever!

kubectl drain worker-01 --ignore-daemonsets

node/worker-01 cordoned

Warning: ignoring DaemonSet-managed Pods: calico-system/calico-node-7lg6j, calico-system/csi-node-driver-vbztn, kube-system/kube-proxy-9g7c6

evicting pod calico-system/calico-typha-bd8d4bc69-8mnjl

evicting pod apps/nginx-app-6ff7b5d8f6-hsljs

...

And the pods get stuck in the Terminating state!
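To see which pods are still sitting on the node while the drain hangs, you can filter pods by the node they are bound to. This is a small sketch; the function name pods_on_node is hypothetical.

```shell
# List all pods bound to a given node, across all namespaces.
# Pods that are stuck show "Terminating" in the STATUS column.
pods_on_node() {
  node="$1"
  kubectl get pods --all-namespaces \
    --field-selector "spec.nodeName=$node" \
    -o wide
}
```

For example, pods_on_node worker-01.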

This prompted me to check the kernel logs on the worker nodes and alas! AppArmor!

sudo tail -f /var/log/kern.log

2024-06-14T19:04:43.331091+00:00 worker-01 kernel: audit: type=1400 audit(1718391883.329:221): apparmor="DENIED" operation="signal" class="signal" profile="cri-containerd.apparmor.d" pid=7445 comm="runc" requested_mask="receive" denied_mask="receive" signal=kill peer="runc"

In short, the log entry says;

- runc, the low-level runtime that a CRI implementation such as containerd uses to manage containers, attempted to receive the kill signal that terminates the containers.

- However, receiving the kill signal was denied by the AppArmor security policy under the profile cri-containerd.apparmor.d, with runc as the signal's peer (peer="runc"). AppArmor is a Linux kernel security module that restricts what actions processes can perform based on security profiles.
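If you want to pull only the relevant denials out of a busy kernel log, a small filter like the one below may help. It is a sketch that assumes the log format shown above; the function name apparmor_denials is made up, and the log path defaults to /var/log/kern.log (reading it usually requires root).

```shell
# Print AppArmor denials for one profile from a kernel log file.
# Usage: apparmor_denials <profile> [logfile]
apparmor_denials() {
  profile="$1"
  logfile="${2:-/var/log/kern.log}"
  # -F treats the pattern as a fixed string, so dots in the
  # profile name are not interpreted as regex wildcards.
  grep -F 'apparmor="DENIED"' "$logfile" | grep -F "profile=\"$profile\""
}
```

For example, apparmor_denials cri-containerd.apparmor.d.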

Current versions of containerd/runc;

root@worker-03:~# containerd --version

containerd github.com/containerd/containerd 1.7.12

root@worker-03:~# runc --version

runc version 1.1.12-0ubuntu3

spec: 1.0.2-dev

go: go1.22.2

libseccomp: 2.5.5

root@worker-03:~# crictl --version

crictl version v1.30.0

root@worker-03:~#

While you can work around this nuisance by stopping the AppArmor service, you might actually be opening a Pandora’s box on your system. To avoid that, you can instead unload the cri-containerd.apparmor.d profile using the aa-remove-unknown command. This works because the profile is not stored under the AppArmor profiles directory, /etc/apparmor.d/, by default.

According to the man page;

aa-remove-unknown will inventory all profiles in /etc/apparmor.d/, compare that list to the profiles currently loaded into the kernel, and then remove all of the loaded profiles that were not found in /etc/apparmor.d/. It will also report the name of each profile that it removes on standard out.

Therefore, execute the command;

sudo aa-remove-unknown

Your drain command should now execute to completion.

Alternatively, you can temporarily stop the AppArmor service while you are draining the nodes or deleting pods, and start it again once the nodes are drained and the pods terminated.

Fortunately, Sebastian managed to hand-craft a cri-containerd.apparmor.d profile that handles this bug nicely. You can install the profile by running the command below.

sudo tee /etc/apparmor.d/cri-containerd.apparmor.d << 'EOL'

#include <tunables/global>

profile cri-containerd.apparmor.d flags=(attach_disconnected,mediate_deleted) {

#include <abstractions/base>

network,

capability,

file,

umount,

# Host (privileged) processes may send signals to container processes.

signal (receive) peer=unconfined,

# runc may send signals to container processes.

signal (receive) peer=runc,

# crun may send signals to container processes.

signal (receive) peer=crun,

# Manager may send signals to container processes.

signal (receive) peer=cri-containerd.apparmor.d,

# Container processes may send signals amongst themselves.

signal (send,receive) peer=cri-containerd.apparmor.d,

deny @{PROC}/* w, # deny write for all files directly in /proc (not in a subdir)

# deny write to files not in /proc/<number>/** or /proc/sys/**

deny @{PROC}/{[^1-9],[^1-9][^0-9],[^1-9s][^0-9y][^0-9s],[^1-9][^0-9][^0-9][^0-9]*}/** w,

deny @{PROC}/sys/[^k]** w, # deny /proc/sys except /proc/sys/k* (effectively /proc/sys/kernel)

deny @{PROC}/sys/kernel/{?,??,[^s][^h][^m]**} w, # deny everything except shm* in /proc/sys/kernel/

deny @{PROC}/sysrq-trigger rwklx,

deny @{PROC}/mem rwklx,

deny @{PROC}/kmem rwklx,

deny @{PROC}/kcore rwklx,

deny mount,

deny /sys/[^f]*/** wklx,

deny /sys/f[^s]*/** wklx,

deny /sys/fs/[^c]*/** wklx,

deny /sys/fs/c[^g]*/** wklx,

deny /sys/fs/cg[^r]*/** wklx,

deny /sys/firmware/** rwklx,

deny /sys/devices/virtual/powercap/** rwklx,

deny /sys/kernel/security/** rwklx,

# allow processes within the container to trace each other,

# provided all other LSM and yama setting allow it.

ptrace (trace,tracedby,read,readby) peer=cri-containerd.apparmor.d,

}

EOL

Then, check the file for syntactical errors and load it into the AppArmor security subsystem.

sudo apparmor_parser -r /etc/apparmor.d/cri-containerd.apparmor.d

This should also sort out the issue. More on the likely bug.

Be sure to watch the logs, kern.log or syslog, for any issues related to AppArmor.

Dealing with Pod Disruption Budget (PDB)

A Pod Disruption Budget (PDB) is a Kubernetes resource that specifies the minimum number or percentage of an application's pods that must remain available (or, alternatively, the maximum that may be unavailable) at any given time. It is used to ensure that a certain number of pods remain operational during voluntary disruptions, such as maintenance or updates. PDBs are especially important for high availability and stability of applications, ensuring that critical services remain available even during planned disruptions.

When you drain a node using kubectl drain, the pods are removed through the Eviction API, which checks the PDBs to ensure the defined disruption budget is not violated. If evicting a pod would violate the PDB, Kubernetes rejects the eviction; kubectl drain displays an error message and keeps retrying until the eviction succeeds or the drain times out (if a --timeout is set).
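For illustration, here is a minimal sketch of what a PDB manifest can look like, generated by a small shell helper. The helper name pdb_manifest, the PDB name, and the app label key are all assumptions; adapt the selector to the labels your pods actually carry.

```shell
# Print a PodDisruptionBudget manifest to stdout.
# Usage: pdb_manifest <name> <namespace> <app-label> <min-available>
pdb_manifest() {
  cat <<EOF
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: $1
  namespace: $2
spec:
  minAvailable: $4
  selector:
    matchLabels:
      app: $3
EOF
}
```

For example, pdb_manifest nginx-app-pdb apps nginx-app 1 | kubectl apply -f - would ask Kubernetes to keep at least one pod labelled app=nginx-app available in the apps namespace during voluntary disruptions.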

If the drain command is blocked due to PDB violations, you have a few options:

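- Adjust PDBs: Temporarily adjust the PDBs to allow more flexibility during the maintenance window.

- Force Drain: As a last resort, you can bypass the PDB checks with the --disable-eviction flag, which makes drain delete the pods instead of evicting them. Note that the --force flag does not ignore PDBs; it only allows drain to remove pods that are not managed by a controller. Bypassing PDBs should be done with extreme caution as it can cause application downtime.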
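Before picking either option, it can help to find out which budgets are actually blocking the drain. The sketch below lists PDBs whose status currently allows zero disruptions; the function name blocking_pdbs is hypothetical, and it relies on the status.disruptionsAllowed field of the PDB object.

```shell
# Print namespace/name of every PDB that currently allows no disruptions.
blocking_pdbs() {
  kubectl get pdb --all-namespaces \
    -o jsonpath='{range .items[*]}{.metadata.namespace}{"/"}{.metadata.name}{" "}{.status.disruptionsAllowed}{"\n"}{end}' |
    awk '$2 == "0" { print $1 }'
}
```

Any PDB printed here will reject evictions of the pods it covers until more replicas become available or the budget is relaxed.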

Re-enable Scheduling of Pods on a Node

When you are ready to put the node back into service, you can make it schedulable again using the command;

kubectl uncordon <cordoned-node-name>

For example;

kubectl uncordon master-01

Conclusion

In summary;

| Command | Scheduling New Pods | Pod Eviction |
|---|---|---|
| kubectl cordon | Prevents new pods from being scheduled on the node. | Does not evict existing pods. |
| kubectl drain | Prevents new pods from being scheduled on the node. | Evicts existing pods, except DaemonSet-managed pods (skipped with --ignore-daemonsets) and static pods. |
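Putting the commands together, a typical maintenance cycle could be sketched as follows. The function name maintain_node is made up, and the maintenance step is whatever command you pass in; this is an outline under those assumptions rather than a complete runbook.

```shell
# Drain a node, run a maintenance command, then make the node schedulable again.
# Usage: maintain_node <node-name> <command> [args...]
maintain_node() {
  node="$1"
  shift
  # drain cordons the node first, then evicts its pods
  kubectl drain "$node" --ignore-daemonsets || return 1
  "$@"                      # the maintenance step supplied by the caller
  status=$?
  kubectl uncordon "$node"  # re-enable scheduling even if maintenance failed
  return "$status"
}
```

Note that if the drain itself fails, the node is left cordoned, so you may need to run kubectl uncordon manually once the cause is fixed.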

Read more on:

kubectl cordon --help

kubectl drain --help