In this guide, I’ll walk you through how Kubernetes schedules Pods, from creation to running on a node. Kubernetes is a powerful tool for managing containerized applications, and it can feel overwhelming at first. One question I get asked a lot as a DevOps enthusiast is: how does Kubernetes decide where to run Pods? The answer lies in the Kubernetes scheduler, a control-plane component that assigns Pods to nodes. When I started with K8s, I spent hours scratching my head over “Pending” Pods until I cracked the scheduling process. Now, I’m here to break it down for you, step by step.

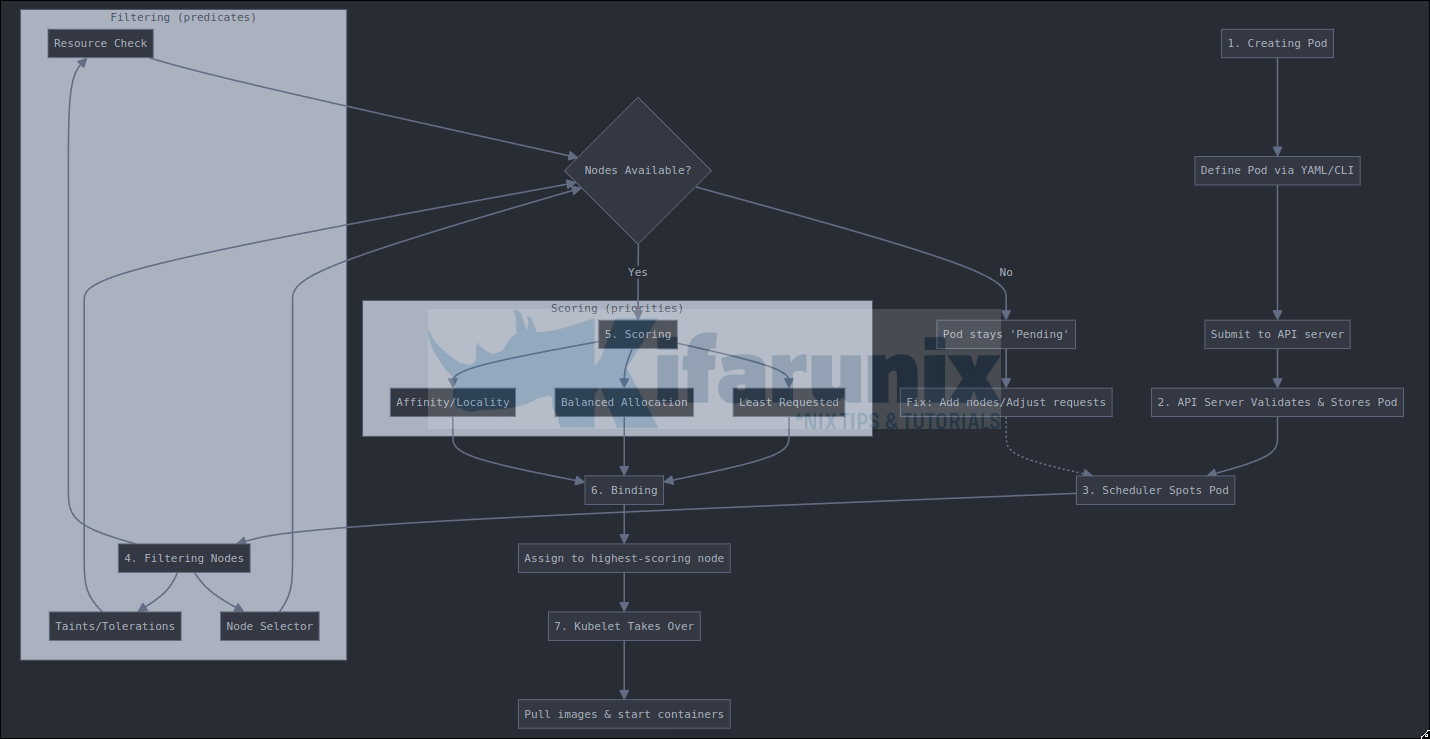

How Kubernetes Schedules Pods

What Is Pod Scheduling in Kubernetes?

Before we get to the steps, let’s clarify: a Pod is the smallest deployable unit in Kubernetes, usually containing one or more containers. Scheduling is how Kubernetes picks a node (a machine in your cluster) to run that Pod. The scheduler considers resources (like CPU and memory), rules you set, and cluster conditions to make its choice. Think of it like finding the perfect parking spot for your car in a busy garage.

Step 1: Creating a Pod

It all starts with you. You define a Pod using a YAML file (declarative approach) or a command line (imperative approach) and send it to the Kubernetes API server. This file tells K8s what you want—like which container image to use and how much CPU or memory it needs.

Here’s a simple example YAML file for creating a Pod.

    $ cat nginx.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: web-svr
    spec:
      containers:
      - name: web-svr-nginx
        image: nginx
        resources:
          requests:
            cpu: "0.5"
            memory: "512Mi"

When you are done creating the Pod manifest YAML file, you submit it with kubectl apply -f nginx.yaml, and the API server registers it.
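To make the resource requests in the manifest concrete, here is a small Python sketch of how quantities such as "0.5" CPU and "512Mi" can be read as plain numbers. The function names are my own for illustration, and the parser only covers the plain, "m", and binary-suffix forms, so treat it as a simplification rather than the full Kubernetes quantity format.

```python
from decimal import Decimal

# Binary suffixes used by Kubernetes memory quantities (powers of 1024).
BINARY_SUFFIXES = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3, "Ti": 1024**4}


def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "0.5" or "500m" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(Decimal(quantity) * 1000)


def memory_to_bytes(quantity: str) -> int:
    """Convert a memory quantity such as "512Mi" to bytes.

    Only binary suffixes and plain byte counts are handled in this sketch.
    """
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[: -len(suffix)]) * factor)
    return int(quantity)


print(cpu_to_millicores("0.5"))   # 500
print(memory_to_bytes("512Mi"))   # 536870912
```

So the Pod above asks for half a CPU core (500 millicores) and 512 MiB of memory.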

Step 2: The API Server Processing

When you submit the Pod, the API server validates the request: it checks that the spec is well formed (e.g., valid YAML or a CLI-generated spec) and that it complies with the Kubernetes API (e.g., required fields like image are present). If the Pod is valid, its definition is saved in etcd, which updates the cluster’s desired state. The Pod’s status is then “Pending,” signaling that it’s waiting for the scheduler to assign it to a node.
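The validate, store, and mark-as-Pending flow can be pictured with a toy Python model. Everything here is hypothetical: `submit_pod` and the `store` dictionary stand in for the API server and etcd, and the checks are only a tiny subset of real API validation.

```python
# Toy stand-in for etcd: maps Pod name -> stored Pod object.
store = {}


def submit_pod(pod: dict) -> dict:
    """Validate a Pod manifest, persist it, and mark it Pending (toy model)."""
    if pod.get("kind") != "Pod":
        raise ValueError("kind must be Pod")
    name = pod.get("metadata", {}).get("name")
    if not name:
        raise ValueError("metadata.name is required")
    containers = pod.get("spec", {}).get("containers", [])
    if not containers:
        raise ValueError("spec.containers must not be empty")
    for container in containers:
        if not container.get("image"):
            raise ValueError("every container needs an image")
    # Persist the desired state together with the initial phase.
    stored = dict(pod, status={"phase": "Pending"})
    store[name] = stored
    return stored


pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-svr"},
    "spec": {"containers": [{"name": "web-svr-nginx", "image": "nginx"}]},
}
print(submit_pod(pod)["status"]["phase"])  # Pending
```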

Step 3: The Scheduler Spots the Pod

The Kubernetes scheduler constantly watches the API server for new, unscheduled Pods. When it sees your Pod with no nodeName set in its spec, it jumps into action. This is like a librarian noticing a new book that needs to be placed on a shelf :).
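In spirit, "spotting" a Pod boils down to checking whether spec.nodeName is empty. The sketch below shows that check over a list of Pod dictionaries; the `unscheduled` helper and the sample Pods are made up for illustration, and the real scheduler uses a watch on the API server rather than scanning a list.

```python
def unscheduled(pods: list) -> list:
    """Return the names of Pods that have no node assigned yet."""
    return [
        p["metadata"]["name"]
        for p in pods
        if not p.get("spec", {}).get("nodeName")
    ]


pods = [
    {"metadata": {"name": "web-svr"}, "spec": {}},
    {"metadata": {"name": "db"}, "spec": {"nodeName": "node-1"}},
]
print(unscheduled(pods))  # ['web-svr']
```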

At this point, kubectl get pods lists the Pod with a status of Pending.

Step 4: Filtering Nodes (formerly predicates)

Now the scheduler filters all the nodes in your cluster to find ones that can run your Pod. It’s picky—it checks things like:

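- Resources: Does the node have enough unreserved CPU and memory? The scheduler compares your Pod’s requests with the node’s allocatable capacity minus what the Pods already there have requested. If your Pod needs 0.5 CPU and 512Mi of memory, nodes with less room than that are out.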

- Node Selectors: Did you specify a label like disktype: ssd or other labels? Only nodes matching the labels will pass.

- Taints and Tolerations: If a node has a “NoSchedule” taint (a “keep out” sign) for example, your Pod needs a matching toleration to get in.

For example, if you have three nodes:

- Node A: 2 CPU, 4Gi memory, tainted.

- Node B: 1 CPU, 2Gi memory, no taints.

- Node C: 0.5 CPU, 1Gi memory, no taints.

And your Pod has no tolerations, Node A gets filtered out due to its taint. Node B has 1 CPU and 2Gi of memory, which is more than enough ✅. Node C has exactly 0.5 CPU and 1Gi of memory, so it meets the CPU request only if no other Pod on it has requested any CPU. If Node C is otherwise empty, your Pod just fits, but it’s a close call!
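The three-node example can be replayed with a short Python sketch. It assumes the CPU and memory figures are the free amounts on each node, models taints as a set of keys, and uses a hypothetical taint key "dedicated" for Node A. The real filter plugins check far more than this.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu_m: int    # unreserved CPU in millicores
    free_mem_mi: int   # unreserved memory in MiB
    taints: set = field(default_factory=set)   # NoSchedule taint keys


@dataclass
class Pod:
    cpu_m: int
    mem_mi: int
    tolerations: set = field(default_factory=set)


def feasible(pod: Pod, nodes: list) -> list:
    """Keep nodes that have room for the Pod and whose taints it tolerates."""
    result = []
    for node in nodes:
        fits = node.free_cpu_m >= pod.cpu_m and node.free_mem_mi >= pod.mem_mi
        tolerated = node.taints <= pod.tolerations  # every taint is tolerated
        if fits and tolerated:
            result.append(node.name)
    return result


nodes = [
    Node("node-a", 2000, 4096, {"dedicated"}),
    Node("node-b", 1000, 2048),
    Node("node-c", 500, 1024),
]
pod = Pod(cpu_m=500, mem_mi=512)
print(feasible(pod, nodes))  # ['node-b', 'node-c']
```

Give the Pod a toleration for "dedicated" and Node A comes back into the list; raise the CPU request above 500 millicores and Node C drops out.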

Learn More: Check the official Kubernetes docs on taints and tolerations for details.

Step 5: Scoring the Options (formerly priorities)

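The scheduler then applies a set of scoring functions (formerly called priorities) to rank the nodes that survived filtering. In the legacy scheduler, each function scored a node from 0 to 10; the current scheduling framework scores from 0 to 100 per plugin and combines the results using plugin weights. The examples here use the 0 to 10 scale. The higher the score, the better the fit. Examples of scoring criteria: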

- Least Requested Resources: Prefers nodes with more free resources (to balance load).

  - Formula: score = (capacity - requested) / capacity * 10.

  - capacity: The total amount of a resource (e.g., CPU, memory) on a node.

  - requested: The amount of that resource requested by Pods on the node, including the Pod being scheduled.

  - (capacity - requested): The remaining, unrequested capacity.

  - (capacity - requested) / capacity: The free fraction, that is, unrequested capacity as a share of total capacity.

  - … * 10: Scales the free fraction to a score between 0 and 10, where 10 means nothing on the node is requested and 0 means the node is fully requested.

- Balanced Resource Allocation: Favors nodes where resource usage (CPU, memory) is more balanced after adding the Pod.

- Node Affinity: Boosts scores for nodes matching preferred affinity rules.

- Image Locality: Gives a higher score if the node already has the Pod’s container image cached.

- Inter-Pod Affinity/Anti-Affinity: Adjusts scores based on proximity to or distance from other Pods.

Suppose that, after scoring, Node B scores 8 and Node C scores 6: Node B wins.
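Here is the least-requested formula as a Python sketch, applied to Nodes B and C from the filtering example. It covers this one criterion only, so the numbers will not match the illustrative totals of 8 and 6. Averaging the CPU and memory scores is an assumption of this sketch, and the requested amounts include the incoming Pod (0.5 CPU, 0.5Gi).

```python
def least_requested_score(capacity: float, requested: float) -> float:
    """Score one resource on a 0-10 scale: more free capacity, higher score."""
    if capacity <= 0 or requested > capacity:
        return 0.0
    return (capacity - requested) / capacity * 10


def node_score(cpu_cap: float, cpu_req: float,
               mem_cap: float, mem_req: float) -> float:
    """Average the CPU and memory scores for one node (sketch assumption)."""
    cpu = least_requested_score(cpu_cap, cpu_req)
    mem = least_requested_score(mem_cap, mem_req)
    return (cpu + mem) / 2


# CPU in cores, memory in Gi; requested values include the incoming Pod.
print(node_score(1.0, 0.5, 2.0, 0.5))  # Node B: 6.25
print(node_score(0.5, 0.5, 1.0, 0.5))  # Node C: 2.5
```

Node B keeps more headroom after the Pod lands, so it scores higher on this criterion.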

Step 6: Binding (Assign Pod to Node)

The scheduler then assigns the Pod to the highest-scoring node. It does this by sending a Binding object for the Pod to the API server, which records the chosen node in the Pod’s spec (e.g., nodeName: node-1).

This is called a “binding” operation.
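As a rough picture of this step, the Python sketch below picks the top-scoring node and returns a copy of the Pod with spec.nodeName filled in. The helper names are hypothetical, and the sketch breaks ties by taking the first node, which is a simplification.

```python
def select_host(scores: dict) -> str:
    """Return the name of the highest-scoring node."""
    if not scores:
        raise ValueError("no feasible nodes")
    return max(scores, key=scores.get)


def bind(pod: dict, node_name: str) -> dict:
    """Return a copy of the Pod with spec.nodeName set (toy binding)."""
    spec = dict(pod.get("spec", {}), nodeName=node_name)
    return dict(pod, spec=spec)


scores = {"node-b": 8, "node-c": 6}
pending = {"metadata": {"name": "web-svr"}, "spec": {"containers": []}}
bound = bind(pending, select_host(scores))
print(bound["spec"]["nodeName"])  # node-b
```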

Step 7: Kubelet Takes Over

The kubelet on the assigned node detects the Pod binding and starts it. The kubelet pulls the container images, starts the containers, and monitors their health.

If the Pod fails (and the restart policy allows), the kubelet handles restarts locally.
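The restart decision depends on the Pod’s restartPolicy, which can be Always, OnFailure, or Never. The Python sketch below captures just that decision for a container that has exited; `should_restart` is a made-up helper, and it ignores the back-off delay the kubelet applies between restarts.

```python
def should_restart(restart_policy: str, exit_code: int) -> bool:
    """Decide whether an exited container should be restarted."""
    if restart_policy == "Always":
        return True
    if restart_policy == "OnFailure":
        return exit_code != 0   # restart only after a non-zero exit
    if restart_policy == "Never":
        return False
    raise ValueError(f"unknown restartPolicy: {restart_policy}")


print(should_restart("OnFailure", 1))  # True
print(should_restart("OnFailure", 0))  # False
```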

What If Scheduling Fails?

Sometimes no node fits, for example when your cluster is maxed out or your Pod’s rules are too strict. The Pod stays “Pending.” I’ve been there: once, I forgot to add a toleration, and my Pod sat lonely for hours. To debug, run kubectl describe pod <pod-name> and check the Events section; the scheduler records a FailedScheduling event with clues like “0/3 nodes are available” and the reasons the nodes were rejected.

The scheduler also retries the Pod periodically, so it gets scheduled once conditions change. If they don’t change on their own, an administrator may need to step in with a fix.

Example Fixes:

- Add more nodes.

- Adjust the Pod’s requirements (resource requests, node selectors, or tolerations).

- Check node taints with: kubectl get nodes -o custom-columns=NAME:.metadata.name,TAINTS:.spec.taints
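If you would rather inspect taints from a script, the same information sits under .spec.taints in the JSON that kubectl get nodes -o json returns. The Python sketch below summarizes taints per node from such a document; the sample data and node names are invented for illustration.

```python
import json


def taints_by_node(node_list: dict) -> dict:
    """Map node name -> list of "key=value:effect" strings from a NodeList."""
    result = {}
    for item in node_list.get("items", []):
        name = item["metadata"]["name"]
        taints = item.get("spec", {}).get("taints") or []
        result[name] = [
            f'{t["key"]}={t.get("value", "")}:{t["effect"]}' for t in taints
        ]
    return result


# Hypothetical sample shaped like `kubectl get nodes -o json` output.
sample = json.loads("""
{
  "items": [
    {"metadata": {"name": "node-a"},
     "spec": {"taints": [
       {"key": "dedicated", "value": "infra", "effect": "NoSchedule"}]}},
    {"metadata": {"name": "node-b"}, "spec": {}}
  ]
}
""")
print(taints_by_node(sample))
# {'node-a': ['dedicated=infra:NoSchedule'], 'node-b': []}
```

A node with an empty list has no taints, so a Pod without tolerations can still land there.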

Conclusion

That’s it: Kubernetes scheduling in 7 steps! From creating a Pod to watching it run, the scheduler’s job is to find the perfect node match. Next time you deploy, you’ll know exactly what’s happening under the hood. Try it out in a test cluster. That closes our guide on how Kubernetes assigns pods to nodes.
