This tutorial provides a step-by-step guide on how to set up a highly available Kubernetes cluster with HAProxy and Keepalived. For anyone managing critical containerized applications with Kubernetes, reliability is a necessity rather than an option. Downtime is more than an inconvenience; it is a direct threat to revenue, brand reputation, and operational stability. This is where a Highly Available (HA) Kubernetes cluster setup becomes indispensable. An HA cluster is designed so that the control plane keeps working when an individual node fails, which lets your applications keep running through such failures. But how do you achieve this robustness?


How to Set Up a Highly Available Kubernetes Cluster

Understanding HA in Kubernetes

A Kubernetes cluster is usually made up of a control plane and worker nodes. The control plane, historically also called the master node, manages the scheduling and orchestration of applications, maintains the cluster’s desired state, and responds to cluster events. Worker nodes handle the real workload of running and managing containers (Pods).

Most Kubernetes deployments run multiple worker nodes with a single control plane. While the workload is distributed among the worker nodes in this setup, there is a risk: if the control plane goes down, you can no longer manage the cluster’s resources, for example scheduling new Pods, updating configurations, or handling API requests.

These are the issues that redundancy and high availability aim to solve, as they ensure:

- Fault tolerance: HA ensures that the Kubernetes cluster can continue to operate even if individual components or nodes fail.

- Business Continuity: An HA setup minimizes the risk of downtime, ensuring business continuity and customer satisfaction.

- Resilience to Maintenance: An HA setup facilitates rolling updates and maintenance activities without disrupting application availability.

- Improved Performance: HA configurations can enhance performance by distributing workloads across multiple nodes and leveraging resources more efficiently.

- Disaster Recovery: In the event of a catastrophic failure or disaster, an HA setup can help minimize data loss and facilitate recovery.

Kubernetes HA Setup Options

There are two major options for deploying a Kubernetes cluster in a high availability setup: stacked control plane nodes and an external etcd cluster. Each of these setups has its own pros and cons.

- Stacked Control Plane Nodes:

  - In this setup, multiple master nodes are deployed to provide high availability.

  - As usual, all control plane components (such as the API server, scheduler, controller manager, and etcd) are installed on each master node.

  - This setup simplifies deployment and management as all components are colocated on the same nodes. Hence, it is suitable for smaller deployments.

  - However, it couples the failure of a control plane node to the failure of an etcd member. If one control plane node goes down, all the components on that node, including its etcd member, become unavailable.

  - To mitigate this risk, you need multiple control plane nodes; at least three are recommended.

- External etcd Cluster:

  - In this configuration, the etcd key-value store, which stores cluster state and configuration data, is deployed separately from the master nodes.

  - The etcd cluster can be deployed in a highly available manner across multiple nodes or even multiple data centers, ensuring resilience against failures.

  - This setup adds complexity to the deployment and management of the Kubernetes cluster but provides greater fault tolerance and reliability.

  - It’s recommended for production environments or large-scale deployments where high availability and data integrity are critical requirements.
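The recommendation of at least three control plane nodes follows from how etcd reaches agreement: a write succeeds only when a majority (quorum) of members acknowledges it. The sketch below is a minimal illustration of that arithmetic; the function names `etcd_quorum` and `etcd_fault_tolerance` are made up for this example and are not part of any tool.

```shell
# etcd_quorum N: print how many of N etcd members must be healthy
# for the cluster to accept writes (a strict majority).
etcd_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# etcd_fault_tolerance N: print how many of N members may fail
# while a majority is still available.
etcd_fault_tolerance() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 5; do
    echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

With one or two members no failure is tolerated, while three members tolerate one failure. This is also why odd member counts are usually preferred: a fourth member raises the quorum without raising the number of failures the cluster can survive.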

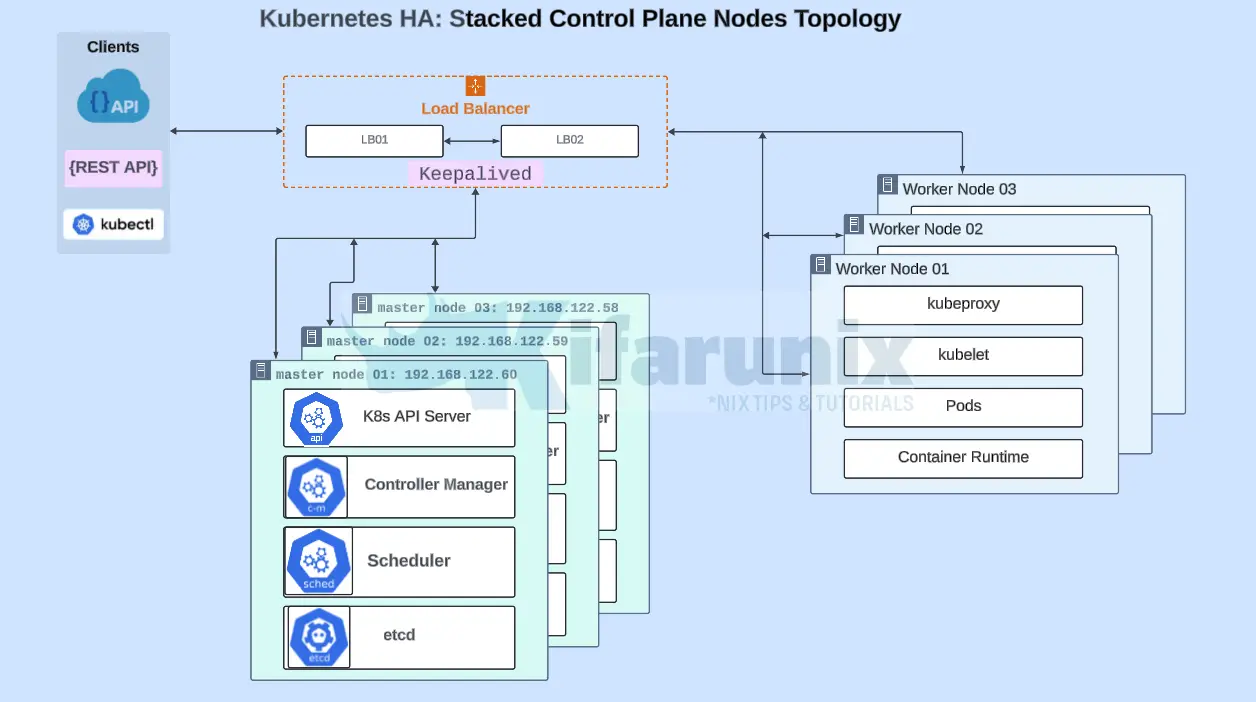

Our Deployment Architecture: Stacked Control Plane

In this guide, we will deploy a highly available Kubernetes cluster using stacked control plane architecture. The diagram below depicts our architecture.

From the architecture above, we have;

- 2 load balancers running HAProxy and Keepalived

- 3 Kubernetes control planes in stacked topology.

- 3 Kubernetes worker nodes

Kubernetes cluster nodes;

| Node | Hostname | IP Address | vCPUs | RAM (GB) | OS |
|---|---|---|---|---|---|
| LB 01 | lb-01 | 192.168.122.56 | 2 | 4 | Ubuntu 24.04 |
| LB 02 | lb-02 | 192.168.122.57 | 2 | 4 | Ubuntu 24.04 |
| Master 1 | master-01 | 192.168.122.58 | 2 | 4 | Ubuntu 24.04 |
| Master 2 | master-02 | 192.168.122.59 | 2 | 4 | Ubuntu 24.04 |
| Master 3 | master-03 | 192.168.122.60 | 2 | 4 | Ubuntu 24.04 |
| Worker 1 | worker-01 | 192.168.122.61 | 2 | 4 | Ubuntu 24.04 |
| Worker 2 | worker-02 | 192.168.122.62 | 2 | 4 | Ubuntu 24.04 |
| Worker 3 | worker-03 | 192.168.122.63 | 2 | 4 | Ubuntu 24.04 |

All the nodes used in this guide are running Ubuntu 24.04 LTS server.

With the nodes in place, proceed with the setup as follows.

Deploy Kubernetes in HA with Haproxy and Keepalived

Why Choose HAProxy and Keepalived?

HAProxy is a powerful, open-source load balancer and proxy server known for its high performance and reliability. It distributes traffic across your Kubernetes control plane nodes, ensuring that the load is balanced and no single node is overwhelmed.

Keepalived is routing software that provides high availability and load balancing. It complements HAProxy by managing Virtual IP addresses (VIPs), ensuring that if one load balancer goes down, another can take over seamlessly.

Install Haproxy and Keepalived on Load Balancer Nodes

On the load balancer nodes, lb-01 and lb-02, install both the HAProxy and Keepalived packages. The guides below cover the installation;

How to Install Keepalived on Ubuntu 24.04

Install HAProxy on Ubuntu 24.04

Configure Keepalived to Provide Virtual IP

Once you have installed Keepalived package, you can configure it to provide a VIP.

Here are our sample configurations;

LB-01;

cat /etc/keepalived/keepalived.conf

global_defs {
    enable_script_security
    script_user root
    vrrp_version 3
    vrrp_min_garp true
}

vrrp_script chk_haproxy {
    script "/usr/bin/systemctl is-active --quiet haproxy"
    fall 2
    rise 2
    interval 2
    weight 50
}

vrrp_instance LB_VIP {
    state MASTER
    interface enp1s0
    virtual_router_id 51
    priority 150
    advert_int 1
    track_interface {
        enp1s0 weight 50
    }
    track_script {
        chk_haproxy
    }
    virtual_ipaddress {
        192.168.122.254/24
    }
}

LB-02;

cat /etc/keepalived/keepalived.conf

global_defs {
    enable_script_security
    script_user root
    vrrp_version 3
    vrrp_min_garp true
}

vrrp_script chk_haproxy {
    script "/usr/bin/systemctl is-active --quiet haproxy"
    fall 2
    rise 2
    interval 2
    weight 50
}

vrrp_instance LB_VIP {
    state BACKUP
    interface enp1s0
    virtual_router_id 51
    priority 100
    advert_int 1
    track_interface {
        enp1s0 weight 50
    }
    track_script {
        chk_haproxy
    }
    virtual_ipaddress {
        192.168.122.254/24
    }
}
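To reason about which load balancer should hold the VIP, it can help to work out each node's effective VRRP priority. The sketch below is a rough model only. It assumes, based on our reading of the Keepalived documentation, that a tracked script or interface with a positive `weight` adds that weight to the base priority while the tracked item is healthy. The function name `effective_priority` and its yes/no arguments are invented for this illustration.

```shell
# effective_priority BASE SCRIPT_OK IFACE_UP
# Assumption: each tracked item (chk_haproxy, enp1s0) has weight 50 and
# adds 50 to the base priority while it is healthy ("yes").
effective_priority() {
    base=$1
    script_ok=$2
    iface_up=$3
    weight=50
    prio=$base
    if [ "$script_ok" = "yes" ]; then
        prio=$(( prio + weight ))
    fi
    if [ "$iface_up" = "yes" ]; then
        prio=$(( prio + weight ))
    fi
    echo "$prio"
}

echo "lb-01, all healthy:  $(effective_priority 150 yes yes)"
echo "lb-02, all healthy:  $(effective_priority 100 yes yes)"
echo "lb-01, HAProxy down: $(effective_priority 150 no yes)"
```

Under this model, lb-01 with HAProxy stopped ends up with the same value as a healthy lb-02. It is therefore worth testing failover explicitly, for example by stopping HAProxy on lb-01 and watching whether the VIP moves, and widening the gap (a larger weight or a smaller difference between the base priorities) if it does not.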

Start Keepalived on both nodes;

sudo systemctl enable --now keepalived

Confirm the IP address on the node defined as master, lb-01 (with the higher priority value);

ip -br a

lo UNKNOWN 127.0.0.1/8 ::1/128
enp1s0 UP 192.168.122.56/24 192.168.122.254/32 fe80::5054:ff:fedb:1d46/64

As you can see, the VIP (192.168.122.254) is assigned.
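If you want to script this check, for instance to find out which load balancer currently holds the VIP, a small helper along these lines may be enough. It is only a sketch: `has_vip` is a name we made up, and it expects text in the `ip -br a` format on standard input.

```shell
# has_vip VIP: read `ip -br a` style output on stdin and succeed (exit 0)
# if VIP is assigned to any interface, regardless of the prefix length.
has_vip() {
    vip=$1
    awk -v vip="$vip" '
        {
            # Fields 3..NF are addresses in ADDRESS/PREFIX form.
            for (i = 3; i <= NF; i++) {
                split($i, parts, "/")
                if (parts[1] == vip) found = 1
            }
        }
        END { exit (found ? 0 : 1) }
    '
}

# Demo using the sample output from lb-01 shown in this guide.
sample='lo UNKNOWN 127.0.0.1/8 ::1/128
enp1s0 UP 192.168.122.56/24 192.168.122.254/32 fe80::5054:ff:fedb:1d46/64'

if printf '%s\n' "$sample" | has_vip 192.168.122.254; then
    echo "VIP present"
else
    echo "VIP absent"
fi
```

On a live node you would pipe the real command into it, as in `ip -br a | has_vip 192.168.122.254`.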

Sample logs can be checked using;

sudo journalctl -f -u keepalived

You can also check traffic related to the VRRP protocol (IP protocol 112);

sudo tcpdump proto 112

Configure HAProxy Load Balancer

On both load balancers, the HAProxy configuration files have the same settings.

Sample configuration used;

cat /etc/haproxy/haproxy.cfg

global
    log /var/log/haproxy.log local0 info
    log-send-hostname
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin
    stats timeout 30s
    user haproxy
    group haproxy
    daemon
    maxconn 524272

defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http

frontend kube-apiserver
    bind *:6443 # Bind to any address so it is accessible via the VIP
    mode tcp
    option tcplog
    default_backend kube-apiserver

backend kube-apiserver
    balance roundrobin
    mode tcp
    option tcp-check
    server master-01 192.168.122.58:6443 check
    server master-02 192.168.122.59:6443 check
    server master-03 192.168.122.60:6443 check

listen stats
    # Binds to the VIP; on the node not currently holding the VIP this bind fails unless net.ipv4.ip_nonlocal_bind=1 is set
    bind 192.168.122.254:8443
    stats enable # Enable statistics reports
    stats hide-version # Hide the version of HAProxy
    stats refresh 30s # HAProxy refresh time
    stats show-node # Show the hostname of the node
    stats auth haadmin:P@ssword # Enforce Basic authentication for the stats page
    stats uri /stats # Statistics URL
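The `maxconn 524272` value in the `global` section has a practical consequence for the file descriptor limit adjusted later in this section. HAProxy needs roughly two descriptors per proxied connection (one towards the client, one towards the server), plus a few more for listeners and health checks. The sketch below only computes the two-per-connection lower bound; the function name `min_fds_for_maxconn` is ours, and the exact overhead HAProxy adds on top is not modelled.

```shell
# min_fds_for_maxconn MAXCONN: print a lower bound on the file descriptors
# HAProxy needs, counting two descriptors per proxied connection.
min_fds_for_maxconn() {
    echo $(( $1 * 2 ))
}

maxconn=524272      # from the global section of haproxy.cfg in this guide
nr_open=1048599     # the fs.nr_open value set in this guide

needed=$(min_fds_for_maxconn "$maxconn")
if [ "$nr_open" -ge "$needed" ]; then
    echo "fs.nr_open=$nr_open covers at least $needed descriptors for maxconn=$maxconn"
else
    echo "fs.nr_open=$nr_open is below the $needed descriptors implied by maxconn=$maxconn"
fi
```

The usual Linux default for `fs.nr_open` is 1048576, which is only slightly above this lower bound. That is presumably why the limit is raised to 1048599 in this setup. If you pick a smaller `maxconn`, the default limit may already be sufficient.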

Be sure to confirm the validity of the HAProxy configuration file;

sudo haproxy -f /etc/haproxy/haproxy.cfg -c -V

Configuration file is valid

Update the HAProxy file descriptor (FD)/open files (NOFILE) limit (done system-wide);

echo "fs.nr_open = 1048599" | sudo tee -a /etc/sysctl.conf
sudo sysctl -p

Start and enable HAProxy to run on system boot;

sudo systemctl enable --now haproxy

Prepare Nodes for Kubernetes Deployment

In this setup, we will use kubeadm to deploy our Kubernetes cluster, the usual way. Therefore, follow the steps below to set up the nodes for Kubernetes deployment in HA.

- Disable Swap on Cluster Nodes

- Enable Kernel IP forwarding on Cluster Nodes

- Load Some Required Kernel Modules on Cluster Nodes

- Install Container Runtime on Cluster Nodes

- Install Kubernetes on Cluster Nodes

- Mark Hold Kubernetes Packages

It is recommended that the versions of the Kubernetes components kubeadm, kubelet, and kubectl match.

kubectl version -o yaml

clientVersion:
  buildDate: "2024-05-14T10:50:53Z"
  compiler: gc
  gitCommit: 6911225c3f747e1cd9d109c305436d08b668f086
  gitTreeState: clean
  gitVersion: v1.30.1
  goVersion: go1.22.2
  major: "1"
  minor: "30"
  platform: linux/amd64
kustomizeVersion: v5.0.4-0.20230601165947-6ce0bf390ce3

kubelet --version

Kubernetes v1.30.1

kubeadm version -o yaml

clientVersion:
  buildDate: "2024-05-14T10:49:05Z"
  compiler: gc
  gitCommit: 6911225c3f747e1cd9d109c305436d08b668f086
  gitTreeState: clean
  gitVersion: v1.30.1
  goVersion: go1.22.2
  major: "1"
  minor: "30"
  platform: linux/amd64
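When preparing several nodes, comparing these version strings by eye gets tedious. A helper like the one below can do the comparison once you have collected the strings; it is a minimal sketch, and `versions_match` is a hypothetical name, not a kubeadm or kubectl feature.

```shell
# versions_match V1 [V2 ...]: succeed (exit 0) only if every argument
# is the same version string as the first one.
versions_match() {
    first=$1
    for v in "$@"; do
        [ "$v" = "$first" ] || return 1
    done
    return 0
}

# Values copied from the sample kubectl, kubelet and kubeadm output above.
if versions_match v1.30.1 v1.30.1 v1.30.1; then
    echo "kubeadm, kubelet and kubectl versions match"
else
    echo "version mismatch between Kubernetes components"
fi
```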

Open Kubernetes Cluster Ports on Firewall

Ensure that the required cluster ports are opened.

On the load balancers, ensure the API server port, 6443/tcp, is open;

sudo iptables -A INPUT -p tcp -m multiport --dports 22,6443 -j ACCEPT

On control plane nodes;

sudo iptables -A INPUT -p tcp -m multiport --dports 6443,2379:2380,10250:10252 -j ACCEPT

On worker nodes;

sudo iptables -A INPUT -p tcp -m multiport --dports 10250,10256,30000:32767 -j ACCEPT

You can also use UFW or Firewalld…
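If you provision nodes with a script, the three rules above can be derived from the node's role. The sketch below prints the commands rather than running them. The role names `lb`, `control-plane` and `worker` and both function names are our own labels; the port lists are copied from the rules in this guide. Note that current Kubernetes documentation lists 10257 (kube-controller-manager) and 10259 (kube-scheduler) instead of the older 10251 and 10252, so adjust the control plane list if you need those ports reachable.

```shell
# ports_for_role ROLE: print the multiport list used in this guide for ROLE.
ports_for_role() {
    case "$1" in
        lb)            echo "22,6443" ;;
        control-plane) echo "6443,2379:2380,10250:10252" ;;
        worker)        echo "10250,10256,30000:32767" ;;
        *)             echo "unknown role: $1" >&2; return 1 ;;
    esac
}

# rule_for_role ROLE: print (do not execute) the matching iptables command.
rule_for_role() {
    ports=$(ports_for_role "$1") || return 1
    echo "sudo iptables -A INPUT -p tcp -m multiport --dports $ports -j ACCEPT"
}

for role in lb control-plane worker; do
    rule_for_role "$role"
done
```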

Save the rules;

sudo iptables-save | sudo tee /etc/iptables/rules.v4
sudo systemctl restart netfilter-persistent

Initialize First Control Plane

Once the nodes are ready as per the above steps, log in to one of the control plane nodes, for example master-01 in our setup, and initialize it using the command below.

sudo kubeadm init \
    --control-plane-endpoint "LOAD_BALANCER_ADDRESS:LOAD_BALANCER_PORT" \
    --upload-certs \
    --pod-network-cidr=POD_NETWORK

Where;

- --control-plane-endpoint "LOAD_BALANCER_ADDRESS:LOAD_BALANCER_PORT" defines the load balancer VIP and port. All nodes and the generated kubeconfig files will reach the API server through this endpoint. Replace LOAD_BALANCER_ADDRESS with the IP/DNS name of the load balancer and LOAD_BALANCER_PORT with the port number.

- --upload-certs tells kubeadm to upload the control plane certificates to the cluster, where they are stored in the kubeadm-certs Secret. This is what allows other control plane nodes to download them and join the cluster securely. It is also possible to distribute the certificates manually.

- --pod-network-cidr=POD_NETWORK sets the CIDR (Classless Inter-Domain Routing) block for the Pod network. Whether you need it depends on the CNI you will use. We will be using the Calico CNI, which requires the Pod network to be defined, hence we specify it. The Pod network CIDR must not overlap with any existing networks in your environment and must be large enough to accommodate the maximum number of Pods you anticipate deploying in your cluster.
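The no-overlap requirement for the Pod network can be checked before running kubeadm init. Two IPv4 CIDR blocks overlap exactly when they agree on the first N bits, where N is the shorter of the two prefix lengths. The sketch below implements that test in plain shell arithmetic; the function names `ip_to_int` and `cidr_overlap` are ours, and the demo assumes the node network is 192.168.122.0/24 (from the addresses used in this guide) and that the Service range is kubeadm's default of 10.96.0.0/12.

```shell
# ip_to_int A.B.C.D: print the IPv4 address as a single integer.
ip_to_int() {
    ip=$1
    o1=${ip%%.*}; ip=${ip#*.}
    o2=${ip%%.*}; ip=${ip#*.}
    o3=${ip%%.*}; o4=${ip#*.}
    echo $(( (o1 << 24) + (o2 << 16) + (o3 << 8) + o4 ))
}

# cidr_overlap CIDR1 CIDR2: succeed (exit 0) if the two blocks overlap,
# i.e. if they share the same leading bits up to the shorter prefix length.
cidr_overlap() {
    a_ip=${1%/*}; a_len=${1#*/}
    b_ip=${2%/*}; b_len=${2#*/}
    if [ "$a_len" -lt "$b_len" ]; then len=$a_len; else len=$b_len; fi
    shift_bits=$(( 32 - len ))
    a=$(( $(ip_to_int "$a_ip") >> shift_bits ))
    b=$(( $(ip_to_int "$b_ip") >> shift_bits ))
    [ "$a" -eq "$b" ]
}

pod_cidr="10.100.0.0/16"
for other in "192.168.122.0/24" "10.96.0.0/12"; do
    if cidr_overlap "$pod_cidr" "$other"; then
        echo "$pod_cidr overlaps $other"
    else
        echo "$pod_cidr does not overlap $other"
    fi
done
```

The check reports that 10.100.0.0/16 is clear of the node network but falls inside 10.96.0.0/12, which spans 10.96.0.0 to 10.111.255.255. If your cluster keeps the default Service range, a Pod range outside that span may be the safer choice.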

So, our first control plane initialization command looks like this;

sudo kubeadm init \
    --control-plane-endpoint "192.168.122.254:6443" \
    --upload-certs \
    --pod-network-cidr=10.100.0.0/16

Sample control plane initialization command output;

[init] Using Kubernetes version: v1.30.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master-01] and IPs [10.96.0.1 192.168.122.58 192.168.122.254]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master-01] and IPs [192.168.122.58 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master-01] and IPs [192.168.122.58 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "super-admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests"

[kubelet-check] Waiting for a healthy kubelet. This can take up to 4m0s

[kubelet-check] The kubelet is healthy after 502.855988ms

[api-check] Waiting for a healthy API server. This can take up to 4m0s

[api-check] The API server is healthy after 3.505516376s

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

8089be8fb63febd32a17e9d623d6c514088235de2637c75add56c9905078575f

[mark-control-plane] Marking the node master-01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master-01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: x1yxt2.3bbj98bg05ynqx6x

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.122.254:6443 --token x1yxt2.3bbj98bg05ynqx6x \

--discovery-token-ca-cert-hash sha256:9e8aa4b38599a819f5b80de36871d95947295135b30a07915f7cf152760bbf4f \

--control-plane --certificate-key 8089be8fb63febd32a17e9d623d6c514088235de2637c75add56c9905078575f

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.122.254:6443 --token x1yxt2.3bbj98bg05ynqx6x \

--discovery-token-ca-cert-hash sha256:9e8aa4b38599a819f5b80de36871d95947295135b30a07915f7cf152760bbf4f

Next, to be able to interact with the cluster from the first control plane, run the following commands as a non-root user.

First, create a Kubernetes cluster directory.

mkdir -p $HOME/.kube

Copy Kubernetes admin configuration file to the cluster directory created above.

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

Set the proper ownership for the cluster configuration file.

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Verify that you can interact with the Kubernetes cluster from the first control plane by running a kubectl command such as;

kubectl cluster-info

Kubernetes control plane is running at https://192.168.122.254:6443

CoreDNS is running at https://192.168.122.254:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

Deploy Pod Network Addon on the Control Plane

For Pods to communicate with one another, you must deploy a Container Network Interface (CNI) based Pod network add-on.

Check the link below on how to install a CNI.

How to Install Kubernetes Pod Network Addon

After a short while, you can verify that the Calico CNI Pods are running;

kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

calico-system calico-kube-controllers-68cdb9587c-9xffh 1/1 Running 0 39s

calico-system calico-node-lbhjx 1/1 Running 0 39s

calico-system calico-typha-5845c66fc9-7vv7x 1/1 Running 0 39s

calico-system csi-node-driver-6mpls 2/2 Running 0 39s

kube-system coredns-7db6d8ff4d-jnswv 1/1 Running 0 3m5s

kube-system coredns-7db6d8ff4d-n9sz6 1/1 Running 0 3m5s

kube-system etcd-master-01 1/1 Running 0 3m20s

kube-system kube-apiserver-master-01 1/1 Running 0 3m20s

kube-system kube-controller-manager-master-01 1/1 Running 0 3m20s

kube-system kube-proxy-txjrh 1/1 Running 0 3m5s

kube-system kube-scheduler-master-01 1/1 Running 0 3m20s

tigera-operator tigera-operator-7d5cd7fcc8-l8bl5 1/1 Running 0 71s

Initialize Other Control Plane Nodes

When you initialized the first control plane, a command to initialize and join other control planes into the cluster is printed to the standard output.

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.122.254:6443 --token x1yxt2.3bbj98bg05ynqx6x \

--discovery-token-ca-cert-hash sha256:9e8aa4b38599a819f5b80de36871d95947295135b30a07915f7cf152760bbf4f \

--control-plane --certificate-key 8089be8fb63febd32a17e9d623d6c514088235de2637c75add56c9905078575f

Thus, copy the command and execute it on the other control plane nodes to join them to the cluster;

sudo kubeadm join 192.168.122.254:6443 --token x1yxt2.3bbj98bg05ynqx6x \
    --discovery-token-ca-cert-hash sha256:9e8aa4b38599a819f5b80de36871d95947295135b30a07915f7cf152760bbf4f \
    --control-plane \
    --certificate-key 8089be8fb63febd32a17e9d623d6c514088235de2637c75add56c9905078575f

Please note that the certificate-key gives access to cluster sensitive data. As a safeguard, uploaded-certs will be deleted in two hours. If you want to add another control plane into the cluster after two hours since you initialized the first control plane, you can use the command below to re-upload the certificates and generate a new decryption key.

sudo kubeadm init phase upload-certs --upload-certs

Sample output;

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key:
458b0e87a28080c4792333e2d1fdbe7c28ea216e72016998c0b04326a75579c8

Print the join command;

kubeadm token create --print-join-command

Sample output;

kubeadm join 192.168.122.254:6443 --token q7sc7n.snwhru3n8e3o9lsq --discovery-token-ca-cert-hash sha256:ac08ef4c66538dfbf86a9cd554399c3d979ff370dfc9ca9119ac4ec45fdd0691

Then the command to join another control plane node into the cluster becomes;

sudo kubeadm join 192.168.122.254:6443 --token q7sc7n.snwhru3n8e3o9lsq --discovery-token-ca-cert-hash sha256:ac08ef4c66538dfbf86a9cd554399c3d979ff370dfc9ca9119ac4ec45fdd0691 --control-plane --certificate-key XXX

Where XXX is the certificate key printed by the sudo kubeadm init phase upload-certs --upload-certs command.
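Putting the two pieces together by hand is error-prone, so a small helper can assemble the control plane join command from the printed worker join command and the certificate key. This is a sketch only: the function names are hypothetical, and the validation assumes the certificate key is 64 lowercase hexadecimal characters, which matches the keys shown in this guide.

```shell
# is_cert_key KEY: succeed if KEY looks like a kubeadm certificate key
# (assumed here to be exactly 64 lowercase hex characters).
is_cert_key() {
    case "$1" in
        ''|*[!0-9a-f]*) return 1 ;;
    esac
    [ "${#1}" -eq 64 ]
}

# control_plane_join_cmd JOIN_CMD KEY: print JOIN_CMD extended with the
# flags that turn a worker join into a control plane join.
control_plane_join_cmd() {
    if ! is_cert_key "$2"; then
        echo "invalid certificate key" >&2
        return 1
    fi
    printf '%s --control-plane --certificate-key %s\n' "$1" "$2"
}

# Sample values copied from the outputs above.
worker_join='kubeadm join 192.168.122.254:6443 --token q7sc7n.snwhru3n8e3o9lsq --discovery-token-ca-cert-hash sha256:ac08ef4c66538dfbf86a9cd554399c3d979ff370dfc9ca9119ac4ec45fdd0691'
cert_key='458b0e87a28080c4792333e2d1fdbe7c28ea216e72016998c0b04326a75579c8'

control_plane_join_cmd "$worker_join" "$cert_key"
```

The helper refuses placeholders such as XXX, which guards against pasting the template command unchanged.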

Sample cluster join command output;

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[download-certs] Saving the certificates to the folder: "/etc/kubernetes/pki"

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master-02] and IPs [10.96.0.1 192.168.122.59 192.168.122.254]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master-02] and IPs [192.168.122.59 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master-02] and IPs [192.168.122.59 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-check] Waiting for a healthy kubelet. This can take up to 4m0s

[kubelet-check] The kubelet is healthy after 501.537305ms

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Creating static Pod manifest for "etcd"

{"level":"warn","ts":"2024-06-08T05:37:40.997002Z","logger":"etcd-client","caller":"[email protected]/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc0006b9180/192.168.122.58:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}

{"level":"warn","ts":"2024-06-08T05:37:41.494954Z","logger":"etcd-client","caller":"[email protected]/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc0006b9180/192.168.122.58:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

The 'update-status' phase is deprecated and will be removed in a future release. Currently it performs no operation

[mark-control-plane] Marking the node master-02 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master-02 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

You can see that the type of cluster is auto-detected: the output reports that a new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from other control plane nodes, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run the same join command on the other control plane node and install the kubeconfig so that you can administer the cluster as a regular user.

Then run the command below to confirm that the node has been added to the cluster.

kubectl get nodes

Sample output;

NAME STATUS ROLES AGE VERSION

master-01 Ready control-plane 13m v1.30.1

master-02 Ready control-plane 5m39s v1.30.1

master-03 Ready control-plane 18s v1.30.1

As you can see, we now have three control plane nodes in the cluster.
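For scripted checks, the same information can be pulled out of the kubectl get nodes output. The helper below is a minimal sketch with a made-up name, `ready_control_planes`; it reads the table on standard input and counts rows whose STATUS is Ready and whose ROLES column contains control-plane.

```shell
# ready_control_planes: read `kubectl get nodes` output on stdin and print
# the number of nodes that are Ready and carry the control-plane role.
ready_control_planes() {
    awk 'NR > 1 && $2 == "Ready" && $3 ~ /control-plane/ { n++ } END { print n + 0 }'
}

# Demo using the sample output above.
sample='NAME STATUS ROLES AGE VERSION
master-01 Ready control-plane 13m v1.30.1
master-02 Ready control-plane 5m39s v1.30.1
master-03 Ready control-plane 18s v1.30.1'

count=$(printf '%s\n' "$sample" | ready_control_planes)
echo "Ready control plane nodes: $count"
```

On a live cluster you would run `kubectl get nodes | ready_control_planes` and compare the result with the number of control plane nodes you expect, three in this setup.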

Similarly, check the Pods related to the control plane in the kube-system namespace.

kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7db6d8ff4d-jnswv 1/1 Running 0 21m

coredns-7db6d8ff4d-n9sz6 1/1 Running 0 21m

etcd-master-01 1/1 Running 0 21m

etcd-master-02 1/1 Running 0 14m

etcd-master-03 1/1 Running 0 8m55s

kube-apiserver-master-01 1/1 Running 0 21m

kube-apiserver-master-02 1/1 Running 0 14m

kube-apiserver-master-03 1/1 Running 0 9m1s

kube-controller-manager-master-01 1/1 Running 0 21m

kube-controller-manager-master-02 1/1 Running 0 14m

kube-controller-manager-master-03 1/1 Running 0 8m54s

kube-proxy-hfb98 1/1 Running 0 9m3s

kube-proxy-mfwvj 1/1 Running 0 14m

kube-proxy-txjrh 1/1 Running 0 21m

kube-scheduler-master-01 1/1 Running 0 21m

kube-scheduler-master-02 1/1 Running 0 14m

kube-scheduler-master-03 1/1 Running 0 8m59s

Looks good!

Add Worker Nodes to Kubernetes Cluster

You can now add Worker nodes to the Kubernetes cluster using the kubeadm join command.

Ensure that the container runtime is installed, configured and running. We are using the containerd CRI;

systemctl status containerd

Sample output from the worker-01 node;

● containerd.service - containerd container runtime

Loaded: loaded (/usr/lib/systemd/system/containerd.service; enabled; preset: enabled)

Active: active (running) since Fri 2024-06-07 06:20:19 UTC; 23h ago

Docs: https://containerd.io

Main PID: 38484 (containerd)

Tasks: 9

Memory: 12.9M (peak: 13.4M)

CPU: 2min 32.555s

CGroup: /system.slice/containerd.service

└─38484 /usr/bin/containerd

Jun 07 06:20:19 worker-01 containerd[38484]: time="2024-06-07T06:20:19.751551807Z" level=info msg=serving... address=/run/containerd/containerd.sock.ttrpc

Jun 07 06:20:19 worker-01 containerd[38484]: time="2024-06-07T06:20:19.751584533Z" level=info msg=serving... address=/run/containerd/containerd.sock

Jun 07 06:20:19 worker-01 containerd[38484]: time="2024-06-07T06:20:19.751793867Z" level=info msg="Start subscribing containerd event"

Jun 07 06:20:19 worker-01 containerd[38484]: time="2024-06-07T06:20:19.751859875Z" level=info msg="Start recovering state"

Jun 07 06:20:19 worker-01 containerd[38484]: time="2024-06-07T06:20:19.751939832Z" level=info msg="Start event monitor"

Jun 07 06:20:19 worker-01 containerd[38484]: time="2024-06-07T06:20:19.751986850Z" level=info msg="Start snapshots syncer"

Jun 07 06:20:19 worker-01 containerd[38484]: time="2024-06-07T06:20:19.751999301Z" level=info msg="Start cni network conf syncer for default"

Jun 07 06:20:19 worker-01 containerd[38484]: time="2024-06-07T06:20:19.752004404Z" level=info msg="Start streaming server"

Jun 07 06:20:19 worker-01 containerd[38484]: time="2024-06-07T06:20:19.752041268Z" level=info msg="containerd successfully booted in 0.068873s"

Jun 07 06:20:19 worker-01 systemd[1]: Started containerd.service - containerd container runtime.

Next, get the cluster join command that was output during cluster bootstrapping and execute it on each worker node.

Note that this command is displayed after initializing the first control plane above;

...

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.122.254:6443 --token x1yxt2.3bbj98bg05ynqx6x \

--discovery-token-ca-cert-hash sha256:9e8aa4b38599a819f5b80de36871d95947295135b30a07915f7cf152760bbf4f

Copy the command and execute it as the root user (or with sudo);

sudo kubeadm join 192.168.122.254:6443 \
    --token x1yxt2.3bbj98bg05ynqx6x \
    --discovery-token-ca-cert-hash sha256:9e8aa4b38599a819f5b80de36871d95947295135b30a07915f7cf152760bbf4f

If you didn’t save the Kubernetes cluster join command, you can print a new one at any time by running the command below on any of the control plane nodes;

kubeadm token create --print-join-command
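Bootstrap tokens expire after 24 hours by default, so a join command saved from an earlier session may no longer work. Before pasting a join command onto a worker, it can help to check that the token and CA hash at least have the expected shape. The sketch below is a minimal illustration; the function names `valid_bootstrap_token` and `valid_ca_cert_hash` are hypothetical helpers, not part of kubeadm.

```shell
# Hypothetical helpers (not part of kubeadm): check that the two values in a
# join command are well formed before running it on a worker node.

# A bootstrap token has the form <6 chars>.<16 chars>, lowercase letters and digits.
valid_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# The discovery hash is "sha256:" followed by 64 lowercase hex characters.
valid_ca_cert_hash() {
  printf '%s\n' "$1" | grep -Eq '^sha256:[a-f0-9]{64}$'
}

# Example usage (commented out so that sourcing this file has no side effects):
# valid_bootstrap_token "x1yxt2.3bbj98bg05ynqx6x" && echo "token format OK"
```

A well-formed token can still be expired; running `kubeadm token list` on a control plane node shows the tokens that are currently valid.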

Worker node cluster join command sample output;

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-check] Waiting for a healthy kubelet. This can take up to 4m0s

[kubelet-check] The kubelet is healthy after 501.841547ms

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

On a Kubernetes control plane node (as the regular user with which you created the cluster), run the command below to verify that the nodes have joined the cluster.

kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-01 Ready control-plane 37m v1.30.1

master-02 Ready control-plane 29m v1.30.1

master-03 Ready control-plane 24m v1.30.1

worker-01 Ready <none> 2m53s v1.30.1

worker-02 Ready <none> 22s v1.30.1

worker-03 Ready <none> 17s v1.30.1

All worker nodes are joined to the cluster and are Ready to handle workloads.
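With more than a handful of nodes, reading the STATUS column by eye gets tedious. The following is a small sketch that lists any node whose status is not exactly Ready; the function name `not_ready_nodes` is a hypothetical helper, and it expects the output of `kubectl get nodes --no-headers` on standard input.

```shell
# Hypothetical helper: print the names of nodes whose STATUS is not exactly "Ready".
# Input: the output of `kubectl get nodes --no-headers` on stdin.
# Note: a cordoned node reports "Ready,SchedulingDisabled" and is listed as well.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Example usage (commented out so that sourcing this file has no side effects):
# kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means every node reports Ready. As an alternative, `kubectl wait --for=condition=Ready nodes --all --timeout=120s` blocks until all nodes are Ready or the timeout expires.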

The ROLES column for the worker nodes may show <none>. This is okay. kubectl derives that column from node-role.kubernetes.io/<role> labels, and kubeadm only applies such a label to the control plane nodes. Worker nodes keep showing <none> until you label them yourself; the label is informational only.

You can, however, set the role using the command;

kubectl label node <worker-node-name> node-role.kubernetes.io/worker=true

Get Kubernetes Cluster Information

As you can see, we now have a cluster. Run the command below to get cluster information.

kubectl cluster-info

Kubernetes control plane is running at https://192.168.122.254:6443

CoreDNS is running at https://192.168.122.254:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

List Kubernetes Cluster API Resources

You can list all the API resource types that the cluster supports using the command below;

kubectl api-resources

NAME SHORTNAMES APIVERSION NAMESPACED KIND

bindings v1 true Binding

componentstatuses cs v1 false ComponentStatus

configmaps cm v1 true ConfigMap

endpoints ep v1 true Endpoints

events ev v1 true Event

limitranges limits v1 true LimitRange

namespaces ns v1 false Namespace

nodes no v1 false Node

persistentvolumeclaims pvc v1 true PersistentVolumeClaim

persistentvolumes pv v1 false PersistentVolume

pods po v1 true Pod

podtemplates v1 true PodTemplate

replicationcontrollers rc v1 true ReplicationController

resourcequotas quota v1 true ResourceQuota

secrets v1 true Secret

serviceaccounts sa v1 true ServiceAccount

services svc v1 true Service

mutatingwebhookconfigurations admissionregistration.k8s.io/v1 false MutatingWebhookConfiguration

validatingadmissionpolicies admissionregistration.k8s.io/v1 false ValidatingAdmissionPolicy

validatingadmissionpolicybindings admissionregistration.k8s.io/v1 false ValidatingAdmissionPolicyBinding

validatingwebhookconfigurations admissionregistration.k8s.io/v1 false ValidatingWebhookConfiguration

customresourcedefinitions crd,crds apiextensions.k8s.io/v1 false CustomResourceDefinition

apiservices apiregistration.k8s.io/v1 false APIService

controllerrevisions apps/v1 true ControllerRevision

daemonsets ds apps/v1 true DaemonSet

deployments deploy apps/v1 true Deployment

replicasets rs apps/v1 true ReplicaSet

statefulsets sts apps/v1 true StatefulSet

selfsubjectreviews authentication.k8s.io/v1 false SelfSubjectReview

tokenreviews authentication.k8s.io/v1 false TokenReview

localsubjectaccessreviews authorization.k8s.io/v1 true LocalSubjectAccessReview

selfsubjectaccessreviews authorization.k8s.io/v1 false SelfSubjectAccessReview

selfsubjectrulesreviews authorization.k8s.io/v1 false SelfSubjectRulesReview

subjectaccessreviews authorization.k8s.io/v1 false SubjectAccessReview

horizontalpodautoscalers hpa autoscaling/v2 true HorizontalPodAutoscaler

cronjobs cj batch/v1 true CronJob

jobs batch/v1 true Job

certificatesigningrequests csr certificates.k8s.io/v1 false CertificateSigningRequest

leases coordination.k8s.io/v1 true Lease

bgpconfigurations crd.projectcalico.org/v1 false BGPConfiguration

bgpfilters crd.projectcalico.org/v1 false BGPFilter

bgppeers crd.projectcalico.org/v1 false BGPPeer

blockaffinities crd.projectcalico.org/v1 false BlockAffinity

caliconodestatuses crd.projectcalico.org/v1 false CalicoNodeStatus

clusterinformations crd.projectcalico.org/v1 false ClusterInformation

felixconfigurations crd.projectcalico.org/v1 false FelixConfiguration

globalnetworkpolicies crd.projectcalico.org/v1 false GlobalNetworkPolicy

globalnetworksets crd.projectcalico.org/v1 false GlobalNetworkSet

hostendpoints crd.projectcalico.org/v1 false HostEndpoint

ipamblocks crd.projectcalico.org/v1 false IPAMBlock

ipamconfigs crd.projectcalico.org/v1 false IPAMConfig

ipamhandles crd.projectcalico.org/v1 false IPAMHandle

ippools crd.projectcalico.org/v1 false IPPool

ipreservations crd.projectcalico.org/v1 false IPReservation

kubecontrollersconfigurations crd.projectcalico.org/v1 false KubeControllersConfiguration

networkpolicies crd.projectcalico.org/v1 true NetworkPolicy

networksets crd.projectcalico.org/v1 true NetworkSet

endpointslices discovery.k8s.io/v1 true EndpointSlice

events ev events.k8s.io/v1 true Event

flowschemas flowcontrol.apiserver.k8s.io/v1 false FlowSchema

prioritylevelconfigurations flowcontrol.apiserver.k8s.io/v1 false PriorityLevelConfiguration

ingressclasses networking.k8s.io/v1 false IngressClass

ingresses ing networking.k8s.io/v1 true Ingress

networkpolicies netpol networking.k8s.io/v1 true NetworkPolicy

runtimeclasses node.k8s.io/v1 false RuntimeClass

apiservers operator.tigera.io/v1 false APIServer

imagesets operator.tigera.io/v1 false ImageSet

installations operator.tigera.io/v1 false Installation

tigerastatuses operator.tigera.io/v1 false TigeraStatus

poddisruptionbudgets pdb policy/v1 true PodDisruptionBudget

bgpconfigurations bgpconfig,bgpconfigs projectcalico.org/v3 false BGPConfiguration

bgpfilters projectcalico.org/v3 false BGPFilter

bgppeers projectcalico.org/v3 false BGPPeer

blockaffinities blockaffinity,affinity,affinities projectcalico.org/v3 false BlockAffinity

caliconodestatuses caliconodestatus projectcalico.org/v3 false CalicoNodeStatus

clusterinformations clusterinfo projectcalico.org/v3 false ClusterInformation

felixconfigurations felixconfig,felixconfigs projectcalico.org/v3 false FelixConfiguration

globalnetworkpolicies gnp,cgnp,calicoglobalnetworkpolicies projectcalico.org/v3 false GlobalNetworkPolicy

globalnetworksets projectcalico.org/v3 false GlobalNetworkSet

hostendpoints hep,heps projectcalico.org/v3 false HostEndpoint

ipamconfigurations ipamconfig projectcalico.org/v3 false IPAMConfiguration

ippools projectcalico.org/v3 false IPPool

ipreservations projectcalico.org/v3 false IPReservation

kubecontrollersconfigurations projectcalico.org/v3 false KubeControllersConfiguration

networkpolicies cnp,caliconetworkpolicy,caliconetworkpolicies projectcalico.org/v3 true NetworkPolicy

networksets netsets projectcalico.org/v3 true NetworkSet

profiles projectcalico.org/v3 false Profile

clusterrolebindings rbac.authorization.k8s.io/v1 false ClusterRoleBinding

clusterroles rbac.authorization.k8s.io/v1 false ClusterRole

rolebindings rbac.authorization.k8s.io/v1 true RoleBinding

roles rbac.authorization.k8s.io/v1 true Role

priorityclasses pc scheduling.k8s.io/v1 false PriorityClass

csidrivers storage.k8s.io/v1 false CSIDriver

csinodes storage.k8s.io/v1 false CSINode

csistoragecapacities storage.k8s.io/v1 true CSIStorageCapacity

storageclasses sc storage.k8s.io/v1 false StorageClass

volumeattachments storage.k8s.io/v1 false VolumeAttachment
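The listing above is long, so a per-group summary can make it easier to see what the Calico installation added on top of the built-in APIs. The sketch below is one possible way to do that; `count_by_api_group` is a hypothetical helper that reads the output of `kubectl api-resources --no-headers` on standard input and relies on APIVERSION always being the third column from the right.

```shell
# Hypothetical helper: count resource types per API group.
# Input: the output of `kubectl api-resources --no-headers` on stdin.
# SHORTNAMES may be empty, so APIVERSION is located from the right-hand side.
count_by_api_group() {
  awk '{
    apiversion = $(NF - 2)                  # e.g. "apps/v1" or just "v1"
    n = split(apiversion, parts, "/")
    group = (n == 2) ? parts[1] : "core"    # a bare version is the core group
    count[group]++
  }
  END { for (g in count) print g, count[g] }' | sort
}

# Example usage (commented out so that sourcing this file has no side effects):
# kubectl api-resources --no-headers | count_by_api_group
```

To narrow the listing at the source instead, `kubectl api-resources` also accepts `--api-group=<group>` and `--namespaced=true|false`.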

You are now ready to deploy an application on Kubernetes cluster.

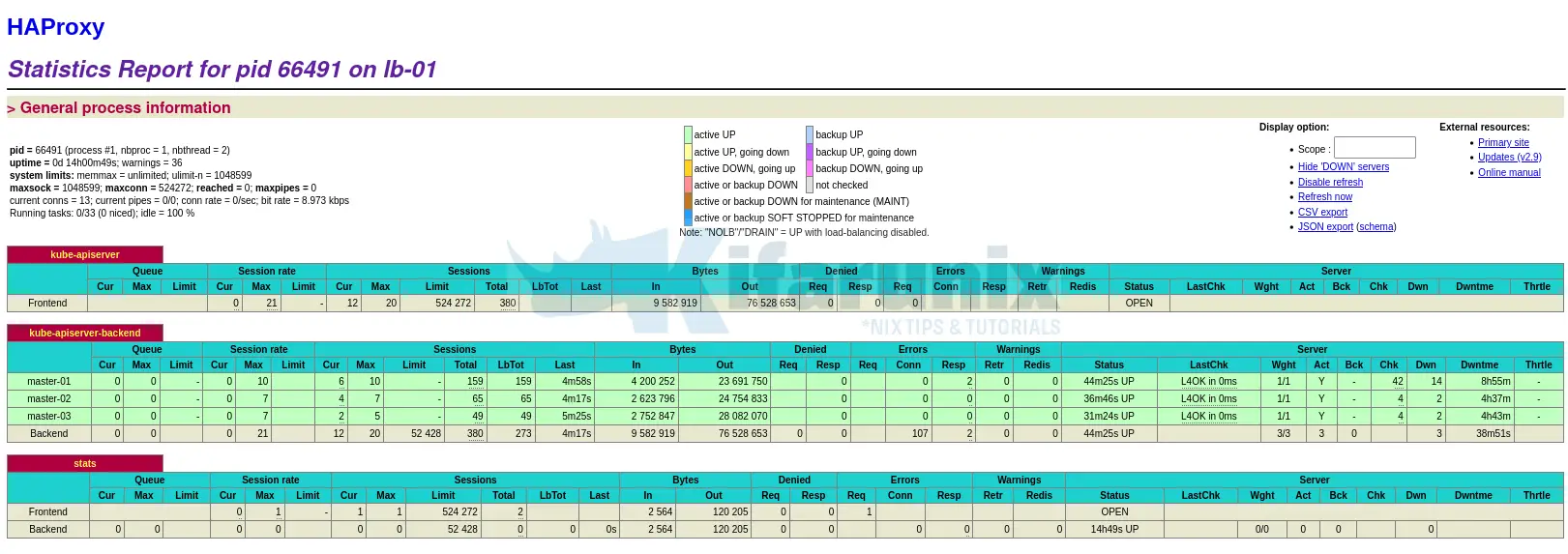

You can also check the Load balancer statistics;

Testing the HA Setup

As a basic HA setup test, simulate a failure by shutting down one control plane node or load balancer. Verify that the cluster remains functional and the VIP is still accessible.

For example, you can shut down one of the load balancers to begin with;

root@lb-01:~# systemctl poweroff

Broadcast message from root@lb-01 on pts/1 (Sat 2024-06-08 06:57:48 UTC):

The system will power off now!

root@lb-01:~# Connection to 192.168.122.56 closed by remote host.

Connection to 192.168.122.56 closed.

Check that you can still access the API server via the VIP address. For example, let’s list the control plane nodes;

kubectl get node --selector='node-role.kubernetes.io/control-plane'

NAME STATUS ROLES AGE VERSION

master-01 Ready control-plane 88m v1.30.1

master-02 Ready control-plane 80m v1.30.1

master-03 Ready control-plane 75m v1.30.1

The fact that kubectl can still reach the cluster through the VIP shows that the load balancer high availability is working.

You can now bring the load balancer back up and shut down one of the control plane nodes, e.g. master-01;
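A single successful kubectl call shows that the VIP answers after the failover, but not whether requests failed while it was happening. One way to observe that is to poll the API server repeatedly while you power off the node. The following is a minimal sketch; `count_failures` and the `POLL_INTERVAL` variable are hypothetical names introduced for illustration.

```shell
# Hypothetical helper: run a probe command N times and print how many runs failed.
# Usage: count_failures <attempts> <command> [args...]
# POLL_INTERVAL (seconds between attempts) defaults to 1.
count_failures() {
  attempts=$1
  shift
  failures=0
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # Run the probe quietly; only its exit status matters here.
    if ! "$@" >/dev/null 2>&1; then
      failures=$((failures + 1))
    fi
    i=$((i + 1))
    sleep "${POLL_INTERVAL:-1}"
  done
  echo "$failures"
}

# Example usage (commented out so that sourcing this file has no side effects):
# count_failures 60 kubectl get --raw=/readyz
```

Start the loop from a machine whose kubeconfig points at the VIP, then shut down the load balancer in another terminal. A result of 0, or a small number around the moment the VIP moves, suggests that Keepalived failed over as intended.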

kifarunix@master-01:~$ sudo systemctl poweroff

Broadcast message from root@master-01 on pts/1 (Sat 2024-06-08 07:01:07 UTC):

The system will power off now!

From one of the other control plane nodes, check that you can still administer the cluster as usual;

kubectl get nodes --selector="node-role.kubernetes.io/control-plane"

NAME STATUS ROLES AGE VERSION

master-01 NotReady control-plane 93m v1.30.1

master-02 Ready control-plane 85m v1.30.1

master-03 Ready control-plane 80m v1.30.1

master-01 is NotReady, but I am still able to manage the cluster!

You can do thorough tests to be completely sure that you have a working cluster.
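When planning further tests, keep in mind how many control plane nodes you can lose at once. Assuming the cluster uses the stacked etcd topology that kubeadm creates by default (an assumption, since the etcd layout is not shown in this section), etcd needs a majority of its members to stay up. The sketch below works out that arithmetic; both function names are hypothetical.

```shell
# Hypothetical helpers: etcd majority arithmetic for a cluster with N members.

# Quorum: the smallest majority of N members.
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Fault tolerance: how many members can fail while a majority remains.
etcd_fault_tolerance() {
  echo $(( ($1 - 1) / 2 ))
}

# Example usage (commented out so that sourcing this file has no side effects):
# etcd_quorum 3            # three control plane nodes need 2 healthy members
# etcd_fault_tolerance 3   # so only 1 node can be down at a time
```

Under that assumption, shutting down one of the three control plane nodes is a safe test, while shutting down two at the same time would leave etcd without a majority and the API server unable to serve writes.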

AppArmor Bug Blocks runc Signals, Pods Stuck Terminating

You might have noticed that on recent versions of Ubuntu, draining nodes or deleting pods gets stuck, with errors such as the following in the AppArmor logs;

2024-06-14T19:04:43.331091+00:00 worker-01 kernel: audit: type=1400 audit(1718391883.329:221): apparmor="DENIED" operation="signal" class="signal" profile="cri-containerd.apparmor.d" pid=7445 comm="runc" requested_mask="receive" denied_mask="receive" signal=kill peer="runc"

This is a bug in the AppArmor profile that denies signals from runc. It leaves many pods stuck in the Terminating state. The bug was reported by Sebastian Podjasek on 2024-05-10. It affects the Ubuntu containerd-app package.
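To check whether a node is hitting this issue, you can filter its kernel log for the same kind of denial. The sketch below is a simple text filter built from the fields in the log line above; `runc_signal_denials` is a hypothetical helper name, and it reads log lines on standard input.

```shell
# Hypothetical helper: keep only AppArmor denials of signals handled by runc.
# Input: kernel or audit log lines on stdin.
# Exit status is non-zero when no matching line is found (grep semantics).
runc_signal_denials() {
  grep 'apparmor="DENIED"' | grep 'operation="signal"' | grep 'comm="runc"'
}

# Example usage (commented out so that sourcing this file has no side effects):
# sudo journalctl -k | runc_signal_denials
```

If the filter prints lines while `kubectl get pods -A` shows pods stuck in Terminating, the node is likely affected by this bug.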

Read how to fix on kubectl drain node gets stuck forever [Apparmor Bug]

Conclusion

In this blog post, you have successfully deployed Kubernetes in high availability with HAProxy and Keepalived. To get visibility into what is happening on the cluster, you can introduce monitoring and alerting.

Read more on Creating Highly Available Clusters with kubeadm.